- The paper proposes interface features for LLM output sensemaking using exact matches, TF-IDF for unique words, and positional diction clustering.

- The study demonstrates that these techniques reduce cognitive load and support rapid identification of text variations in model responses.

- User studies on tasks such as email rewriting validate the approach as a practical and scalable solution for inspecting diverse LLM outputs.

Essay on "Supporting Sensemaking of LLM Outputs at Scale"

Introduction

The paper "Supporting Sensemaking of LLM Outputs at Scale" addresses the challenges and opportunities associated with the presentation and inspection of outputs generated by LLMs. The core proposition is to enhance user capabilities in dealing with variations in LLM outputs by developing interface features that can present many responses simultaneously. The paper proposes methods for computing and visualizing textual similarities and differences, bringing clarity to the variety of LLM outputs.

Key Features and Methodologies

The paper introduces three novel interface features designed to support sensemaking across large sets of LLM outputs:

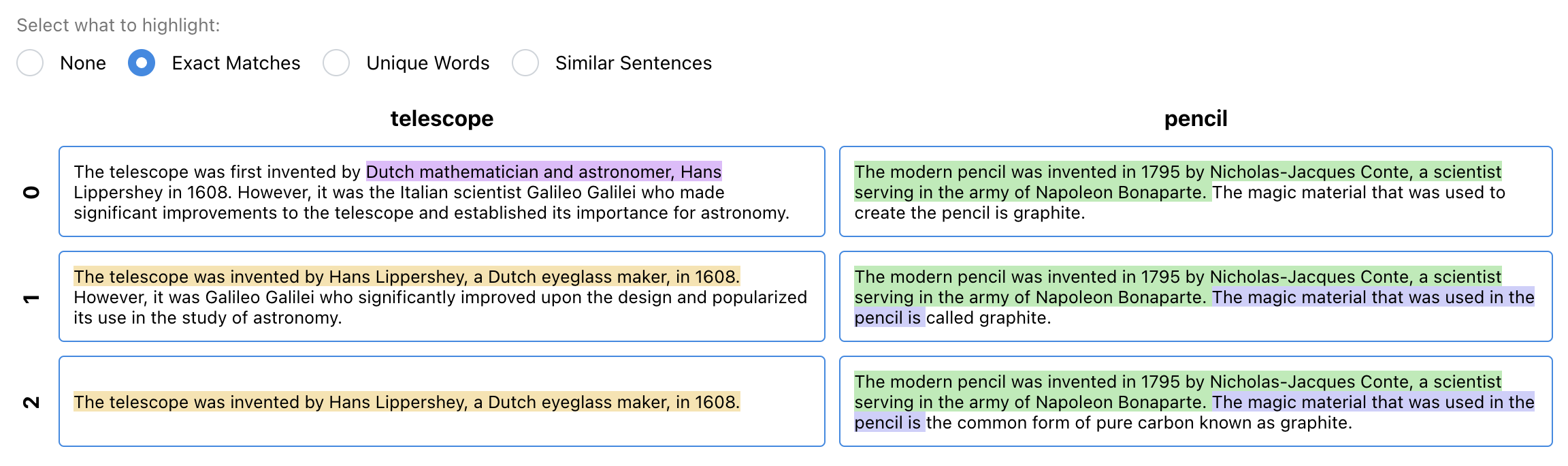

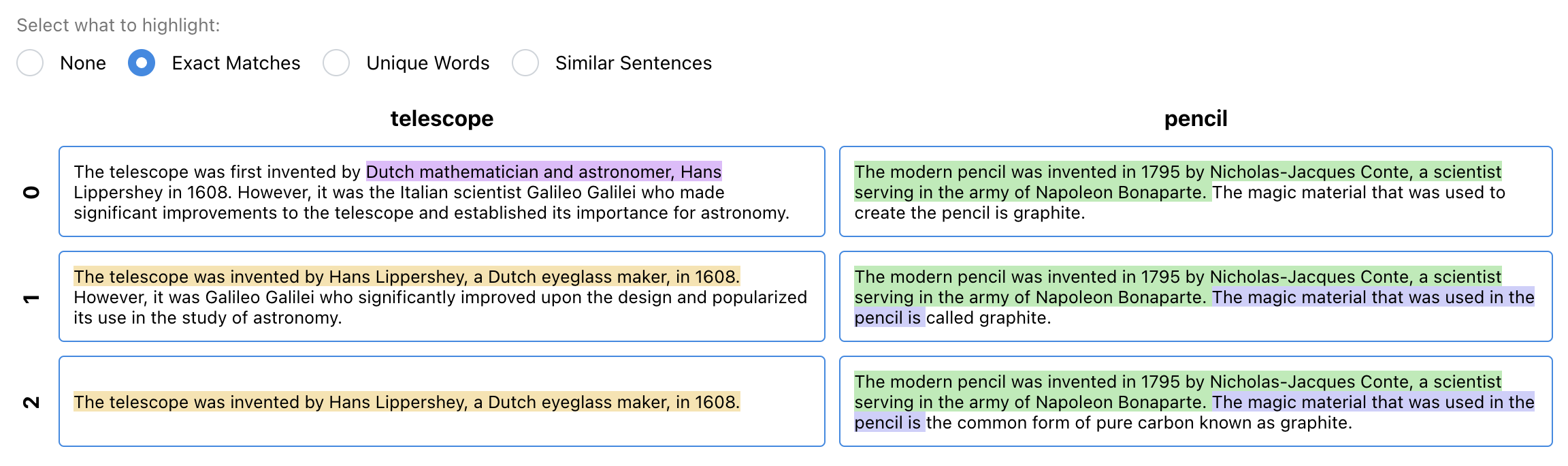

- Exact Matches: This feature highlights identical text segments shared across multiple outputs. By identifying longest common substrings, the feature allows users to detect repetitive language patterns. This approach is useful when evaluating the consistency of LLM responses or identifying frequently recurring phrases.

Figure 1: Example of the exact matches feature for a prompt highlighting repeated segments across responses.
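To make the longest-common-substring idea behind exact matches concrete, here is a minimal sketch. It assumes whitespace tokenization and compares only two responses at a time; the function name `longest_common_segment` is hypothetical, and the paper's actual implementation may differ.

```python
def longest_common_segment(a: str, b: str) -> str:
    """Return the longest run of consecutive words shared by a and b.

    Illustrative only: word-level dynamic programming over two responses,
    not the paper's implementation.
    """
    x, y = a.split(), b.split()
    best_len, best_end = 0, 0
    # prev[j] = length of the common run ending at x[i-2] and y[j-1]
    prev = [0] * (len(y) + 1)
    for i in range(1, len(x) + 1):
        curr = [0] * (len(y) + 1)
        for j in range(1, len(y) + 1):
            if x[i - 1] == y[j - 1]:
                curr[j] = prev[j - 1] + 1
                if curr[j] > best_len:
                    best_len, best_end = curr[j], i
        prev = curr
    return " ".join(x[best_end - best_len:best_end])


shared = longest_common_segment(
    "thank you for your time and help",
    "we say thank you for your time today",
)
print(shared)
```

An interface could run a comparison like this across pairs of responses and highlight the shared segment wherever it occurs.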

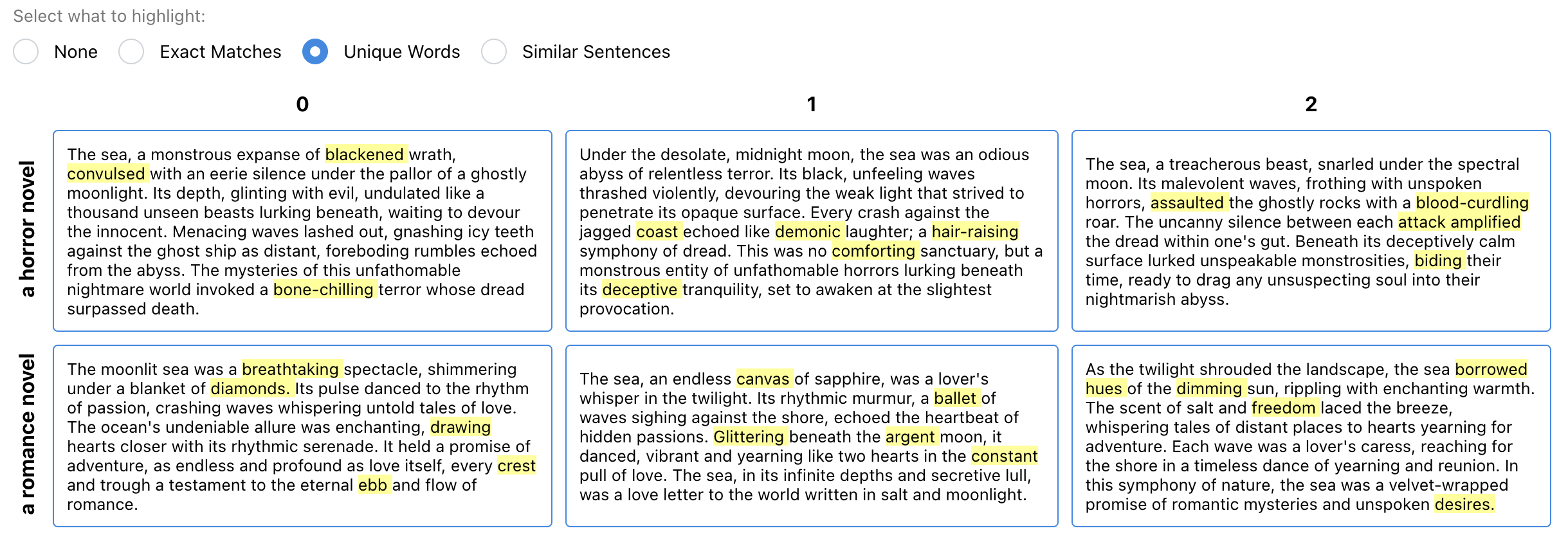

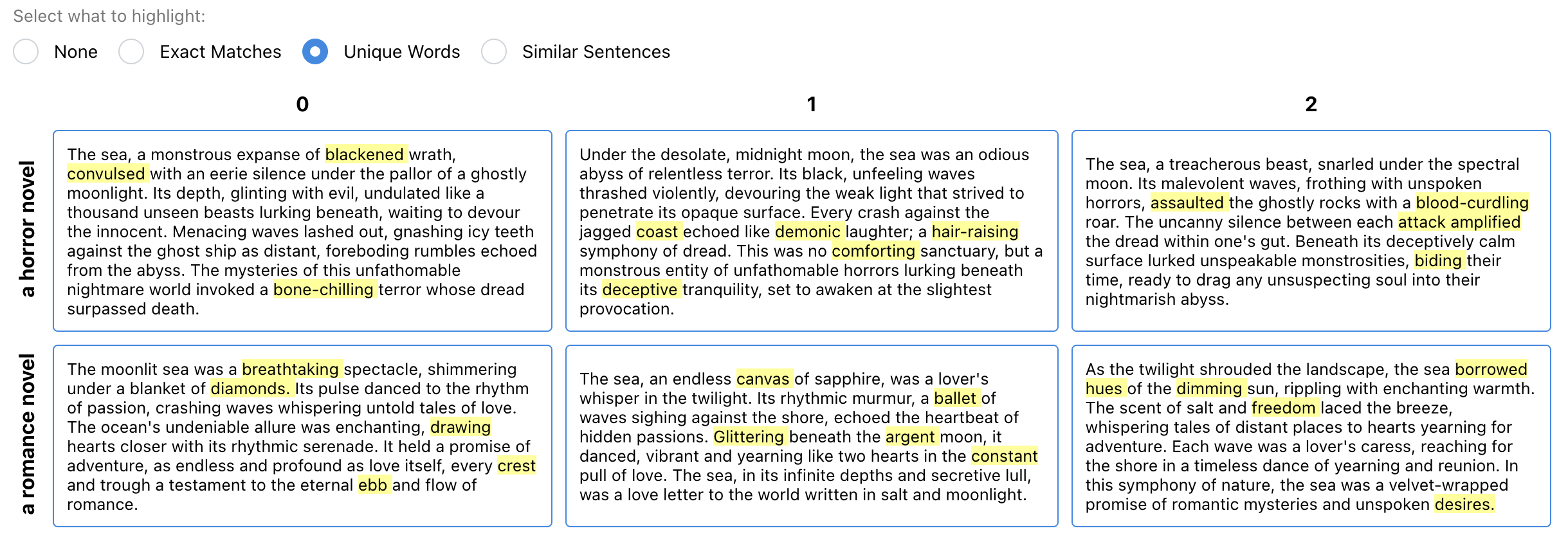

- Unique Words: Utilizing TF-IDF, this feature highlights words that are distinctive within a response compared to others in the set. It provides users with a means to gauge the uniqueness of each output, aiding in the discovery of diverse expressions or ideas.

Figure 2: Example of the unique words feature illustrating how distinctive language choices are surfaced in responses.
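The TF-IDF scoring behind unique words can be illustrated with a small pure-Python sketch. It assumes lowercase whitespace tokenization and the unsmoothed weighting tf × log(N / df); the function name `unique_words` and the `top_k` parameter are hypothetical, and the paper may use a different TF-IDF variant.

```python
import math
from collections import Counter


def unique_words(responses, top_k=2):
    """Return up to top_k words per response with the highest TF-IDF score.

    Illustrative only: words that appear in every response score zero
    and are never reported as unique.
    """
    docs = [r.lower().split() for r in responses]
    n = len(docs)
    # Document frequency: in how many responses each word appears.
    df = Counter(w for d in docs for w in set(d))
    result = []
    for d in docs:
        tf = Counter(d)
        scores = {w: (c / len(d)) * math.log(n / df[w]) for w, c in tf.items()}
        # Sort by descending score, breaking ties alphabetically.
        ranked = sorted(scores, key=lambda w: (-scores[w], w))
        result.append([w for w in ranked[:top_k] if scores[w] > 0])
    return result


emails = [
    "please send the report",
    "please send the invoice",
    "please send the summary",
]
print(unique_words(emails, top_k=1))
```

In this toy set, the words shared by all three responses receive a score of zero, so only the word that distinguishes each response is surfaced.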

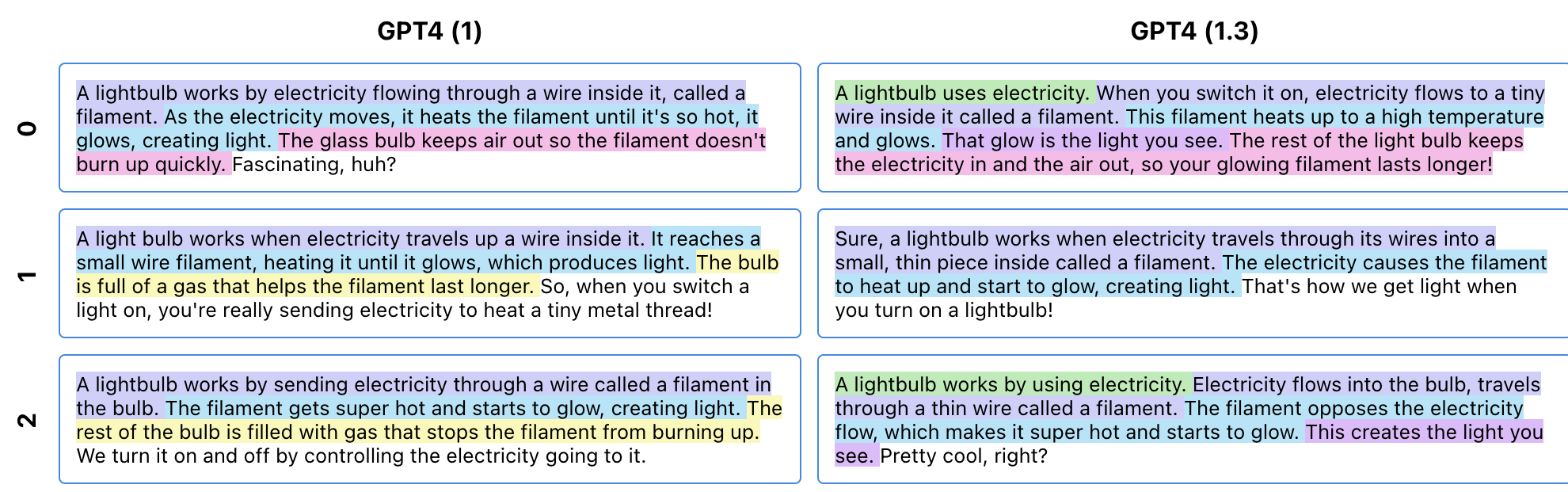

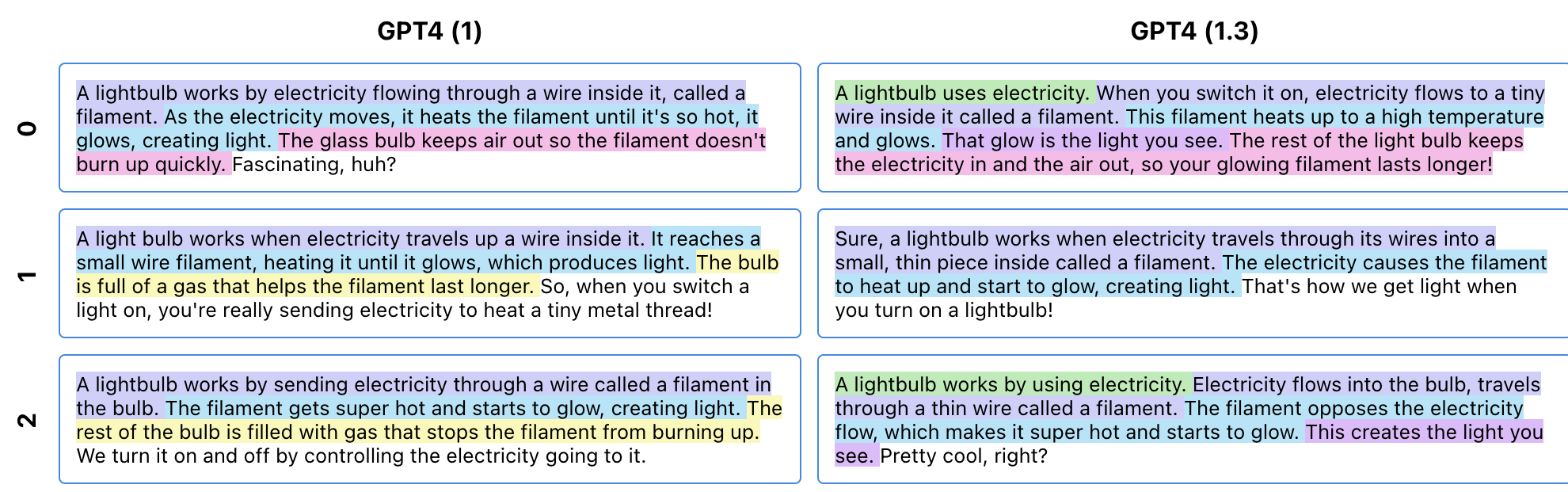

- Positional Diction Clustering (PDC): This novel algorithm detects semantically and positionally similar sentences across responses. PDC captures structures resembling emergent templates, allowing users to discern overarching themes or patterns within LLM outputs without predefining specific terms of interest.

Figure 3: Example of the PDC feature in the grid layout showing structural and semantic sentence groupings.
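The intuition behind PDC, grouping sentences that are similar in both content and position, can be sketched as follows. This is not the paper's algorithm: it substitutes word-overlap (Jaccard) similarity for semantic similarity and uses a simple greedy grouping. The function names and the `weight` and `threshold` parameters are all hypothetical.

```python
import re


def split_sentences(text):
    # Naive splitter: break after ., !, or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def jaccard(a, b):
    # Word-overlap similarity, a stand-in for semantic similarity.
    wa = set(re.findall(r"\w+", a.lower()))
    wb = set(re.findall(r"\w+", b.lower()))
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def cluster_sentences(responses, weight=0.5, threshold=0.6):
    """Greedily group sentences by combined lexical and positional similarity."""
    items = []  # (response index, relative position in [0, 1], sentence)
    for r, text in enumerate(responses):
        sents = split_sentences(text)
        for i, s in enumerate(sents):
            pos = i / (len(sents) - 1) if len(sents) > 1 else 0.0
            items.append((r, pos, s))
    clusters = []
    for item in items:
        for cluster in clusters:
            _, pos0, s0 = cluster[0]  # compare against the cluster's first member
            score = (weight * jaccard(item[2], s0)
                     + (1 - weight) * (1 - abs(item[1] - pos0)))
            if score >= threshold:
                cluster.append(item)
                break
        else:
            clusters.append([item])
    return clusters


drafts = [
    "Hi Sam. Please send the report by Friday. Thanks a lot.",
    "Hello Sam. Please send the report soon. Thanks again.",
]
for group in cluster_sentences(drafts):
    print([sentence for _, _, sentence in group])
```

On these two drafts the sketch groups the greetings, the requests, and the sign-offs, which loosely mirrors the emergent-template structure described above.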

User Studies and Practical Implications

Through a controlled user study and multiple case studies, the paper evaluates the effectiveness of these features. The study focuses on diverse tasks like email rewriting and model comparison, which simulate real-world application scenarios for LLMs. Key findings suggest that the grid layout and PDC feature help users rapidly identify output variations and test hypotheses during sensemaking tasks.

Notably, the features reduce cognitive load by supporting tasks previously deemed too complex for manual inspection. This makes a compelling case for integrating such tools into systems where human oversight of model outputs is crucial, such as model auditing or creative content generation.

Algorithm Design and Usage Considerations

The algorithmic approaches are designed to work without requiring users to specify queries ahead of time, thus supporting open-ended exploration. The choice to render all LLM outputs in their entirety caters to users' needs for direct engagement with the text, countering the potential pitfalls of over-reliance on abstracted automated measures.

Deploying these features may call for adjustments tailored to specific contexts, for instance using shading to draw visual focus to important segments. Moreover, extending the features to examine LLM behavior across various prompts or model versions could build on their strength in distinguishing nuanced language patterns.

Conclusion

The paper makes significant contributions to the methods of inspecting and interpreting LLM outputs at scale. By combining rendering techniques with clustering algorithms, the proposed features advance the state of the art in human-computer interaction for AI applications. Future work should consider offering more granular customization options in interfaces and exploring cross-modal integrations, allowing these sensemaking tools to meet the evolving needs of AI practitioners.