Image Safeguarding: Reasoning with Conditional Vision Language Model and Obfuscating Unsafe Content Counterfactually (2401.11035v1)

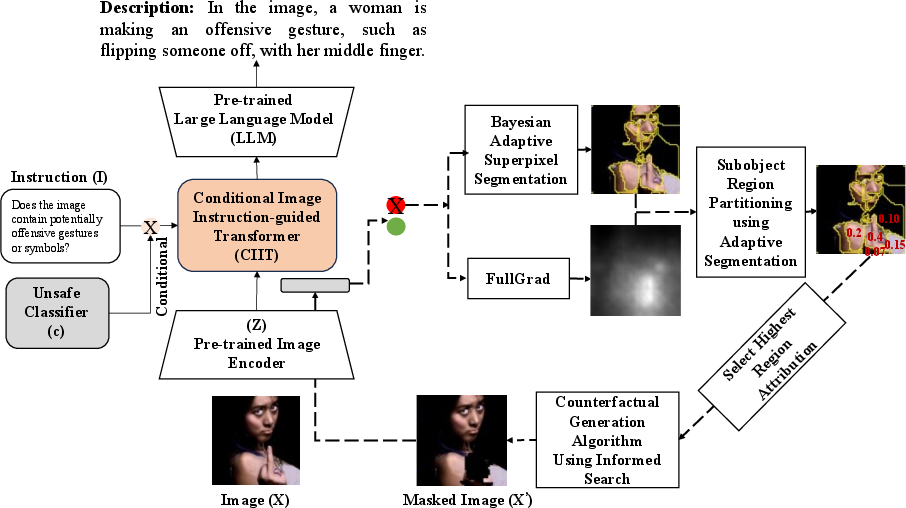

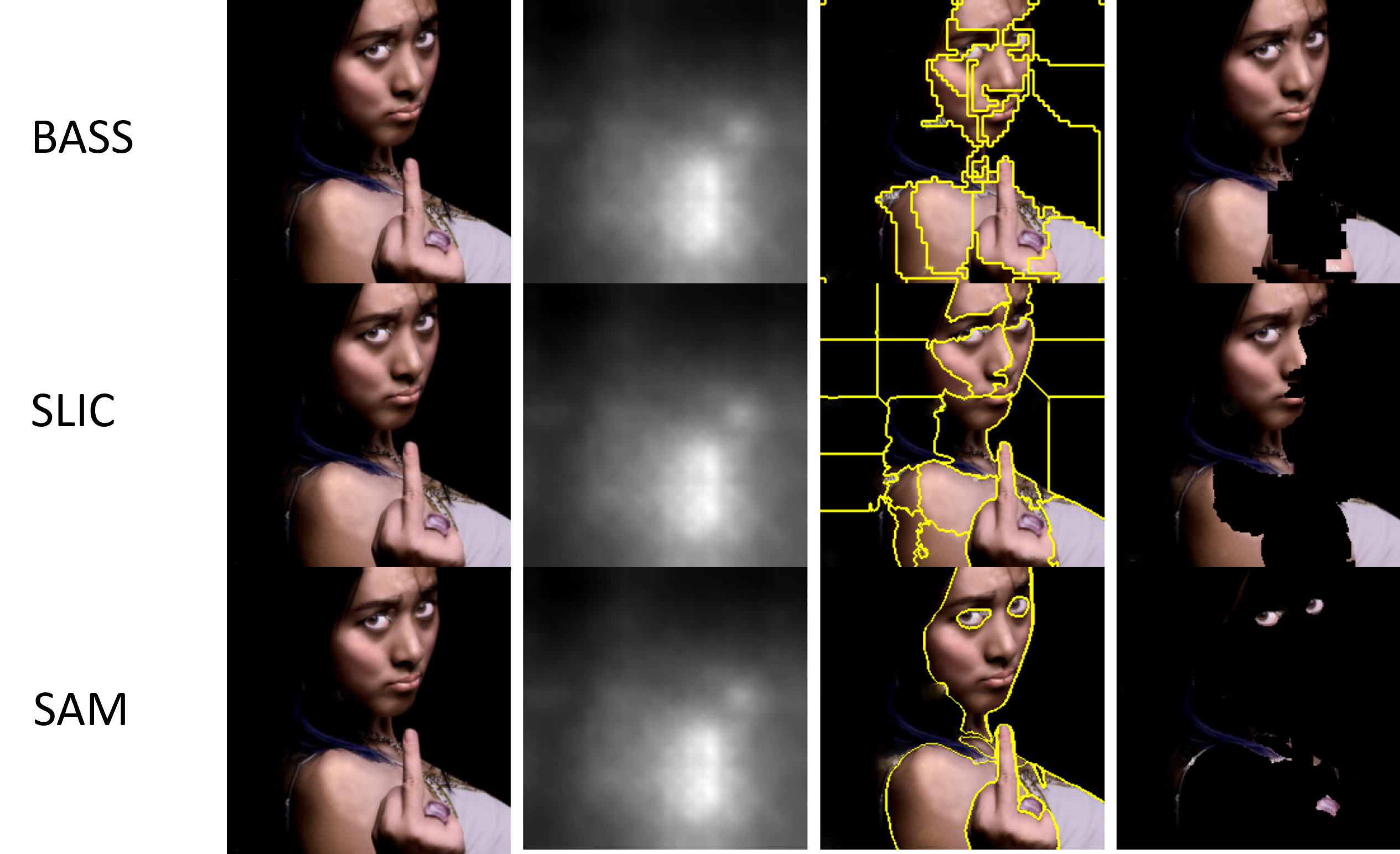

Abstract: Social media platforms are being increasingly used by malicious actors to share unsafe content, such as images depicting sexual activity, cyberbullying, and self-harm. Consequently, major platforms use AI and human moderation to obfuscate such images to make them safer. Two critical needs for obfuscating unsafe images is that an accurate rationale for obfuscating image regions must be provided, and the sensitive regions should be obfuscated (\textit{e.g.} blurring) for users' safety. This process involves addressing two key problems: (1) the reason for obfuscating unsafe images demands the platform to provide an accurate rationale that must be grounded in unsafe image-specific attributes, and (2) the unsafe regions in the image must be minimally obfuscated while still depicting the safe regions. In this work, we address these key issues by first performing visual reasoning by designing a visual reasoning model (VLM) conditioned on pre-trained unsafe image classifiers to provide an accurate rationale grounded in unsafe image attributes, and then proposing a counterfactual explanation algorithm that minimally identifies and obfuscates unsafe regions for safe viewing, by first utilizing an unsafe image classifier attribution matrix to guide segmentation for a more optimal subregion segmentation followed by an informed greedy search to determine the minimum number of subregions required to modify the classifier's output based on attribution score. Extensive experiments on uncurated data from social networks emphasize the efficacy of our proposed method. We make our code available at: https://github.com/SecureAIAutonomyLab/ConditionalVLM

- 2021. Facebook moderator: ‘Every day was a nightmare’. https://www.bbc.com/news/technology-57088382.

- 2021. Judge OKs $85 mln settlement of Facebook moderators’ PTSD claims. https://www.reuters.com/legal/transactional/judge-oks-85-mln-settlement-facebook-moderators-ptsd-claims-2021-07-23/.

- 2023. Vicuna. https://github.com/lm-sys/FastChat.

- Slic superpixels. Technical report.

- “When a Tornado Hits Your Life:” Exploring Cyber Sexual Abuse Survivors’ Perspectives on Recovery. Journal of Counseling Sexology & Sexual Wellness: Research, Practice, and Education, 4(1): 1–8.

- Flamingo: a visual language model for few-shot learning. Advances in Neural Information Processing Systems, 35: 23716–23736.

- Are, C. 2020. How Instagram’s algorithm is censoring women and vulnerable users but helping online abusers. Feminist media studies, 20(5): 741–744.

- Young people, peer-to-peer grooming and sexual offending: Understanding and responding to harmful sexual behaviour within a social media society. Probation Journal, 62(4): 374–388.

- Towards Language Models That Can See: Computer Vision Through the LENS of Natural Language. arXiv preprint arXiv:2306.16410.

- Towards targeted obfuscation of adversarial unsafe images using reconstruction and counterfactual super region attribution explainability. In 32nd USENIX Security Symposium (USENIX Security 23), 643–660.

- Billy Perrigo. 2019. Facebook Says It’s Removing More Hate Speech Than Ever Before. But There’s a Catch.

- Binder, M. 2019. Facebook claims its new AI technology can automatically detect revenge porn. https://mashable.com/article/facebook-ai-tool-revenge-porn.

- Bronstein, C. 2021. Deplatforming sexual speech in the age of FOSTA/SESTA. Porn Studies, 8(4): 367–380.

- The EU digital markets act: a report from a panel of economic experts. Cabral, L., Haucap, J., Parker, G., Petropoulos, G., Valletti, T., and Van Alstyne, M., The EU Digital Markets Act, Publications Office of the European Union, Luxembourg.

- A combinatorial approach to explaining image classifiers. In 2021 IEEE International Conference on Software Testing, Verification and Validation Workshops (ICSTW), 35–43. IEEE.

- Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks. In 2018 IEEE winter conference on applications of computer vision (WACV), 839–847. IEEE.

- Minority report: Cyberbullying prediction on Instagram. In Proceedings of the 10th ACM conference on web science, 37–45.

- InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning. arXiv:2305.06500.

- Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, 248–255. Ieee.

- Exon, J. 1996. The Communications Decency Act. Federal Communications Law Journal, 49(1): 4.

- Eva: Exploring the limits of masked visual representation learning at scale. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 19358–19369.

- Efficient graph-based image segmentation. International journal of computer vision, 59(2): 167–181.

- Axiom-based Grad-CAM: Towards Accurate Visualization and Explanation of CNNs. In BMVC.

- Gesley, J. 2021. Germany: Network Enforcement Act Amended to Better Fight Online Hate Speech. In Library of Congress, at: https://www. loc. gov/item/global-legal-monito r/2021-07-06/germany-network-enforcement-act-amended-to-better-fight-online-hat e-speech/#:~: text= Article% 20Germany% 3A% 20Network% 20Enforcement% 20Act, fake% 20news% 20in% 20social% 20networks.

- PyTorch library for CAM methods. https://github.com/jacobgil/pytorch-grad-cam.

- Harm and offence in media content: A review of the evidence.

- Hendricks, T. 2021. Cyberbullying increased 70% during the pandemic; Arizona schools are taking action. https://www.12news.com/article/news/crime/cyberbullying-increased-70-during-the-pandemic-arizona-schools-are-taking-action/75-fadf8d2c-cf11-43f0-b074-5de485a3247d.

- Self-harm, suicidal behaviours, and cyberbullying in children and young people: Systematic review. Journal of Medical Internet Research, 20(4).

- Kim, A. 2021. NSFW Data Scraper. https://github.com/alex000kim/nsfw˙data˙scraper.

- Segment anything. arXiv preprint arXiv:2304.02643.

- Krause, M. 2009. Identifying and managing stress in child pornography and child exploitation investigators. Journal of Police and Criminal Psychology, 24(1): 22–29.

- Nonconsensual image sharing: one in 25 Americans has been a victim of” revenge porn”.

- mPLUG: Effective and Efficient Vision-Language Learning by Cross-modal Skip-connections. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, 7241–7259.

- Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv preprint arXiv:2301.12597.

- Effectiveness and users’ experience of obfuscation as a privacy-enhancing technology for sharing photos. Proceedings of the ACM on Human-Computer Interaction, 1(CSCW): 1–24.

- Meta. 2022. Appealed Content. https://transparency.fb.com/policies/improving/appealed-content-metric/.

- Compact watershed and preemptive slic: On improving trade-offs of superpixel segmentation algorithms. In 2014 22nd international conference on pattern recognition, 996–1001. IEEE.

- PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Wallach, H.; Larochelle, H.; Beygelzimer, A.; d'Alché-Buc, F.; Fox, E.; and Garnett, R., eds., Advances in Neural Information Processing Systems 32, 8024–8035. Curran Associates, Inc.

- Learning transferable visual models from natural language supervision. In International conference on machine learning, 8748–8763. PMLR.

- Ramaswamy, H. G.; et al. 2020. Ablation-cam: Visual explanations for deep convolutional network via gradient-free localization. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 983–991.

- Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125.

- A practitioner survey exploring the value of forensic tools, AI, filtering, & safer presentation for investigating child sexual abuse material (CSAM). Digital Investigation, 29.

- Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, 618–626.

- Full-gradient representation for neural network visualization. Advances in neural information processing systems, 32.

- The psychological well-being of content moderators: the emotional labor of commercial moderation and avenues for improving support. In Proceedings of the 2021 CHI conference on human factors in computing systems, 1–14.

- Tenbarge, K. 2023. Instagram’s sex censorship sweeps up educators, adult stars and sex workers.

- Llama: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971.

- Bayesian adaptive superpixel segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 8470–8479.

- scikit-image: image processing in Python. PeerJ, 2: e453.

- Explainable image classification with evidence counterfactual. Pattern Analysis and Applications, 25(2): 315–335.

- Towards Understanding and Detecting Cyberbullying in Real-world Images. In 2020 19th IEEE International Conference on Machine Learning and Applications (ICMLA).

- Counterfactual explanations without opening the black box: Automated decisions and the GDPR. Harv. JL & Tech., 31: 841.

- Ofa: Unifying architectures, tasks, and modalities through a simple sequence-to-sequence learning framework. In International Conference on Machine Learning, 23318–23340. PMLR.

- Image as a Foreign Language: BEiT Pretraining for Vision and Vision-Language Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 19175–19186.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.