RoleCraft-GLM: Advancing Personalized Role-Playing in Large Language Models

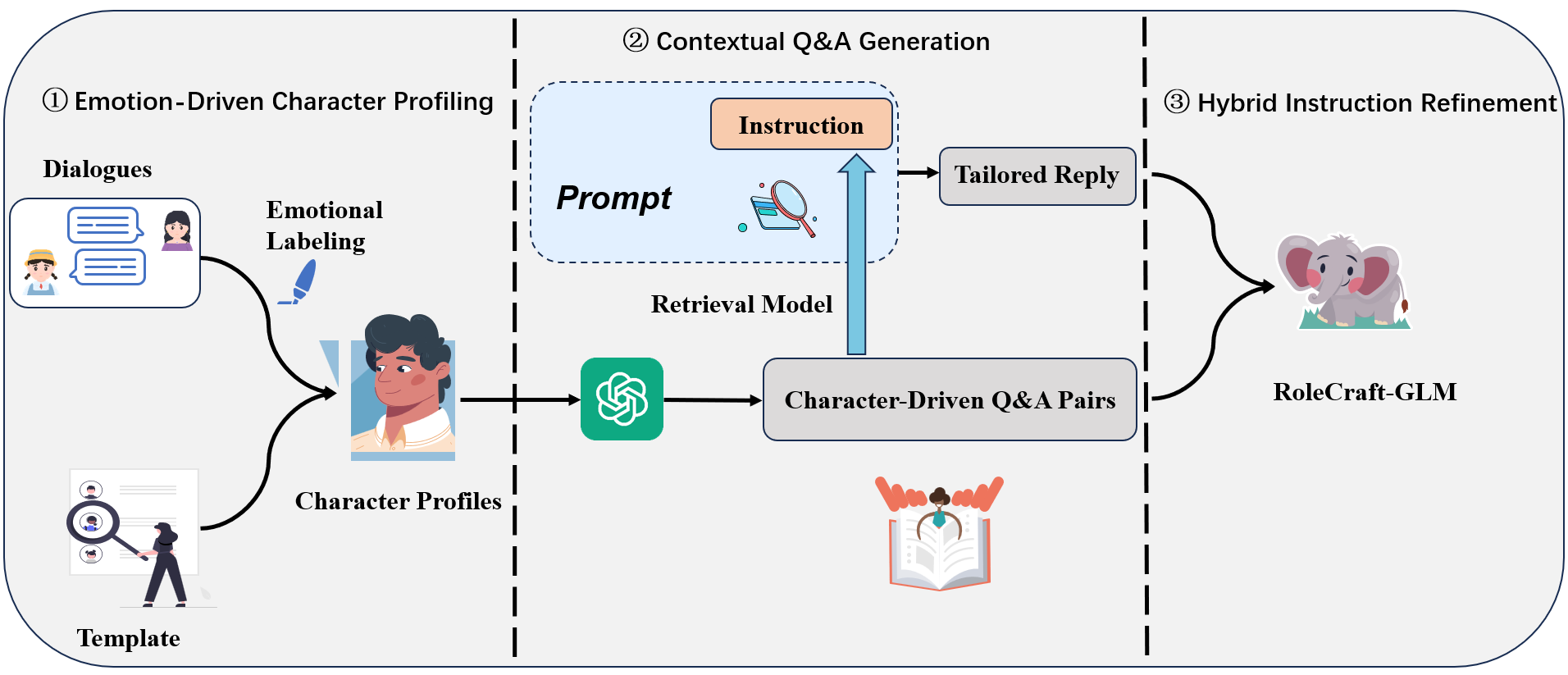

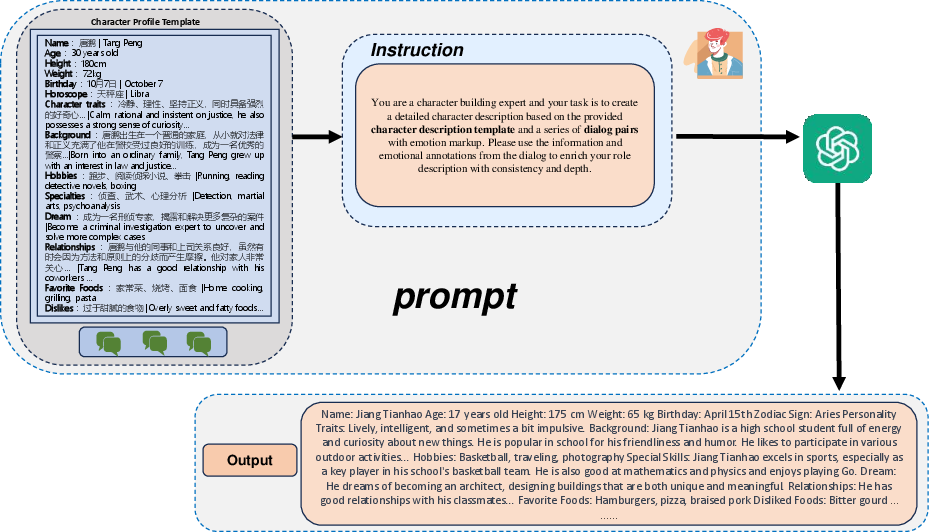

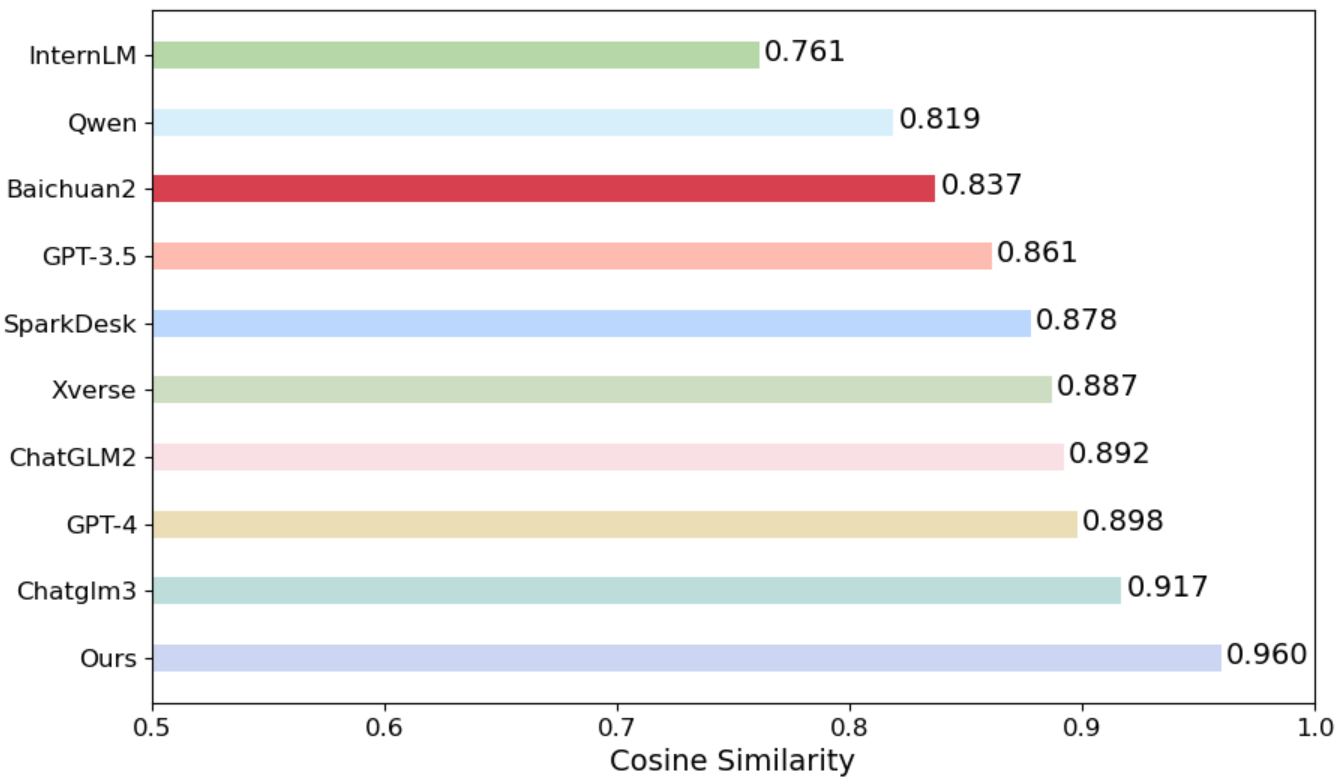

Abstract: This study presents RoleCraft-GLM, an innovative framework for enhancing personalized role-playing with large language models (LLMs). RoleCraft-GLM addresses the lack of personalized interactions in conversational AI by offering detailed, emotionally nuanced character portrayals. We contribute a unique conversational dataset that shifts from conventional celebrity-centric characters to diverse, non-celebrity personas, enhancing the realism and complexity of language-model interactions. Our approach also involves meticulous character development, ensuring that dialogues are both realistic and emotionally resonant. We validate the effectiveness of RoleCraft-GLM through various case studies that highlight its versatility and skill across different scenarios. The framework excels at generating dialogues that accurately reflect characters' personality traits and emotions, thereby boosting user engagement. In conclusion, RoleCraft-GLM marks a significant advance in personalized AI interactions and, by enabling more nuanced and emotionally rich dialogues, paves the way for more authentic and immersive AI-assisted role-playing experiences.

