- The paper shows that providing contextual information about both the AI and the data significantly improves delegation decisions, an effect mediated by self-efficacy and perceived task difficulty.

- It uses an experiment built on income prediction tasks, with participants randomly assigned to treatment groups that receive different contextual information.

- Findings support the design of human-AI systems that integrate comprehensive contextual insights to optimize collaboration performance.

Human Delegation Behavior in Human-AI Collaboration: The Effect of Contextual Information

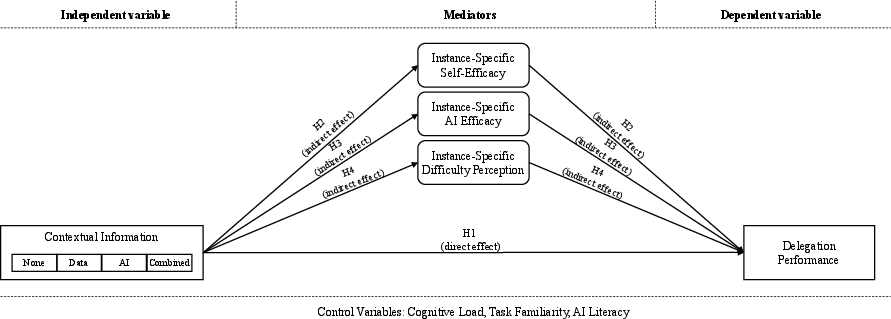

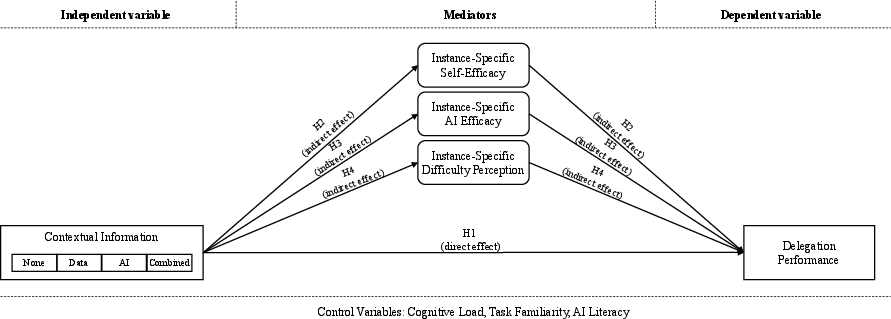

The paper examines how contextual information shapes human delegation behavior in human-AI collaboration. It asks how different forms of contextual information influence the decisions people make when delegating tasks to an AI, and how those decisions affect overall performance. An experiment is used to test whether self-efficacy, AI efficacy, and difficulty perception mediate the effect on delegation decisions.

Introduction to Human-AI Collaboration Dynamics

Human-AI collaboration holds promise across many domains, from healthcare to finance. Delegating decisions to an AI system is one form of this collaboration, and it requires understanding when and how humans choose to entrust tasks to AI. The study hypothesizes that contextual information, about both the dataset used and the AI's capabilities, could strongly affect these delegation decisions.

Humans tend to delegate tasks they perceive as complex, or for which their self-efficacy is low, and to retain tasks they feel confident about. This choice is mediated by their perception of the AI's strength and of the difficulty of the data. It follows that AI can be deployed to supplement human work rather than replace it entirely, which in turn can raise collaborative performance.

Experimental Framework

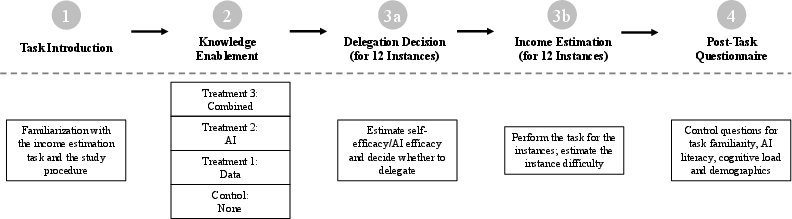

The study follows four steps: participants are introduced to the task, randomly assigned to a treatment group that determines which contextual information they see, carry out the task, and complete a post-task questionnaire.

Figure 1: The design of the study is set up in four parts: First, participants are introduced to the task of the study, followed by part two in which they are randomly assigned to one of the four treatment groups. In part three, participants conduct the task of the study and have to fill out a questionnaire in part four.

Participants worked on income prediction tasks using census data and made delegation decisions involving an AI. Depending on the treatment, they received contextual information about the data distribution, about the AI's prediction confidence, about both, or about neither, so that the effect of each type of information on delegation could be gauged.
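The four treatments described above form a 2x2 layout (data information shown or not, AI information shown or not). The following is a minimal sketch of how such a random assignment could be implemented. The treatment labels, the `assign_treatments` helper, and the choice of balanced group sizes are assumptions for illustration; the source only states that assignment was random.

```python
import random
from collections import Counter

# Hypothetical labels for the four treatments: (data info shown, AI info shown).
TREATMENTS = {
    "control": (False, False),
    "data": (True, False),
    "ai": (False, True),
    "combined": (True, True),
}


def assign_treatments(participant_ids, seed=0):
    """Randomly assign participants to treatments with near-equal group sizes.

    Balanced groups are an assumption of this sketch, not a detail from the paper.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    names = list(TREATMENTS)
    # Deal the shuffled participants round-robin, so sizes differ by at most one.
    return {pid: names[i % len(names)] for i, pid in enumerate(ids)}


assignment = assign_treatments(range(20))
group_sizes = Counter(assignment.values())
print(group_sizes)
```

Shuffling first and then dealing round-robin keeps the assignment random while avoiding very uneven groups, which simple independent draws can produce in small samples.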

Results and Analysis

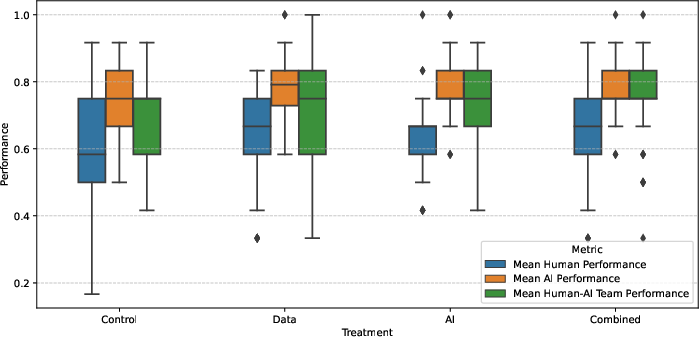

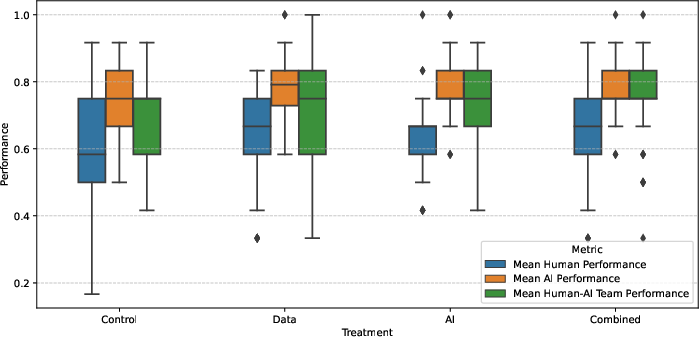

The results show that delegation benefits materially only when participants receive both contextual data information and contextual AI information. Providing either the AI information or the data distribution on its own did not significantly improve performance. When participants had access to both types of information, human-AI team performance improved.
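To make the notion of human-AI team performance concrete, here is a small sketch that scores a team in which delegated instances take the AI's prediction and all others take the human's. Using accuracy as the metric, binary labels, and the `team_accuracy` helper are assumptions for illustration, and the toy values are invented rather than taken from the study.

```python
def team_accuracy(human_preds, ai_preds, delegated, labels):
    """Share of correct answers when delegated instances use the AI's prediction."""
    if not labels:
        raise ValueError("need at least one instance")
    if not (len(human_preds) == len(ai_preds) == len(delegated) == len(labels)):
        raise ValueError("all inputs must have the same length")
    # For each instance, the final answer comes from the AI if it was delegated.
    final = [ai if d else h for h, ai, d in zip(human_preds, ai_preds, delegated)]
    correct = sum(f == y for f, y in zip(final, labels))
    return correct / len(labels)


# Invented toy values; 1 and 0 stand for two hypothetical income classes.
labels = [1, 0, 1, 1, 0]
human_preds = [1, 1, 1, 0, 0]
ai_preds = [0, 0, 1, 1, 0]
delegated = [False, True, False, True, False]

print(team_accuracy(human_preds, ai_preds, delegated, labels))
print(team_accuracy(human_preds, ai_preds, [False] * 5, labels))  # human alone
print(team_accuracy(human_preds, ai_preds, [True] * 5, labels))   # AI alone
```

In this toy case the human and the AI err on different instances, so well-targeted delegation scores higher than either working alone. That is the kind of complementarity the contextual information is meant to help people find.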

Figure 2: A comparison of the performance and 95% confidence intervals across the four treatments: Control treatment in which participants do not receive contextual information, data treatment in which participants receive contextual data information, AI treatment in which participants receive contextual AI information and the combined treatment in which participants receive contextual data and AI information.
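A comparison like the one in Figure 2 needs a mean and a 95% confidence interval per treatment. The sketch below uses a normal approximation with a standard error of the mean. The source does not say how its intervals were computed, so this method, the `mean_ci95` helper, and the example values are all assumptions.

```python
import math
import statistics


def mean_ci95(values):
    """Return (mean, lower, upper) for a normal-approximation 95% interval."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = statistics.fmean(values)
    # Standard error of the mean from the sample standard deviation.
    sem = statistics.stdev(values) / math.sqrt(n)
    half_width = 1.96 * sem
    return mean, mean - half_width, mean + half_width


# Invented per-participant scores for one group, not data from the paper.
example = [0.6, 0.7, 0.8]
mean, lower, upper = mean_ci95(example)
print(f"{mean:.3f} [{lower:.3f}, {upper:.3f}]")
```

For small groups, a t-based interval would be wider and usually more appropriate than the fixed 1.96 multiplier used here.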

The mediation analysis using Hayes' framework further reveals that self-efficacy, AI efficacy, and perceived difficulty act as mediators in the interaction model. The decision to delegate tasks aligns closely with these mediating variables, elucidating the role of comprehensive contextualization in achieving optimal human-AI teaming.
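The core quantity in a mediation analysis of this kind is the indirect effect: the product of the path from treatment to mediator (a) and the path from mediator to outcome, controlling for treatment (b). The sketch below estimates a*b for a single mediator with ordinary least squares and attaches a percentile bootstrap interval. It is a simplification under stated assumptions: the study considers three mediators, and the function names, the 0/1 treatment coding, and the synthetic data are all hypothetical.

```python
import random


def _mean(v):
    return sum(v) / len(v)


def _cov(u, v):
    mu, mv = _mean(u), _mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def indirect_effect(x, m, y):
    """Return a*b, with a from m ~ x and b from y ~ x + m (both OLS)."""
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx
    # Coefficient on m in the two-predictor regression, via Cramer's rule.
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)
    return a * b


def bootstrap_ci(x, m, y, n_boot=2000, seed=0):
    """Percentile 95% bootstrap interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        try:
            estimates.append(indirect_effect(
                [x[i] for i in idx], [m[i] for i in idx], [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # skip degenerate resamples, e.g. all x identical
    estimates.sort()
    lower = estimates[int(0.025 * len(estimates))]
    upper = estimates[int(0.975 * len(estimates)) - 1]
    return lower, upper


# Synthetic data: x is a 0/1 treatment flag, m a mediator, y the outcome.
x = [0, 1, 0, 1] * 10
noise = [1, 1, -1, -1] * 10
m = [2 * xi + e for xi, e in zip(x, noise)]   # true a = 2
y = [3 * mi + xi for mi, xi in zip(m, x)]     # true b = 3
print(indirect_effect(x, m, y))
print(bootstrap_ci(x, m, y))
```

An interval that excludes zero is the usual criterion for concluding that the mediator carries part of the effect. With several mediators, each one would get its own a and b path estimated jointly.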

Practical and Theoretical Implications

These findings suggest that AI systems designed for collaboration should provide explanations that cover both the AI's capability and the properties of the data. With both in view, human collaborators can better calibrate their delegation decisions and use the AI's strengths to complement their own judgment.

The research also invites further work on interfaces through which an AI communicates contextual information, so that the information is delivered without overwhelming the decision-maker or adding cognitive load. The aim is a balance in which transparency and actionable insight promote trust and effective collaboration in human-AI partnerships.

Conclusion

The paper "Human Delegation Behavior in Human-AI Collaboration: The Effect of Contextual Information" contributes to the understanding of how contextual information affects delegation behavior in AI-integrated systems. By showing that the combination of data and AI information is what matters, it lays groundwork for designing human-AI systems that cooperate more effectively. Future work should examine cross-domain applications and refine how contextual information is delivered to improve collaboration efficiency further.

Figure 3: The research model describes the relationship between the independent variable (contextual information), the mediators (instance-specific self-efficacy, instance-specific AI efficacy, instance-specific difficulty perception), and the dependent variable (delegation performance).