FurniScene: A Large-scale 3D Room Dataset with Intricate Furnishing Scenes (2401.03470v2)

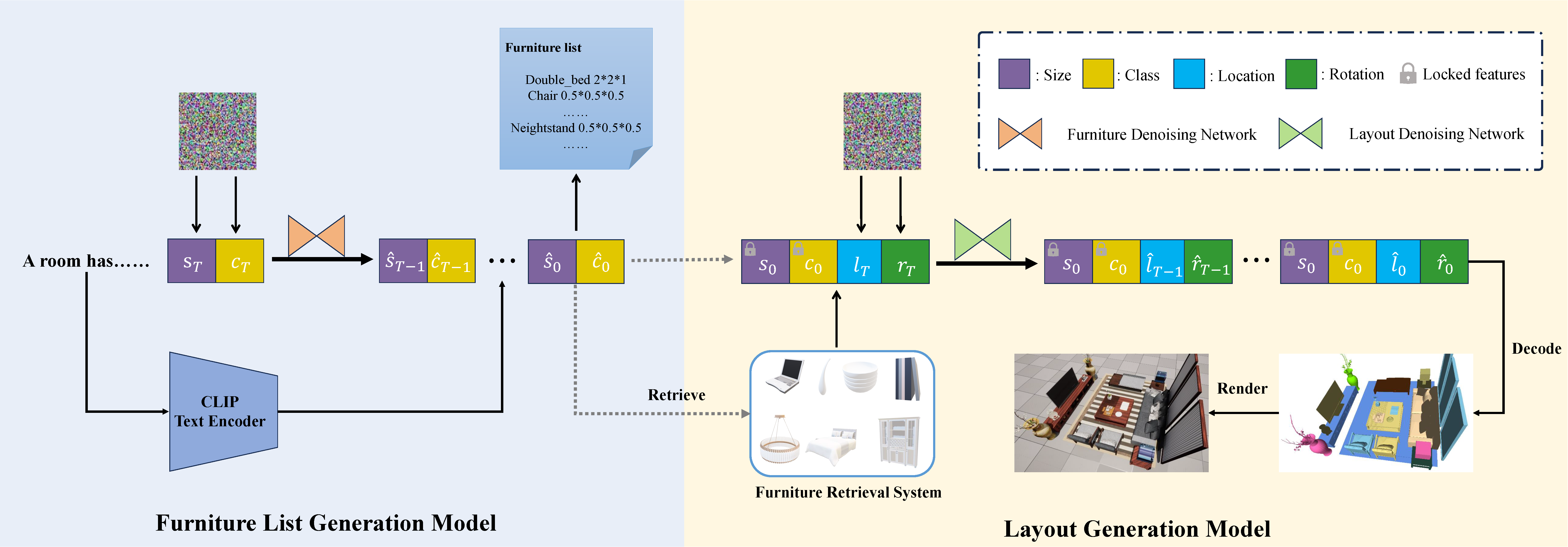

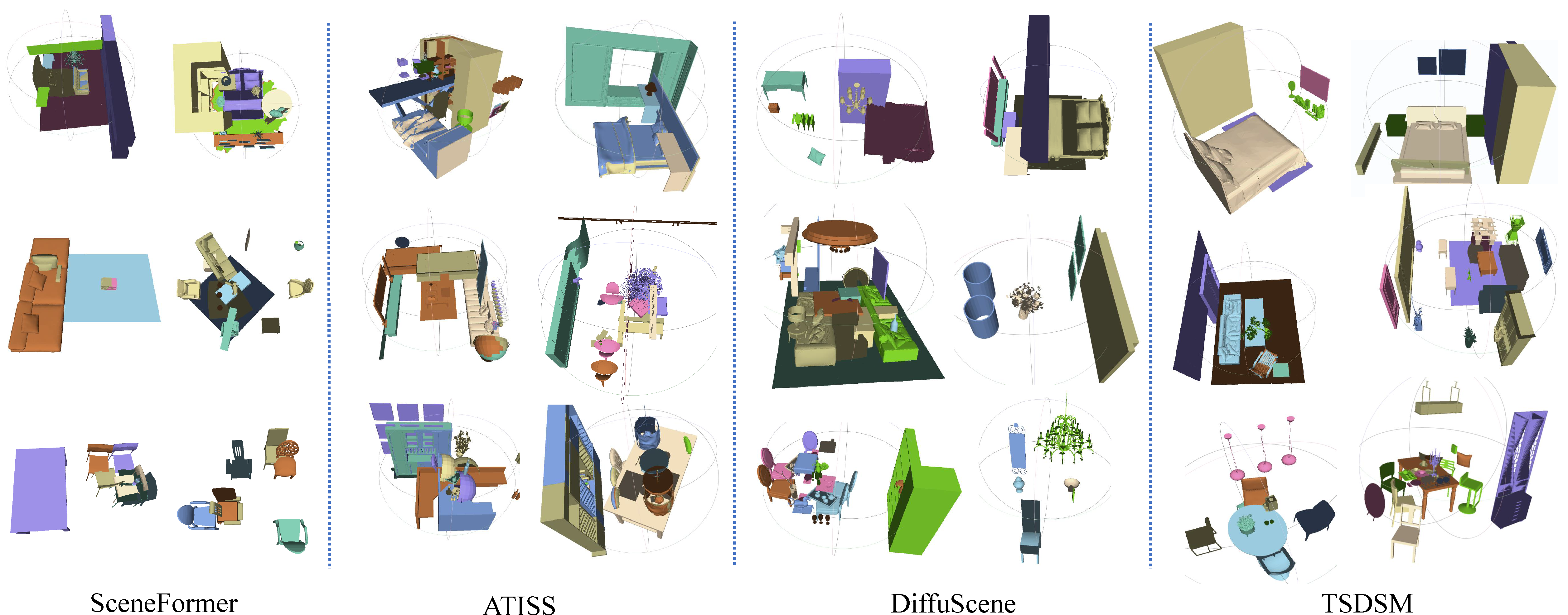

Abstract: Indoor scene generation has attracted significant attention recently because it is crucial for applications in gaming, virtual reality, and interior design. Current indoor scene generation methods can produce reasonable room layouts, but the results often lack diversity and realism. This is primarily due to the limited coverage of existing datasets, which include only large furniture and omit the small furnishings found in daily life. To address these challenges, we propose FurniScene, a large-scale 3D room dataset with intricate furnishing scenes from interior design professionals. Specifically, FurniScene consists of 11,698 rooms and 39,691 unique furniture CAD models of 89 different types, covering objects from large beds to small teacups on a coffee table. To better suit fine-grained indoor scene layout generation, we introduce a novel Two-Stage Diffusion Scene Model (TSDSM) and establish an evaluation benchmark for various indoor scene generation methods based on FurniScene. Quantitative and qualitative evaluations demonstrate the capability of our method to generate highly realistic indoor scenes. Our dataset and code will be publicly available soon.

