On the Prospects of Incorporating Large Language Models (LLMs) in Automated Planning and Scheduling (APS) (2401.02500v2)

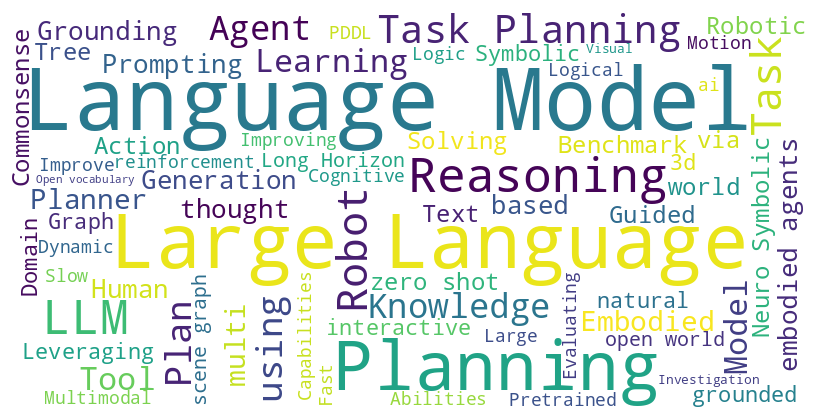

Abstract: Automated Planning and Scheduling is among the growing areas in AI where mention of LLMs has gained popularity. Based on a comprehensive review of 126 papers, this paper investigates eight categories based on the unique applications of LLMs in addressing various aspects of planning problems: language translation, plan generation, model construction, multi-agent planning, interactive planning, heuristics optimization, tool integration, and brain-inspired planning. For each category, we articulate the issues considered and existing gaps. A critical insight resulting from our review is that the true potential of LLMs unfolds when they are integrated with traditional symbolic planners, pointing towards a promising neuro-symbolic approach. This approach effectively combines the generative aspects of LLMs with the precision of classical planning methods. By synthesizing insights from existing literature, we underline the potential of this integration to address complex planning challenges. Our goal is to encourage the ICAPS community to recognize the complementary strengths of LLMs and symbolic planners, advocating for a direction in automated planning that leverages these synergistic capabilities to develop more advanced and intelligent planning systems.

- Llm-deliberation: Evaluating llms with interactive multi-agent negotiation games. arXiv preprint arXiv:2309.17234.

- Learning and Leveraging Verifiers to Improve Planning Capabilities of Pre-trained Language Models. arXiv preprint arXiv:2305.17077.

- Asai, M. 2018. Photo-Realistic Blocksworld Dataset. arXiv preprint arXiv:1812.01818.

- Graph of thoughts: Solving elaborate problems with large language models. arXiv preprint arXiv:2308.09687.

- Do as i can, not as i say: Grounding language in robotic affordances. In Conference on Robot Learning, 287–318. PMLR.

- A Framework to Generate Neurosymbolic PDDL-compliant Planners. arXiv preprint arXiv:2303.00438.

- Grounding large language models in interactive environments with online reinforcement learning. arXiv preprint arXiv:2302.02662.

- Learning to reason over scene graphs: a case study of finetuning GPT-2 into a robot language model for grounded task planning. Frontiers in Robotics and AI, 10.

- Open-vocabulary queryable scene representations for real world planning. In 2023 IEEE International Conference on Robotics and Automation (ICRA), 11509–11522. IEEE.

- Asking Before Action: Gather Information in Embodied Decision Making with Language Models. arXiv preprint arXiv:2305.15695.

- AutoTAMP: Autoregressive Task and Motion Planning with LLMs as Translators and Checkers. arXiv preprint arXiv:2306.06531.

- Scalable Multi-Robot Collaboration with Large Language Models: Centralized or Decentralized Systems? arXiv preprint arXiv:2309.15943.

- Dynamic Planning with a LLM. arXiv preprint arXiv:2308.06391.

- Optimal Scene Graph Planning with Large Language Model Guidance. arXiv preprint arXiv:2309.09182.

- Plan-Seq-Learn: Language Model Guided RL for Solving Long Horizon Robotics Tasks. In 2nd Workshop on Language and Robot Learning: Language as Grounding.

- Emergent Cooperation and Strategy Adaptation in Multi-Agent Systems: An Extended Coevolutionary Theory with LLMs. Electronics, 12(12): 2722.

- Integrating Action Knowledge and LLMs for Task Planning and Situation Handling in Open Worlds. arXiv preprint arXiv:2305.17590.

- Leveraging Commonsense Knowledge from Large Language Models for Task and Motion Planning. In RSS 2023 Workshop on Learning for Task and Motion Planning.

- Task and motion planning with large language models for object rearrangement. arXiv preprint arXiv:2303.06247.

- Palm-e: An embodied multimodal language model. arXiv preprint arXiv:2303.03378.

- From Static to Dynamic: A Continual Learning Framework for Large Language Models. arXiv preprint arXiv:2310.14248.

- Halo: Estimation and reduction of hallucinations in open-source weak large language models. arXiv preprint arXiv:2308.11764.

- Fast and Slow Planning. arXiv preprint arXiv:2303.04283.

- Alphazero-like tree-search can guide large language model decoding and training. arXiv preprint arXiv:2309.17179.

- Strategic Reasoning with Language Models. arXiv preprint arXiv:2305.19165.

- Openagi: When llm meets domain experts. arXiv preprint arXiv:2304.04370.

- HTN planning: Overview, comparison, and beyond. Artif. Intell., 222: 124–156.

- LPG: A Planner Based on Local Search for Planning Graphs with Action Costs. In Aips, volume 2, 281–290.

- Automated Planning: Theory and Practice. The Morgan Kaufmann Series in Artificial Intelligence. Amsterdam: Morgan Kaufmann. ISBN 978-1-55860-856-6.

- Exploring the Limitations of using Large Language Models to Fix Planning Tasks.

- Generating executable action plans with environmentally-aware language models. arXiv preprint arXiv:2210.04964.

- GG-LLM: Geometrically Grounding Large Language Models for Zero-shot Human Activity Forecasting in Human-Aware Task Planning. arXiv preprint arXiv:2310.20034.

- Conceptgraphs: Open-vocabulary 3d scene graphs for perception and planning. arXiv preprint arXiv:2309.16650.

- Leveraging Pre-trained Large Language Models to Construct and Utilize World Models for Model-based Task Planning. arXiv preprint arXiv:2305.14909.

- Reasoning with language model is planning with world model. arXiv preprint arXiv:2305.14992.

- ToolkenGPT: Augmenting Frozen Language Models with Massive Tools via Tool Embeddings. arXiv preprint arXiv:2305.11554.

- SayCanPay: Heuristic Planning with Large Language Models using Learnable Domain Knowledge. arXiv preprint arXiv:2308.12682.

- VAL: automatic plan validation, continuous effects and mixed initiative planning using PDDL. In 16th IEEE International Conference on Tools with Artificial Intelligence, 294–301.

- Tool documentation enables zero-shot tool-usage with large language models. arXiv preprint arXiv:2308.00675.

- Enabling Efficient Interaction between an Algorithm Agent and an LLM: A Reinforcement Learning Approach. arXiv preprint arXiv:2306.03604.

- Chain-of-Symbol Prompting Elicits Planning in Large Langauge Models. arXiv preprint arXiv:2305.10276.

- Tree-Planner: Efficient Close-loop Task Planning with Large Language Models. arXiv preprint arXiv:2310.08582.

- Tree-of-mixed-thought: Combining fast and slow thinking for multi-hop visual reasoning. arXiv preprint arXiv:2308.09658.

- Language Models, Agent Models, and World Models: The LAW for Machine Reasoning and Planning. arXiv:2312.05230.

- Language models as zero-shot planners: Extracting actionable knowledge for embodied agents. In International Conference on Machine Learning, 9118–9147. PMLR.

- Voxposer: Composable 3d value maps for robotic manipulation with language models. arXiv preprint arXiv:2307.05973.

- Grounded decoding: Guiding text generation with grounded models for robot control. arXiv preprint arXiv:2303.00855.

- Inner monologue: Embodied reasoning through planning with language models. arXiv preprint arXiv:2207.05608.

- Neuro Symbolic Reasoning for Planning: Counterexample Guided Inductive Synthesis using Large Language Models and Satisfiability Solving. arXiv preprint arXiv:2309.16436.

- CoPAL: Corrective Planning of Robot Actions with Large Language Models. arXiv preprint arXiv:2310.07263.

- SMART-LLM: Smart Multi-Agent Robot Task Planning using Large Language Models. arXiv preprint arXiv:2309.10062.

- Housekeep: Tidying virtual households using commonsense reasoning. In European Conference on Computer Vision, 355–373. Springer.

- Kautz, H. A. 2022. The third AI summer: AAAI Robert S. Engelmore Memorial Lecture. AI Magazine, 43(1): 105–125.

- There and back again: extracting formal domains for controllable neurosymbolic story authoring. In Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, volume 19, 64–74.

- DynaCon: Dynamic Robot Planner with Contextual Awareness via LLMs. arXiv preprint arXiv:2309.16031.

- Exploiting Language Models as a Source of Knowledge for Cognitive Agents. arXiv preprint arXiv:2310.06846.

- Getting from generative ai to trustworthy ai: What llms might learn from cyc. arXiv preprint arXiv:2308.04445.

- Api-bank: A benchmark for tool-augmented llms. arXiv preprint arXiv:2304.08244.

- Human-Centered Planning. arXiv preprint arXiv:2311.04403.

- SwiftSage: A Generative Agent with Fast and Slow Thinking for Complex Interactive Tasks. arXiv preprint arXiv:2305.17390.

- Videodirectorgpt: Consistent multi-scene video generation via llm-guided planning. arXiv preprint arXiv:2309.15091.

- Text2motion: From natural language instructions to feasible plans. arXiv preprint arXiv:2303.12153.

- Llm+ p: Empowering large language models with optimal planning proficiency. arXiv preprint arXiv:2304.11477.

- Reflect: Summarizing robot experiences for failure explanation and correction. arXiv preprint arXiv:2306.15724.

- Code Models are Zero-shot Precondition Reasoners. arXiv preprint arXiv:2311.09601.

- Chameleon: Plug-and-play compositional reasoning with large language models. arXiv preprint arXiv:2304.09842.

- Neuro-symbolic causal language planning with commonsense prompting. arXiv e-prints, arXiv–2206.

- Multimodal Procedural Planning via Dual Text-Image Prompting. arXiv preprint arXiv:2305.01795.

- Reasoning on graphs: Faithful and interpretable large language model reasoning. arXiv preprint arXiv:2310.01061.

- Roco: Dialectic multi-robot collaboration with large language models. arXiv preprint arXiv:2307.04738.

- PDDL-the planning domain definition language.

- Evaluating Cognitive Maps and Planning in Large Language Models with CogEval. arXiv preprint arXiv:2309.15129.

- Diversity of Thought Improves Reasoning Abilities of Large Language Models. arXiv preprint arXiv:2310.07088.

- Do embodied agents dream of pixelated sheep?: Embodied decision making using language guided world modelling. arXiv preprint arXiv:2301.12050.

- Plansformer: Generating symbolic plans using transformers. arXiv preprint arXiv:2212.08681.

- Understanding the Capabilities of Large Language Models for Automated Planning. arXiv preprint arXiv:2305.16151.

- Plansformer Tool: Demonstrating Generation of Symbolic Plans Using Transformers. In IJCAI, volume 2023, 7158–7162. International Joint Conferences on Artificial Intelligence.

- Logic-lm: Empowering large language models with symbolic solvers for faithful logical reasoning. arXiv preprint arXiv:2305.12295.

- Human-Assisted Continual Robot Learning with Foundation Models. arXiv preprint arXiv:2309.14321.

- Improving Generalization in Task-oriented Dialogues with Workflows and Action Plans. arXiv preprint arXiv:2306.01729.

- Saynav: Grounding large language models for dynamic planning to navigation in new environments. arXiv preprint arXiv:2309.04077.

- Planning with large language models via corrective re-prompting. In NeurIPS 2022 Foundation Models for Decision Making Workshop.

- Sayplan: Grounding large language models using 3d scene graphs for scalable task planning. arXiv preprint arXiv:2307.06135.

- Robots that ask for help: Uncertainty alignment for large language model planners. arXiv preprint arXiv:2307.01928.

- Tptu: Task planning and tool usage of large language model-based ai agents. arXiv preprint arXiv:2308.03427.

- Artificial Intelligence, A Modern Approach. Second Edition.

- From Cooking Recipes to Robot Task Trees–Improving Planning Correctness and Task Efficiency by Leveraging LLMs with a Knowledge Network. arXiv preprint arXiv:2309.09181.

- Evaluation of Pretrained Large Language Models in Embodied Planning Tasks. In International Conference on Artificial General Intelligence, 222–232. Springer.

- RoboVQA: Multimodal Long-Horizon Reasoning for Robotics. arXiv preprint arXiv:2311.00899.

- Navigation with large language models: Semantic guesswork as a heuristic for planning. arXiv preprint arXiv:2310.10103.

- Hugginggpt: Solving ai tasks with chatgpt and its friends in huggingface. arXiv preprint arXiv:2303.17580.

- Vision-Language Interpreter for Robot Task Planning. arXiv preprint arXiv:2311.00967.

- Generalized Planning in PDDL Domains with Pretrained Large Language Models. arXiv preprint arXiv:2305.11014.

- PDDL planning with pretrained large language models. In NeurIPS 2022 Foundation Models for Decision Making Workshop.

- ProgPrompt: program generation for situated robot task planning using large language models. Autonomous Robots, 1–14.

- Llm-planner: Few-shot grounded planning for embodied agents with large language models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2998–3009.

- Cognitive architectures for language agents. arXiv preprint arXiv:2309.02427.

- AdaPlanner: Adaptive Planning from Feedback with Language Models. arXiv preprint arXiv:2305.16653.

- Large Language Models are In-Context Semantic Reasoners rather than Symbolic Reasoners. arXiv preprint arXiv:2305.14825.

- Can Large Language Models Really Improve by Self-critiquing Their Own Plans? arXiv preprint arXiv:2310.08118.

- PlanBench: An Extensible Benchmark for Evaluating Large Language Models on Planning and Reasoning about Change. In Thirty-seventh Conference on Neural Information Processing Systems Datasets and Benchmarks Track.

- Large Language Models Still Can’t Plan (A Benchmark for LLMs on Planning and Reasoning about Change). arXiv preprint arXiv:2206.10498.

- On the planning abilities of large language models (a critical investigation with a proposed benchmark). arXiv preprint arXiv:2302.06706.

- Conformal Temporal Logic Planning using Large Language Models: Knowing When to Do What and When to Ask for Help. arXiv preprint arXiv:2309.10092.

- Plan-and-solve prompting: Improving zero-shot chain-of-thought reasoning by large language models. arXiv preprint arXiv:2305.04091.

- Guiding language model reasoning with planning tokens. arXiv preprint arXiv:2310.05707.

- Describe, explain, plan and select: Interactive planning with large language models enables open-world multi-task agents. arXiv preprint arXiv:2302.01560.

- A Prefrontal Cortex-inspired Architecture for Planning in Large Language Models. arXiv preprint arXiv:2310.00194.

- Unleashing the Power of Graph Learning through LLM-based Autonomous Agents. arXiv preprint arXiv:2309.04565.

- From Word Models to World Models: Translating from Natural Language to the Probabilistic Language of Thought. arXiv preprint arXiv:2306.12672.

- Plan, Eliminate, and Track–Language Models are Good Teachers for Embodied Agents. arXiv preprint arXiv:2305.02412.

- Integrating Common Sense and Planning with Large Language Models for Room Tidying. In RSS 2023 Workshop on Learning for Task and Motion Planning.

- Embodied task planning with large language models. arXiv preprint arXiv:2307.01848.

- Translating natural language to planning goals with large-language models. arXiv preprint arXiv:2302.05128.

- Gentopia: A collaborative platform for tool-augmented llms. arXiv preprint arXiv:2308.04030.

- Xu, L. 1995. Case based reasoning. IEEE Potentials, 13(5): 10–13.

- Creative Robot Tool Use with Large Language Models. arXiv preprint arXiv:2310.13065.

- LLM-Grounder: Open-Vocabulary 3D Visual Grounding with Large Language Model as an Agent. arXiv preprint arXiv:2309.12311.

- OceanChat: Piloting Autonomous Underwater Vehicles in Natural Language. arXiv preprint arXiv:2309.16052.

- Learning Automata-Based Task Knowledge Representation from Large-Scale Generative Language Models. arXiv preprint arXiv:2212.01944.

- On the Planning, Search, and Memorization Capabilities of Large Language Models. arXiv preprint arXiv:2309.01868.

- Yang, Z. 2023. Neuro-Symbolic AI Approaches to Enhance Deep Neural Networks with Logical Reasoning and Knowledge Integration. Ph.D. thesis, Arizona State University.

- Coupling Large Language Models with Logic Programming for Robust and General Reasoning from Text. arXiv preprint arXiv:2307.07696.

- Tree of thoughts: Deliberate problem solving with large language models. arXiv preprint arXiv:2305.10601.

- Statler: State-maintaining language models for embodied reasoning. arXiv preprint arXiv:2306.17840.

- EIPE-text: Evaluation-Guided Iterative Plan Extraction for Long-Form Narrative Text Generation. arXiv preprint arXiv:2310.08185.

- Plan4mc: Skill reinforcement learning and planning for open-world minecraft tasks. arXiv preprint arXiv:2303.16563.

- Distilling Script Knowledge from Large Language Models for Constrained Language Planning. arXiv preprint arXiv:2305.05252.

- Parsel: Algorithmic Reasoning with Language Models by Composing Decompositions. In Thirty-seventh Conference on Neural Information Processing Systems.

- Large language models as zero-shot human models for human-robot interaction. arXiv preprint arXiv:2303.03548.

- Prefer: Prompt ensemble learning via feedback-reflect-refine. arXiv preprint arXiv:2308.12033.

- Planning with Logical Graph-based Language Model for Instruction Generation. arXiv:2308.13782.

- Building cooperative embodied agents modularly with large language models. arXiv preprint arXiv:2307.02485.

- Bootstrap your own skills: Learning to solve new tasks with large language model guidance. arXiv preprint arXiv:2310.10021.

- Large Language Models as Commonsense Knowledge for Large-Scale Task Planning. arXiv preprint arXiv:2305.14078.

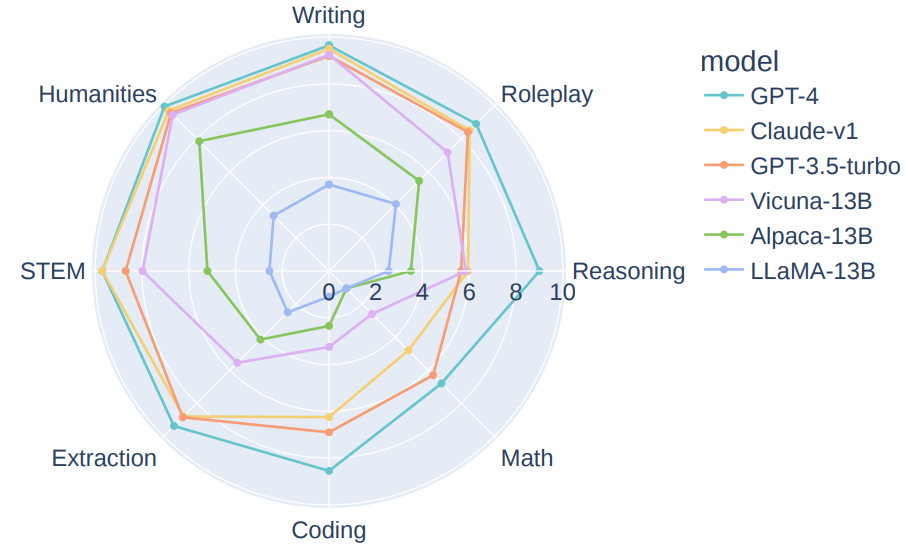

- Judging LLM-as-a-judge with MT-Bench and Chatbot Arena. arXiv:2306.05685.

- Steve-Eye: Equipping LLM-based Embodied Agents with Visual Perception in Open Worlds. arXiv preprint arXiv:2310.13255.

- ISR-LLM: Iterative Self-Refined Large Language Model for Long-Horizon Sequential Task Planning. arXiv preprint arXiv:2308.13724.
