Diffusion Model with Perceptual Loss (2401.00110v7)

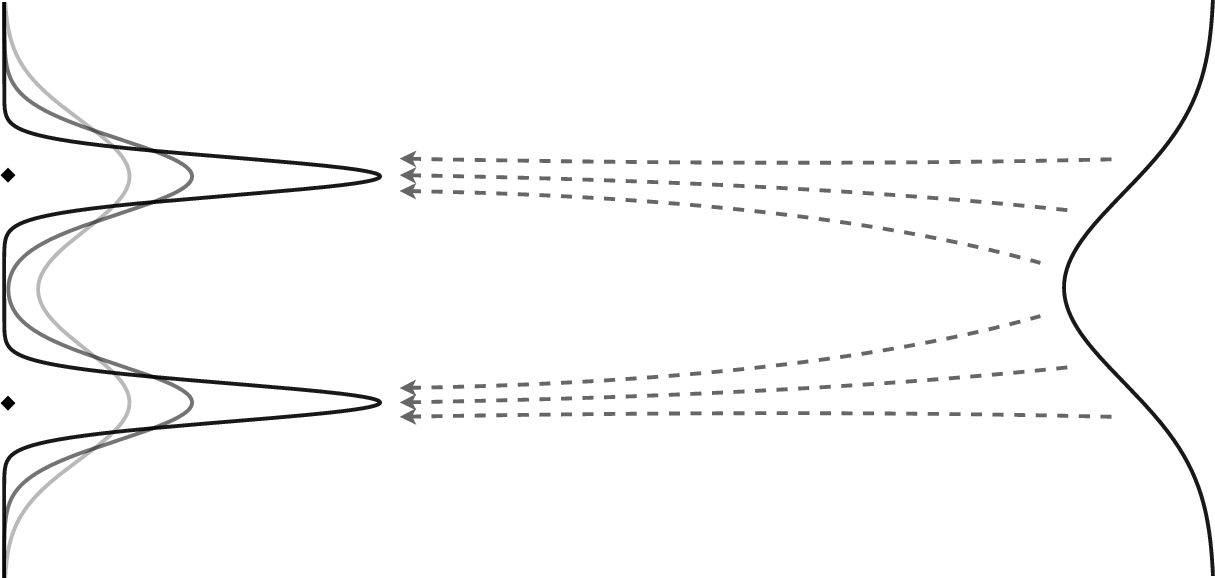

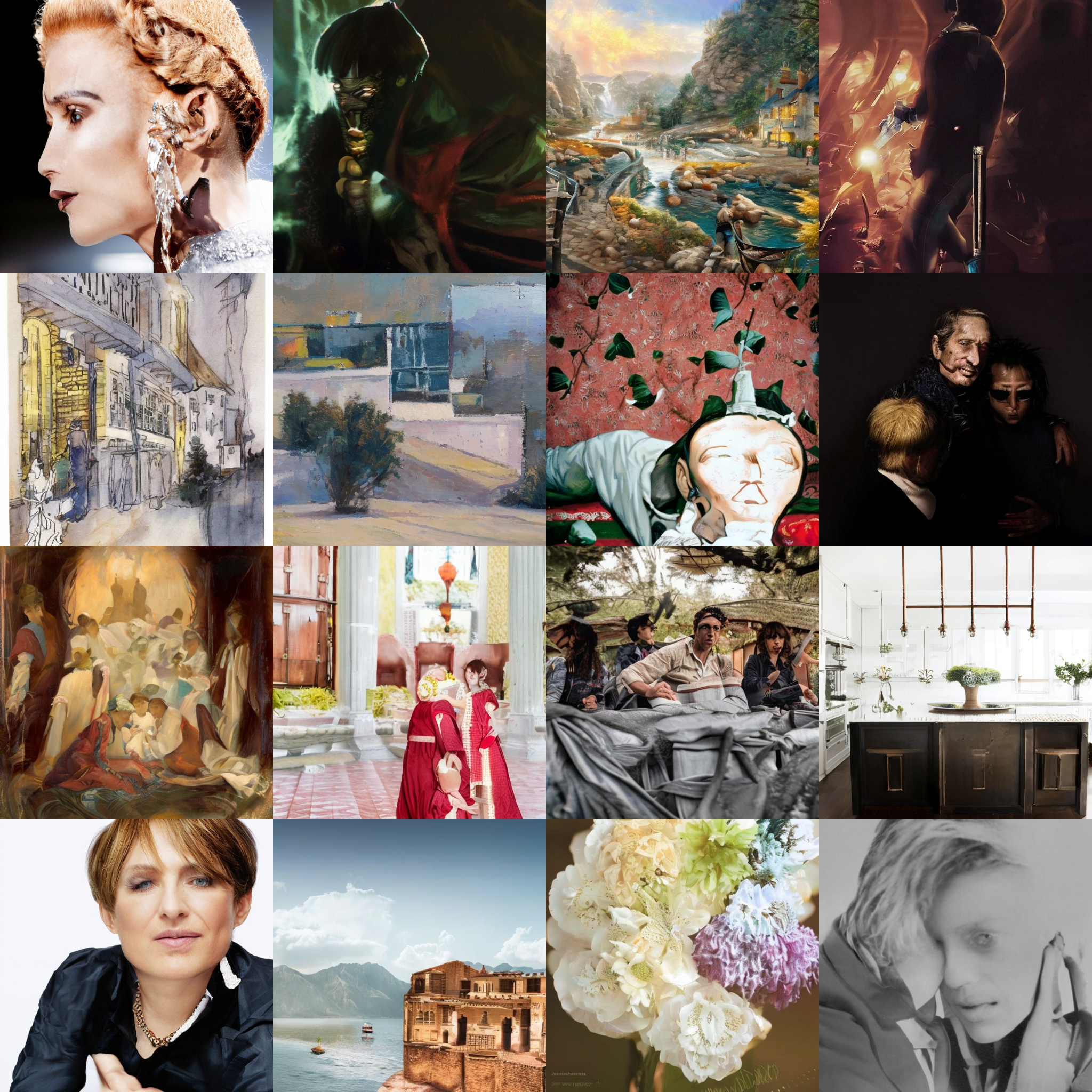

Abstract: Diffusion models without guidance generate very unrealistic samples. Guidance is used ubiquitously, and previous research has attributed its effect to low-temperature sampling that improves quality by trading off diversity. However, this perspective is incomplete. Our research shows that the choice of the loss objective is the underlying reason raw diffusion models fail to generate desirable samples. In this paper, (1) our analysis shows that the loss objective plays an important role in shaping the learned distribution and the MSE loss derived from theories holds assumptions that misalign with data in practice; (2) we explain the effectiveness of guidance methods from a new perspective of perceptual supervision; (3) we validate our hypothesis by training a diffusion model with a novel self-perceptual loss objective and obtaining much more realistic samples without the need for guidance. We hope our work paves the way for future explorations of the diffusion loss objective.

- Stable video diffusion: Scaling latent video diffusion models to large datasets, 2023.

- Align your latents: High-resolution video synthesis with latent diffusion models, 2023.

- Magicdance: Realistic human dance video generation with motions & facial expressions transfer, 2023.

- Pixart-α𝛼\alphaitalic_α: Fast training of diffusion transformer for photorealistic text-to-image synthesis, 2023.

- Diffusion models beat gans on image synthesis, 2021.

- Structure and content-guided video synthesis with diffusion models, 2023.

- Animatediff: Animate your personalized text-to-image diffusion models without specific tuning, 2023.

- Gans trained by a two time-scale update rule converge to a local nash equilibrium, 2018.

- Imagen video: High definition video generation with diffusion models, 2022.

- Denoising diffusion probabilistic models, 2020.

- Classifier-free diffusion guidance, 2022.

- Aapo Hyvärinen. Estimation of non-normalized statistical models by score matching. Journal of Machine Learning Research, 6(24):695–709, 2005.

- Elucidating the design space of diffusion-based generative models, 2022.

- Analyzing and improving the training dynamics of diffusion models, 2023.

- Common diffusion noise schedules and sample steps are flawed, 2023.

- Microsoft coco: Common objects in context, 2015.

- Flow matching for generative modeling, 2023.

- Flow straight and fast: Learning to generate and transfer data with rectified flow, 2022.

- Dpm-solver: A fast ode solver for diffusion probabilistic model sampling in around 10 steps, 2022.

- Dpm-solver++: Fast solver for guided sampling of diffusion probabilistic models, 2023.

- On aliased resizing and surprising subtleties in gan evaluation, 2022.

- Scalable diffusion models with transformers, 2023.

- Wuerstchen: An efficient architecture for large-scale text-to-image diffusion models, 2023.

- Sdxl: Improving latent diffusion models for high-resolution image synthesis, 2023.

- Dreamfusion: Text-to-3d using 2d diffusion, 2022.

- Learning transferable visual models from natural language supervision, 2021.

- Hierarchical text-conditional image generation with clip latents, 2022.

- High-resolution image synthesis with latent diffusion models, 2022.

- Photorealistic text-to-image diffusion models with deep language understanding, 2022.

- Improved techniques for training gans, 2016.

- Progressive distillation for fast sampling of diffusion models, 2022.

- Laion-5b: An open large-scale dataset for training next generation image-text models, 2022.

- Mvdream: Multi-view diffusion for 3d generation, 2023.

- Make-a-video: Text-to-video generation without text-video data, 2022.

- Deep unsupervised learning using nonequilibrium thermodynamics, 2015.

- Denoising diffusion implicit models, 2022.

- Score-based generative modeling through stochastic differential equations, 2021.

- Pascal Vincent. A Connection Between Score Matching and Denoising Autoencoders. Neural Computation, 23(7):1661–1674, 07 2011.

- Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res., 11:3371–3408, dec 2010.

- Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13:600–612, 2004.

- Prolificdreamer: High-fidelity and diverse text-to-3d generation with variational score distillation, 2023.

- Multiscale structural similarity for image quality assessment. In The Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, volume 2, pages 1398–1402 Vol.2, 2003.

- Magicprop: Diffusion-based video editing via motion-aware appearance propagation, 2023.

- The unreasonable effectiveness of deep features as a perceptual metric, 2018.

- Movq: Modulating quantized vectors for high-fidelity image generation, 2022.

- Magicvideo: Efficient video generation with latent diffusion models, 2023.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.