- The paper introduces a novel method (S2M) that converts abundant single-turn QA datasets into multi-turn conversational formats.

- The methodology leverages a QA pair generator, a knowledge graph-based reassembler, and a question rewriter to ensure coherent dialogue.

- Experimental results on the QuAC benchmark show improved performance with DEBERTA-S2M and higher human evaluation scores.

S2M: Converting Single-Turn to Multi-Turn Datasets for Conversational Question Answering

The paper presents a novel approach for improving Conversational Question Answering (CQA) performance by transforming single-turn QA datasets into multi-turn datasets. This transformation aims to close the gap between the wide availability of single-turn datasets and the scarcity of annotated multi-turn conversational datasets, which are crucial for advancing CQA systems.

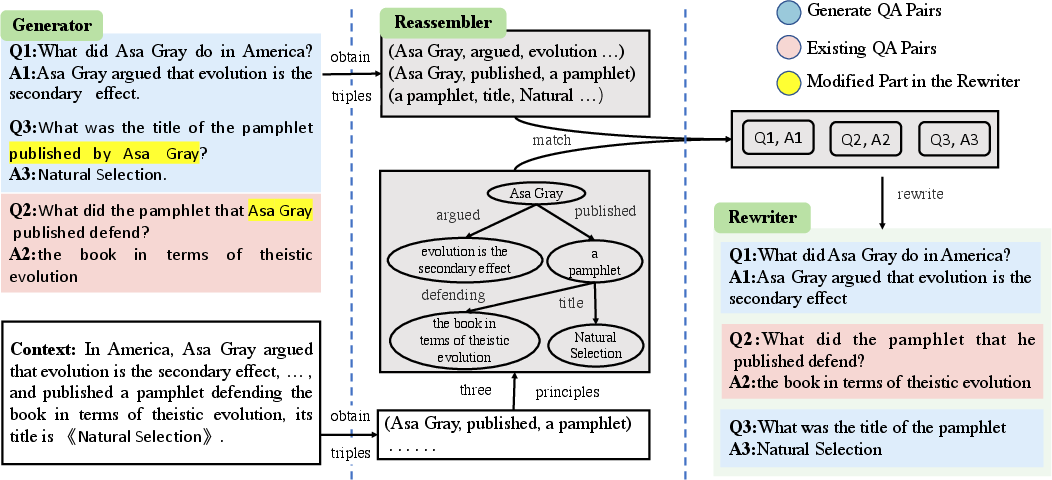

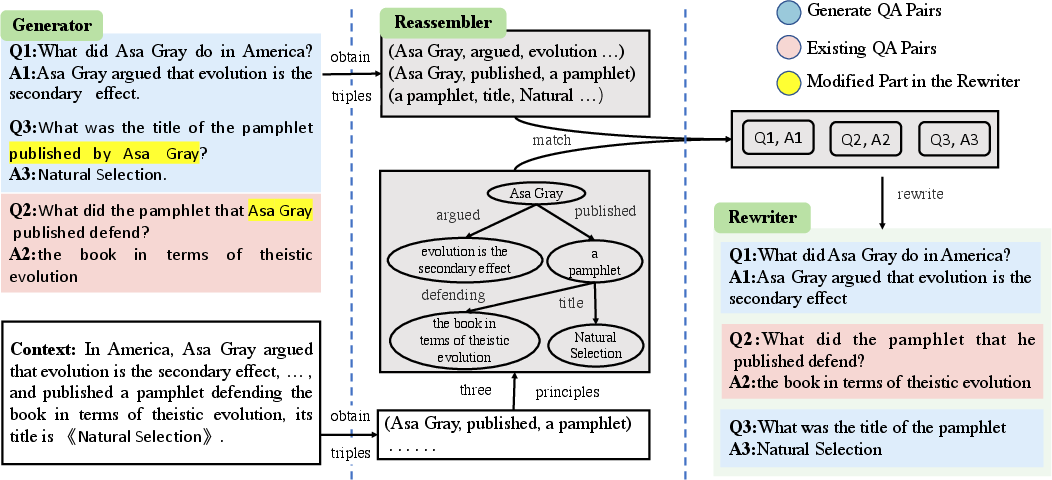

The core of S2M's approach is to take abundant single-turn data and convert it so that it mimics the characteristics of multi-turn dialogues, yielding richer, more context-aware QA pairs of the kind needed for realistic interactions. The method comprises three modules: a QA pair Generator that synthesizes candidate pairs, a QA pair Reassembler that uses a knowledge graph to assemble coherent sequences of QA pairs, and a question Rewriter that ensures conversational flow. This process addresses the distribution shift between single-turn and multi-turn datasets, which typically hurts performance when single-turn datasets are applied directly to CQA tasks.
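The three modules described above can be read as a simple pipeline. The following is a minimal sketch of that data flow only, not the authors' implementation: `QAPair`, `s2m_pipeline`, and the three toy stage functions are hypothetical names introduced for illustration, and each toy stage stands in for what the paper describes as a trained or graph-based component.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class QAPair:
    question: str
    answer: str


# Hypothetical stage signatures; the real modules are learned models.
GenerateFn = Callable[[str], List[QAPair]]
ReassembleFn = Callable[[str, List[QAPair]], List[QAPair]]
RewriteFn = Callable[[List[QAPair]], List[QAPair]]


def s2m_pipeline(
    context: str,
    seed_pairs: List[QAPair],
    generate: GenerateFn,
    reassemble: ReassembleFn,
    rewrite: RewriteFn,
) -> List[QAPair]:
    """Chain the three stages: generate candidates, order them, rewrite them."""
    candidates = list(seed_pairs) + generate(context)
    ordered = reassemble(context, candidates)
    return rewrite(ordered)


# Toy stand-ins (assumptions for illustration only).
def toy_generate(context: str) -> List[QAPair]:
    return [QAPair("What was Marie Curie's profession?", "physicist")]


def toy_reassemble(context: str, pairs: List[QAPair]) -> List[QAPair]:
    # Order pairs by where their answer first appears in the context.
    return sorted(pairs, key=lambda pair: context.find(pair.answer))


def identity_rewrite(pairs: List[QAPair]) -> List[QAPair]:
    return list(pairs)


context = "Marie Curie was a physicist. She was born in Warsaw."
seed = [QAPair("Where was Marie Curie born?", "Warsaw")]
dialogue = s2m_pipeline(context, seed, toy_generate, toy_reassemble, identity_rewrite)
for turn in dialogue:
    print(turn.question, "->", turn.answer)
```

Passing the stages in as functions keeps the sketch independent of any particular model; each toy function could be swapped for a real component without changing the pipeline itself.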

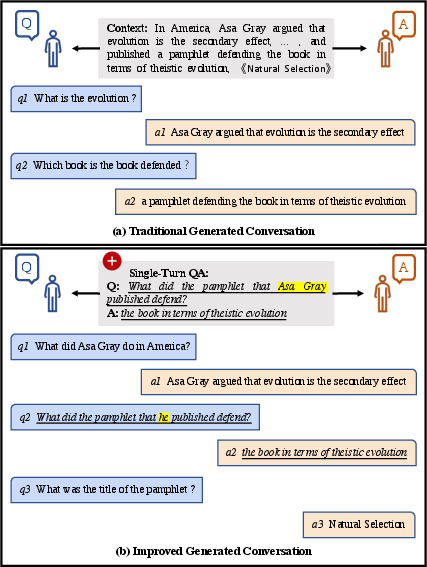

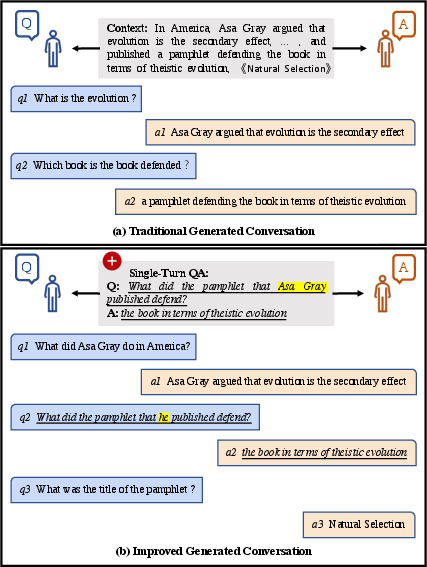

Figure 1: Two examples of generated conversations. The former represents traditional context-based conversation generation; the latter additionally considers the single-turn QA pair and rewrites it. Our method considers both context and additional single-turn question-answer pairs, thus generating more complete information.

Methodology Overview

QA Pair Generation and Knowledge Graph Construction

The QA pair Generator employs a self-training framework to produce high-quality candidate pairs from the available contexts, using a QAE loss function to score candidates according to how challenging and how noisy they are. A knowledge graph is then built from the same contexts: OpenIE6 extracts the relevant triples, which are aligned to form a structured, semantically meaningful sequence of QA interactions.
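To make the knowledge graph step more concrete, here is a minimal sketch of turning (subject, relation, object) triples into an adjacency structure and matching a QA pair to a triple. It rests on several assumptions: OpenIE6 is not invoked, so the triples are hard-coded as if they had already been extracted; `build_graph` and `align_pair` are hypothetical helpers; and the token-overlap matching is a stand-in, since the summary does not specify how the alignment is done.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def build_graph(triples: List[Triple]) -> Dict[str, List[Tuple[str, str]]]:
    """Map each entity to its outgoing (relation, object) edges."""
    graph: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for subj, rel, obj in triples:
        graph[subj].append((rel, obj))
        graph.setdefault(obj, [])  # make sure leaf entities are also nodes
    return dict(graph)


def align_pair(answer: str, triples: List[Triple]) -> int:
    """Return the index of the triple sharing the most tokens with the answer.

    Ties keep the earliest triple; -1 means no token overlap at all.
    """
    answer_tokens = set(answer.lower().split())
    best_index, best_overlap = -1, 0
    for index, triple in enumerate(triples):
        triple_tokens = set(" ".join(triple).lower().split())
        overlap = len(answer_tokens & triple_tokens)
        if overlap > best_overlap:
            best_index, best_overlap = index, overlap
    return best_index


# Hard-coded triples, assumed to be the output of an extractor.
triples = [
    ("Marie Curie", "was born in", "Warsaw"),
    ("Marie Curie", "won", "the Nobel Prize"),
    ("the Nobel Prize", "is awarded in", "Stockholm"),
]
graph = build_graph(triples)
print(graph["Marie Curie"])
print(align_pair("Warsaw", triples))
```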

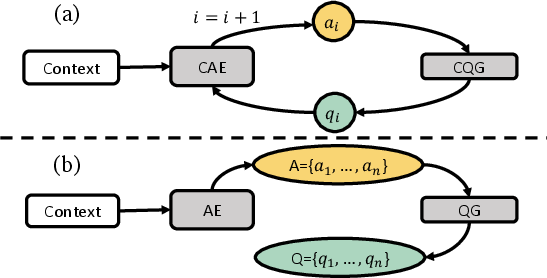

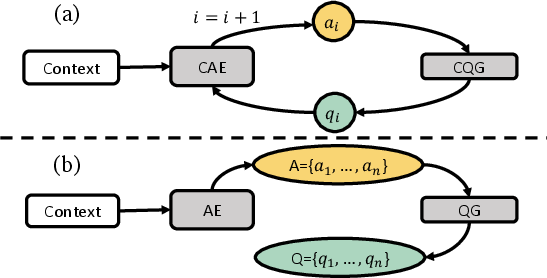

Figure 2: Two types of CQA dataset generation methods. Unlike method-b, method-a considers the conversational history. Although generation is slower, the resulting quality is higher.

Figure 3: Overview of our model, which consists of three main parts: a Single-Turn Candidate Question-Answer Pair, a QA pair Reassembler, and a Question Rewriter. The input to the model comprises the context and the existing single-turn question-answer pair (shown in red). The output is the multi-turn dialogue rewritten by the Rewriter.

Reassembler and Question Rewriter

The Reassembler traverses the knowledge graph to sequence QA pairs logically, ensuring contextual continuity. The final step rewrites the questions to better fit a conversational style. Traditional question rewriting turns context-dependent questions into self-contained ones; S2M applies the reverse process, using a dataset derived from CANARD. Adapting self-contained questions so that they depend on the preceding turns makes the resulting dialogue more coherent and realistic.
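The two steps above can be sketched with toy code. This is an illustration under stated assumptions rather than the paper's method: the summary does not say which traversal order the Reassembler uses, so breadth-first search is assumed here, and `toy_rewrite` replaces the trained Rewriter with a simple pronoun substitution. All function names and the example data are hypothetical.

```python
from collections import deque
from typing import Dict, List, Tuple

QA = Tuple[str, str]  # (question, answer)


def order_by_traversal(
    graph: Dict[str, List[str]],
    start: str,
    pairs_by_node: Dict[str, List[QA]],
) -> List[QA]:
    """Visit entities breadth-first and emit the QA pairs attached to each."""
    seen = {start}
    queue = deque([start])
    ordered: List[QA] = []
    while queue:
        node = queue.popleft()
        ordered.extend(pairs_by_node.get(node, []))
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return ordered


def toy_rewrite(pairs: List[QA], entity: str, pronoun: str) -> List[QA]:
    """Keep the first mention of the entity, then replace it with a pronoun."""
    rewritten: List[QA] = []
    mentioned = False
    for question, answer in pairs:
        if mentioned and entity in question:
            question = question.replace(entity, pronoun)
        elif entity in question:
            mentioned = True
        rewritten.append((question, answer))
    return rewritten


graph = {"Marie Curie": ["Warsaw", "Nobel Prize"], "Warsaw": [], "Nobel Prize": []}
pairs_by_node = {
    "Nobel Prize": [("Which prize did Marie Curie win?", "the Nobel Prize")],
    "Marie Curie": [("Who was Marie Curie?", "a physicist and chemist")],
    "Warsaw": [("Where was Marie Curie born?", "Warsaw")],
}

ordered = order_by_traversal(graph, "Marie Curie", pairs_by_node)
dialogue = toy_rewrite(ordered, "Marie Curie", "she")
for question, answer in dialogue:
    print(question, "->", answer)
```

In this toy example the traversal starts from the central entity, so the general question comes first and the later questions can rely on it, which is what allows the follow-up questions to use a pronoun.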

Experimental Results

Synthetic Dataset Efficacy

The authors' experiments show that S2M-generated synthetic data substantially improves baseline performance on the QuAC benchmark. DEBERTA-S2M, which is trained with this dataset, ranks among the top entries on the QuAC test set. The results indicate that S2M can produce high-quality conversational data from single-turn sources and that it outperforms existing generation approaches such as SIMSEEK.

Human Evaluation Insights

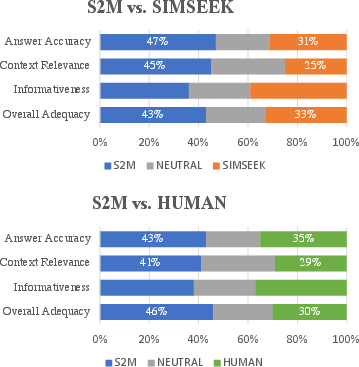

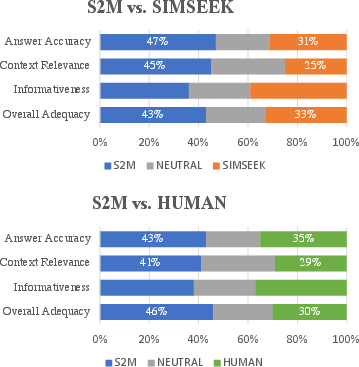

Human evaluation further supports these findings: annotators rated S2M higher on answer accuracy, context relevance, and conversational adequacy than competing generation methods. These results support the method's usefulness for generating datasets that can be used to train conversational AI systems.

Figure 4: Human Evaluation of S2M, SIMSEEK, and QuAC Datasets: Comparing Overall Adequacy and Additional Metrics.

Conclusion

S2M addresses the data scarcity problem in CQA. By converting single-turn QA data into multi-turn conversational datasets, it enlarges the training resources available for complex, context-aware question-answering models. Future work will likely explore joint optimization strategies to refine this transformation process and extend it to other dialogue-based AI applications.