Supervised Knowledge Makes Large Language Models Better In-context Learners

(2312.15918)

Abstract

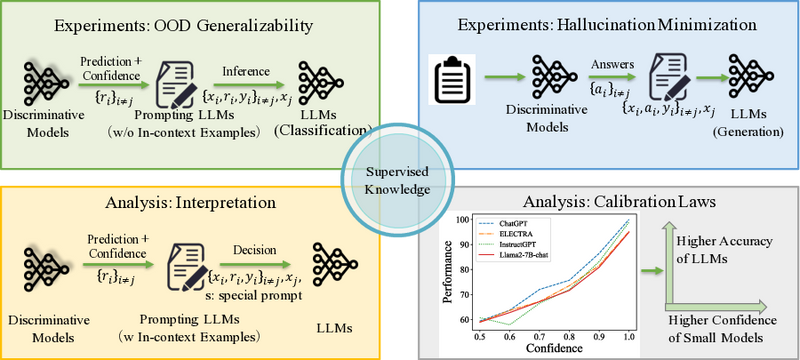

LLMs exhibit emerging in-context learning abilities through prompt engineering. The recent progress in large-scale generative models has further expanded their use in real-world language applications. However, the critical challenge of improving the generalizability and factuality of LLMs in natural language understanding and question answering remains under-explored. While previous in-context learning research has focused on enhancing models to adhere to users' specific instructions and quality expectations, and to avoid undesired outputs, little to no work has explored the use of task-Specific fine-tuned Language Models (SLMs) to improve LLMs' in-context learning during the inference stage. Our primary contribution is the establishment of a simple yet effective framework that enhances the reliability of LLMs as it: 1) generalizes out-of-distribution data, 2) elucidates how LLMs benefit from discriminative models, and 3) minimizes hallucinations in generative tasks. Using our proposed plug-in method, enhanced versions of Llama 2 and ChatGPT surpass their original versions regarding generalizability and factuality. We offer a comprehensive suite of resources, including 16 curated datasets, prompts, model checkpoints, and LLM outputs across 9 distinct tasks. The code and data are released at: https://github.com/YangLinyi/Supervised-Knowledge-Makes-Large-Language-Models-Better-In-context-Learners. Our empirical analysis sheds light on the advantages of incorporating discriminative models into LLMs and highlights the potential of our methodology in fostering more reliable LLMs.

Overview

- The paper introduces SuperContext, a framework that enhances the in-context learning of LLMs using supervised knowledge from fine-tuned language models.
- SuperContext adds a "receipt" of task-specific knowledge from an SLM to the LLM prompt to improve LLM decision-making, particularly on out-of-distribution data.
- Experiments show that SuperContext outperforms traditional in-context learning in both natural language understanding and question answering tasks.
- Integrating SLMs with LLMs yields more accurate outputs and rationales that align more closely with human expectations.
- The paper notes a limitation, namely that the framework relies on the SLM and the LLM being complementary, and suggests future research on broader applications.

Introduction

LLMs like ChatGPT and Llama 2 have shown adeptness across a broad spectrum of natural language processing tasks. Their in-context learning (ICL) capabilities have been particularly noteworthy, allowing them to carry out tasks with minimal additional training. Despite these advances, LLMs still struggle with generalization and with generating factually incorrect information, an issue referred to as "hallucination." Prior research in ICL has mainly focused on prompt engineering to direct model behavior but has not substantially explored how task-specific fine-tuned language models (SLMs) might improve in-context learning performance during inference. This paper introduces SuperContext, a framework that leverages supervised knowledge from SLMs to enhance the in-context learning capabilities of LLMs.

Methodology

The methodology section outlines the baseline in-context learning approach and then introduces the SuperContext strategy. Traditional ICL places examples in the prompt to steer the LLM's response. SuperContext augments this prompt with a "receipt" of supervised knowledge from an SLM, consisting of the SLM's predictions and confidence scores. This injects task-specific knowledge and helps the LLM make more reliable decisions, particularly on out-of-distribution data. The paper also formalizes the assumption behind the approach: the predictions of an LLM prompted with SLM receipts should be invariant to the specific choice of few-shot examples. Additionally, the in-context examples are resampled three times to mitigate the impact of example selection and order on model performance.
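The prompt construction described above can be sketched roughly as follows. This is a minimal illustration, not the authors' released code: the `Example` fields, the prompt wording, and the helper names `format_example`, `build_prompt`, and `resampled_prompts` are all hypothetical, and the SLM prediction and confidence are assumed to have been computed beforehand by a fine-tuned classifier.

```python
import random
from dataclasses import dataclass


@dataclass
class Example:
    text: str
    label: str             # gold label (unused for the test query)
    slm_label: str         # assumed: label predicted by the fine-tuned SLM
    slm_confidence: float  # assumed: SLM probability for slm_label, in [0, 1]


def format_example(ex: Example, with_answer: bool) -> str:
    """Render one example together with its SLM 'receipt'."""
    lines = [
        f"Input: {ex.text}",
        f"SLM prediction: {ex.slm_label} (confidence: {ex.slm_confidence:.2f})",
        f"Answer: {ex.label}" if with_answer else "Answer:",
    ]
    return "\n".join(lines)


def build_prompt(instruction: str, demos: list[Example], query: Example) -> str:
    """Instruction, then demonstrations with answers, then the unanswered query."""
    parts = [instruction]
    parts += [format_example(d, with_answer=True) for d in demos]
    parts.append(format_example(query, with_answer=False))
    return "\n\n".join(parts)


def resampled_prompts(instruction: str, pool: list[Example], query: Example,
                      k: int = 2, rounds: int = 3, seed: int = 0) -> list[str]:
    """Draw `rounds` independent sets of k demonstrations (three by default)."""
    rng = random.Random(seed)
    return [build_prompt(instruction, rng.sample(pool, k), query)
            for _ in range(rounds)]
```

Each of the three prompts would be sent to the LLM separately and the results aggregated, for example by averaging the metric, so that no single choice or ordering of demonstrations dominates the reported score. How the paper aggregates the runs is not stated in this summary.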

Experiments and Results

Experiments were conducted across various natural language understanding (NLU) and question answering (QA) tasks. ELECTRA-large and RoBERTa-large were used as SLMs, while ChatGPT and Llama 2 served as the backbone LLMs. In NLU tasks, SuperContext consistently outperformed both traditional ICL methods and the original LLMs, signaling its effectiveness in out-of-distribution (OOD) generalization and factual accuracy. In QA, SuperContext was particularly effective at reducing hallucinations, showing substantial improvements over existing methods in both zero-shot and few-shot settings.

Analysis and Discussion

The analysis section offers insights into how the integration of SLMs alters the LLMs' decision-making. Notably, SLM results led the LLM to reverse its output in a small but significant proportion of cases, and these reversals improved accuracy. Furthermore, a post-hoc analysis suggests that SuperContext enables better use of in-context examples and produces rationales that are more closely aligned with human expectations. The paper also observes that LLM performance correlates positively with the SLMs' confidence, which supports the validity of the approach. Lastly, while practical and effective, the framework has limitations, such as its reliance on the complementarity of SLMs and LLMs and the need for broader exploration across different LLMs.
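The two quantitative observations in this analysis, reversals and the link between SLM confidence and LLM accuracy, could be measured along the following lines. This is a hedged sketch rather than the paper's evaluation code: it assumes a "reversal" means the LLM's final answer differs from the SLM's prediction (one possible reading of the summary), and the function names, record layouts, and equal-width binning scheme are illustrative choices.

```python
from collections import defaultdict


def reversal_stats(records):
    """records: (slm_pred, llm_pred, gold) tuples.

    Returns (share of cases where the LLM answer differs from the SLM,
             accuracy of the LLM on exactly those cases).
    """
    records = list(records)
    reversed_cases = [(s, l, g) for s, l, g in records if s != l]
    rate = len(reversed_cases) / len(records) if records else 0.0
    acc = (sum(l == g for _, l, g in reversed_cases) / len(reversed_cases)
           if reversed_cases else 0.0)
    return rate, acc


def accuracy_by_confidence(records, n_bins=5):
    """records: (slm_confidence, llm_correct) pairs.

    Groups cases into equal-width confidence bins and returns
    {bin_index: LLM accuracy} for the non-empty bins.
    """
    hits, totals = defaultdict(int), defaultdict(int)
    for conf, correct in records:
        b = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the top bin
        totals[b] += 1
        hits[b] += int(correct)
    return {b: hits[b] / totals[b] for b in sorted(totals)}
```

If LLM accuracy rises from the lower to the higher bins, that pattern would be consistent with the positive correlation reported in the paper.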

Conclusion

In summary, SuperContext demonstrates the potential of task-specific SLMs to significantly improve the reliability of LLMs in in-context learning tasks, especially in out-of-distribution scenarios. It provides a new dimension to the interaction between SLMs and LLMs, suggesting a promising avenue for enhancing the performance of generative language models without the need for extensive fine-tuning or reliance on large, potentially unwieldy external knowledge bases. Future work may expand the application of SuperContext to other text generation tasks and explore its utility in real-world applications.
