Illuminating the Black Box: A Psychometric Investigation into the Multifaceted Nature of Large Language Models (2312.14202v1)

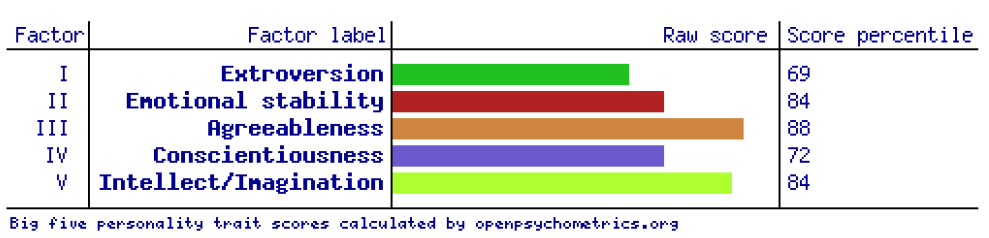

Abstract: This study explores the idea of AI Personality, or AInality, suggesting that LLMs exhibit patterns similar to human personalities. Assuming that LLMs share these patterns with humans, we investigate the use of human-centered psychometric tests, such as the Myers-Briggs Type Indicator (MBTI), Big Five Inventory (BFI), and Short Dark Triad (SD3), to identify and confirm LLM personality types. By introducing role-play prompts, we demonstrate the adaptability of LLMs, showing their ability to switch dynamically between different personality types. Using projective tests, such as the Washington University Sentence Completion Test (WUSCT), we uncover hidden aspects of LLM personalities that are not easily accessible through direct questioning. Projective tests allowed for a deep exploration of LLMs' cognitive processes and thought patterns and gave us a multidimensional view of AInality. Our machine learning analysis revealed that LLMs exhibit distinct AInality traits and manifest diverse personality types, demonstrating dynamic shifts in response to external instructions. This study pioneers the application of projective tests to LLMs, shedding light on their diverse and adaptable AInality traits.
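The abstract describes giving human self-report inventories such as the BFI to an LLM, optionally under a role-play prompt, and reading personality scores from the answers. The sketch below shows what that loop might look like in its simplest form. It rests on several assumptions that are not the paper's actual setup: a 1 to 5 agreement scale, a hypothetical `ask` callable standing in for the model, a hypothetical `stub_model` in place of a real LLM, and placeholder items that are not the official BFI wording or scoring key. Reverse-keyed items are flipped before averaging, which is the standard practice for Likert inventories.

```python
from statistics import mean

SCALE_MAX = 5  # assumed 1-5 agreement scale

# Hypothetical placeholder items: (trait, statement, reverse_keyed).
# These are NOT the official BFI items or scoring key.
ITEMS = [
    ("extraversion", "I am talkative.", False),
    ("extraversion", "I tend to be quiet.", True),
    ("agreeableness", "I am considerate toward others.", False),
    ("agreeableness", "I often find fault with others.", True),
]


def build_prompt(persona, statement):
    """Wrap one item in a hypothetical role-play prompt (persona is optional)."""
    role = f"You are role-playing the following character: {persona}. " if persona else ""
    return (
        f"{role}Rate how well this statement describes you on a scale "
        f"from 1 (disagree strongly) to {SCALE_MAX} (agree strongly). "
        f"Answer with a single digit.\nStatement: {statement}"
    )


def parse_rating(reply):
    """Return the first digit in 1..SCALE_MAX found in the reply, else None."""
    for ch in reply:
        if ch.isdigit() and 1 <= int(ch) <= SCALE_MAX:
            return int(ch)
    return None


def score(ask, persona=None):
    """Average the item ratings per trait, flipping reverse-keyed items."""
    per_trait = {}
    for trait, statement, reverse in ITEMS:
        rating = parse_rating(ask(build_prompt(persona, statement)))
        if rating is None:
            continue  # skip replies that contain no usable rating
        if reverse:
            rating = SCALE_MAX + 1 - rating
        per_trait.setdefault(trait, []).append(rating)
    return {trait: mean(vals) for trait, vals in per_trait.items()}


def stub_model(prompt):
    """Hypothetical stand-in for an LLM: an 'outgoing' persona answers sociably."""
    outgoing = "outgoing" in prompt
    if "talkative" in prompt:
        return "5" if outgoing else "2"
    if "quiet" in prompt:
        return "1" if outgoing else "4"
    return "3"


if __name__ == "__main__":
    print(score(stub_model))
    print(score(stub_model, persona="an outgoing party host"))
```

Comparing `score(stub_model)` with `score(stub_model, persona=...)` shows, in toy form, the kind of persona-induced shift the abstract reports. A real study would swap `stub_model` for an actual model call and use the published item wording and scoring keys.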
