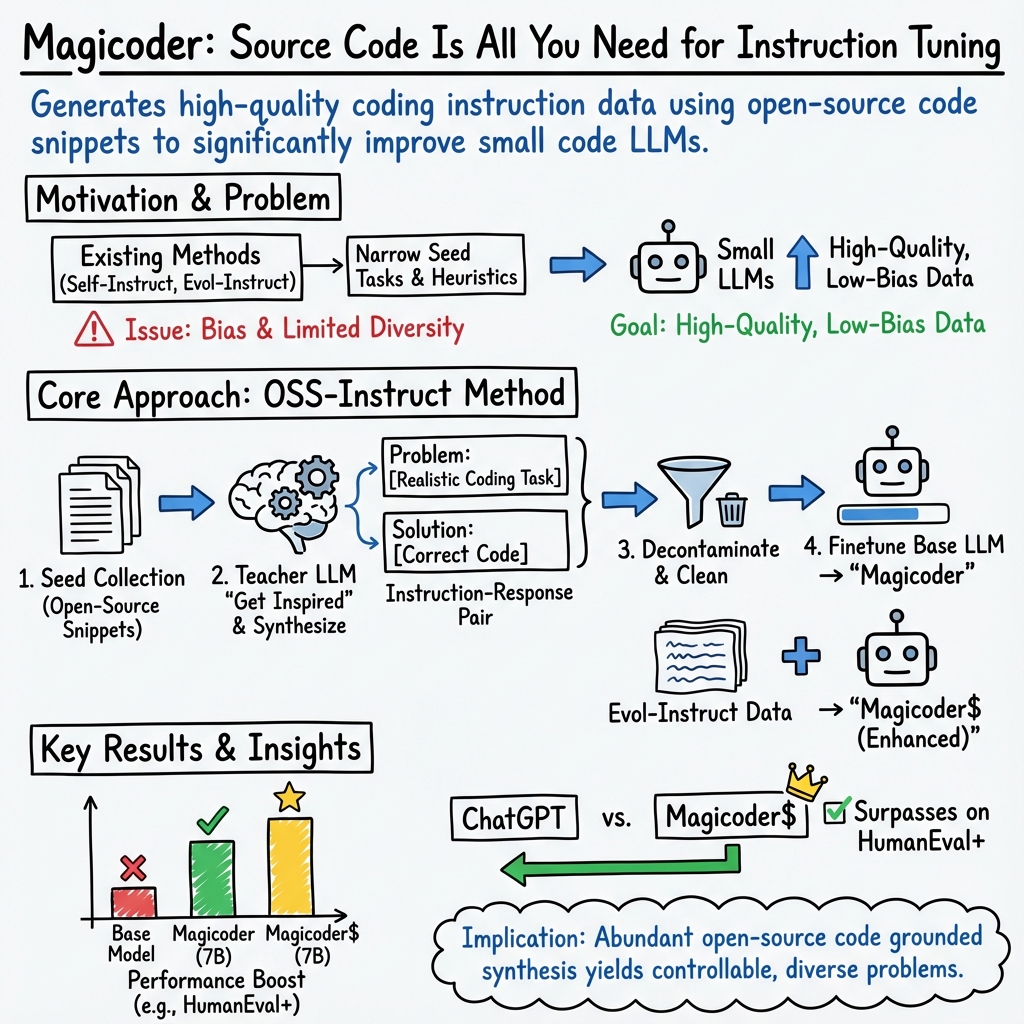

- The paper introduces OSS-INSTRUCT, a method that uses open-source code snippets to generate diverse, lower-bias instruction data for fine-tuning 7B-parameter code LLMs.

- The paper reports substantial gains on Python and multilingual coding benchmarks: the Magicoder models outperform code models of similar or larger size, and the strongest variant, MagicoderS-CL, even surpasses ChatGPT on HumanEval+.

- The paper emphasizes open collaboration by releasing model weights, training data, and source code, paving the way for future advancements in LLM-based code generation.

Overview

Magicoder is a series of open-source LLMs designed for code generation. Although the models have no more than 7 billion parameters, they substantially outperform other leading code models across coding benchmarks. They are trained on instruction data produced by OSS-INSTRUCT, a novel approach that draws on open-source code snippets to generate diverse and realistic coding instructions.

Methodology

OSS-INSTRUCT is the key innovation: it taps into the rich diversity of open-source code to create synthetic instruction data. Instructions generated by an LLM alone tend to inherit that model's biases; grounding generation in varied real-world code mitigates this bias and yields diverse, realistic, and controllable data. Concretely, a powerful LLM is shown a randomly selected snippet of open-source code and asked to write a new coding problem inspired by it, together with a solution. This data forms the foundation for training the Magicoder series: 75K synthetic samples were used to fine-tune CODE LLAMA-PYTHON-7B, producing Magicoder-CL. Combining OSS-INSTRUCT with other data-generation methods such as Evol-Instruct yields the even stronger MagicoderS variants.
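To make the data-generation step more concrete, here is a minimal Python sketch of an OSS-INSTRUCT-style pipeline, written under stated assumptions rather than as the authors' implementation. It samples a random window of consecutive lines from each source file, wraps the snippet in a prompt asking a teacher LLM for a new problem plus a solution, and keeps only well-formed replies. The 1 to 15 line window, the prompt wording, the `[Problem Description]`/`[Solution]` markers, and all function names (`sample_seed_snippet`, `build_prompt`, `parse_response`, `oss_instruct`) are hypothetical; the teacher LLM is modeled as a plain callable.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

# Illustrative assumptions (hypothetical): snippet window size and section markers.
MIN_LINES, MAX_LINES = 1, 15
PROBLEM_MARKER = "[Problem Description]"
SOLUTION_MARKER = "[Solution]"


def sample_seed_snippet(code: str, rng: random.Random,
                        min_lines: int = MIN_LINES,
                        max_lines: int = MAX_LINES) -> Optional[str]:
    """Return a random run of consecutive lines from a source file, or None if too short."""
    lines = code.splitlines()
    if len(lines) < min_lines:
        return None
    n = rng.randint(min_lines, min(max_lines, len(lines)))
    start = rng.randint(0, len(lines) - n)
    return "\n".join(lines[start:start + n])


def build_prompt(snippet: str) -> str:
    """Ask the teacher LLM for a new problem inspired by (not copied from) the snippet.

    The wording is a hypothetical stand-in, not the paper's exact prompt.
    """
    return (
        "Gain inspiration from the following random code snippet to create a "
        "high-quality, self-contained programming problem, then solve it.\n"
        f"Reply with two sections: {PROBLEM_MARKER} and {SOLUTION_MARKER}.\n\n"
        f"Code snippet for inspiration:\n<snippet>\n{snippet}\n</snippet>\n"
    )


def parse_response(response: str) -> Optional[Tuple[str, str]]:
    """Split the teacher's reply into (problem, solution); None if malformed."""
    _, found, rest = response.partition(PROBLEM_MARKER)
    if not found:
        return None
    problem, found, solution = rest.partition(SOLUTION_MARKER)
    problem, solution = problem.strip(), solution.strip()
    if not found or not problem or not solution:
        return None
    return problem, solution


def oss_instruct(corpus: List[str], teacher: Callable[[str], str],
                 seed: int = 0) -> List[Dict[str, str]]:
    """Turn raw source files into instruction/response pairs via a teacher LLM."""
    rng = random.Random(seed)
    dataset: List[Dict[str, str]] = []
    for code in corpus:
        snippet = sample_seed_snippet(code, rng)
        if snippet is None:
            continue  # skip files too short to sample from
        pair = parse_response(teacher(build_prompt(snippet)))
        if pair is not None:  # drop malformed generations
            dataset.append({"instruction": pair[0], "response": pair[1]})
    return dataset


if __name__ == "__main__":
    # Hypothetical stub standing in for a real LLM client.
    def stub_teacher(prompt: str) -> str:
        return ("[Problem Description]\nWrite add(a, b) returning the sum.\n"
                "[Solution]\ndef add(a, b):\n    return a + b\n")

    corpus = ["def double(x):\n    return x * 2\n", "", "print('hi')"]
    for row in oss_instruct(corpus, stub_teacher):
        print(row["instruction"])
```

Keeping the teacher as a plain callable lets the sketch run offline with a stub while leaving the choice of LLM client open; the empty file in the demo corpus is skipped because no snippet can be sampled from it.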

Extensive evaluations were carried out across a variety of coding tasks, such as Python text-to-code generation and multilingual code completion. The Magicoder models substantially improved on the performance of their base models. Notably, the MagicoderS-CL variant even surpassed ChatGPT on HumanEval+, a rigorous Python benchmark, showing that a comparatively small 7-billion-parameter model can generate robust code. Additionally, experiments with the stronger DeepSeek-Coder base models demonstrated that OSS-INSTRUCT is effective and adaptable across different base models.

Conclusions and Open-Source Contributions

The paper concludes by underscoring how OSS-INSTRUCT advances the field by producing higher-quality, lower-bias instruction data for LLMs. The success of the Magicoder series points the way for future work on LLMs for code generation. To encourage collaboration and further progress, the authors have released the model weights, training data, and source code in their GitHub repository.