- The paper shows that self-consistency alone cannot establish factual accuracy and introduces SAC3, which augments self-consistency with semantic question perturbation and cross-model checking.

- SAC3 substantially improves hallucination detection, reaching 99.4% AUROC on classification QA tasks and improving detection by over 7% on open-domain generation tasks.

- The method incurs moderate computational overhead, which running the checks in parallel helps contain, keeping it practical for high-stakes, fact-sensitive applications.

SAC3: Reliable Hallucination Detection in Black-Box LLMs

Introduction

The paper "SAC3: Reliable Hallucination Detection in Black-Box LLMs via Semantic-aware Cross-check Consistency" (2311.01740) addresses hallucination in language models (LMs). Hallucinations, or confidently incorrect predictions made by LMs, undermine their reliability in applications where factual accuracy is paramount. The paper critiques self-consistency-based hallucination detection and introduces a new paradigm, semantic-aware cross-check consistency (SAC3), which extends self-consistency checking with semantic perturbation and cross-model evaluation.

Limitations of Self-Consistency

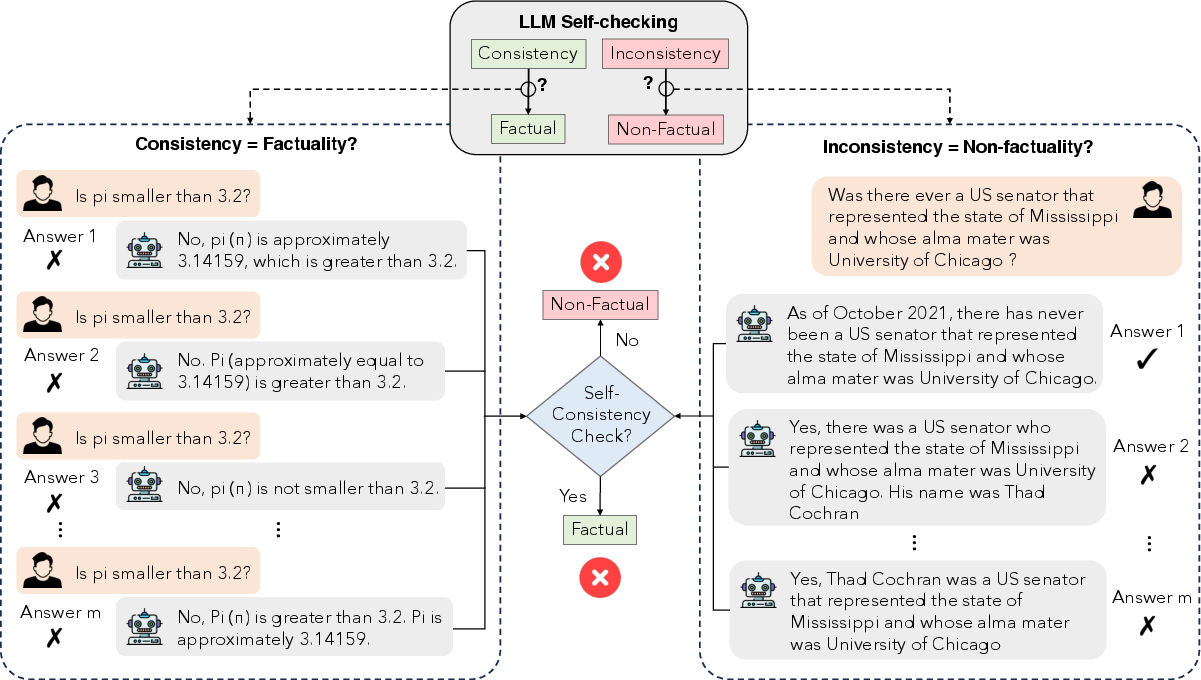

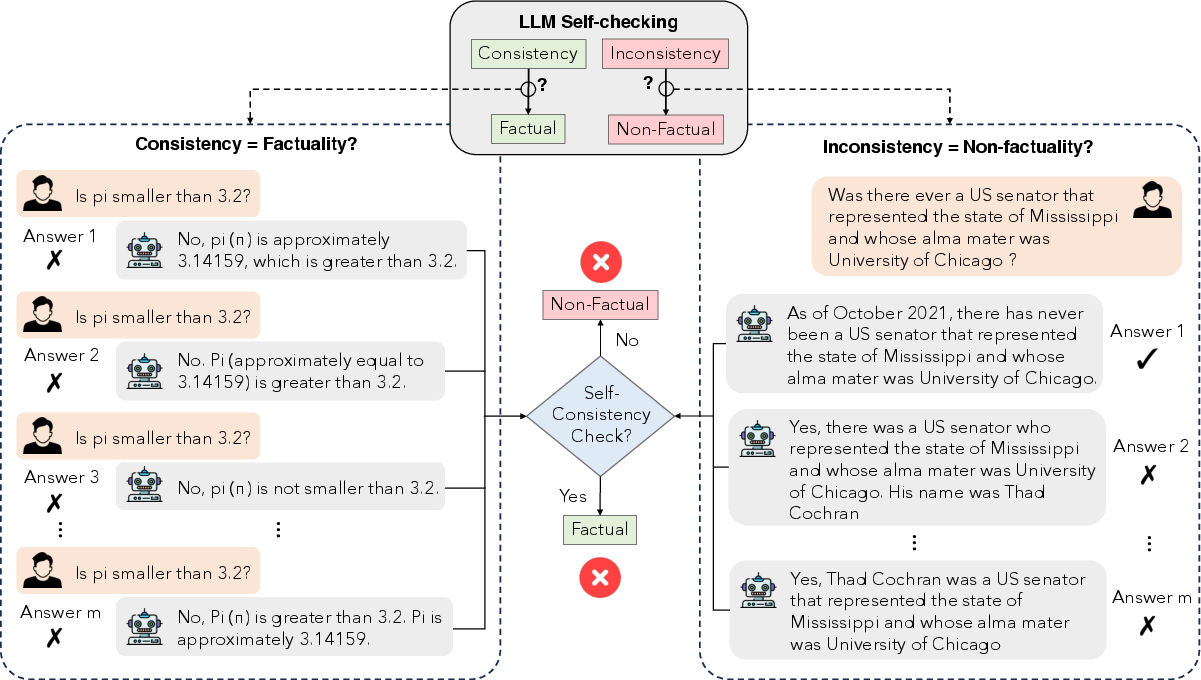

Current approaches to hallucination detection often rely on self-consistency checks, which assume that an LM's sampled responses agree with one another when it states facts and diverge when it hallucinates. This paper shows that consistency does not imply factuality (Figure 1). Two phenomena explain the gap: question-level hallucination, where the LM consistently gives the same incorrect answer to a particular phrasing of a question but may answer correctly once the question is rephrased, and model-level hallucination, where the target LM's answer disagrees with those of other LMs, which may answer the same question correctly. These findings show that self-consistency alone is an unreliable signal of factuality.

Figure 1: Key observation: solely checking the self-consistency of LLMs is not sufficient for deciding factuality. Left: generated responses to the same question may be consistent but non-factual. Right: generated responses may be inconsistent with the original answer that is factually correct.
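To make the failure mode concrete, here is a minimal sketch of a self-consistency hallucination score. It assumes answers are compared by normalized exact match (a simplification), and the helper names and the 3691 example are illustrative, not taken from the paper.

```python
from typing import List


def is_prime(n: int) -> bool:
    """Ground truth for the toy question, by trial division."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def normalize(answer: str) -> str:
    """Crude answer normalization (assumption): 'No.' and 'no' match."""
    return answer.strip().strip(".").lower()


def self_consistency_score(original: str, samples: List[str]) -> float:
    """Hallucination score in [0, 1]: the fraction of resampled answers that
    disagree with the original answer. 0.0 means perfectly self-consistent."""
    if not samples:
        raise ValueError("need at least one sampled answer")
    disagree = sum(normalize(s) != normalize(original) for s in samples)
    return disagree / len(samples)


# Consistent but non-factual: a hypothetical LM always answers "No"
# to "Is 3691 a prime number?", although 3691 is prime.
print(is_prime(3691))                                                  # True
print(self_consistency_score("No.", ["No", "no.", "No", "No", "No"]))  # 0.0

# The original answer is correct, but the samples disagree with it.
print(self_consistency_score("Yes", ["No", "Yes", "No", "No", "Yes"]))  # 0.6
```

A score of 0.0 marks the wrong answer as trustworthy, while the correct answer receives a high score: both panels of Figure 1 in miniature.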

SAC3 Methodology

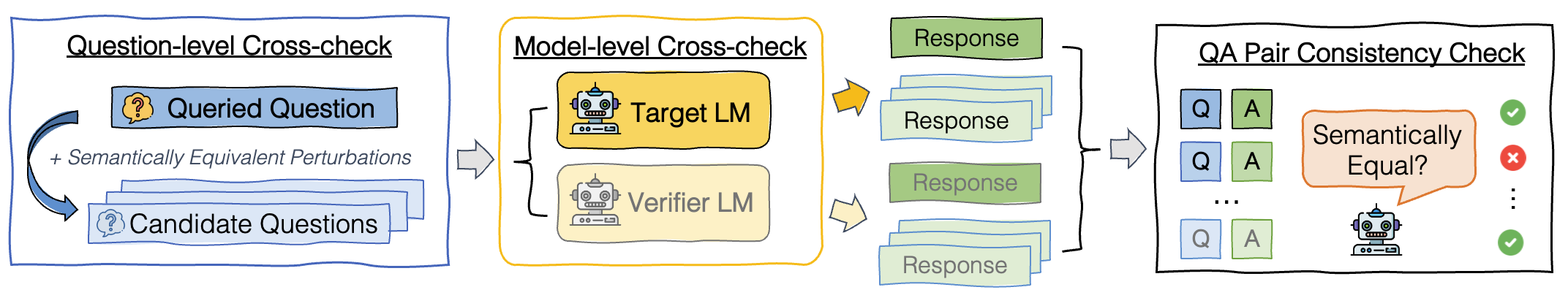

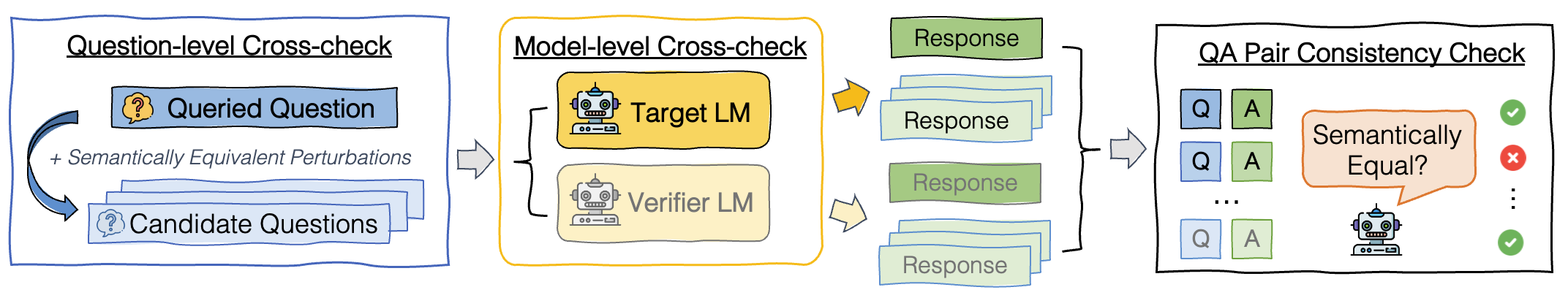

The SAC3 method enhances hallucination detection through two primary modules: question-level cross-checking and model-level cross-checking (Figure 2).

- Semantic Question Perturbation: SAC3 generates semantically equivalent rephrasings of the input question and checks whether the target LM's answers to them agree with its original answer. Because question-level hallucinations are tied to a particular phrasing, an answer that stays consistent under resampling but changes under rephrasing is flagged as a likely hallucination.

- Cross-Model Consistency Check: SAC3 also compares the target LM's answer with answers from additional verifier LMs. Disagreement across models exposes model-specific hallucinations; notably, a smaller model can sometimes answer correctly where a larger model hallucinates.

Figure 2: Overview of the proposed semantic-aware cross-check consistency (SAC3) method.
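The two cross-checks can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: models are plain Python callables, semantic equivalence of answers is approximated by normalized exact match, the rephrasings are written by hand rather than generated by an LM, and the combination `sc + question + lam * model` with a hypothetical weight `lam` is an assumed scoring rule. The toy `target` model reproduces a question-level hallucination: it is wrong only on the original phrasing.

```python
from typing import Callable, Dict, List

Model = Callable[[str], str]  # hypothetical: question in, one sampled answer out


def normalize(answer: str) -> str:
    """Stand-in for a semantic-equivalence check (assumption: exact match)."""
    return answer.strip().strip(".").lower()


def disagreement(reference: str, answers: List[str]) -> float:
    """Fraction of answers that disagree with the reference answer."""
    return sum(normalize(a) != normalize(reference) for a in answers) / len(answers)


def sac3_style_score(question: str, perturbations: List[str], target: Model,
                     verifier: Model, n_samples: int = 3,
                     lam: float = 1.0) -> Dict[str, float]:
    """Higher scores mean the target's original answer is more likely hallucinated."""
    original = target(question)
    # Self-consistency baseline: resample the target on the same question.
    sc = disagreement(original, [target(question) for _ in range(n_samples)])
    # Question-level cross-check: target answers semantically equivalent rephrasings.
    q = disagreement(original, [target(p) for p in perturbations])
    # Model-level cross-check: a verifier LM answers the original and the rephrasings.
    m = disagreement(original, [verifier(x) for x in [question, *perturbations]])
    return {"sc": sc, "question": q, "model": m, "total": sc + q + lam * m}


def target(q: str) -> str:
    # Toy target LM: consistently wrong, but only on the original phrasing.
    return "No" if q.startswith("Is 3691") else "Yes"


def verifier(q: str) -> str:
    # Toy verifier LM that answers this question correctly.
    return "Yes"


question = "Is 3691 a prime number?"
perturbations = ["Does 3691 have exactly two positive divisors?",
                 "Is the number 3691 prime?"]
print(sac3_style_score(question, perturbations, target, verifier))
# {'sc': 0.0, 'question': 1.0, 'model': 1.0, 'total': 2.0}
```

Self-consistency alone scores this answer 0.0, while both cross-checks flag it.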

Empirical Evaluation

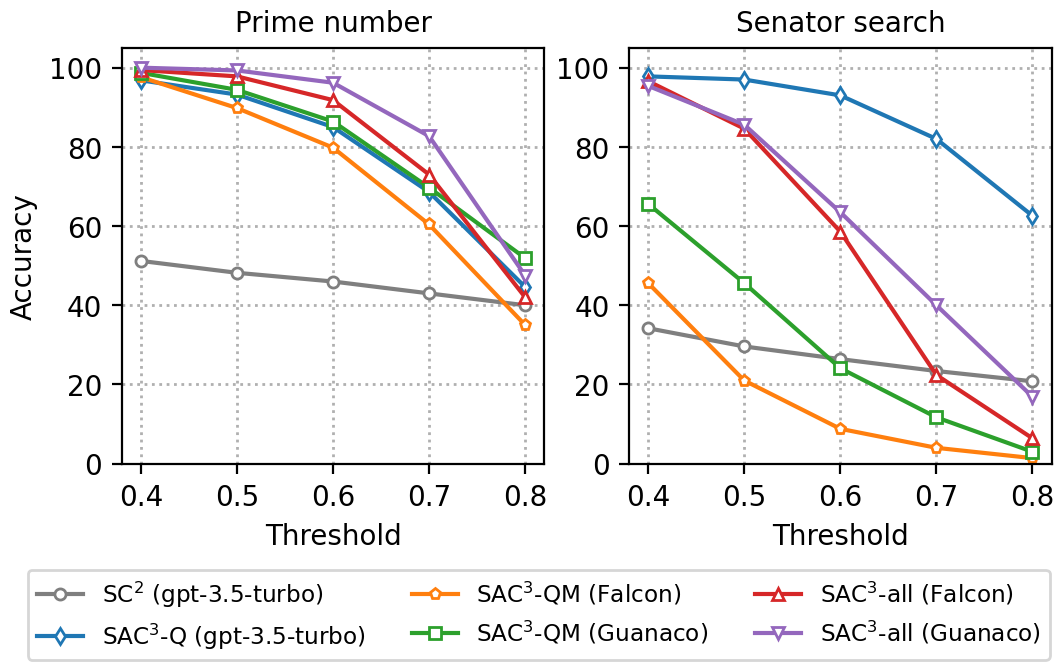

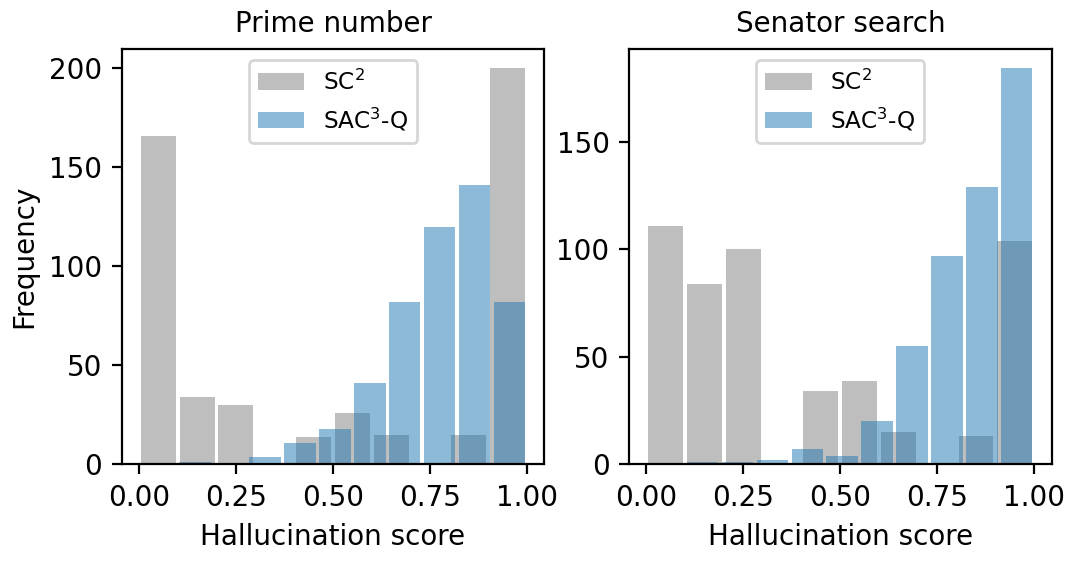

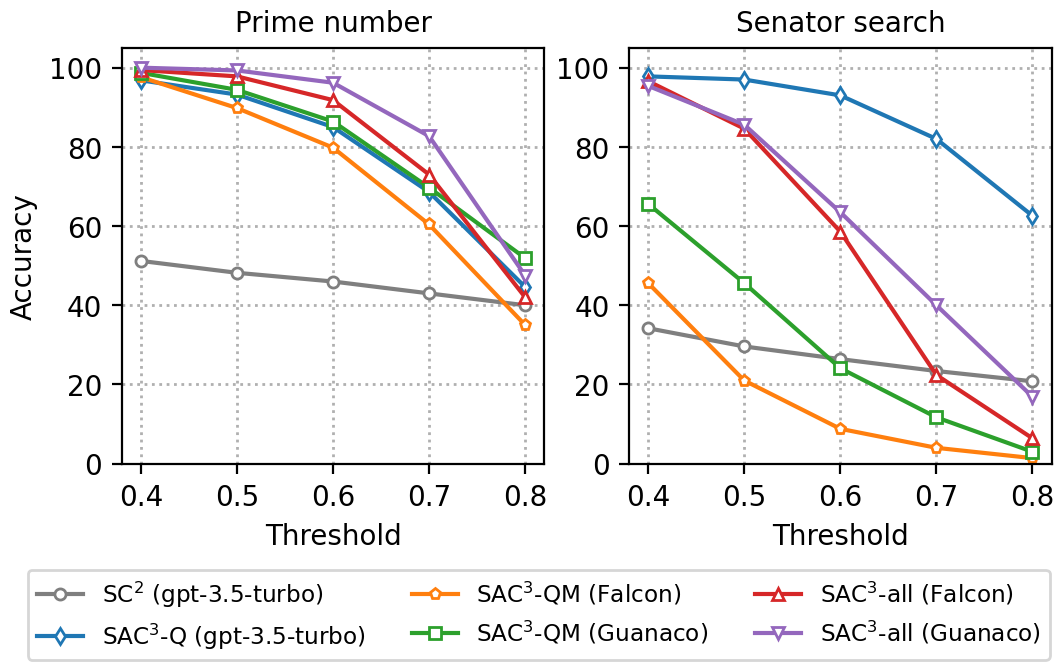

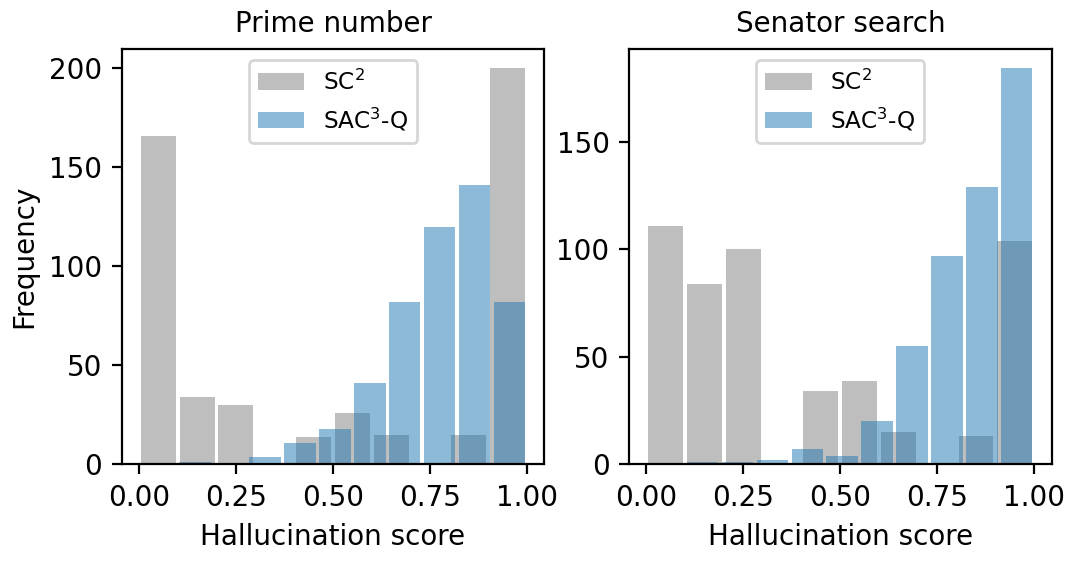

SAC3 was evaluated on multiple QA datasets and significantly outperformed existing self-consistency methods. On classification QA tasks, SAC3 achieved an AUROC of 99.4%, compared with below 70% for self-consistency baselines. It also remained robust on imbalanced datasets, maintaining high detection accuracy even when most samples were hallucinated (Figure 3, Figure 4).

Figure 3: Impact of threshold on detection accuracy.

Figure 4: Histogram of hallucination score.
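The two metrics can be sketched as follows, assuming label 1 marks a hallucinated sample and a higher score means "more likely hallucinated". AUROC is computed from its pairwise (Mann-Whitney) definition and accuracy by thresholding the score; the scores below are toy numbers, not results from the paper. AUROC is undefined when every sample is hallucinated, so a fully imbalanced set can only be evaluated with a thresholded metric such as accuracy.

```python
from typing import Sequence


def auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """P(score of a hallucinated sample > score of a factual one); ties count 1/2.
    labels: 1 = hallucinated, 0 = factual."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs both hallucinated and factual samples")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def accuracy_at_threshold(scores: Sequence[float], labels: Sequence[int],
                          threshold: float) -> float:
    """Predict 'hallucinated' when score >= threshold, then compare with labels."""
    preds = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


scores = [0.9, 0.8, 0.4, 0.3, 0.1, 0.6]   # toy hallucination scores
labels = [1, 1, 1, 0, 0, 0]
print(round(auroc(scores, labels), 3))                       # 0.889
print(round(accuracy_at_threshold(scores, labels, 0.5), 3))  # 0.667

# Fully imbalanced set (all hallucinated): only accuracy is defined.
print(round(accuracy_at_threshold([0.9, 0.8, 0.4], [1, 1, 1], 0.5), 3))  # 0.667
```

Sweeping `threshold` over a range of values and plotting accuracy gives a curve of the kind shown in Figure 3.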

On open-domain generation tasks, SAC3 improved detection performance by over 7%. This gain is notable because open-ended generation is more complex and ambiguous than structured classification.

Computational Considerations

SAC3 adds moderate computational overhead relative to self-consistency checks because it also queries models on perturbed questions and runs additional verifier models. This overhead can be offset by running the checks in parallel and by prompting strategies that reduce latency and cost. The gains in robustness and accuracy justify the extra computation, especially in high-stakes applications where misinformation carries significant risk.
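Because the individual checks are independent model queries, they can be issued concurrently. The sketch below uses Python's `concurrent.futures.ThreadPoolExecutor` and assumes each model is a blocking callable; `fake_model` is a hypothetical stand-in that simulates API latency with `time.sleep`, and the numbers of samples and perturbations are illustrative.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Model = Callable[[str], str]


def fake_model(name: str, latency: float = 0.05) -> Model:
    """Hypothetical stand-in for a remote LM API call with fixed latency."""
    def call(question: str) -> str:
        time.sleep(latency)
        return f"{name}:{question}"
    return call


def run_checks_parallel(jobs: List[Tuple[Model, str]],
                        max_workers: int = 8) -> List[str]:
    """Run every (model, question) query concurrently; results keep job order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda job: job[0](job[1]), jobs))


target, verifier = fake_model("target"), fake_model("verifier")
question = "q0"
perturbations = ["q1", "q2"]   # k = 2 rephrasings (illustrative)
n_samples = 3                  # resamples for the self-consistency check

# Queries needed once the target's original answer is known.
jobs = ([(target, question)] * n_samples                          # self-consistency
        + [(target, p) for p in perturbations]                    # question-level
        + [(verifier, q) for q in [question, *perturbations]])    # model-level

start = time.perf_counter()
answers = run_checks_parallel(jobs)
elapsed = time.perf_counter() - start
# Wall-clock time should be near one call's latency, not the sum of all eight.
print(len(answers), f"{elapsed:.2f}s")
```

Parallelism cuts wall-clock latency but not the number of model calls, so total API cost still grows with the number of samples, perturbations, and verifier models.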

Conclusion

The SAC3 framework represents a significant advancement in hallucination detection for black-box LMs. By integrating semantic cross-checks and leveraging model diversity, it addresses the shortcomings of previous self-consistency approaches. As LMs continue to pervade critical domains, methods such as SAC3 that enhance their reliability will become indispensable. Future work may explore integrating SAC3 with adaptive sampling strategies and extending its applicability to broader NLP tasks.