- The paper presents a novel active instruction tuning framework that leverages prompt uncertainty to identify and select the most informative tasks.

- The methodology employs a Task Map to categorize tasks and strategically focus on ambiguous prompts for enhanced out-of-distribution performance.

- Empirical results on NIV2 and Self-Instruct datasets demonstrate improved efficiency and generalization with fewer training tasks.

Active Instruction Tuning: Improving Cross-Task Generalization by Training on Prompt Sensitive Tasks

Introduction

The paper "Active Instruction Tuning: Improving Cross-Task Generalization by Training on Prompt Sensitive Tasks" addresses a limitation of instruction tuning (IT) for large language models (LLMs). While IT has achieved significant success in zero-shot generalization by training LLMs on diverse tasks, how to select new tasks that improve an IT model's performance and generalizability remains an open challenge. Exhaustively training on all existing tasks is computationally prohibitive, and random task selection often yields suboptimal results. The paper proposes a framework, Active Instruction Tuning based on prompt uncertainty, which identifies informative tasks and then trains the model on the selected tasks.

Methodology

The core innovation of the proposed framework is a task selection process driven by prompt uncertainty. The informativeness of a task is quantified by how much the model's outputs disagree across perturbed versions of the task's prompt. By prioritizing tasks whose predictions are most sensitive to such perturbations, the approach directs training toward the tasks expected to contribute most to generalization.
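To make the idea concrete, the following is a minimal sketch of one way such a disagreement score could be computed. It is not the authors' implementation: the word-dropping perturbation, the drop rate, the number of perturbations, and the `likelihood_fn` callable (a hypothetical stand-in for a model call that returns the probability of an output given an instruction and an input) are all assumptions made for illustration.

```python
import random
from typing import Callable, Sequence, Tuple


def perturb(instruction: str, drop_rate: float, rng: random.Random) -> str:
    """Randomly drop words from the instruction (an assumed perturbation)."""
    words = instruction.split()
    kept = [w for w in words if rng.random() >= drop_rate]
    # Fall back to the original instruction if every word was dropped.
    return " ".join(kept) if kept else instruction


def prompt_uncertainty(
    likelihood_fn: Callable[[str, str, str], float],  # hypothetical model call
    instruction: str,
    examples: Sequence[Tuple[str, str]],  # (input, predicted output) pairs
    num_perturbations: int = 20,  # assumed value
    drop_rate: float = 0.2,  # assumed value
    seed: int = 0,
) -> float:
    """Mean absolute change in output likelihood under perturbed instructions."""
    if not examples or num_perturbations <= 0:
        raise ValueError("need at least one example and one perturbation")
    rng = random.Random(seed)
    total = 0.0
    for task_input, output in examples:
        base = likelihood_fn(instruction, task_input, output)
        for _ in range(num_perturbations):
            perturbed = perturb(instruction, drop_rate, rng)
            total += abs(base - likelihood_fn(perturbed, task_input, output))
    return total / (len(examples) * num_perturbations)
```

A model whose likelihoods do not change when the instruction is perturbed scores zero under this sketch, while a model that is sensitive to the wording of the instruction scores higher.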

Additionally, the paper introduces the concept of a Task Map, which categorizes tasks by prompt uncertainty and prediction probability. The findings suggest that training on ambiguous tasks, characterized by high prompt uncertainty, effectively improves model generalization. In contrast, tasks deemed difficult (prompt-certain and low-probability) offer little to no improvement, which underscores the need for strategic task selection.
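The categorization can be illustrated with a short sketch. The thresholds below are arbitrary placeholders rather than values from the paper, the `TaskStats` container and function names are hypothetical, and the "easy" label for prompt-certain, high-probability tasks is inferred here as the complement of the two categories described above.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class TaskStats:
    """Per-task measurements (hypothetical container for illustration)."""
    name: str
    prompt_uncertainty: float
    prediction_probability: float


def categorize(
    task: TaskStats,
    uncertainty_threshold: float = 0.1,  # assumed cut-off
    probability_threshold: float = 0.5,  # assumed cut-off
) -> str:
    """Assign a task to a Task Map region based on two scores."""
    if task.prompt_uncertainty >= uncertainty_threshold:
        return "ambiguous"  # high prompt uncertainty
    if task.prediction_probability < probability_threshold:
        return "difficult"  # prompt-certain, low probability
    return "easy"  # prompt-certain, high probability (inferred label)


def build_task_map(tasks: Iterable[TaskStats]) -> Dict[str, List[str]]:
    """Group task names by category."""
    task_map: Dict[str, List[str]] = {"ambiguous": [], "difficult": [], "easy": []}
    for task in tasks:
        task_map[categorize(task)].append(task.name)
    return task_map
```

Under this sketch, a selection strategy following the findings above would draw new training tasks from the "ambiguous" group first.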

Experimentation

Experiments were conducted on the NIV2 and Self-Instruct datasets to empirically validate the approach. Across both, active instruction tuning outperforms random sampling and other baseline task selection strategies. Specifically, models trained on the selected prompt-uncertain tasks achieve better out-of-distribution generalization with fewer training tasks, which indicates that active selection can improve performance while reducing the compute spent on training.
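The comparison between active selection and its baselines can be pictured as a loop that repeatedly scores the remaining task pool, picks the most prompt-uncertain tasks, and retrains. The sketch below is illustrative only: `score_fn` and `train_fn` are hypothetical callables standing in for the uncertainty measurement and the fine-tuning step, and the number of rounds and tasks per round are assumptions rather than the paper's experimental settings.

```python
from typing import Callable, Dict, Iterable, List


def select_most_uncertain(scores: Dict[str, float], k: int) -> List[str]:
    """Return the k task names with the highest scores (ties broken by name)."""
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]


def active_selection_loop(
    pool: Iterable[str],
    score_fn: Callable[[str], float],  # hypothetical: prompt uncertainty of a task
    train_fn: Callable[[List[str]], None],  # hypothetical: retrain on chosen tasks
    rounds: int,
    tasks_per_round: int,
) -> List[str]:
    """Iteratively move the most uncertain tasks from the pool to the training set."""
    remaining = set(pool)
    selected: List[str] = []
    for _ in range(rounds):
        if not remaining:
            break
        # Re-score each round, since the model changes after every training step.
        scores = {task: score_fn(task) for task in remaining}
        batch = select_most_uncertain(scores, tasks_per_round)
        selected.extend(batch)
        remaining.difference_update(batch)
        train_fn(list(selected))
    return selected
```

A random-sampling baseline would replace `select_most_uncertain` with a uniform draw from the remaining pool while keeping the rest of the loop unchanged.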

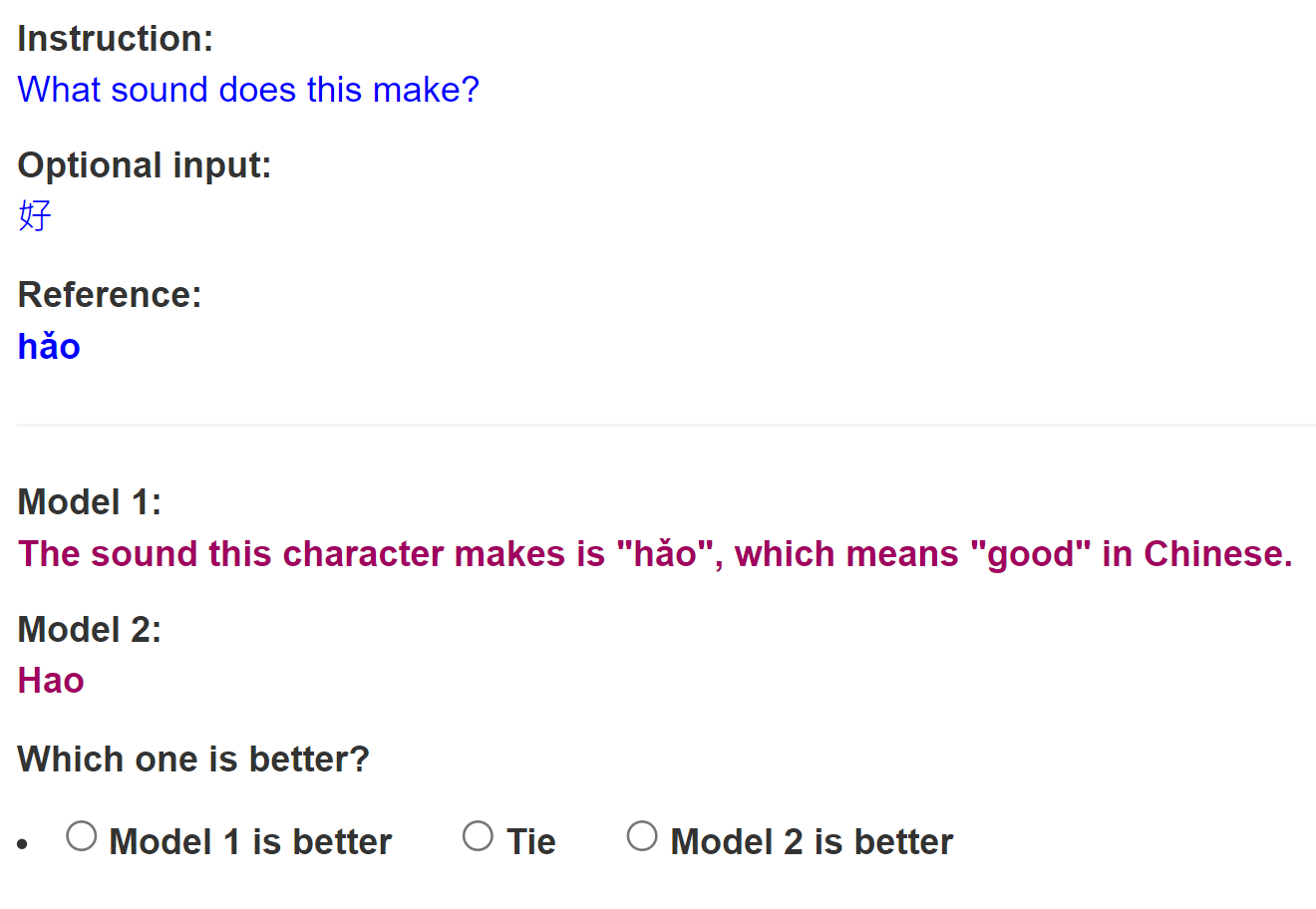

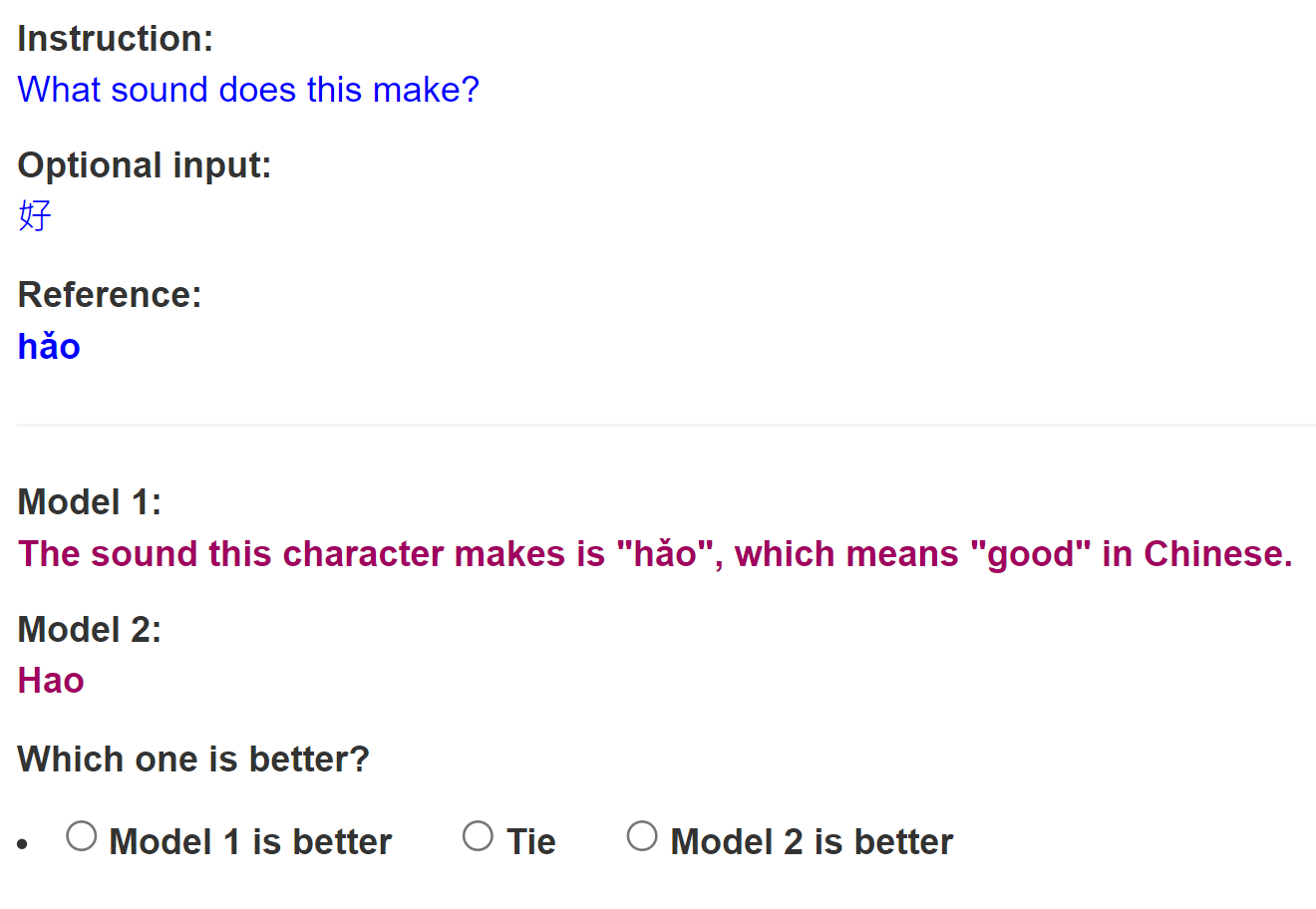

Figure 1: An example of the annotation interface for the human evaluation in Active Instruction Tuning.

Results

The central result is that active instruction tuning consistently outperforms the baselines on both datasets. Training on ambiguous tasks gives a clear advantage, whereas training on difficult tasks does little to improve generalization. This prompts a reevaluation of task selection in instruction tuning and argues for treating prompt uncertainty as a key selection criterion.

Implications and Future Directions

The implications of this research extend into both theoretical and practical domains. Theoretically, it enhances the understanding of cross-task generalization and the role of prompt uncertainty in instruction tuning. Practically, it proposes a scalable framework that optimizes task selection, thereby advancing the efficiency and effectiveness of LLMs. Future research could explore the integration of reinforcement learning or continual learning approaches to further enhance model robustness and lifelong learning capabilities.

Conclusion

"Active Instruction Tuning" presents a compelling argument for refining task selection in instruction tuning through prompt uncertainty assessments. By focusing on task informativeness, the method achieves improved generalization with fewer training tasks. The Task Map provides a diagnostic tool for understanding which tasks are ambiguous, easy to solve, or difficult, paving the way for more strategic methodologies in LLM training. The findings motivate continued exploration of task selection strategies for instruction-tuned models.