- The paper presents the InCharacter framework that uses psychological interviews to evaluate personality fidelity in role-playing agents.

- It uses a two-phase approach, combining open-ended interviews with LLM-based expert assessment, to measure RPAs' personalities on scales such as the Big Five Inventory (BFI) and check their alignment with the target characters.

- Results show 80-90% accuracy in capturing characters' personality traits, substantially higher than traditional self-report methods.

Evaluation of Personality Fidelity in Role-Playing Agents Through Psychological Interviews

Introduction

Role-Playing Agents (RPAs) built on LLMs simulate human-like personas, drawing on knowledge from vast training corpora. Evaluating how faithfully these agents portray their characters remains challenging. Beyond linguistic style and knowledge awareness, an RPA's alignment with the target character's persona, i.e. its personality fidelity, is vital. This paper introduces InCharacter, a framework that uses psychological interview-based assessments to evaluate the personality fidelity of RPAs.

Background

RPAs use LLMs to emulate fictional and real-life characters in applications such as gaming and digital cloning. Traditional evaluation focuses on linguistic competence and often overlooks the psychological side of character representation. Self-report scales are the usual tool for assessing personality, but they fall short for RPAs for two reasons: an LLM's choice of option may reflect biases from its training data rather than the character, and being asked to pick an option from a questionnaire conflicts with the RPA's instruction to stay in character. By contrast, interview-based assessments let the RPA answer in character and give a more nuanced view of personality fidelity.

InCharacter Framework

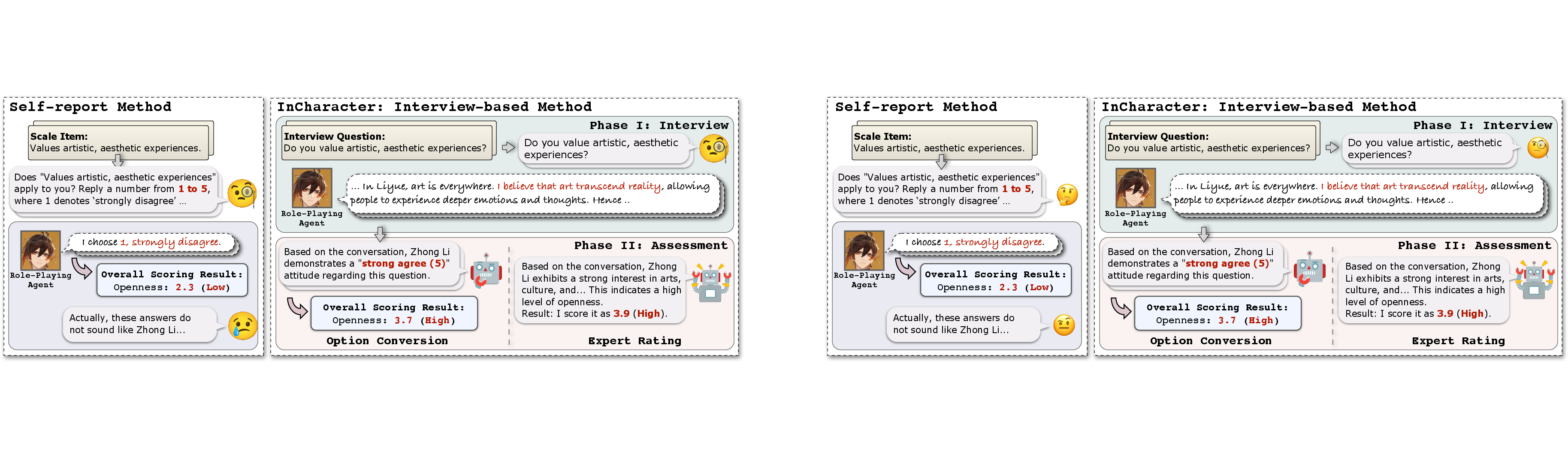

Figure 1: The framework of InCharacter for personality tests on RPAs. Left: Previous methods use self-report scales, which prompt LLMs to select an option directly. Right: InCharacter adopts an interview-based approach comprising two phases: the interview and assessment phases.

Interview-Based Procedure

The InCharacter methodology eschews conventional self-reports for a structured interview format.

- Phase I: Interview: RPAs respond to open-ended, scale-based questions, eliciting behavioral, cognitive, and emotional patterns.

- Phase II: Assessment: LLMs score the responses with one of two methods. Option Conversion maps each open-ended answer back onto the scale's options so that the scale's standard scoring can be applied. Expert Rating, modeled on clinician-rated assessments, has an LLM act as an expert judge that weighs all responses for a dimension and rates that dimension directly.
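The two phases can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the InCharacter implementation: the `Item` type, the question template in `to_question`, the 1-5 Likert coding with reverse-keyed items, and the `convert`/`rate` callables (deterministic stand-ins for the LLM calls) are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Item:
    text: str        # scale statement phrased to follow "you", e.g. "are talkative"
    dimension: str   # personality dimension the item belongs to, e.g. "Extraversion"
    reverse: bool    # reverse-keyed items are scored as 6 - option on a 1-5 scale

def to_question(item: Item) -> str:
    """Phase I (hypothetical template): turn a scale statement into an open-ended question."""
    return f"Would you say you {item.text}? Tell me about it."

def option_conversion(items, answers, convert):
    """Phase II, Option Conversion: map each free-text answer back to a 1-5 option,
    then score every dimension by averaging its (reverse-keyed) item scores."""
    per_dim = {}
    for item, answer in zip(items, answers):
        option = convert(item, answer)                 # an LLM call in practice
        score = 6 - option if item.reverse else option
        per_dim.setdefault(item.dimension, []).append(score)
    return {dim: mean(scores) for dim, scores in per_dim.items()}

def expert_rating(items, answers, rate):
    """Phase II, Expert Rating: an 'expert' sees all Q&A pairs for a dimension
    at once and returns a single score, like a clinician-rated assessment."""
    grouped = {}
    for item, answer in zip(items, answers):
        grouped.setdefault(item.dimension, []).append((to_question(item), answer))
    return {dim: rate(dim, pairs) for dim, pairs in grouped.items()}

# --- Usage with hypothetical stand-ins for the LLM calls ---
items = [Item("are talkative", "Extraversion", reverse=False),
         Item("tend to be quiet", "Extraversion", reverse=True)]
answers = ["Ha! I never stop chatting with my crew.",     # RPA answers in character
           "Quiet? Not me, I'm always the loudest one."]

def fake_convert(item, answer):
    # Crude keyword rule standing in for an LLM: "not me" means disagree (1), else agree (5).
    return 1 if "not me" in answer.lower() else 5

def fake_rate(dimension, pairs):
    # Stand-in expert returning a fixed judgement, only to show the data flow.
    return 4.5

oc_scores = option_conversion(items, answers, fake_convert)
er_scores = expert_rating(items, answers, fake_rate)
print(oc_scores)  # {'Extraversion': 5}
print(er_scores)  # {'Extraversion': 4.5}
```

The contrast the sketch tries to capture is granularity: Option Conversion judges one answer at a time and reuses the questionnaire's own scoring, while Expert Rating lets the judge weigh all answers for a dimension together.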

Experimental Setup

RPA Character and LLM Selection

The test subjects are 32 RPAs, each embodying a distinct fictional character, built on LLMs such as GPT-3.5 with character data drawn from RPA datasets such as ChatHaruhi and RoleLLM. They are assessed on a wide range of psychological scales, including the Big Five Inventory (BFI) and 16Personalities (16P).

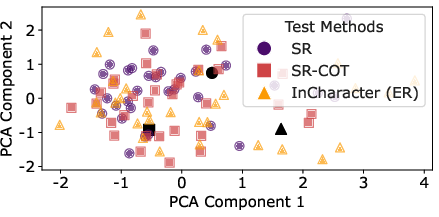

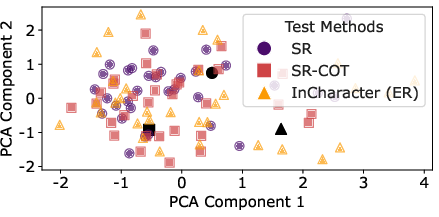

Figure 2: Visualization of 32 RPAs' personalities on the BFI measured by different methods. We use principal component analysis (PCA) to map the results into a 2D space.
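The PCA projection mentioned in the caption can be sketched with NumPy as below. This is an illustrative sketch only: it assumes each RPA is represented by a 5-dimensional vector of BFI scores on a 1-5 scale, and the random scores are placeholders, not the paper's results.

```python
import numpy as np

def pca_2d(scores: np.ndarray) -> np.ndarray:
    """Project an (n_rpas, n_dims) score matrix onto its first two principal components."""
    centered = scores - scores.mean(axis=0)   # PCA operates on mean-centred data
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T                # (n_rpas, 2) coordinates for plotting

# Placeholder data: 32 RPAs x 5 BFI dimensions on a 1-5 scale (random, not paper results).
rng = np.random.default_rng(0)
bfi_scores = rng.uniform(1.0, 5.0, size=(32, 5))
coords = pca_2d(bfi_scores)
print(coords.shape)  # (32, 2)
```

To place several measurement methods in one plot, one option is to fit the projection on the stacked scores of all methods so that they share the same axes; the caption does not say which choice the authors made.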

Metrics and Evaluation

Measured Alignment (MA) quantifies how closely RPAs' measured personalities match the characters' human-annotated personalities. Consistency metrics, which check whether repeated tests of the same RPA yield stable results, support the robustness of the InCharacter approach. With InCharacter, MA reaches 80-90% accuracy on several scales, markedly higher than with self-report methods.
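Measured alignment and consistency can be made concrete with a small sketch. For illustration it assumes that each dimension score is binarised at the scale midpoint and compared with a human-annotated high/low label, reporting both per-dimension accuracy and the share of characters matched on every dimension, and that consistency is summarised as the mean standard deviation across repeated tests. These definitions, the 1-5 scale, and all names and numbers are hypothetical stand-ins, not the paper's exact protocol.

```python
from statistics import mean, pstdev

def to_label(score: float, midpoint: float = 3.0) -> str:
    """Binarise a dimension score into a high ("H") or low ("L") pole; assumes a 1-5 scale."""
    return "H" if score >= midpoint else "L"

def measured_alignment(measured, annotated):
    """measured: {character: {dimension: score}}; annotated: {character: {dimension: "H"/"L"}}.
    Returns (dimension-level accuracy, full-profile accuracy)."""
    dim_hits, full_hits = [], []
    for char, dims in annotated.items():
        hits = [to_label(measured[char][d]) == label for d, label in dims.items()]
        dim_hits.extend(hits)
        full_hits.append(all(hits))     # every dimension of this character must match
    return sum(dim_hits) / len(dim_hits), sum(full_hits) / len(full_hits)

def consistency(runs):
    """runs: [{dimension: score}, ...] from repeated tests of one RPA.
    Returns the mean per-dimension standard deviation (lower = more consistent)."""
    return mean(pstdev([run[d] for run in runs]) for d in runs[0])

# Hypothetical example: two characters, two dimensions (O = Openness, C = Conscientiousness).
measured = {"char_a": {"O": 4.2, "C": 4.8}, "char_b": {"O": 2.1, "C": 3.4}}
annotated = {"char_a": {"O": "H", "C": "H"}, "char_b": {"O": "L", "C": "L"}}
print(measured_alignment(measured, annotated))   # (0.75, 0.5)

runs = [{"O": 4.0, "C": 3.0}, {"O": 4.0, "C": 5.0}]
print(consistency(runs))                         # 0.5
```

Full-profile accuracy is the stricter of the two numbers: a single mismatched dimension (here, char_b's Conscientiousness) makes the whole character count as a miss.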

Discussion

Strengths and Challenges

The interview-based approach yields more accurate assessments of RPAs' personality fidelity and offers deeper insight into their cognitive states. It also reduces the inconsistency observed with self-report methods, particularly on complex personality dimensions.

However, the approach has shortcomings, mainly the accuracy and potential biases of the LLMs that conduct and assess the interviews. The measured RPA personalities depend on how well these LLMs understand nuanced conversational answers, and misreadings can lead to under- or overestimated trait scores.

Future Directions

InCharacter lays the groundwork for longitudinal studies of how RPA personalities change over time. Stronger LLM assessors and cross-scale evaluations could further improve accuracy. Extending the framework to cultural and contextual dimensions could also yield more comprehensive assessments, which matter for diverse applications in entertainment, therapy, and virtual assistance.

Conclusion

InCharacter highlights the value of psychological interviews for assessing RPA personality fidelity. By using LLMs as interviewers and evaluators, the approach provides a reliable method for validating how accurately characters are represented, offering useful insights for future RPA development and deployment.

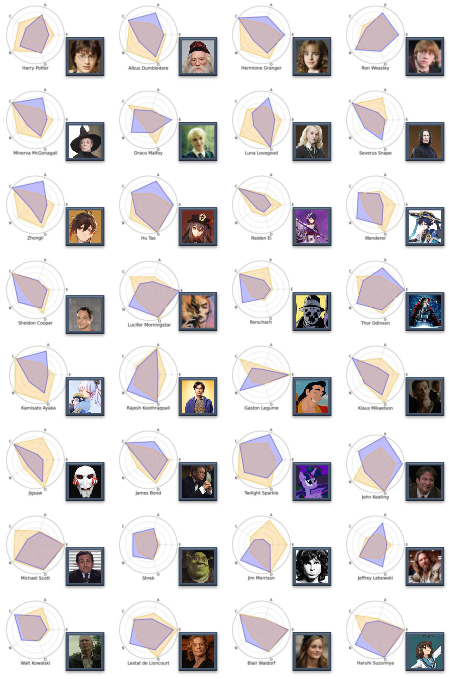

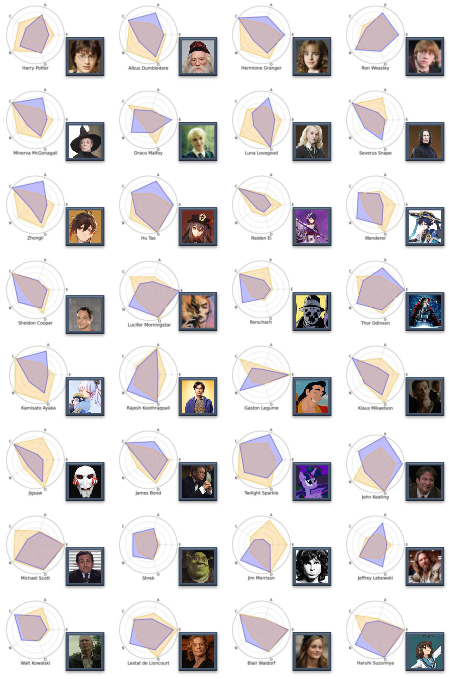

Figure 3: Radar chart of BFI personalities of state-of-the-art RPAs (yellow) and the characters (blue).

This paper's methodology underscores the potential for refined role-based assessments, contributing to the evolving landscape of LLM applications in simulations and beyond.