Tree Prompting: Efficient Task Adaptation without Fine-Tuning

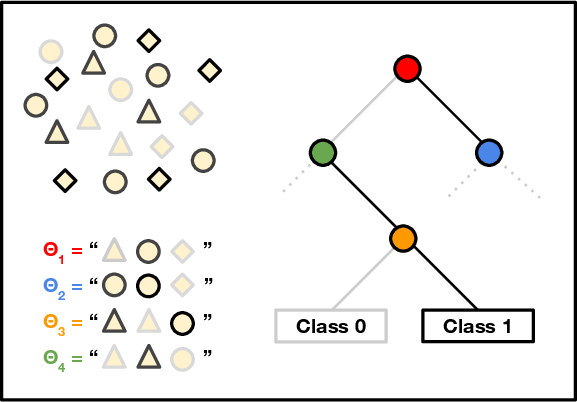

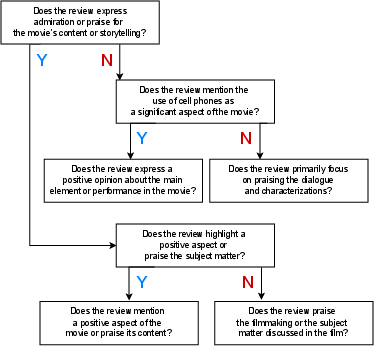

Abstract: Prompting LMs is the main interface for applying them to new tasks. However, for smaller LMs, prompting provides low accuracy compared to gradient-based finetuning. Tree Prompting is an approach to prompting which builds a decision tree of prompts, linking multiple LM calls together to solve a task. At inference time, each call to the LM is determined by efficiently routing the outcome of the previous call using the tree. Experiments on classification datasets show that Tree Prompting improves accuracy over competing methods and is competitive with fine-tuning. We also show that variants of Tree Prompting allow inspection of a model's decision-making process.

- Hierarchical shrinkage: improving the accuracy and interpretability of tree-based methods. arXiv:2202.00858 [cs, stat]. ArXiv: 2202.00858.

- PromptSource: An integrated development environment and repository for natural language prompts. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, pages 93–104, Dublin, Ireland. Association for Computational Linguistics.

- Graph of thoughts: Solving elaborate problems with large language models. arXiv preprint arXiv:2308.09687.

- Classification and Regression Trees. Wadsworth and Brooks, Monterey, CA.

- Leo Breiman. 1996. Bagging predictors. Machine learning, 24:123–140.

- Leo Breiman. 2001. Random forests. Machine Learning, 45(1):5–32.

- Language models are few-shot learners. In Advances in Neural Information Processing Systems, volume 33, pages 1877–1901. Curran Associates, Inc.

- Harrison Chase. 2023. Langchain: Building applications with llms through composability. https://github.com/hwchase17/langchain.

- Frugalgpt: How to use large language models while reducing cost and improving performance. arXiv preprint arXiv:2305.05176.

- Tianqi Chen and Carlos Guestrin. 2016. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining, pages 785–794.

- Bart: Bayesian additive regression trees. The Annals of Applied Statistics, 4(1):266–298.

- Michael Collins. 1997. Three generative, lexicalised models for statistical parsing. In 35th Annual Meeting of the Association for Computational Linguistics and 8th Conference of the European Chapter of the Association for Computational Linguistics, pages 16–23, Madrid, Spain. Association for Computational Linguistics.

- Vinícius G Costa and Carlos E Pedreira. 2022. Recent advances in decision trees: An updated survey. Artificial Intelligence Review, pages 1–36.

- The pascal recognising textual entailment challenge. In Machine Learning Challenges. Evaluating Predictive Uncertainty, Visual Object Classification, and Recognising Tectual Entailment: First PASCAL Machine Learning Challenges Workshop, MLCW 2005, Southampton, UK, April 11-13, 2005, Revised Selected Papers, pages 177–190. Springer.

- The commitmentbank: Investigating projection in naturally occurring discourse. In proceedings of Sinn und Bedeutung, volume 23, pages 107–124.

- Lingjia Deng and Janyce Wiebe. 2015. MPQA 3.0: An entity/event-level sentiment corpus. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 1323–1328, Denver, Colorado. Association for Computational Linguistics.

- Yoav Freund and Robert E Schapire. 1997. A decision-theoretic generalization of on-line learning and an application to boosting. Journal of computer and system sciences, 55(1):119–139.

- Experiments with a new boosting algorithm. In icml, volume 96, pages 148–156. Citeseer.

- Promptboosting: Black-box text classification with ten forward passes.

- Toward semantics-based answer pinpointing. In Proceedings of the First International Conference on Human Language Technology Research.

- Minqing Hu and Bing Liu. 2004. Mining and summarizing customer reviews. In Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining, pages 168–177.

- Knowledgeable prompt-tuning: Incorporating knowledge into prompt verbalizer for text classification. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2225–2240, Dublin, Ireland. Association for Computational Linguistics.

- Optimal sparse decision trees. Advances in Neural Information Processing Systems (NeurIPS).

- How can we know what language models know? Transactions of the Association for Computational Linguistics, 8:423–438.

- Learning optimal fair classification trees. arXiv preprint arXiv:2201.09932.

- Teven Le Scao and Alexander Rush. 2021. How many data points is a prompt worth? In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 2627–2636, Online. Association for Computational Linguistics.

- Dbpedia–a large-scale, multilingual knowledge base extracted from wikipedia. Semantic web, 6(2):167–195.

- Xin Li and Dan Roth. 2002. Learning question classifiers. In COLING 2002: The 19th International Conference on Computational Linguistics.

- Cutting down on prompts and parameters: Simple few-shot learning with language models. In Findings of the Association for Computational Linguistics: ACL 2022, pages 2824–2835, Dublin, Ireland. Association for Computational Linguistics.

- Jieyi Long. 2023. Large language model guided tree-of-thought.

- Fantastically ordered prompts and where to find them: Overcoming few-shot prompt order sensitivity. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 8086–8098, Dublin, Ireland. Association for Computational Linguistics.

- Large language model is not a good few-shot information extractor, but a good reranker for hard samples!

- Learning word vectors for sentiment analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, pages 142–150, Portland, Oregon, USA. Association for Computational Linguistics.

- Self-refine: Iterative refinement with self-feedback.

- David M. Magerman. 1995. Statistical decision-tree models for parsing. In 33rd Annual Meeting of the Association for Computational Linguistics, pages 276–283, Cambridge, Massachusetts, USA. Association for Computational Linguistics.

- Good debt or bad debt: Detecting semantic orientations in economic texts. Journal of the Association for Information Science and Technology, 65.

- On the risks of stealing the decoding algorithms of language models.

- OpenAI. 2023. Gpt-4 technical report.

- A sentimental education: Sentiment analysis using subjectivity summarization based on minimum cuts. In Proceedings of the 42nd Annual Meeting of the Association for Computational Linguistics (ACL-04), pages 271–278, Barcelona, Spain.

- Seeing stars: Exploiting class relationships for sentiment categorization with respect to rating scales. In Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics (ACL’05), pages 115–124, Ann Arbor, Michigan. Association for Computational Linguistics.

- Boosted prompt ensembles for large language models. arXiv preprint arXiv:2304.05970.

- Measuring and narrowing the compositionality gap in language models.

- Language models are unsupervised multitask learners. OpenAI blog, 1(8):9.

- Alexander M. Rush. 2023. Mini chain: A tiny library for coding with large language models. https://github.com/srush/MiniChain.

- CARER: Contextualized affect representations for emotion recognition. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 3687–3697, Brussels, Belgium. Association for Computational Linguistics.

- Fabrizio Sebastiani. 2002. Machine learning in automated text categorization. ACM computing surveys (CSUR), 34(1):1–47.

- Augmenting interpretable models with llms during training.

- Explaining patterns in data with language models via interpretable autoprompting.

- Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, pages 1631–1642, Seattle, Washington, USA. Association for Computational Linguistics.

- Interactive and visual prompt engineering for ad-hoc task adaptation with large language models.

- Fast interpretable greedy-tree sums (figs). arXiv:2201.11931 [cs, stat]. ArXiv: 2201.11931.

- Attention is all you need. In Advances in Neural Information Processing Systems, volume 30. Curran Associates, Inc.

- {NBDT}: Neural-backed decision tree. In International Conference on Learning Representations.

- Ben Wang and Aran Komatsuzaki. 2021. GPT-J-6B: A 6 Billion Parameter Autoregressive Language Model. https://github.com/kingoflolz/mesh-transformer-jax.

- Iteratively prompt pre-trained language models for chain of thought. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, pages 2714–2730, Abu Dhabi, United Arab Emirates. Association for Computational Linguistics.

- Annotating expressions of opinions and emotions in language. Language resources and evaluation, 39:165–210.

- $k$NN prompting: Beyond-context learning with calibration-free nearest neighbor inference. In The Eleventh International Conference on Learning Representations.

- Tree of thoughts: Deliberate problem solving with large language models.

- Prefer: Prompt ensemble learning via feedback-reflect-refine. arXiv preprint arXiv:2308.12033.

- Summit: Iterative text summarization via chatgpt.

- Tianyuan Zhang and Zhanxing Zhu. 2019. Interpreting adversarially trained convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, volume 97 of Proceedings of Machine Learning Research, pages 7502–7511. PMLR.

- Character-level convolutional networks for text classification. In Advances in Neural Information Processing Systems, volume 28. Curran Associates, Inc.

- Describing differences between text distributions with natural language. In Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, pages 27099–27116. PMLR.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.