- The paper introduces a PMI framework integrating working and long-term memory to enhance inference on complex tasks.

- The methodology models human cognitive processing with a perception module, a dual-level memory, and multi-head attention over memory contents.

- Experiments show notable error rate reduction on bAbI tasks and improved relational reasoning performance over standard models.

A Framework for Inference Inspired by Human Memory Mechanisms

Introduction

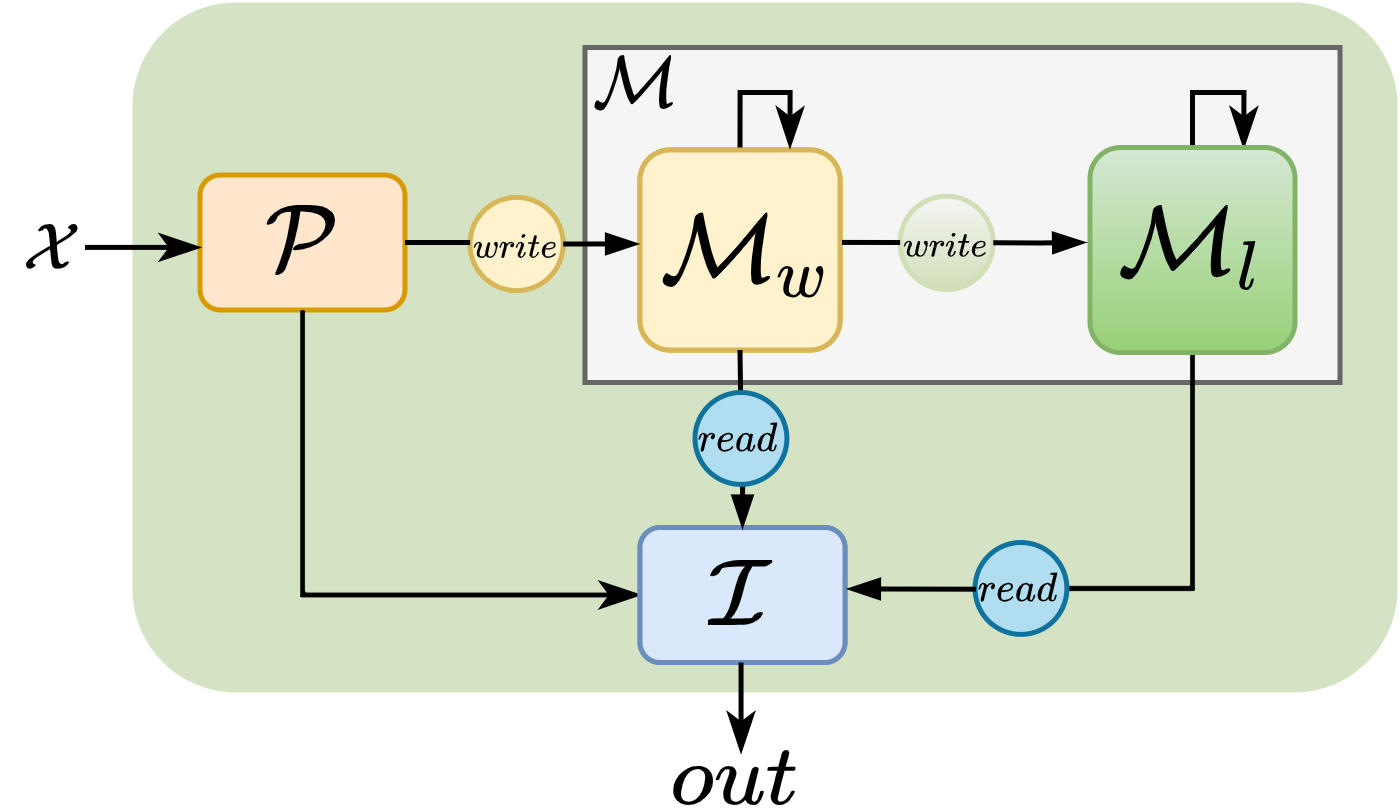

The paper proposes integrating human-like memory mechanisms into AI systems to improve inference, particularly on complex reasoning tasks. Its Perception, Memory, and Inference (PMI) framework aims to replicate aspects of the human brain's memory system, specifically the interplay between working memory and long-term memory. The framework targets two limitations of current neural network architectures: long-term information retention and relational reasoning.

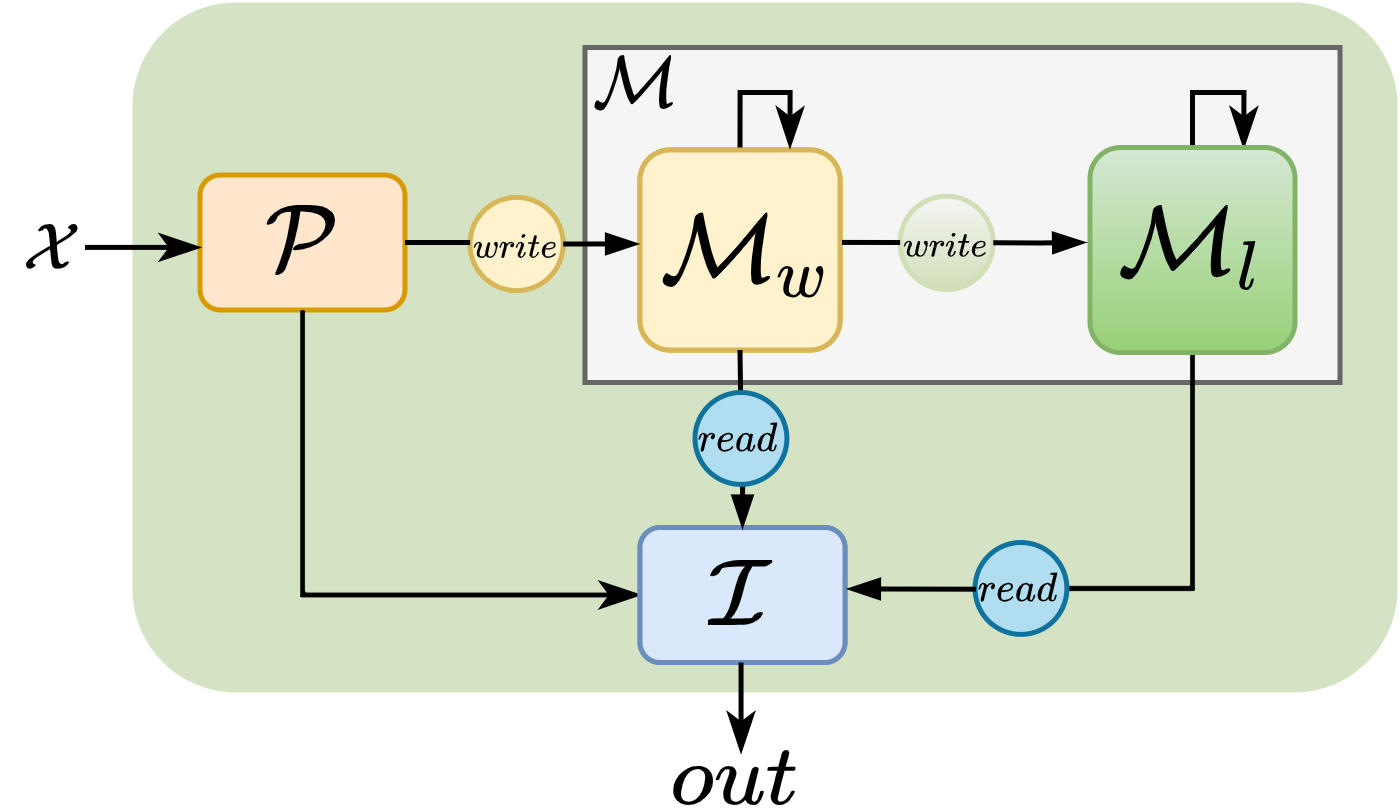

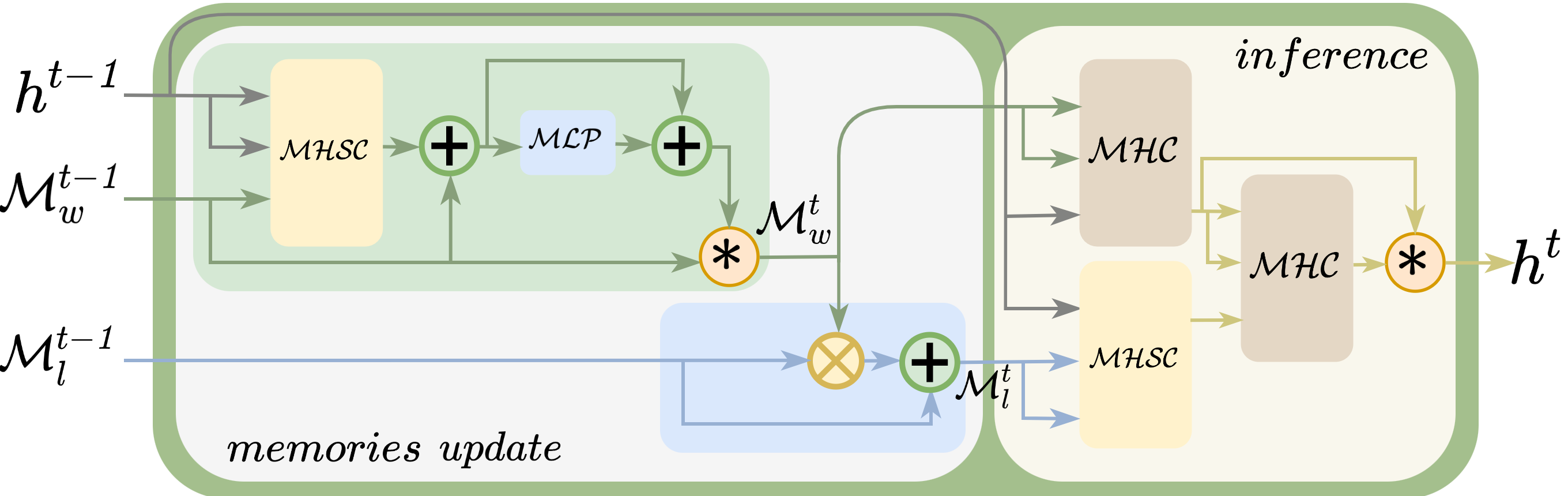

Figure 1: PMI framework

Methodology

The PMI framework comprises three major modules: perception, memory, and inference. Each module is designed to simulate the functionalities it is named after, inspired by cognitive neuroscience theories such as Multiple Memory Systems and Global Workspace Theory.

- Perception Component: Converts input data (textual, visual, etc.) into an internal representation utilizing techniques such as embedding and positional encoding similar to those in Transformers and ViT.

- Dual-Level Memory:

  - Working Memory (WM): Operates as a limited-capacity buffer for current relevant information, updated through a competitive write mechanism enabling selective retention.

  - Long-Term Memory (LTM): Structured as a higher-dimensional tensor to capture lasting relational knowledge, updated via tensorial operations to embed long-range interactions and cumulative knowledge.

- Inference Module: Retrieves pertinent information from both WM and LTM through content-based addressing, including multi-head attention, and combines what it retrieves to refine the model's understanding of the input.
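The perception and dual-level memory steps in the list above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names (`perceive`, `competitive_write`, `update_ltm`), the top-k rule used for the competition, the pairwise-product form of the long-term tensor, and the `decay` value are all hypothetical choices made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def perceive(token_ids, embed_table):
    """Perception: embedding lookup plus sinusoidal positional encoding."""
    token_ids = np.asarray(token_ids)
    T, D = len(token_ids), embed_table.shape[1]      # D is assumed even
    pos = np.arange(T)[:, None]                      # (T, 1)
    freq = 1.0 / (10000 ** (np.arange(0, D, 2) / D)) # (D/2,)
    pe = np.zeros((T, D))
    pe[:, 0::2] = np.sin(pos * freq)
    pe[:, 1::2] = np.cos(pos * freq)
    return embed_table[token_ids] + pe               # (T, D)

def competitive_write(wm, h, top_k):
    """Assumed write rule: each WM slot attends to only its top_k inputs."""
    scores = wm @ h.T / np.sqrt(wm.shape[1])         # (N, T)
    # Inputs outside a slot's top_k are masked to -inf, so they get zero weight.
    kth = np.sort(scores, axis=1)[:, -top_k][:, None]
    masked = np.where(scores >= kth, scores, -np.inf)
    attn = softmax(masked, axis=1)                   # (N, T)
    return wm + attn @ h, attn

def update_ltm(ltm, wm, decay=0.9):
    """Assumed LTM rule: accumulate pairwise slot products in a 3rd-order tensor."""
    relations = np.einsum("id,jd->ijd", wm, wm)      # (N, N, D)
    return decay * ltm + (1.0 - decay) * relations

T, N, D, vocab = 6, 4, 8, 20
embed_table = rng.normal(size=(vocab, D))
h = perceive([3, 1, 4, 1, 5, 9], embed_table)
wm = rng.normal(size=(N, D))
ltm = np.zeros((N, N, D))

wm, write_attn = competitive_write(wm, h, top_k=2)
ltm = update_ltm(ltm, wm)
print(h.shape, wm.shape, ltm.shape)  # (6, 8) (4, 8) (4, 4, 8)
```

In this sketch the limited capacity of WM comes from the small, fixed number of slots `N`, while the LTM tensor grows with the square of `N` so that it can hold slot-to-slot relations.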

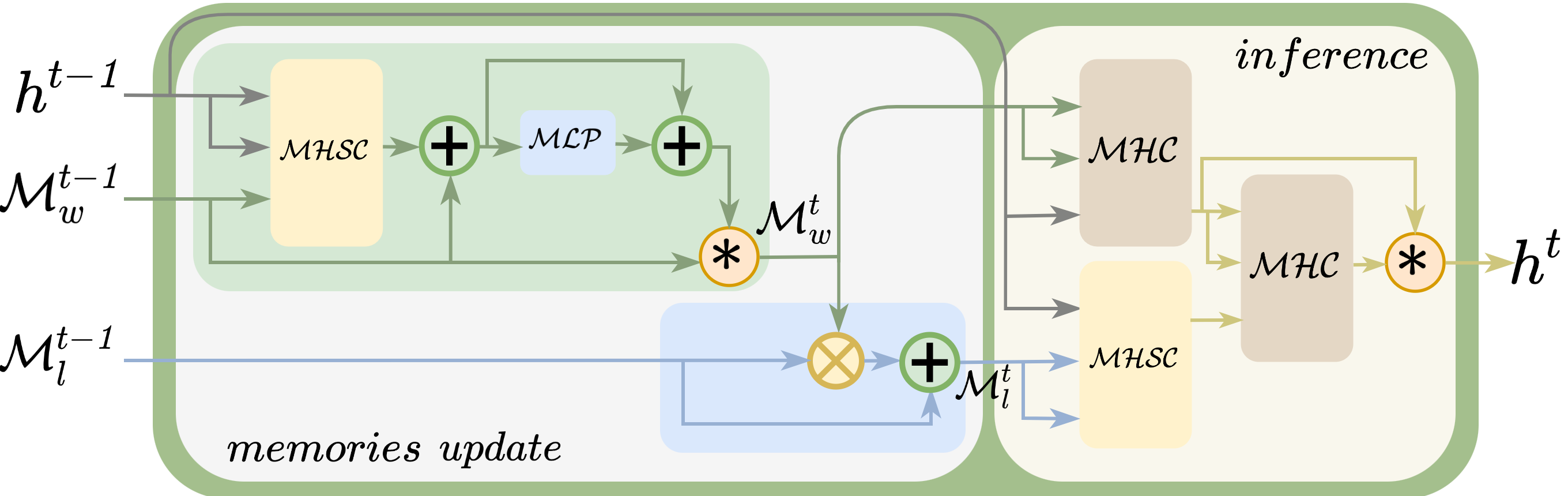

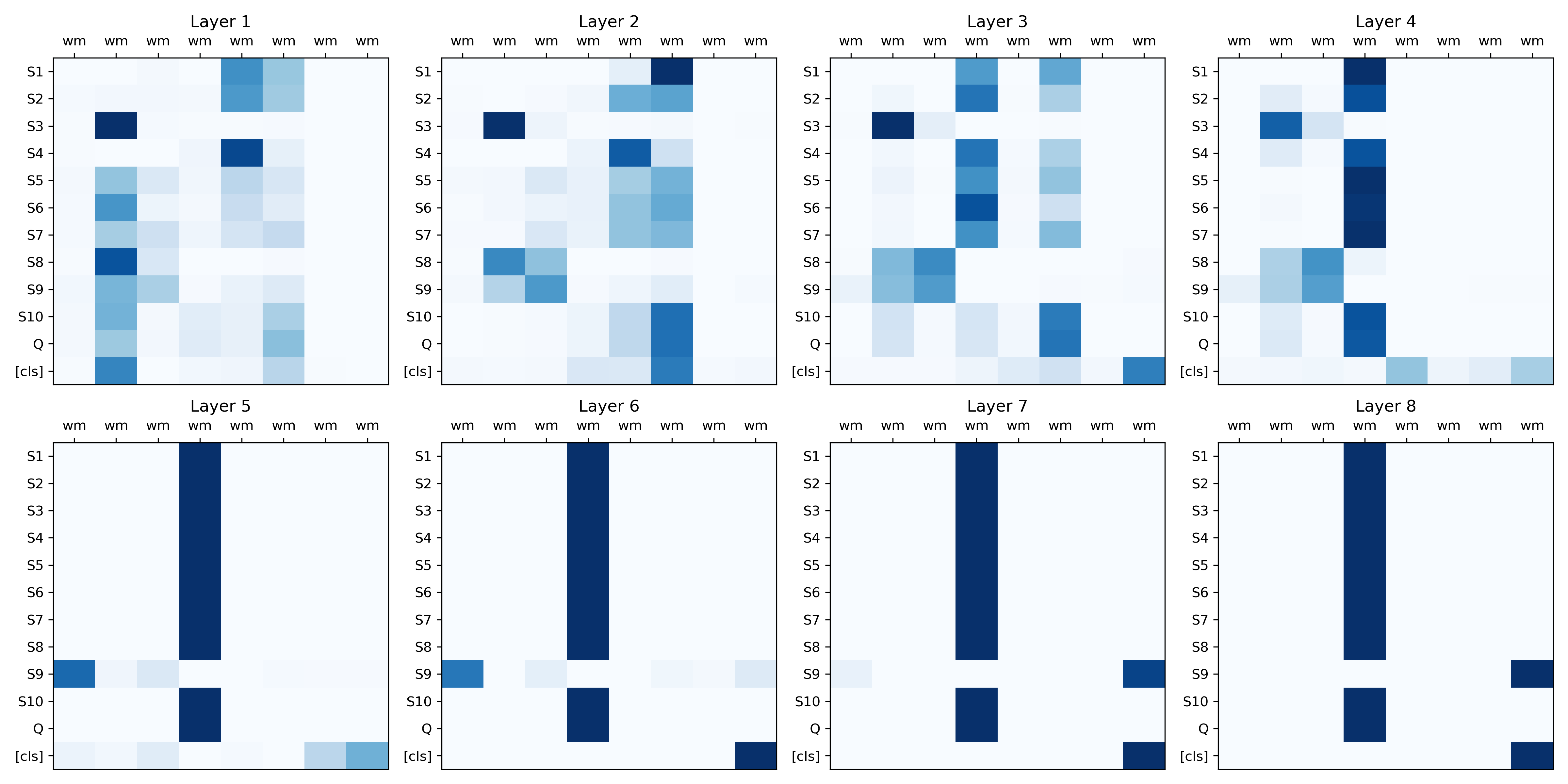

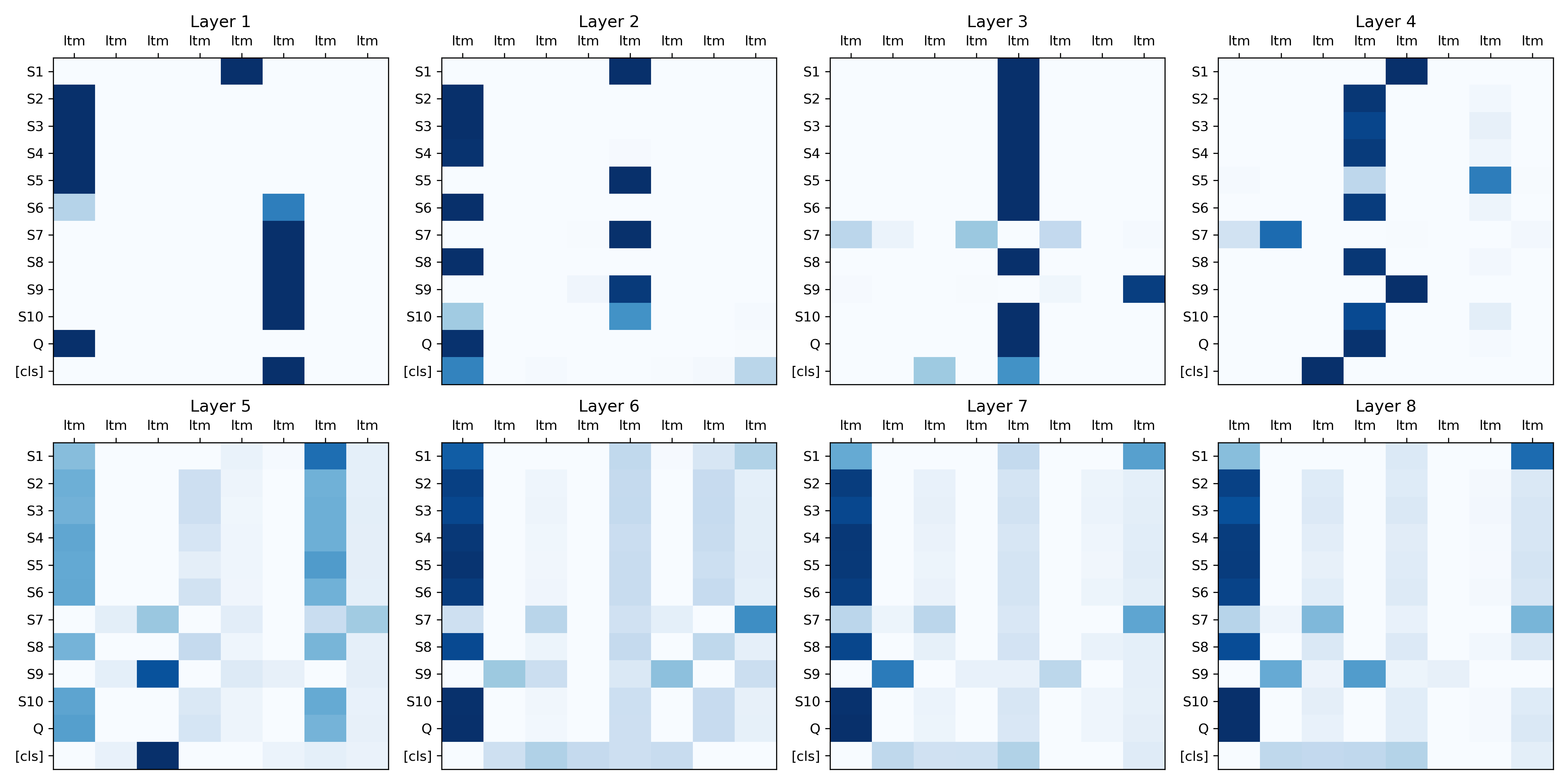

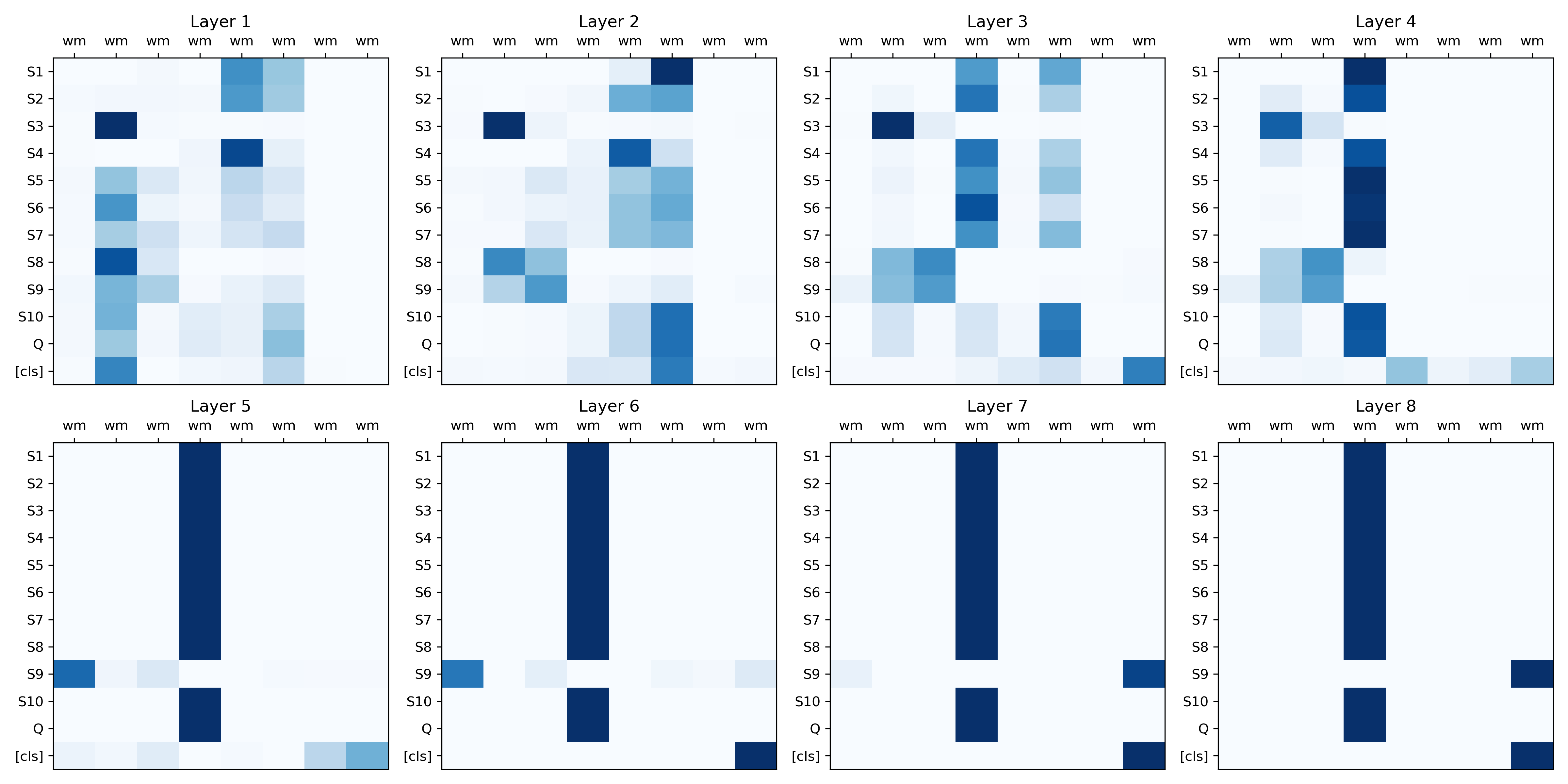

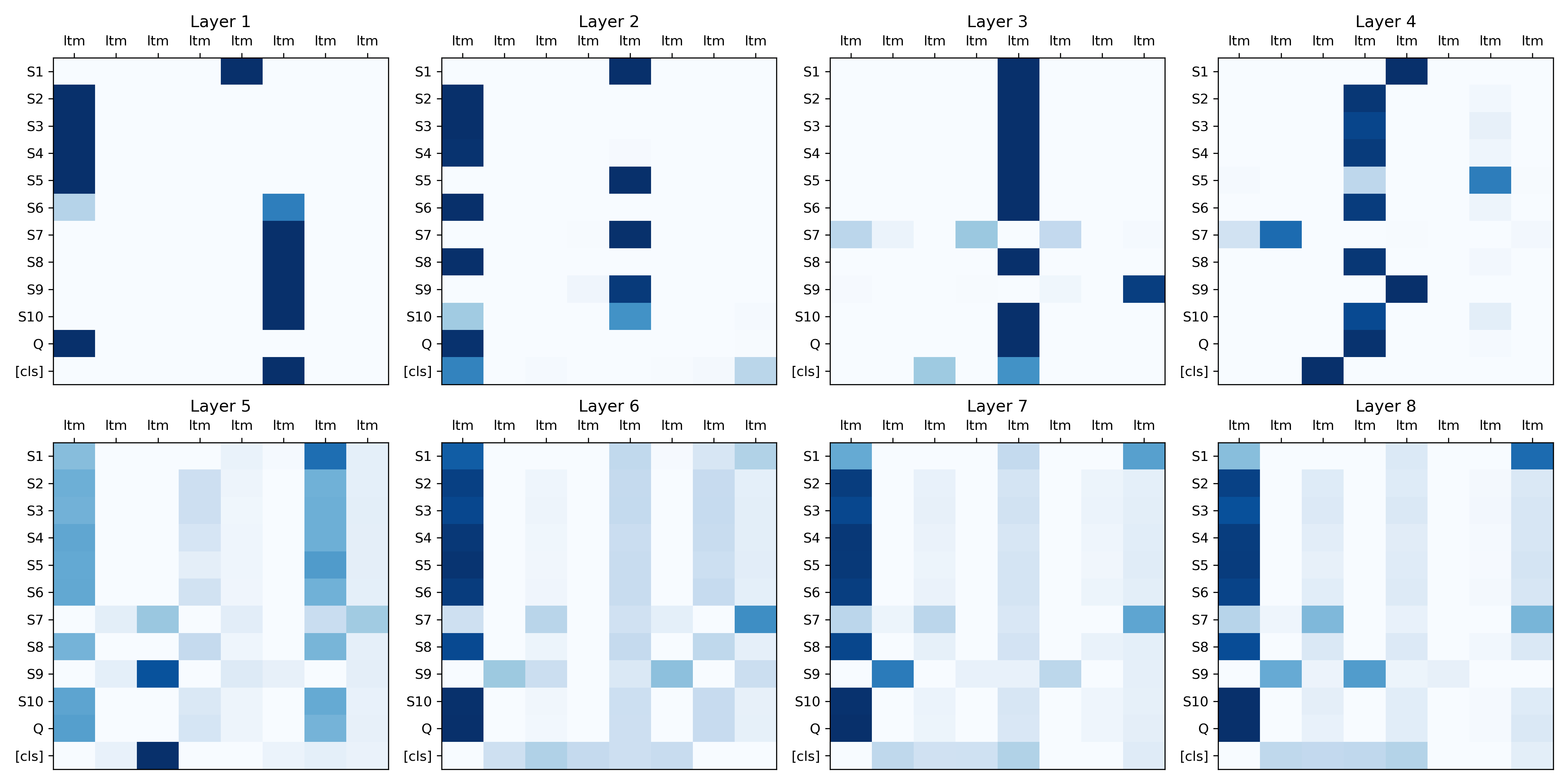

Figure 2: Attention patterns between inputs and WM
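Attention maps of the kind shown in Figure 2 could be produced by a multi-head read in which the inputs act as queries and the WM slots act as keys and values. The sketch below is a generic multi-head attention read under that assumption; the function name `multi_head_read`, the random projection matrices, and the omission of an output projection are illustrative choices, not details confirmed by the paper.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_read(h, memory, w_q, w_k, w_v, n_heads):
    """Inputs (queries) read from memory slots (keys/values) with several heads."""
    T, D = h.shape
    N = memory.shape[0]
    d_head = D // n_heads
    # Project, then split the feature axis into heads: (n_heads, length, d_head).
    q = (h @ w_q).reshape(T, n_heads, d_head).transpose(1, 0, 2)
    k = (memory @ w_k).reshape(N, n_heads, d_head).transpose(1, 0, 2)
    v = (memory @ w_v).reshape(N, n_heads, d_head).transpose(1, 0, 2)
    # One (T, N) attention map per head; each row sums to 1 over memory slots.
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))
    # Concatenate the heads back into a (T, D) read vector per input.
    out = (attn @ v).transpose(1, 0, 2).reshape(T, D)
    return out, attn

rng = np.random.default_rng(0)
T, N, D, n_heads = 6, 4, 8, 2
h = rng.normal(size=(T, D))      # stand-in for perception outputs
wm = rng.normal(size=(N, D))     # stand-in for working-memory slots
w_q, w_k, w_v = (rng.normal(size=(D, D)) / np.sqrt(D) for _ in range(3))

read, attn = multi_head_read(h, wm, w_q, w_k, w_v, n_heads)
print(read.shape, attn.shape)  # (6, 8) (2, 6, 4)
```

Plotting `attn[i]` as a heatmap gives one inputs-by-slots pattern per head.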

Experiments and Results

The framework was evaluated by enhancing existing Transformer and CNN architectures, achieving superior performance across diverse tasks such as image classification, question-answering (QA), and relational reasoning.

- bAbI Tasks: On bAbI, a suite of QA tasks, the PMI-enhanced models significantly reduced error rates, suggesting that long-term relational memory is used effectively in reasoning.

  - Example: PMI-TR achieved a mean error rate as low as 2.55%, compared to 27.3% for an LSTM baseline.

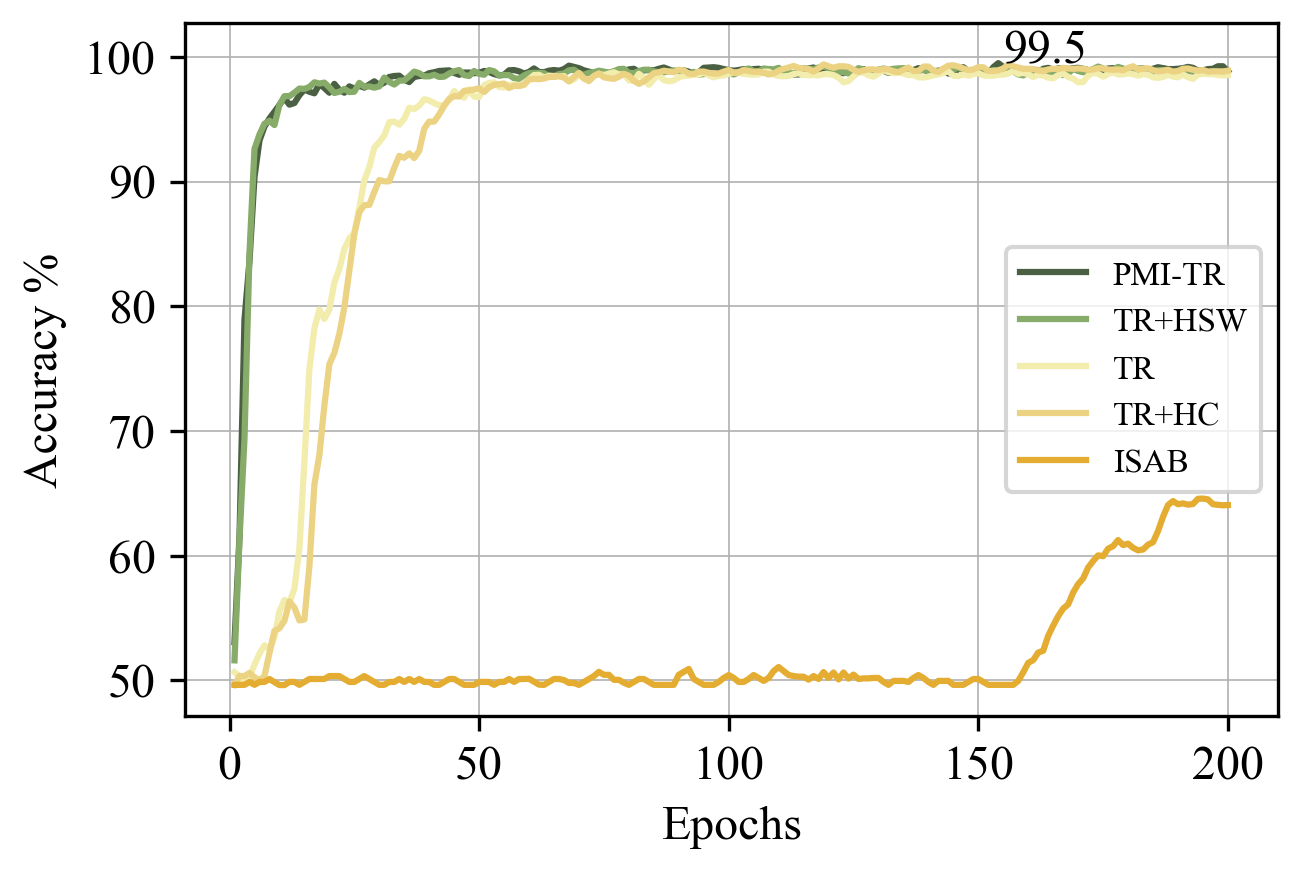

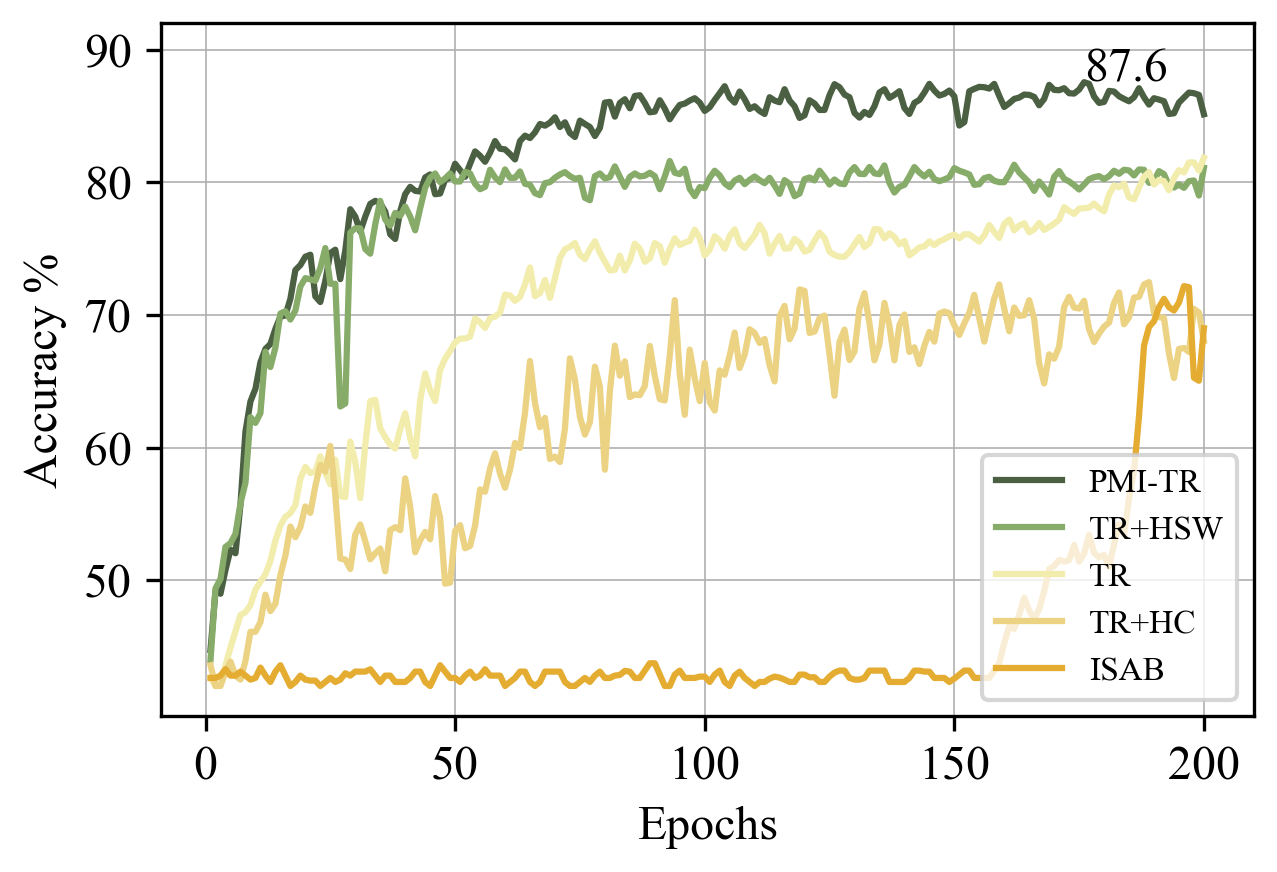

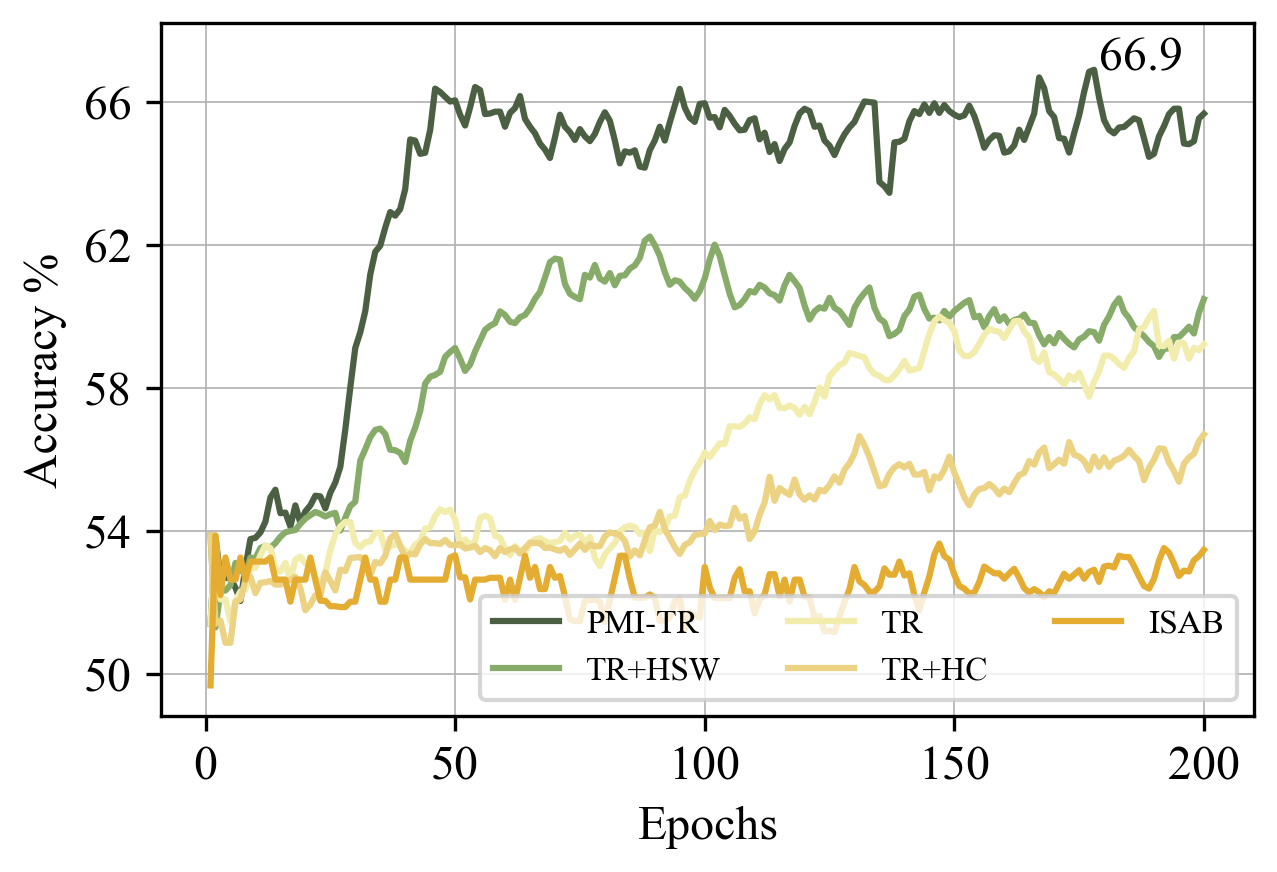

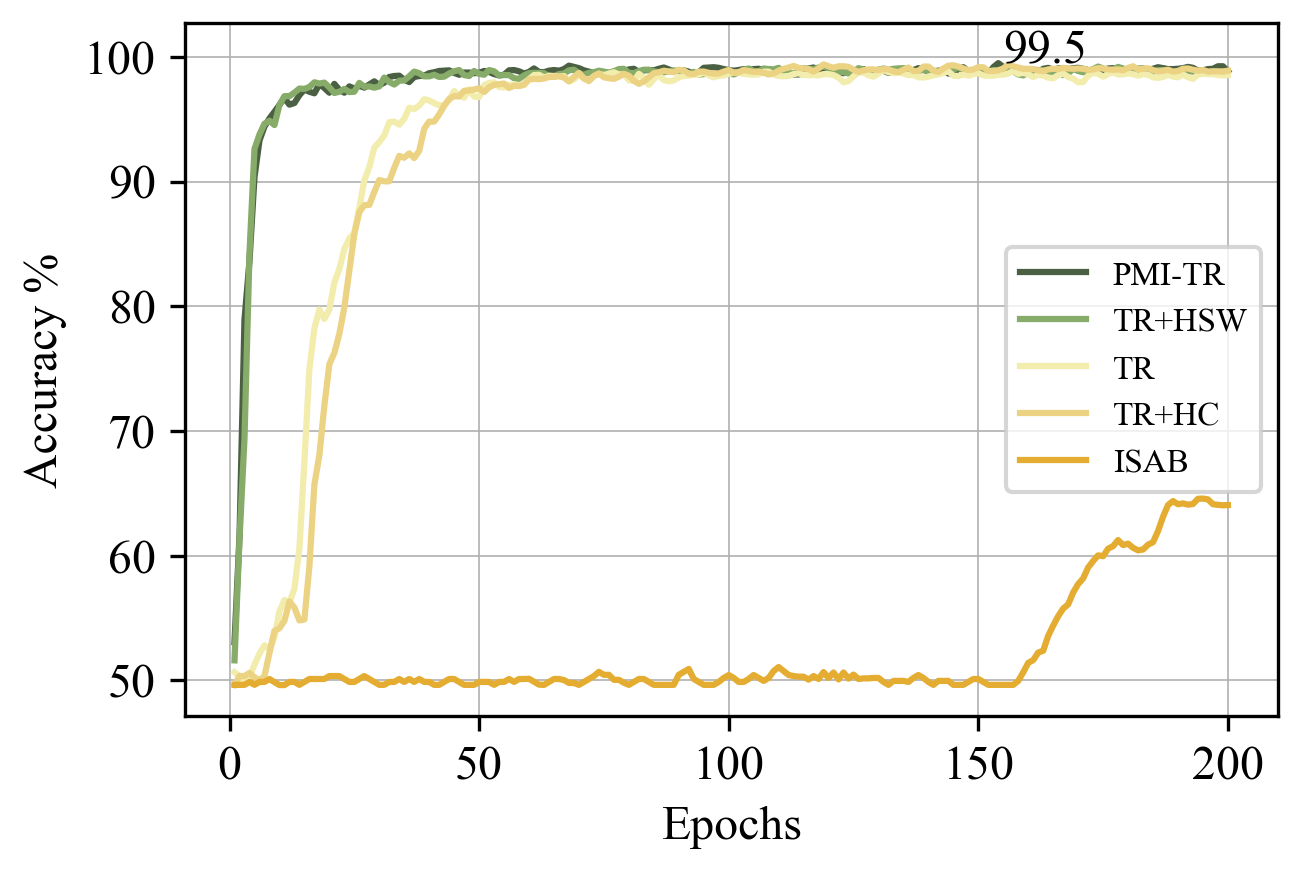

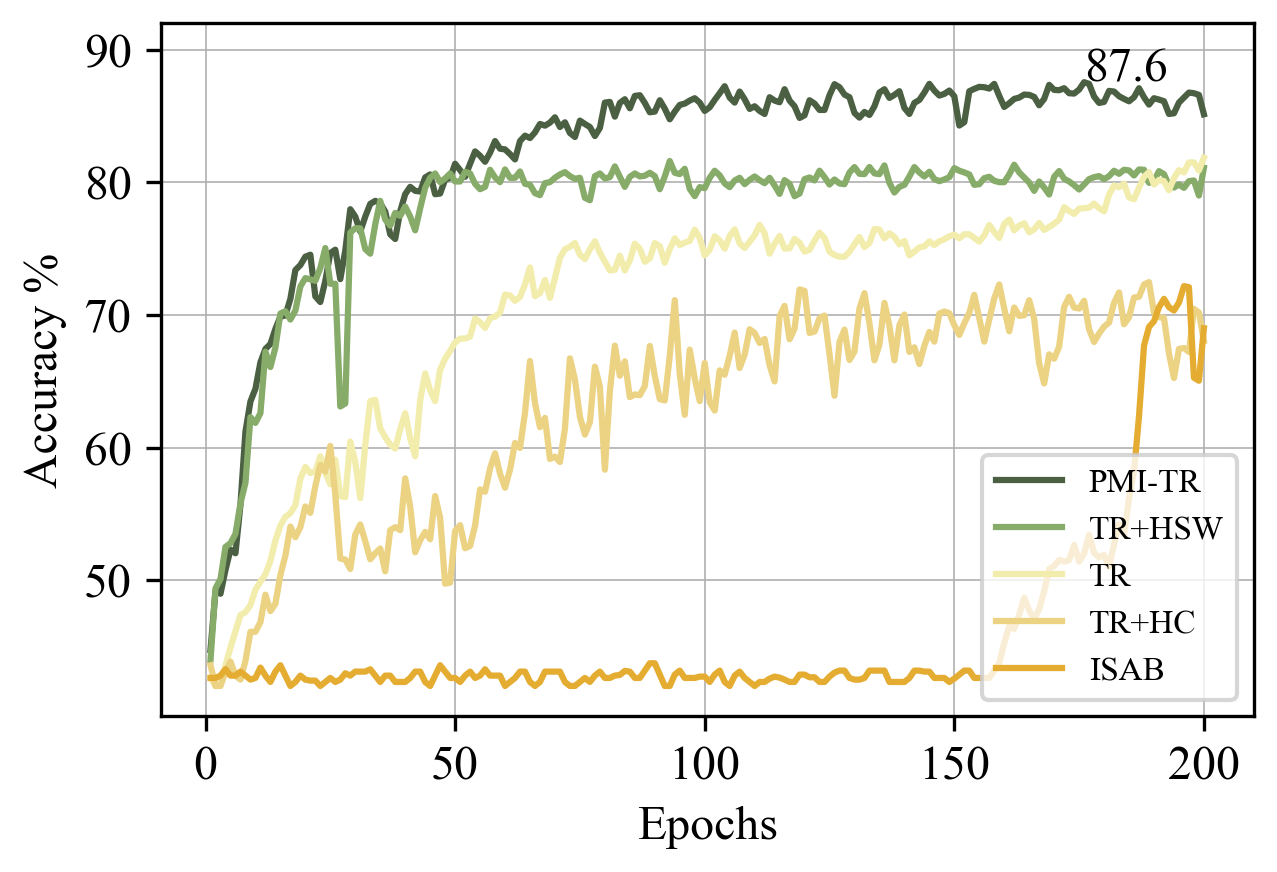

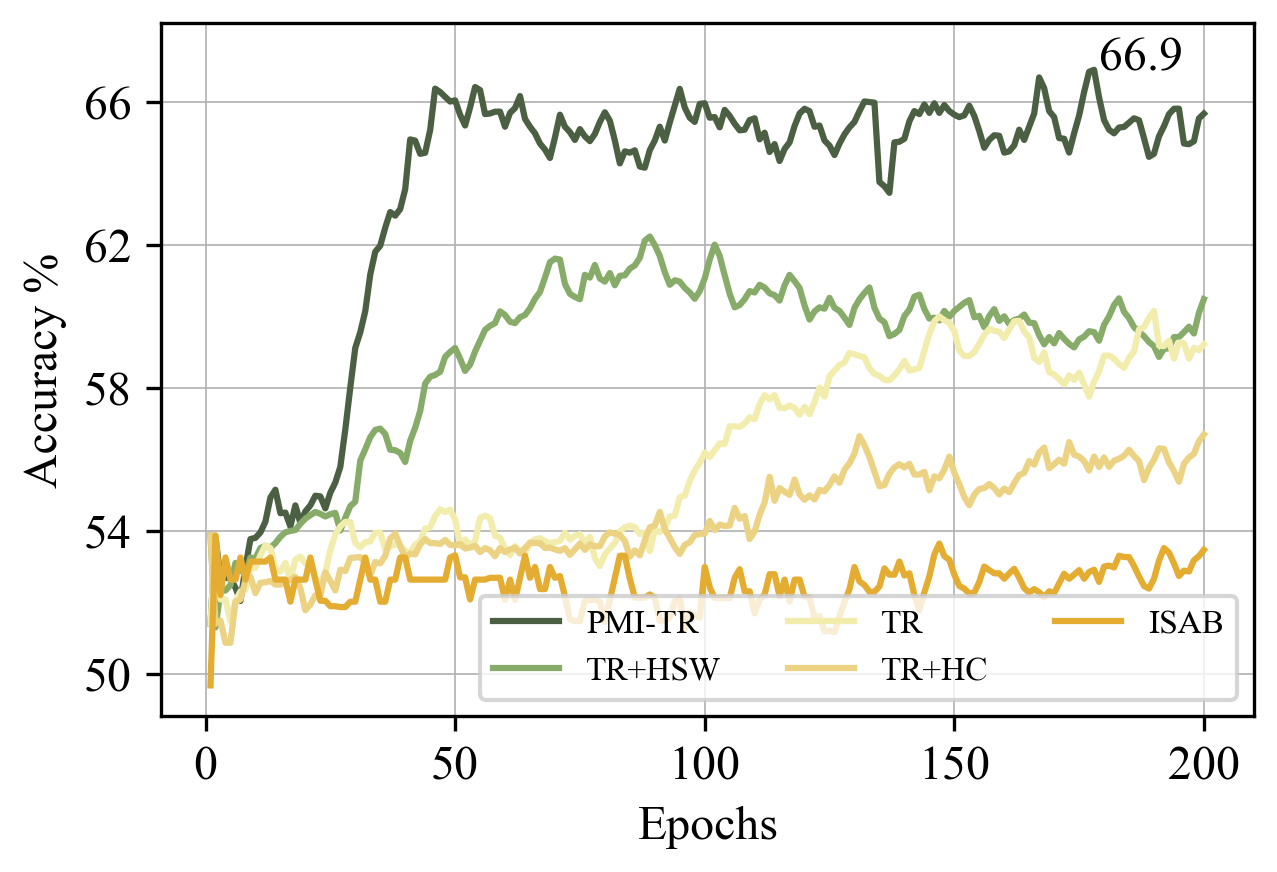

- Sort-of-CLEVR Dataset: On tasks requiring relational reasoning, such as identifying object relationships, the PMI framework showed faster convergence and higher accuracy than competing models.

Figure 3: Unary Accuracy
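To put the bAbI figures quoted above in perspective, the gap between the two mean error rates can be expressed as an absolute and a relative reduction. The short calculation below uses only the two numbers reported in this summary (2.55% for PMI-TR and 27.3% for LSTM); the helper name `relative_error_reduction` is introduced here for illustration.

```python
def relative_error_reduction(baseline_pct, model_pct):
    """Fraction of the baseline error that the model removes."""
    return (baseline_pct - model_pct) / baseline_pct

lstm_err, pmi_tr_err = 27.3, 2.55   # mean error rates in percent, as quoted above

absolute_drop = lstm_err - pmi_tr_err
relative_drop = relative_error_reduction(lstm_err, pmi_tr_err)
print(f"absolute: {absolute_drop:.2f} points, relative: {relative_drop:.1%}")
# absolute: 24.75 points, relative: 90.7%
```

Note that this compares mean error across tasks, so it says nothing about how the improvement is distributed over individual bAbI tasks.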

Implications

The PMI framework offers a way to augment existing neural architectures, particularly for tasks that require retaining and reasoning over relational information across multiple steps. More broadly, it points toward incorporating richer cognitive models into AI systems so that they more closely mimic human-like processing.

Conclusion

By bridging cognitive architectures and deep learning, the PMI framework improves task performance and suggests a path toward more human-like AI systems across application domains. Future work should explore similar cognitively inspired frameworks across a wider variety of neural architectures and tasks, which may deepen the understanding of how well AI can simulate human cognitive processes.

Figures 1 to 3 show the PMI framework, the attention patterns between inputs and WM, and unary accuracy, respectively, illustrating the mechanisms and outcomes of the proposed methodology.