- The paper introduces Selenite, a framework using GPT-4 and zero-shot NLI to generate detailed overviews of complex information spaces.

- It employs a Chrome extension architecture to extract topics, criteria, and options, achieving high recall and enhanced user efficiency.

- Empirical evaluations demonstrate reduced cognitive load, improved precision, and robust scalability across diverse application domains.

Scaffolding Sensemaking: A Technical Review of "Selenite: Scaffolding Online Sensemaking with Comprehensive Overviews Elicited from LLMs"

Introduction

"Selenite: Scaffolding Online Sensemaking with Comprehensive Overviews Elicited from LLMs" (2310.02161) addresses a fundamental barrier in web-scale sensemaking: the prohibitive cost of developing a comprehensive and unbiased overview of a complex, unfamiliar information space, especially at the cold start of the sensemaking process. Conventional systems depend on either prior expert curation or manual user effort, leading to incomplete, biased, or fragmented overviews. Selenite circumvents these bottlenecks by using LLMs (specifically GPT-4) as both an implicit knowledge base and a reasoning engine to automatically scaffold criteria, options, and navigation aids for unfamiliar domains.

This essay examines Selenite’s underlying architecture, its integration of LLM and zero-shot NLI methods, user interface strategies, and the empirical findings derived from intrinsic and extrinsic evaluations. Emphasis is placed on implementation details, design trade-offs, observed numerical effects, potential limitations, and broader implications for interactive human-AI sensemaking systems.

Selenite Architecture and Workflow

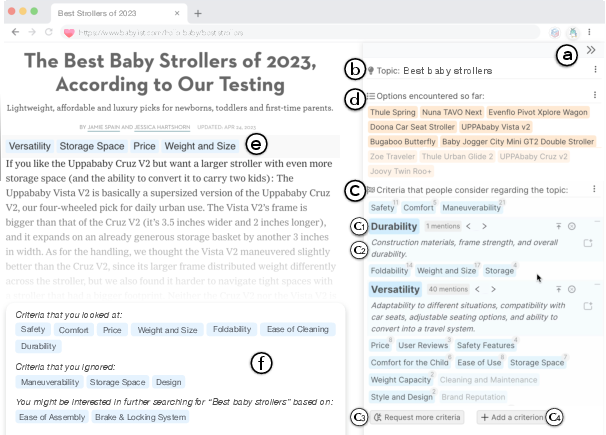

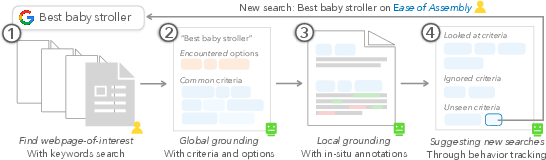

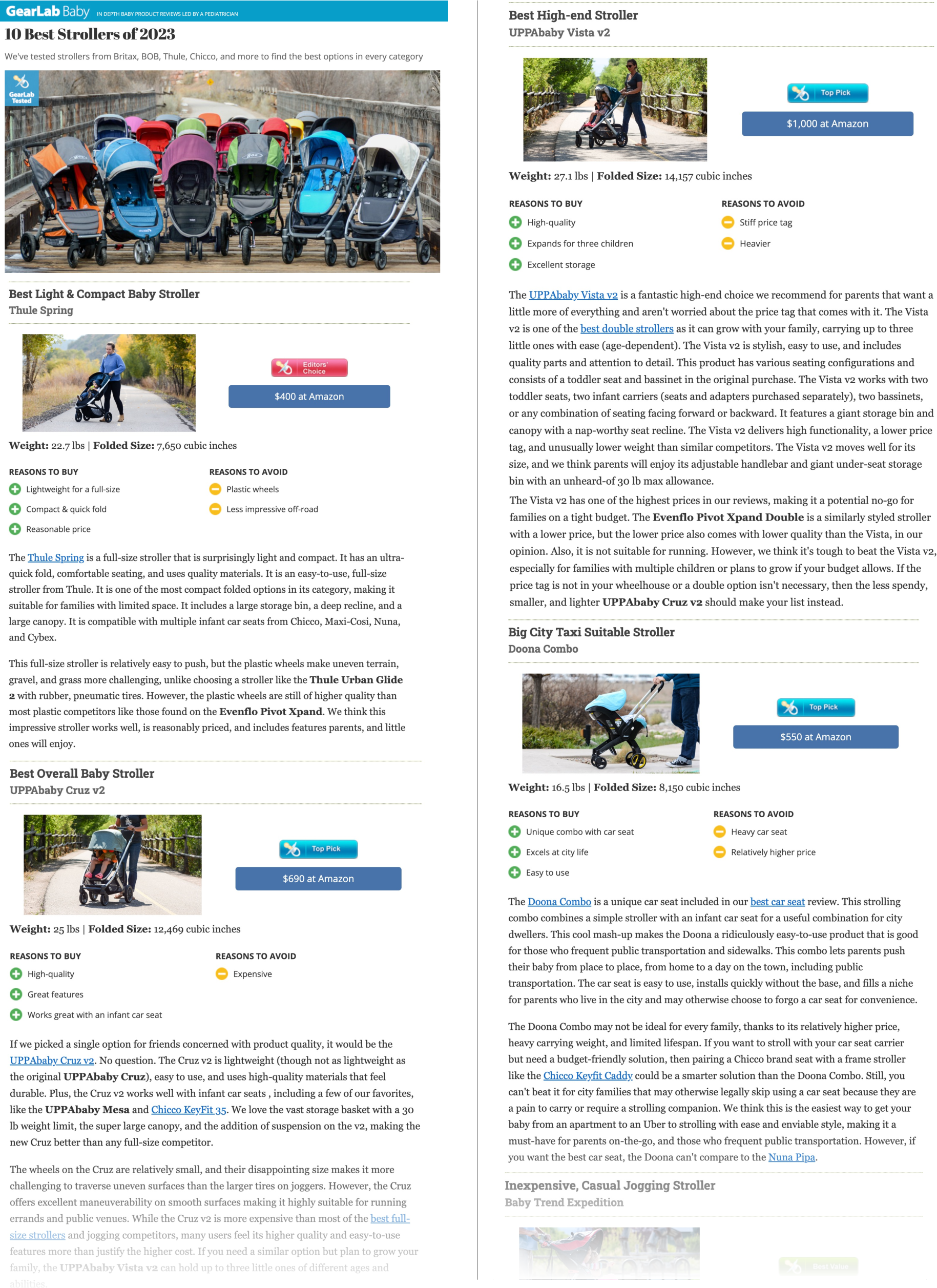

Selenite operationalizes three principal design goals: (D1) upfront construction of a global overview via common criteria and options; (D2) local comprehension through page- and paragraph-level summaries, alongside contextually grounded annotation; and (D3) dynamic suggestion of sensemaking next steps based on individual user coverage and reading provenance.

The core architecture is embodied as a Chrome extension, realized in TypeScript/React, with backend services on Google Firebase and GPU-accelerated ML inference for zero-shot NLI, using BART/MNLI models.

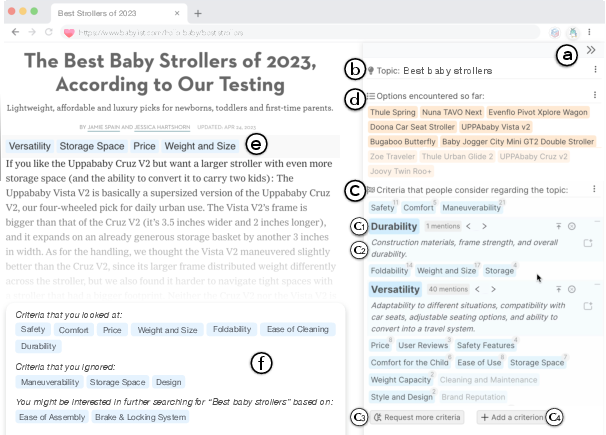

Principal System Workflow:

- Topic and Options Extraction: On page load, Selenite frames topic inference as an LLM-based summarization problem, querying GPT-4 with the page title and opening paragraphs. For extraction of "options" (e.g., products in comparative reviews), Selenite partitions page content into manageable chunks (to respect LLM context window constraints), then parallelizes GPT-4 queries to extract candidate options.

- Criteria Elicitation and Refinement: Selenite prompts GPT-4 iteratively (using Self-Refine methods) to generate, expand, and rank around 20–25 commonly considered criteria per domain, yielding a list of (criterion, description) string tuples. Users can then post-edit the overview, add criteria, or request further diversification.

- Zero-Shot NLI-based Annotation: For each paragraph, criteria coverage is computed with a BART-large-MNLI model as a multi-label zero-shot classification task, with a threshold tuned for recall (typical label probability ≥ 0.96), and the resulting labels are shown as per-paragraph in situ annotations.

- Structured Navigation and Analysis Tools

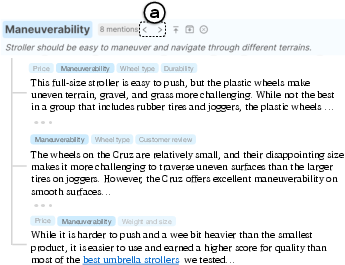

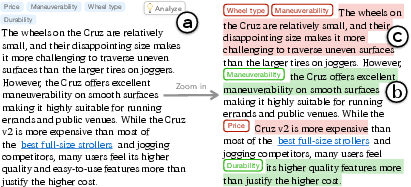

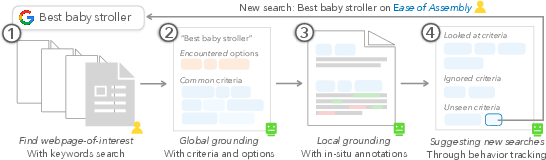

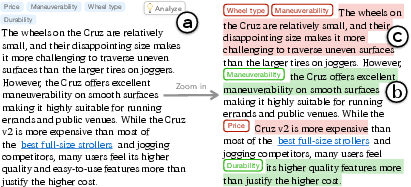

Selenite presents all criteria and options in a persistent sidebar, supports criterion-scoped navigation ("previous/next" buttons), and exposes a "zoom in" workflow (invoking GPT-4 again) to disambiguate convoluted paragraphs, labeling phrases with aspect/sentiment granularity.
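The chunk-then-parallelize step used for option extraction can be illustrated with a minimal sketch. This is not Selenite's code: `chunk_paragraphs`, `extract_options`, the character budget, and the `extract_fn` callback (standing in for a per-chunk GPT-4 request) are all hypothetical names and values chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def chunk_paragraphs(paragraphs: List[str], max_chars: int = 4000) -> List[str]:
    """Greedily pack whole paragraphs into chunks of roughly max_chars characters.

    A single paragraph longer than max_chars becomes its own oversized chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    size = 0
    for para in paragraphs:
        # Flush the current chunk when this paragraph would exceed the budget.
        if current and size + len(para) > max_chars:
            chunks.append("\n\n".join(current))
            current, size = [], 0
        current.append(para)
        size += len(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def extract_options(
    paragraphs: List[str],
    extract_fn: Callable[[str], List[str]],  # hypothetical stand-in for an LLM call
    max_chars: int = 4000,
    workers: int = 4,
) -> List[str]:
    """Run extract_fn on every chunk in parallel and merge the results."""
    chunks = chunk_paragraphs(paragraphs, max_chars)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        per_chunk = list(pool.map(extract_fn, chunks))  # preserves chunk order
    # De-duplicate case-insensitively while keeping first-seen order.
    seen = set()
    merged: List[str] = []
    for options in per_chunk:
        for opt in options:
            key = opt.strip().lower()
            if key and key not in seen:
                seen.add(key)
                merged.append(opt.strip())
    return merged
```

Threads are used here because the per-chunk work is assumed to be network-bound; merging with a case-insensitive key is one simple way to reconcile the same option being reported by several chunks.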

Figure 1: The main Selenite interface sidebar, demonstrating global overview, encountered options, local annotations, and progress summaries for guided sensemaking.

Figure 2: Main workflow: after landing on a page, users receive a global overview (criteria/options), in-situ paragraph annotation, and dynamic search suggestions on exit.

Figure 3: Fast navigation to criteria mentions via structured UI affordances.

Figure 4: Zoom-in analysis: paragraph-level "Analyze" button triggers LLM-powered segmentation of phrases by criterion and sentiment polarization.

Implementation Details, Models, and Scalability

LLM and NLI Pipelines

- Topic and Criteria Elicitation: GPT-4, temperature 0.3, using multi-stage prompt chaining. To reduce latency and avoid rate limiting, dual-API and retry logic are implemented, with real-world response times typically <10s per prompt even under load.

- Paragraph Annotation: BART-large-MNLI, accessed via batched GPU inference over a cloud API. Paragraph-level processing is parallelized; empirical thresholds are tuned to favor recall, accepting some spurious annotations in exchange for fewer omitted criteria (omissions are more disruptive for navigation, per user study feedback).

- "Zoom-in" Deep Analysis: Multi-step GPT-4 prompt orchestration, first extracting relevant phrases per criterion and performing aspect-based sentiment classification, then labeling with candidate options.
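The thresholding step in paragraph annotation can be sketched as follows. The function name `annotate_paragraph` and the example scores are assumptions made for illustration; only the 0.96 cutoff comes from the review. In practice the per-criterion scores could come from a multi-label zero-shot classifier such as Hugging Face's `zero-shot-classification` pipeline with a BART-large-MNLI checkpoint, but the sketch takes precomputed scores so that it runs without a model.

```python
from typing import Dict, List


def annotate_paragraph(scores: Dict[str, float], threshold: float = 0.96) -> List[str]:
    """Return the criteria whose score meets the threshold, highest score first.

    `scores` maps each criterion to an independent (multi-label) probability,
    so any number of criteria, including none, may be kept for a paragraph.
    """
    kept = [(criterion, s) for criterion, s in scores.items() if s >= threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return [criterion for criterion, _ in kept]


# Hypothetical scores for one paragraph of a stroller review.
example_scores = {"safety": 0.99, "price": 0.50, "weight": 0.96}
labels = annotate_paragraph(example_scores)
```

Lowering `threshold` keeps more labels per paragraph (fewer omissions, more spurious badges); raising it does the opposite, which is the trade-off the pipeline description refers to.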

UI/UX Considerations

- Sidebar: Present throughout the browsing session, it aggregates criteria and options and highlights local coverage (option/criteria presence determined by NLI models and LLM extraction).

- In-situ Annotations: Rendered as badges atop paragraphs, previewing covered criteria; users can use them for non-linear skimming/navigation.

- Progress Summaries: On page exit, the sidebar offers explicit coverage feedback—criteria seen/skipped—and proposes maximal-coverage search queries for unexplored dimensions (using a semantic diversity/relevance algorithm on top of embedding-space distance calculations).
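The review does not name the diversity/relevance algorithm behind the suggested queries. As one plausible illustration, the sketch below uses maximal marginal relevance (MMR) over embedding vectors; MMR is a swapped-in technique, and `mmr_select`, the `lam` weight, and the toy two-dimensional embeddings are all assumptions rather than details from the paper.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def mmr_select(
    target: Sequence[float],
    candidates: Dict[str, Sequence[float]],
    k: int = 3,
    lam: float = 0.7,
) -> List[str]:
    """Greedily pick k candidates, balancing relevance to `target` against
    similarity to candidates that were already picked."""
    selected: List[str] = []
    remaining = set(candidates)
    while remaining and len(selected) < k:
        def score(name: str) -> float:
            relevance = cosine(candidates[name], target)
            redundancy = max(
                (cosine(candidates[name], candidates[s]) for s in selected),
                default=0.0,
            )
            return lam * relevance - (1.0 - lam) * redundancy

        best = max(sorted(remaining), key=score)  # sorted() makes ties deterministic
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy embeddings: "a" and "b" are near-duplicates, "c" points elsewhere.
toy = {"a": [1.0, 0.9], "b": [1.0, 0.8], "c": [0.0, 1.0]}
diverse = mmr_select([1.0, 1.0], toy, k=2, lam=0.5)
relevance_only = mmr_select([1.0, 1.0], toy, k=2, lam=1.0)
```

With `lam=1.0` the selection reduces to plain relevance ranking; lower values penalize candidates that resemble queries already chosen, which is the behaviour wanted when suggesting searches for unexplored criteria.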

Empirical Evaluations and Metrics

Intrinsic Measurement

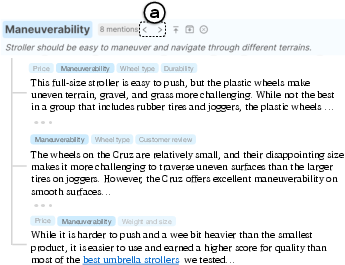

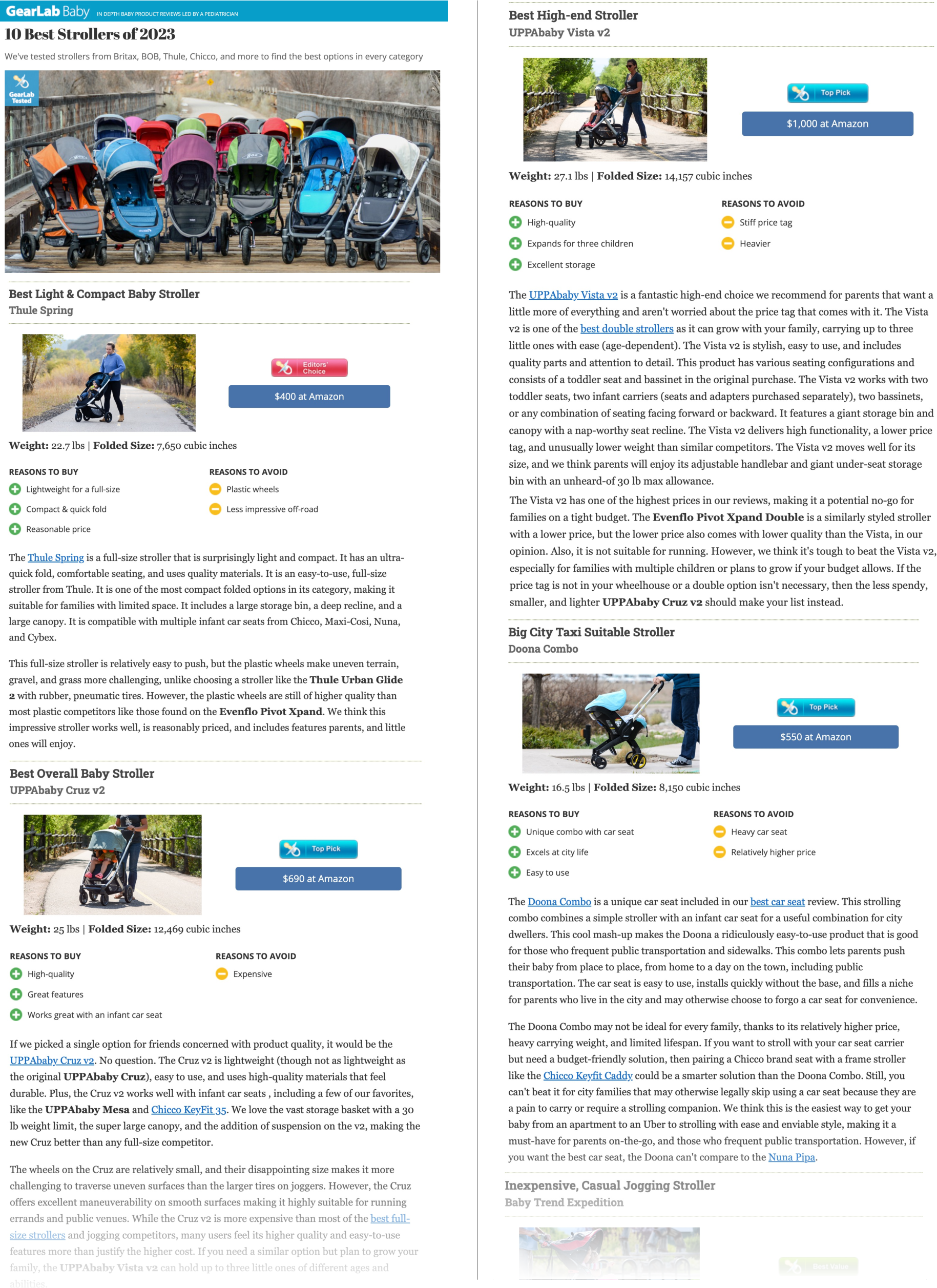

Selenite’s intrinsic capability was benchmarked on 10 representative, high-diversity domains (e.g., “best baby strollers”, “best air purifiers”, “birthday gift ideas”) by comparing LLM/NLI-extracted criteria and options against ground truth constructed by aggregation of top-5 Google search results and careful multi-annotator unification. Quantitative metrics:

| Metric | Topic-level Mean | Paragraph-level Mean |
| --- | --- | --- |
| Precision | 0.80 | 0.85 |
| Recall | 0.95 | 0.98 |
| F1-score | 0.87 | 0.91 |

Strong recall at both levels indicates that, for most domains, Selenite surfaces a superset of user-identified criteria—critical to mitigating anchoring bias. No substantial hallucination of irrelevant criteria was observed on sampled topics.
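As a quick consistency check on the reported figures, the F1-score is the harmonic mean of precision $P$ and recall $R$. Plugging in the reported means reproduces the reported F1 values to two decimals. This is only an approximate check: a mean of per-domain F1 scores need not equal the F1 of the mean precision and recall.

$$
F_1 = \frac{2PR}{P + R}, \qquad
F_1^{\text{topic}} \approx \frac{2(0.80)(0.95)}{0.80 + 0.95} = \frac{1.52}{1.75} \approx 0.87, \qquad
F_1^{\text{paragraph}} \approx \frac{2(0.85)(0.98)}{0.85 + 0.98} = \frac{1.666}{1.83} \approx 0.91
$$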

Option extraction via GPT-4 achieved 100% accuracy relative to human-curated options per page, confirming that LLM-based extraction can outperform HTML/tag heuristics and is robust to semantic divergence and page structure non-uniformity.

Human-Centric Usability and Comprehension Gains

Efficiency and Coverage:

In controlled within-subjects studies (n=12), Selenite reduced average sensemaking task completion times by 36.3% and increased the number of valid criteria identified by ~90% compared to the baseline. Users also achieved significantly higher precision (from 78.4% to 98.8%, p<0.05) and recall (from 30.4% to 73.0%, p<0.05) against ground truth.

Cognitive Load and Satisfaction:

NASA TLX scores confirmed significantly reduced mental demand, temporal demand, and effort, with increased perceived performance (paired t-tests, p<0.05). System Usability Scale (SUS) medians were 6–7 on a 7-point scale for comprehensibility and recommendability.

Behavioral Adaptations:

Qualitative studies revealed that after a short trust calibration phase, most users shifted from skimming entire articles to criteria-first, selective reading, guided directly by Selenite's in-situ metadata and navigation affordances. Search behavior diversified, with Selenite users issuing more queries and encountering more unique, high-utility information.

Design Trade-offs and Limitations

Knowledge Quality vs. Overload

By design, Selenite optimizes for coverage at the cost of increased annotation density, which can, in rare cases, distract or overwhelm users, especially on unrelated or repetitive page sections. As LLM-generated criteria lists grow, effective UI affordances (collapsible sections, faded hierarchies, or knowledge graphs) become important, but Selenite currently defaults to a flat, ranked-list presentation.

Domain Validity and LLM Limitations

LLM-based world knowledge ensures broad domain validity but may underperform in highly specialized or emergent domains where training data coverage is lacking. For options, Selenite mitigates potential staleness by extracting from local page context; for criteria, the risk is lower due to stability of comparative dimensions across time, but could be addressed with hybrid RAG approaches in future implementations.

Annotation Robustness

The NLI model, while demonstrating high recall, is sensitive to perturbations in criterion descriptions; users may occasionally need to intervene by editing or merging criteria or by adjusting label thresholds. Ground-truth mismatches typically arise from correlated or hierarchical criteria (e.g., "innovation" subsuming "growth speed" in deep learning frameworks); exposing criteria connection metadata is planned for future iterations of Selenite.

User Overdependence and Anchoring Bias

Exposure to criteria and progress summaries at reading onset and exit introduces anchoring risk, but empirical evaluations found that Selenite-proposed criteria typically cover a strict superset of those surfaced by unaided users. Still, further UI work is needed to encourage serendipitous exploration and critical engagement, such as progressive disclosure or counterfactually-suggested criteria.

Engineering and Computational Considerations

- Response Latency: LLM API requests per page (topic/criteria extraction) are pre-fetched and cached per session; per-paragraph NLI inference is parallelized as cloud function calls, with L4 calculation yielding low sub-second latency for typical pages in common domains.

- Scaling: The dual-API, retry, and session cache architecture supports moderate concurrent user counts; with future public API quotas for GPT-4 or equivalents, this could be further optimized by batch processing or moving to open-source LLM backends.

- Extensibility: Selenite’s architecture is task-independent at the core; domain prompt design can be extended for non-comparative sensemaking (debugging, skill learning) by changing LLM instruction templates.
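The combination of dual-API fallback, retries, and per-session caching described above can be sketched as follows. None of this is taken from Selenite's codebase: `call_with_fallback`, `SessionCache`, the backoff schedule, and the two backend callables are hypothetical stand-ins for real LLM API clients.

```python
import time
from typing import Callable, Dict, Optional, Sequence


def call_with_fallback(
    prompt: str,
    backends: Sequence[Callable[[str], str]],  # e.g. two API clients (assumed)
    rounds: int = 2,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try each backend in order; after a full failed round, back off and retry."""
    last_error: Optional[Exception] = None
    for attempt in range(rounds):
        for backend in backends:
            try:
                return backend(prompt)
            except Exception as err:  # a real client would catch narrower errors
                last_error = err
        if attempt < rounds - 1:
            sleep(base_delay * (2 ** attempt))  # exponential backoff between rounds
    raise RuntimeError("all backends failed") from last_error


class SessionCache:
    """Memoize results per key for the lifetime of one browsing session."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> str:
        if key not in self._store:
            self._store[key] = self._fetch(key)
        return self._store[key]
```

A caller would wrap the fallback function in the cache, for example `SessionCache(lambda topic: call_with_fallback(topic, [primary, secondary]))`, so that revisiting a topic within a session does not trigger another LLM request.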

Implications and Future Directions

Practical Applications

Selenite’s methodology is generalizable to any information foraging or sensemaking workflow constrained by knowledge disparity and information overload. It is particularly applicable for:

- Rapid onboarding in technical domains (e.g., new software framework comparisons)

- Consumer or B2B product comparison portals

- Cross-domain knowledge graph bootstrapping

- Reading aids for accessibility and cognitive scaffolding

Theoretical Significance

This work demonstrates that LLMs, when prompted with top-down, context-anchored instructions and combined with zero-shot NLI annotation, can operationalize human-expert "overviews" with high coverage and accuracy, matching—if not exceeding—manual annotation pipelines at a fraction of the cost and latency.

It also affirms that sensemaking systems need not be bottlenecked by prior expert curation, pushing the boundary of cold-start support in user modeling, information management, and HCI.

Prospective Developments

- Integration with RAG and verifiability models: Using external corpus RAG (retrieval-augmented generation) to supplement LLM world knowledge for specialized or dynamically-evolving domains.

- Knowledge structures: Moving from flat lists to hierarchical or graph-based criterion representations for improved cognitive ergonomics and scalable exploration.

- Beyond comparison tasks: Adapting the design goals and prompts for open-ended skill acquisition, troubleshooting, or investigative journalism domains.

- Field deployment and analytics: Large-scale, long-term field studies to observe longitudinal effects on user sensemaking patterns, anchoring behavior, and knowledge retention.

Conclusion

Selenite establishes a robust, extensible architecture for LLM-powered, context-grounded sensemaking scaffolding on the web, validated by both strong empirical metrics and high user adoption in behavioral studies. By combining global overviews, fine-grained automated annotation, and actionable guidance, Selenite provides a paradigm shift in how systems can lower the cost of entry into unfamiliar information spaces and support the active construction of comparative mental models.

The broader implication is a strengthening of interactive, human-in-the-loop AI collaboration protocols, where LLMs act as both collaborator and scaffold—raising the baseline for sensemaking and decision support in digital environments.

Figure 5: Example user study material: the "best baby strollers" article as used in option/criteria evaluation and user-guided information extraction analysis.