- The paper shows that optimized negative sampling, particularly using gradient embedding and BADGE, significantly improves classification performance.

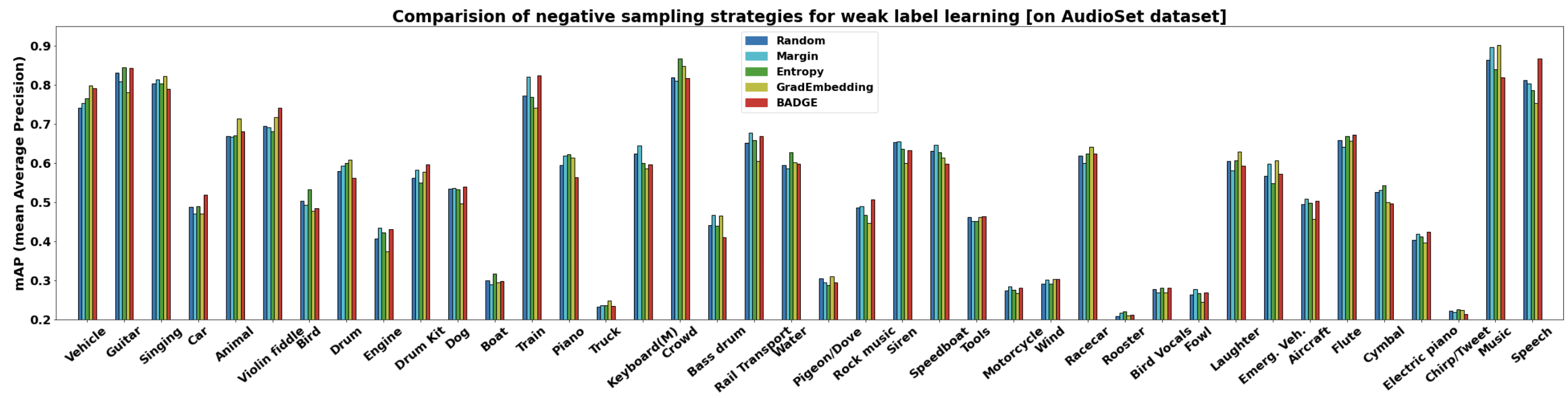

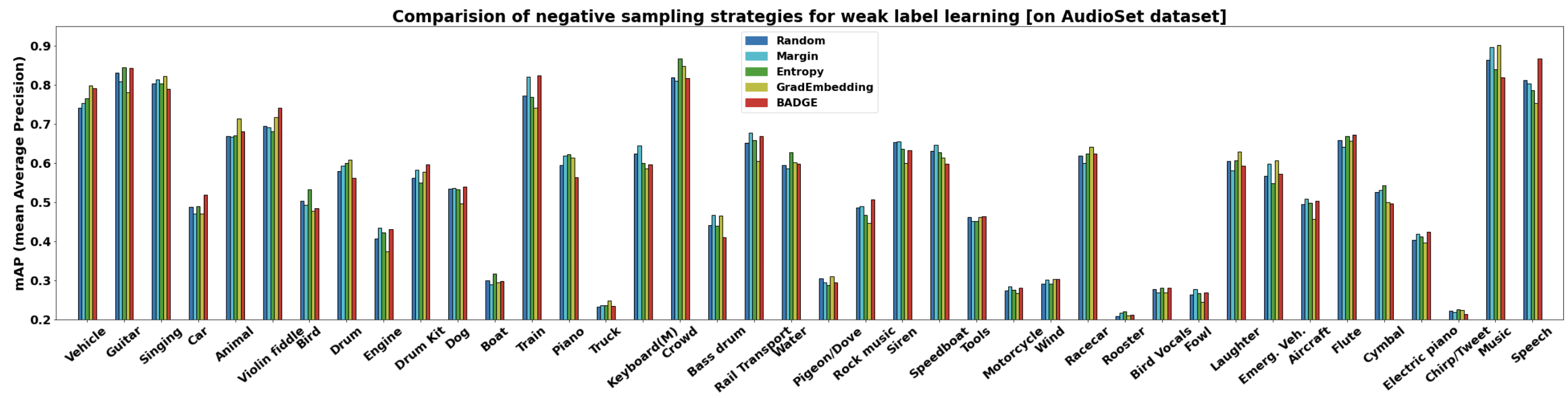

- It systematically compares five sampling methods (random, margin, entropy, gradient embedding, and BADGE) on the CIFAR-10 and AudioSet datasets.

- The study highlights the enhanced model efficiency and scalability achieved by leveraging informative negative samples in imbalanced weak-label scenarios.

Importance of Negative Sampling in Weak Label Learning

Introduction

The paper "Importance of Negative Sampling in Weak Label Learning" examines how negative sampling strategies affect weak-label learning systems. In weak-label learning, models are trained on data 'bags' that mix positive and negative instances, and only bag-level labels are available. The pool of negative instances is usually much larger than the pool of positives, which complicates training and makes it necessary to select the most informative negative samples. The paper evaluates several negative sampling methods on image and audio classification, using CIFAR-10 and AudioSet, and reports their effect on classification accuracy and computational efficiency.
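To make the bag setup concrete, the sketch below groups instance labels into fixed-size bags and derives one label per bag. It assumes the common multiple-instance convention that a bag is positive when it contains at least one instance of the target class; the function name `make_bags` and its parameters are illustrative and do not come from the paper.

```python
import random

def make_bags(instance_labels, target_class, bag_size, seed=0):
    """Group instances into bags and keep only a bag-level label.

    Assumption: a bag is positive if any member belongs to target_class.
    """
    rng = random.Random(seed)
    indices = list(range(len(instance_labels)))
    rng.shuffle(indices)
    bags = []
    for start in range(0, len(indices) - bag_size + 1, bag_size):
        members = indices[start:start + bag_size]
        label = int(any(instance_labels[i] == target_class for i in members))
        bags.append((members, label))
    return bags

# 30 toy instances spread evenly over 10 classes
labels = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9] * 3
bags = make_bags(labels, target_class=3, bag_size=5)
num_pos = sum(label for _, label in bags)
print(f"{num_pos} positive bags, {len(bags) - num_pos} negative bags")
```

Even in this toy case at least half of the bags are negative, which is the kind of imbalance that motivates choosing negatives selectively.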

Research in weak-label learning spans several areas and includes methods for learning from noisy, incomplete, or partially informative labels. Negative sampling has been studied for strongly labeled datasets, particularly in graph representation learning, but it remains underexplored in weak-label settings. The choice of sampling strategy directly affects training, because it determines whether the classifier learns from informative negative samples or from irrelevant ones. Active learning methods, which seek the greatest information gain from uncertain samples, offer techniques that can be adapted to negative sampling in weak-label scenarios.

Sampling Strategies and Methodology

The paper compares five negative sampling strategies: random, margin-based, entropy-based, gradient embedding, and BADGE. Each selects negative samples with a different balance of informativeness and diversity. Comparing against the random sampling baseline shows how tailored strategies can exploit the inherent imbalance of weak-label datasets. The study highlights the effectiveness of gradient embedding and BADGE across its experimental setups.

- Random Sampling: A baseline method where negative bags are chosen uniformly, serving as a control for other strategies.

- Margin Sampling: Selects bags with minimal positive-negative probability margins, highlighting uncertainty.

- Entropy Sampling: Prioritizes bags with high entropy, reflecting uncertain prediction distributions.

- Gradient Embedding: Uses the L2 norm of gradient embeddings to capture high-impact samples, encouraging informative selections.

- BADGE: Combines gradient-based uncertainty with k-means++ clustering, optimizing diversity alongside informativeness.
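The strategies listed above can be sketched as scoring functions over negative bags. This is a minimal illustration, not the paper's implementation: it assumes a binary classifier that outputs one positive-class probability per bag, a linear last layer trained with binary cross-entropy, and the model's own prediction as a hypothetical label for the gradient. The BADGE-style step uses k-means++ seeding over gradient embeddings and starts from the largest-norm embedding, a choice made here for determinism. All function names are hypothetical.

```python
import math
import random

def margin_score(p):
    """Gap between positive and negative probability; smaller = more uncertain."""
    return abs(p - (1.0 - p))

def entropy_score(p, eps=1e-12):
    """Binary entropy of the prediction; larger = more uncertain."""
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))

def gradient_embedding(p, features):
    """Gradient of binary cross-entropy w.r.t. last-layer weights,
    taking the model's own prediction as a hypothetical label (assumption)."""
    pseudo_label = 1.0 if p >= 0.5 else 0.0
    return [(p - pseudo_label) * h for h in features]

def l2_norm(vec):
    return math.sqrt(sum(v * v for v in vec))

def top_k(scores, k, largest=True):
    """Indices of the k largest (or smallest) scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=largest)
    return order[:k]

def badge_select(embeddings, k, seed=0):
    """k-means++ seeding: sample points proportionally to their squared
    distance from the nearest already-chosen embedding."""
    rng = random.Random(seed)
    n = len(embeddings)

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    chosen = [max(range(n), key=lambda i: l2_norm(embeddings[i]))]
    nearest = [sq_dist(e, embeddings[chosen[0]]) for e in embeddings]
    while len(chosen) < min(k, n) and sum(nearest) > 0:
        pick = rng.choices(range(n), weights=nearest, k=1)[0]
        chosen.append(pick)
        nearest = [min(d, sq_dist(e, embeddings[pick]))
                   for d, e in zip(nearest, embeddings)]
    return chosen

# Toy negative bags: predicted positive probability and penultimate features
probs = [0.05, 0.45, 0.55, 0.90, 0.30]
feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [0.0, 3.0]]

margin_pick = top_k([margin_score(p) for p in probs], 2, largest=False)
entropy_pick = top_k([entropy_score(p) for p in probs], 2)
embeds = [gradient_embedding(p, f) for p, f in zip(probs, feats)]
grad_pick = top_k([l2_norm(e) for e in embeds], 2)
badge_pick = badge_select(embeds, 2)
```

On this toy data, margin and entropy both favor the two bags nearest the decision boundary, while the gradient-norm ranking also depends on feature magnitude, so it can prefer a different set.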

Experiments and Results

Image Bag Classification

Experiments on CIFAR-10 show that strategies such as BADGE and gradient embedding improve classification metrics, including Average Precision (AP) and AUC-ROC, over the random sampling baseline. The network architectures used, including Simple CNN and ResNet18, were tailored to perform well under weak-label constraints.

Figure 1: Comparing different negative sampling strategies for weak label learning on AudioSet.
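For readers unfamiliar with the two metrics named above, the following sketch computes Average Precision and AUC-ROC from bag-level labels and scores. It is a plain-Python illustration that assumes binary labels, at least one positive and one negative example, and no tied scores in the AP ranking; the toy labels and scores are invented for the example and are not results from the paper.

```python
def average_precision(labels, scores):
    """Mean of the precision values at the rank of each positive example."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0

def auc_roc(labels, scores):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Invented toy predictions for five bags
toy_labels = [1, 0, 1, 0, 0]
toy_scores = [0.9, 0.8, 0.7, 0.3, 0.1]
ap = average_precision(toy_labels, toy_scores)
auc = auc_roc(toy_labels, toy_scores)
print(f"AP = {ap:.4f}, AUC-ROC = {auc:.4f}")  # both equal 5/6 here
```

AP rewards ranking positives near the top, so it is more sensitive than AUC-ROC to class imbalance, which is why the two are often reported together.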

Audio Classification

For audio tasks using AudioSet, preprocessing involved transforming clips into Mel spectrograms. Experiments confirmed that gradient-based strategies notably outperformed other sampling methods. Data augmentation techniques, such as MixUp and frequency masking, further supported robust classification results. Overall, the gradient embedding method demonstrated superior performance in more than half of the evaluated classes, underscoring its efficacy in diverse audio contexts.
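The two augmentations mentioned above can be sketched on a spectrogram stored as a list of frequency rows. This is an illustrative version under stated assumptions, not the paper's pipeline: the MixUp coefficient is drawn from a Beta(alpha, alpha) distribution with an arbitrary `alpha=0.4`, and the frequency mask zeroes one contiguous band of at most `max_width` Mel bins. Both parameter values are placeholders.

```python
import random

def mixup(x1, y1, x2, y2, alpha=0.4, rng=random):
    """Blend two spectrograms and their labels with a Beta-distributed weight."""
    lam = rng.betavariate(alpha, alpha)
    mixed_x = [[lam * a + (1.0 - lam) * b for a, b in zip(r1, r2)]
               for r1, r2 in zip(x1, x2)]
    mixed_y = lam * y1 + (1.0 - lam) * y2
    return mixed_x, mixed_y, lam

def frequency_mask(spec, max_width, rng=random, fill=0.0):
    """Overwrite one random contiguous band of frequency rows with `fill`."""
    n_mels = len(spec)
    width = rng.randint(0, min(max_width, n_mels))
    start = rng.randint(0, n_mels - width)
    return [[fill] * len(row) if start <= f < start + width else list(row)
            for f, row in enumerate(spec)]

rng = random.Random(0)
spec_a = [[1.0] * 3 for _ in range(4)]   # 4 Mel bins x 3 time frames
spec_b = [[0.0] * 3 for _ in range(4)]

mixed, mixed_label, lam = mixup(spec_a, 1.0, spec_b, 0.0, rng=rng)
masked = frequency_mask(spec_a, max_width=2, rng=rng)
```

Both functions return new lists and leave their inputs unchanged, so the same clip can be augmented differently on each pass over the data.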

Conclusion

The paper establishes the critical role of negative sampling in improving weak-label learning systems. Strategies like gradient embedding and BADGE advance the effectiveness of models, particularly when faced with imbalanced datasets prevalent in real-world applications. Future directions may explore the scalability of these methods across more extensive datasets and computational configurations, further refining the integration of weak-label learning in practical scenarios.