- The paper presents a comprehensive framework for LLM-based agents that integrates perception, reasoning, and action at both individual and societal levels.

- It details modular architecture enhancements such as extended memory, multimodal encoding, and tool integration to support flexible agent behaviors.

- It identifies key challenges including robustness, safety, and sim-to-real transfer, providing a roadmap for future research in agent development.

The Rise and Potential of LLM Based Agents

Introduction and Motivation

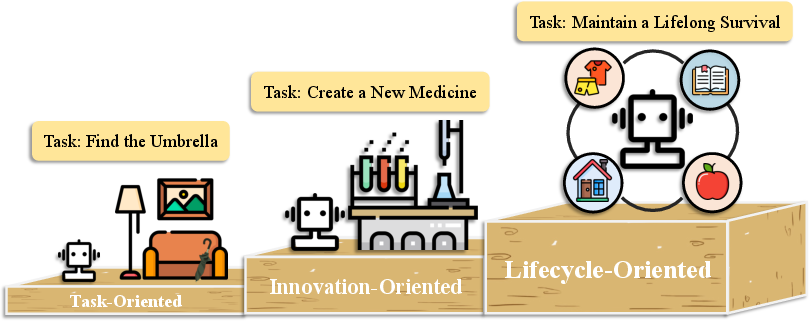

"The Rise and Potential of Large Language Model Based Agents: A Survey" (2309.07864) presents a comprehensive analysis of the trajectory, design, capabilities, and open challenges of LLM-based agents as a foundational element of next-generation AI. The manuscript combines a historical perspective, a modular systems view, application scenarios, and a synthesis of work on agent societies, covering both the micro level (individual cognition) and the macro level (social and societal behavior). By examining both architectural and behavioral paradigms, the survey offers a reference framework for researchers and engineers building cognitive agents that adapt across environments, social settings, and tasks.

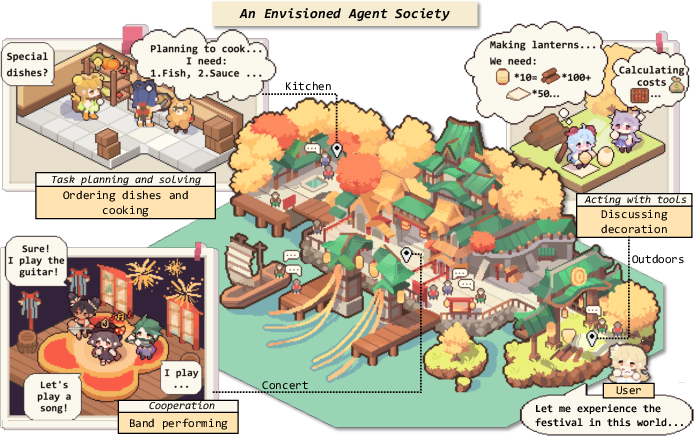

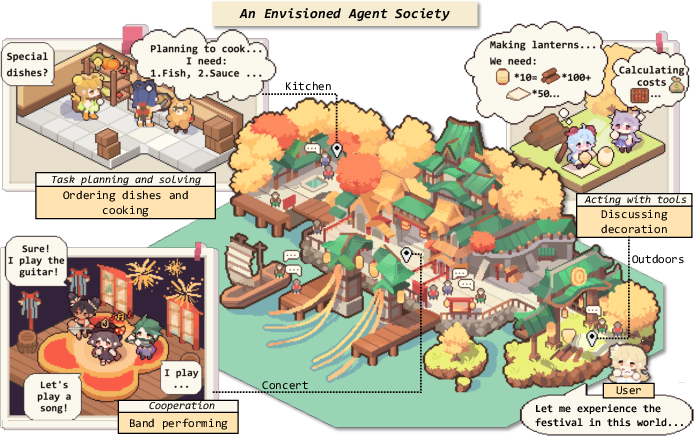

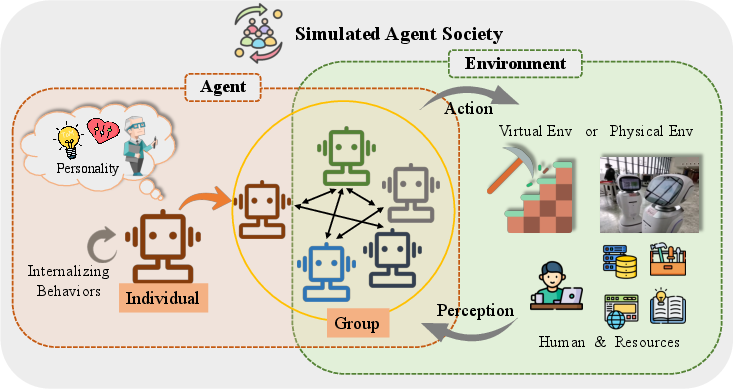

Figure 1: An envisioned society with LLM-based AI agents exhibiting collaborative and interactive behavior with humans across diverse, multimodal scenarios.

From Agents to LLM-based Agents: Conceptual and Technical Foundations

Conventional agent research is rooted in the triad of perception, decision-making, and action, and has progressed from disembodied, symbolic agents to RL-based and meta-learning agents. The current paradigm shift stems from capabilities that emerge in LLMs: natural language interaction, robust multimodal reasoning, autonomy, and social ability. The survey unifies these threads in a modular LLM-agent architecture comprising:

- Brain: LLM-driven cognitive and control core.

- Perception: Multimodal sensing—textual, visual, auditory, spatial, gestural inputs.

- Action: Output modalities spanning text, tool usage, embodied/robotic control.
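The brain/perception/action decomposition can be pictured as a simple control loop. The following is a minimal sketch under stated assumptions, not the survey's implementation: `Agent`, `Observation`, the `"tool_name: argument"` action format, and `toy_brain` are hypothetical, and a real system would replace `brain` with an LLM call and `perceive` with multimodal encoders.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Observation:
    modality: str  # e.g. "text" or "image" (hypothetical labels)
    content: str   # assumed already converted to text for the brain


@dataclass
class Agent:
    brain: Callable[[str], str]  # stand-in for an LLM: prompt -> decision
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

    def perceive(self, obs: Observation) -> str:
        # Perception: normalize any modality into a text percept.
        return f"[{obs.modality}] {obs.content}"

    def act(self, decision: str) -> str:
        # Action: "tool_name: argument" invokes a registered tool;
        # any other decision is returned as a plain-text reply.
        name, sep, arg = decision.partition(":")
        if sep and name.strip() in self.tools:
            return self.tools[name.strip()](arg.strip())
        return decision

    def step(self, obs: Observation) -> str:
        # One perceive -> think -> act cycle; history serves as short-term memory.
        self.history.append(self.perceive(obs))
        decision = self.brain("\n".join(self.history))
        result = self.act(decision)
        self.history.append(result)
        return result


def toy_brain(prompt: str) -> str:
    # Hypothetical rule in place of an LLM: uppercase the latest percept via a tool.
    return f"upper: {prompt.splitlines()[-1]}"


agent = Agent(brain=toy_brain, tools={"upper": str.upper})
print(agent.step(Observation("text", "hello")))  # [TEXT] HELLO
```

Keeping the three modules as separate methods mirrors the modular view: any one of them can be swapped (a vision encoder for `perceive`, a robot controller for `act`) without touching the others.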

The review delineates how architectural augmentations—memory extension, multi-modal encoders, tool-chaining, and external world modeling—substantially enhance agent generalization, adaptability, and environmental grounding compared to prior approaches.

Key Claims

- LLM-based agents can blend the strengths of symbolic systems (logical reasoning, compositionality), reactive agents (fast environmental adaptation), and RL/meta-learning agents (policy generalization) with the unprecedented language-centric generalization and communication abilities of modern LLMs.

- The LLM component enables emergent properties such as social reasoning, context-sensitive planning, and human-aligned interaction, thereby bridging fundamental gaps in prior agent architectures.

Modular Framework: Design and Mechanisms

The survey details system-level blueprints and inter-module flows, with substantial technical focus on:

Brain (LLM Core)

- Language Understanding/Generation: State-of-the-art performance in contextually grounded multi-turn dialogue, cross-lingual fluency, and natural-language instruction following.

- Memory: Mechanisms for extended context (attention scaling, summarization, vector memory, data structures), persistent knowledge (embedding-based or retrieval-augmented memory), and memory editing (to address outdated or incorrect knowledge).

- Reasoning/Planning: Techniques such as Chain-of-Thought, Tree-of-Thoughts, Self-Consistency, Plan-Reflection, and tool-based reasoning improve accuracy on complex, multi-stage tasks.

- Transferability: In-context learning (ICL) and zero/few-shot adaptation remain key to generalizing to unseen tasks (cf. FLAN, T0, InstructGPT), while continual learning methods are actively researched to overcome catastrophic forgetting.
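The vector-memory idea from the Memory bullet can be illustrated with a toy retrieval store: past observations are embedded, and the entries most similar to the current query are retrieved for inclusion in the prompt. In this hedged sketch, `embed` is a hypothetical bag-of-words stand-in for a learned embedding model, and `VectorMemory` is an illustrative name rather than a specific library.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical bag-of-words "embedding"; a real agent would call a learned
    # sentence-embedding model here.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Append-only memory; retrieval returns the k entries most similar to a query."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def write(self, text: str) -> None:
        self.entries.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.entries, key=lambda entry: cosine(q, entry[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.write("the user prefers vegetarian recipes")
memory.write("the meeting moved to friday")
memory.write("the user is allergic to peanuts")
print(memory.retrieve("what recipes does the user like", k=1))
# ['the user prefers vegetarian recipes']
```

Retrieval keeps the prompt short: only the top-k memories are re-injected, which is how such stores extend an agent's effective memory beyond the context window.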
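Self-Consistency, one of the reasoning techniques listed in the Reasoning/Planning bullet, samples several reasoning chains and keeps the most frequent final answer. The sketch assumes a hypothetical `noisy_chain` sampler that stands in for one temperature-sampled LLM chain and returns only its extracted answer; the 0.6 accuracy is an arbitrary illustrative value.

```python
import random
from collections import Counter
from typing import Callable


def self_consistency(sample: Callable[[random.Random], str], n: int = 10, seed: int = 0) -> str:
    """Majority vote over the final answers of n independently sampled chains."""
    rng = random.Random(seed)
    answers = [sample(rng) for _ in range(n)]
    # most_common(1) -> [(answer, count)]; ties go to the answer seen first
    return Counter(answers).most_common(1)[0][0]


def noisy_chain(rng: random.Random) -> str:
    # Hypothetical stand-in for one sampled chain-of-thought: returns only
    # the extracted final answer, correct ("42") with probability 0.6.
    return "42" if rng.random() < 0.6 else rng.choice(["41", "43", "24"])


print(self_consistency(noisy_chain, n=25))
```

Because the vote looks only at final answers, individual chains may reason differently and still agree; errors that are scattered across several wrong answers rarely outvote a consistently reached correct one.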

Perception

- Text/Visual/Audio Inputs: Encoders and fusion components (ViT encoders, the Q-Former used in BLIP-2, projection heads) map multimodal signals into LLM-compatible embeddings, enabling fine-grained perception and affordance recognition.

- Environmental and Embodiment Extensions: Support for richer 3D, gesture, physiological, and spatial data—crucial for physical/robotic agents.
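The simplest of the fusion components mentioned under Text/Visual/Audio Inputs is a projection head that maps vision-encoder patch features into the LLM's token-embedding space, so image patches enter the input sequence as "soft tokens". This sketch assumes toy dimensions and a randomly initialized (untrained) linear map; a Q-Former instead uses learned queries with cross-attention, which is not shown here.

```python
import random

D_VISION, D_MODEL = 8, 4  # toy sizes; real encoders and LLMs use far larger dimensions


def make_projection(d_in: int, d_out: int, seed: int = 0):
    """Randomly initialized linear map R^d_in -> R^d_out (it would be trained in practice)."""
    rng = random.Random(seed)
    weight = [[rng.gauss(0.0, d_in ** -0.5) for _ in range(d_in)] for _ in range(d_out)]
    bias = [0.0] * d_out

    def project(x: list[float]) -> list[float]:
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

    return project


def build_input_sequence(patch_features, text_embeddings, project):
    # Projected patches become "soft tokens" prepended to the text token embeddings,
    # so the LLM receives a single sequence of D_MODEL-dimensional vectors.
    visual_tokens = [project(p) for p in patch_features]
    return visual_tokens + text_embeddings


rng = random.Random(1)
patches = [[rng.random() for _ in range(D_VISION)] for _ in range(3)]  # 3 image patches
text = [[0.0] * D_MODEL for _ in range(5)]                            # 5 text-token embeddings
sequence = build_input_sequence(patches, text, make_projection(D_VISION, D_MODEL))
print(len(sequence), len(sequence[0]))  # 8 4
```

The design choice here is that only the projection needs to learn the mapping; the vision encoder and the LLM can stay frozen, which is one reason lightweight projection heads are popular.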

Action

- Language and Tool Usage: Integration of tool APIs (retrieval, search, DBs, code execution, scientific tools) and action planning sequences (via LLM reasoning and skill library composition).

- Physical/Embodied Control: Methods such as SayCan, instruction guidance, and hierarchical control have driven progress in virtual worlds (e.g., Minecraft and simulated towns populated by AI agents), with increasing transfer to real-world robotics.
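Tool integration often reduces to a registry plus a dispatcher that executes calls the LLM emits in a structured format. The JSON call format `{"tool": ..., "args": {...}}`, the `tool` decorator, and the two toy tools below are assumptions for illustration, not any particular framework's API.

```python
import json
from typing import Callable

TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function under a name the LLM may call."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("add")
def add(a: float, b: float) -> str:
    return str(a + b)


@tool("lookup")
def lookup(key: str) -> str:
    kb = {"capital_of_france": "Paris"}  # toy knowledge base (hypothetical)
    return kb.get(key, "unknown")


def dispatch(llm_output: str) -> str:
    """Execute a tool call written by the LLM as JSON: {"tool": ..., "args": {...}}."""
    try:
        call = json.loads(llm_output)
        fn = TOOLS[call["tool"]]
        return fn(**call.get("args", {}))
    except (json.JSONDecodeError, KeyError, TypeError) as err:
        # Errors are returned as text so the agent can observe them and retry.
        return f"tool error: {type(err).__name__}"


print(dispatch('{"tool": "add", "args": {"a": 2, "b": 3}}'))                  # 5
print(dispatch('{"tool": "lookup", "args": {"key": "capital_of_france"}}'))   # Paris
print(dispatch('{"tool": "search", "args": {}}'))                             # tool error: KeyError
```

Returning errors as observations rather than raising keeps the agent loop alive: the LLM sees the failure in its next prompt and can correct the call.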
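SayCan grounds an LLM planner by multiplying two scores per candidate skill: the language model's estimate that the skill is useful for the instruction, and a learned affordance (value) function's estimate that the skill can succeed in the current state. In this sketch both score tables are made-up numbers standing in for those models, and `saycan_select` is a hypothetical helper name.

```python
def saycan_select(llm_scores: dict[str, float], affordances: dict[str, float]) -> str:
    """Return the skill maximizing p_LLM(useful | instruction) * p_affordance(success | state)."""
    return max(llm_scores, key=lambda skill: llm_scores[skill] * affordances.get(skill, 0.0))


# Made-up scores for the instruction "I spilled my drink, can you help?"
llm_scores = {"pick up the sponge": 0.50, "find a sponge": 0.30, "go to the kitchen": 0.20}
# Made-up affordances: no sponge is within reach yet, so picking one up is unlikely to succeed.
affordances = {"pick up the sponge": 0.05, "find a sponge": 0.90, "go to the kitchen": 0.80}

print(max(llm_scores, key=llm_scores.get))    # pick up the sponge (LLM alone)
print(saycan_select(llm_scores, affordances))  # find a sponge (combined score)
```

The LLM alone would pick "pick up the sponge", but its low affordance shifts the choice to "find a sponge" (0.30 × 0.90 = 0.27 versus 0.50 × 0.05 = 0.025). In SayCan this selection repeats step by step, with each chosen skill appended to the plan.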

Application Scenarios

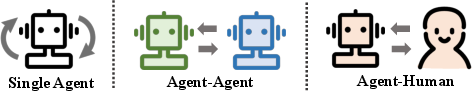

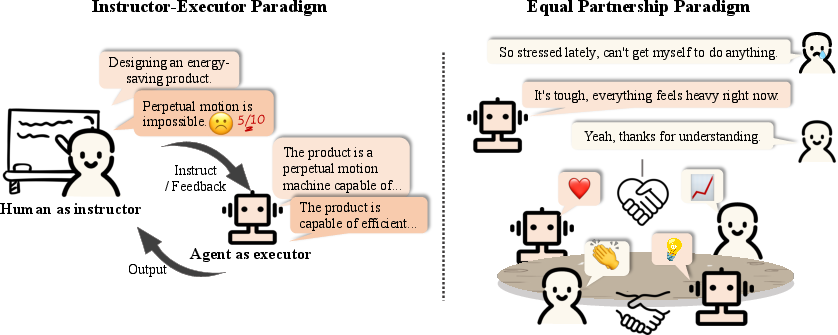

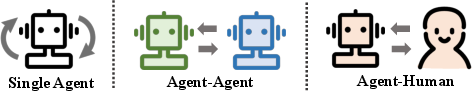

The survey organizes application scenarios into a taxonomy of single-agent, multi-agent, and human-agent systems:

Figure 2: Topologies of deployment: single LLM-agent systems, multi-agent societies, and human-agent collaborative environments.

Single-Agent Systems

Multi-Agent Systems

Human-Agent Interaction

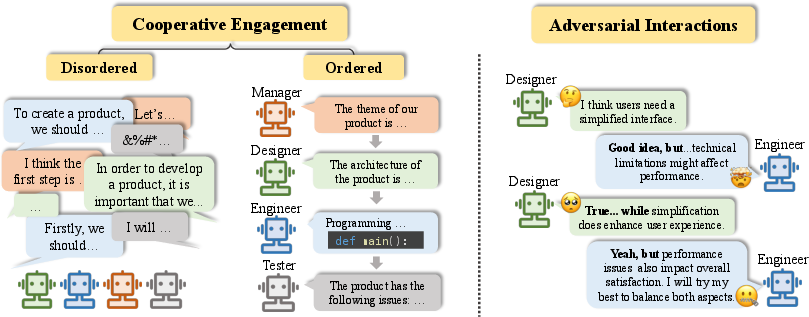

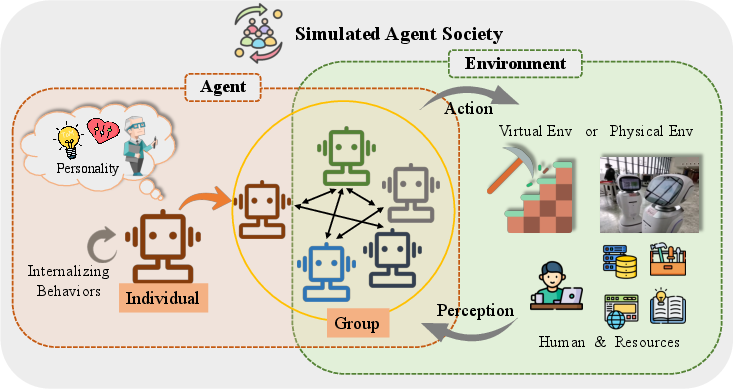

Agent Societies: Emergence, Simulation, and Social Phenomena

The survey advances to macro-level phenomena, including simulated societies and collective intelligence. LLM-agents, individually endowed with personalities (cognition, affect, character) and social capabilities, can form emergent group behaviors and social networks within both virtual and physical environments.

Figure 6: Multi-scale overview of an agent society: from internal cognition to group interaction and environmental integration.

Key properties include:

- Openness: Agents and environmental resources are dynamic, allowing real-world or synthetic population modeling.

- Persistence and Situatedness: Societal simulation is continuous, context-driven, and rule-based; agents possess spatial and temporal awareness.

- Organization: Rule-abiding, role-based interaction enables rich social phenomena, such as cooperative task execution, competitive games, network propagation, and ethical/moral decision-making.

Notably, studies demonstrate:

- Emergent social structures and collective behaviors such as coordinated problem-solving, adversarial correction (debate-based bias mitigation), and value propagation.

- Alignment with social values: Studies also underscore the need for explicit evaluation and alignment with human societal norms, bias calibration, and mitigation of hallucinations and unethical behavior.
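The debate-based correction listed among these findings can be illustrated with a toy loop in which agents see their peers' previous answers, revise their own, and a majority vote decides. The `stubborn` and `conformist` policies are hypothetical rule-based stand-ins for LLM agents; real debate setups exchange full arguments rather than bare answers.

```python
from collections import Counter
from typing import Callable, Sequence

# Hypothetical agent interface: (question, peers' previous answers) -> answer.
AgentFn = Callable[[str, Sequence[str]], str]


def debate(agents: Sequence[AgentFn], question: str, rounds: int = 2) -> tuple[str, list[list[str]]]:
    answers = [agent(question, []) for agent in agents]  # independent first answers
    transcript = [answers]
    for _ in range(rounds):
        # Each agent sees only its peers' answers from the previous round.
        answers = [agent(question, answers[:i] + answers[i + 1:]) for i, agent in enumerate(agents)]
        transcript.append(answers)
    final = Counter(answers).most_common(1)[0][0]  # majority vote after the last round
    return final, transcript


def stubborn(answer: str) -> AgentFn:
    # Always repeats the same answer, regardless of peers.
    return lambda question, peers: answer


def conformist(initial: str) -> AgentFn:
    # Adopts the peers' strict-majority answer if one exists, else keeps its initial answer.
    def agent(question: str, peers: Sequence[str]) -> str:
        if not peers:
            return initial
        top, count = Counter(peers).most_common(1)[0]
        return top if count > len(peers) / 2 else initial
    return agent


final, transcript = debate([stubborn("B"), conformist("A"), conformist("B")], "toy question")
print(final, transcript[0], transcript[-1])  # B ['B', 'A', 'B'] ['B', 'B', 'B']
```

The agent that starts with the divergent answer "A" switches after one round of seeing its peers, which is the correction effect in miniature; the same mechanism can also propagate a wrong majority, one reason the survey stresses explicit evaluation.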

Challenges and Open Problems

The survey identifies several persistent and emerging open challenges including:

- Robustness and Safety: LLM-agents remain vulnerable to adversarial inputs, prompt injection, and knowledge poisoning, which can be especially damaging for multimodal or tool-augmented agents.

- Evaluation: Rigorous and multi-dimensional benchmarks assessing utility, sociability, value alignment, and continual adaptation are underdeveloped.

- Society Scaling: Scaling to large agent societies introduces computational, coordination, and information propagation complexities (e.g., information dilution/amplification, network bias).

- Sim-to-Real Transfer: Bridging virtual-physical gaps in perception and action for embodied agents requires hardware-adaptive designs and physically plausible simulation.

- Autonomy vs. Control: Ensuring agent autonomy does not undermine human alignment or control; safeguarding against unanticipated, unsafe emergent phenomena in open environments and societies.

Implications and Future Directions

The systematic treatment in this survey positions LLM-based agents as a central paradigm for artificial intelligence and a promising path toward AGI at both the agent and society levels. In practice, LLM-agents apply broadly: from autonomous robotics and creative tasks to social simulation, scientific reasoning, and personalized digital assistance.

On the theoretical plane, the agent society abstraction paves the way for computational social science experiments, exploration of collective intelligence, and aligned value learning.

Future research directions are likely to emphasize:

- Enhanced evaluation frameworks for complex, evolving behavior and societal effects.

- More efficient algorithms and system design for large-scale, multi-agent, and multimodal architectures.

- Deeper alignment of agent behavior with human values, legal/ethical standards, and robust safety/monitoring.

- Bridging simulation and real-world deployment.

- Exploration of agents as universally composable services (Agent-as-a-Service) in cloud ecosystems.

Conclusion

This survey traces the rapid emergence of LLM-based agents as a coherent, modular, and versatile paradigm that unifies perception, cognition, and action across single- and multi-agent configurations and extends to full simulated societies. Its framework, challenges, and open problems offer a roadmap for integrating LLM-agents into research and into complex, safety-critical applications across the broader AI landscape.