PointLLM: Empowering Large Language Models to Understand Point Clouds (2308.16911v3)

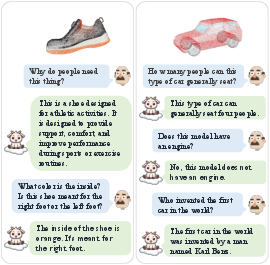

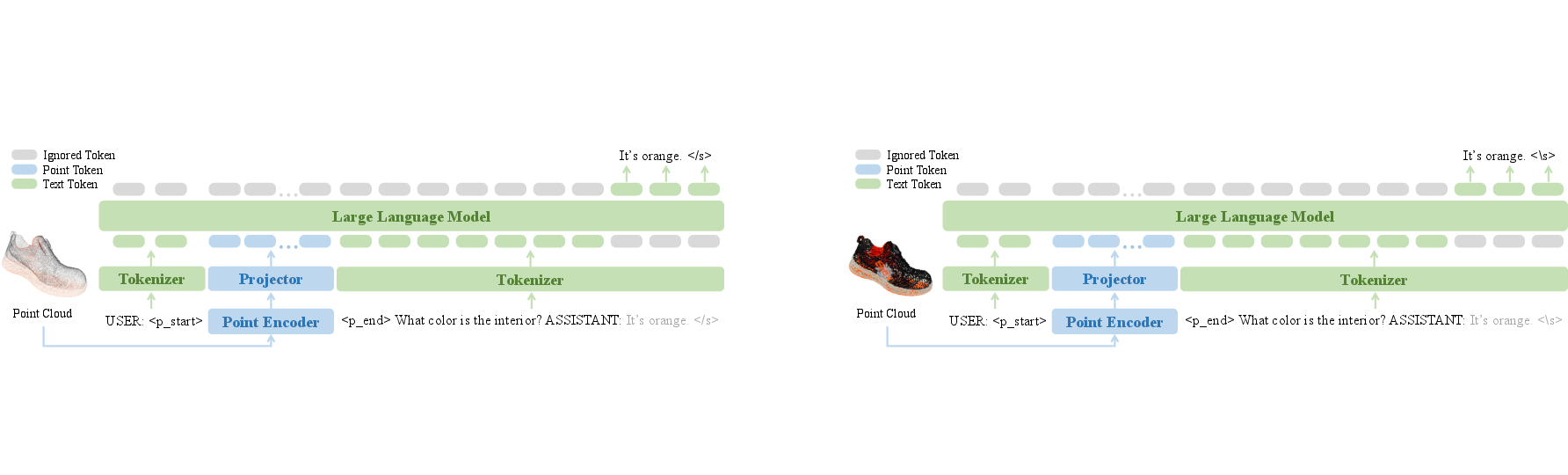

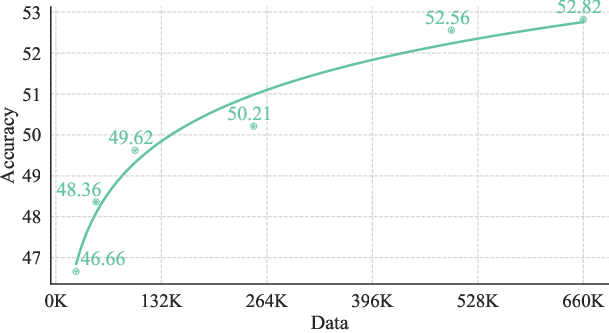

Abstract: The unprecedented advancements in LLMs have shown a profound impact on natural language processing but are yet to fully embrace the realm of 3D understanding. This paper introduces PointLLM, a preliminary effort to fill this gap, enabling LLMs to understand point clouds and offering a new avenue beyond 2D visual data. PointLLM understands colored object point clouds with human instructions and generates contextually appropriate responses, illustrating its grasp of point clouds and common sense. Specifically, it leverages a point cloud encoder with a powerful LLM to effectively fuse geometric, appearance, and linguistic information. We collect a novel dataset comprising 660K simple and 70K complex point-text instruction pairs to enable a two-stage training strategy: aligning latent spaces and subsequently instruction-tuning the unified model. To rigorously evaluate the perceptual and generalization capabilities of PointLLM, we establish two benchmarks: Generative 3D Object Classification and 3D Object Captioning, assessed through three different methods, including human evaluation, GPT-4/ChatGPT evaluation, and traditional metrics. Experimental results reveal PointLLM's superior performance over existing 2D and 3D baselines, with a notable achievement in human-evaluated object captioning tasks where it surpasses human annotators in over 50% of the samples. Codes, datasets, and benchmarks are available at https://github.com/OpenRobotLab/PointLLM .

- Flamingo: a visual language model for few-shot learning. In NeurIPS, 2022.

- Anonymous. Text-to-3d generation with bidirectional diffusion using both 3d and 2d priors. https://openreview.net/forum?id=V8PhVhb4pp, 2023.

- Openflamingo: An open-source framework for training large autoregressive vision-language models. arXiv:2308.01390, 2023.

- METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In ACL Workshop, 2005.

- Improving image generation with better captions. https://cdn.openai.com/papers/dall-e-3.pdf, 2023.

- Language models are few-shot learners. In NeurIPS, 2020.

- Vicuna: An open-source chatbot impressing gpt-4 with 90%* chatgpt quality, 2023.

- Palm: Scaling language modeling with pathways. arXiv:2204.02311, 2022.

- Instructblip: Towards general-purpose vision-language models with instruction tuning. arXiv:2305.06500, 2023.

- Flashattention: Fast and memory-efficient exact attention with io-awareness. In NeurIPS, 2022.

- Objaverse: A universe of annotated 3d objects. In CVPR, 2023.

- Palm-e: An embodied multimodal language model. arXiv:2303.03378, 2023.

- Datacomp: In search of the next generation of multimodal datasets. arXiv:2304.14108, 2023.

- Llama-adapter v2: Parameter-efficient visual instruction model. arXiv:2304.15010, 2023.

- Simcse: Simple contrastive learning of sentence embeddings. arXiv:2104.08821, 2021.

- Imagebind: One embedding space to bind them all. In CVPR, 2023.

- Multimodal-gpt: A vision and language model for dialogue with humans. arXiv:2305.04790, 2023.

- Point-bind & point-llm: Aligning point cloud with multi-modality for 3d understanding, generation, and instruction following. arXiv preprint arXiv:2309.00615, 2023.

- Lvis: A dataset for large vocabulary instance segmentation. In CVPR, 2019.

- Visual programming: Compositional visual reasoning without training. In CVPR, 2023.

- Imagebind-llm: Multi-modality instruction tuning. arXiv:2309.03905, 2023.

- Language models are general-purpose interfaces. arXiv:2206.06336, 2022.

- Clip goes 3d: Leveraging prompt tuning for language grounded 3d recognition. In ICCV, 2023.

- Gaussian error linear units (gelus). arXiv:1606.08415, 2016.

- 3d-llm: Injecting the 3d world into large language models. NeurIPS, 2023.

- Audiogpt: Understanding and generating speech, music, sound, and talking head. arXiv:2304.12995, 2023a.

- Language is not all you need: Aligning perception with language models. arXiv:2302.14045, 2023b.

- Clip2point: Transfer clip to point cloud classification with image-depth pre-training. In ICCV, 2023c.

- Openclip, 2021.

- Motiongpt: Human motion as a foreign language. arXiv:2306.14795, 2023.

- Shap-e: Generating conditional 3d implicit functions. arXiv preprint arXiv:2305.02463, 2023.

- Segment anything. ICCV, 2023.

- Mimic-it: Multi-modal in-context instruction tuning. arXiv:2306.05425, 2023a.

- Blip: Bootstrapping language-image pre-training for unified vision-language understanding and generation. 2022.

- Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv:2301.12597, 2023b.

- Chin-Yew Lin. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out, 2004.

- Visual instruction tuning. arXiv:2304.08485, 2023a.

- Openshape: Scaling up 3d shape representation towards open-world understanding. arXiv preprint arXiv:2305.10764, 2023b.

- Decoupled weight decay regularization. In ICLR, 2019.

- Scalable 3d captioning with pretrained models. arXiv:2306.07279, 2023.

- Point-e: A system for generating 3d point clouds from complex prompts. arXiv:2212.08751, 2022.

- OpenAI. Chatgpt. https://openai.com/blog/chatgpt, 2022.

- OpenAI. Gpt-4 technical report. arXiv:2303.08774, 2023.

- Training language models to follow instructions with human feedback. In NeurIPS, 2022.

- Bleu: a method for automatic evaluation of machine translation. In ACL, 2002.

- Gorilla: Large language model connected with massive apis. arXiv preprint arXiv:2305.15334, 2023.

- Kosmos-2: Grounding multimodal large language models to the world. arXiv:2306.14824, 2023.

- Pointnet: Deep learning on point sets for 3d classification and segmentation. In CVPR, 2017.

- Learning transferable visual models from natural language supervision. 2021.

- Exploring the limits of transfer learning with a unified text-to-text transformer. In JMLR, 2020.

- Sentence-bert: Sentence embeddings using siamese bert-networks. arXiv:1908.10084, 2019.

- High-resolution image synthesis with latent diffusion models. In CVPR, 2022.

- Pandagpt: One model to instruction-follow them all. arXiv:2305.16355, 2023.

- Unig3d: A unified 3d object generation dataset. arXiv:2306.10730, 2023.

- Vipergpt: Visual inference via python execution for reasoning. arXiv:2303.08128, 2023.

- InternLM Team. Internlm: A multilingual language model with progressively enhanced capabilities. https://github.com/InternLM/InternLM, 2023.

- Llama: Open and efficient foundation language models. arXiv:2302.13971, 2023.

- Attention is all you need. NeurIPS, 2017.

- Beyond first impressions: Integrating joint multi-modal cues for comprehensive 3d representation. In ACM MM, 2023a.

- Visionllm: Large language model is also an open-ended decoder for vision-centric tasks. arXiv:2305.11175, 2023b.

- Self-instruct: Aligning language model with self generated instructions. arXiv:2212.10560, 2022.

- Finetuned language models are zero-shot learners. In ICLR, 2021.

- Visual chatgpt: Talking, drawing and editing with visual foundation models. arXiv:2303.04671, 2023.

- 3d shapenets: A deep representation for volumetric shapes. In CVPR, 2015.

- Ulip: Learning a unified representation of language, images, and point clouds for 3d understanding. In CVPR, 2023a.

- Ulip-2: Towards scalable multimodal pre-training for 3d understanding. arXiv:2305.08275, 2023b.

- A survey on multimodal large language models. arXiv:2306.13549, 2023.

- Point-bert: Pre-training 3d point cloud transformers with masked point modeling. In CVPR, 2022.

- Pointclip: Point cloud understanding by clip. In CVPR, 2022.

- Llama-adapter: Efficient fine-tuning of language models with zero-init attention. arXiv:2303.16199, 2023a.

- Gpt4roi: Instruction tuning large language model on region-of-interest. arXiv:2307.03601, 2023b.

- Minigpt-4: Enhancing vision-language understanding with advanced large language models. arXiv:2304.10592, 2023a.

- Pointclip v2: Prompting clip and gpt for powerful 3d open-world learning. In ICCV, 2023b.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.