Shedding Light on CVSS Scoring Inconsistencies: A User-Centric Study on Evaluating Widespread Security Vulnerabilities (2308.15259v2)

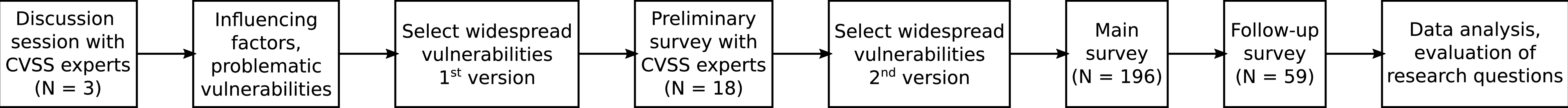

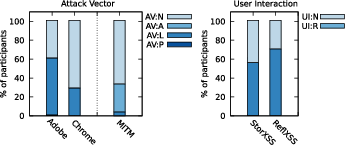

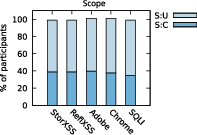

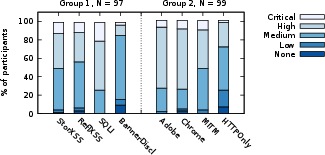

Abstract: The Common Vulnerability Scoring System (CVSS) is a popular method for evaluating the severity of vulnerabilities in vulnerability management. In the evaluation process, a numeric score between 0 and 10 is calculated, with 10 being the most severe (critical) value. The goal of CVSS is to provide comparable scores across different evaluators. However, previous work indicates that CVSS might not reach this goal: if a vulnerability is evaluated by several analysts, their scores often differ. This raises the following questions: Are CVSS evaluations consistent? Which factors influence CVSS assessments? We systematically investigate these questions in an online survey with 196 CVSS users. We show that specific CVSS metrics are evaluated inconsistently for widespread vulnerability types, including the top 3 vulnerabilities from the "2022 CWE Top 25 Most Dangerous Software Weaknesses" list. In a follow-up survey with 59 participants, we found that 68% of these users gave different severity ratings for the same vulnerabilities from the main study. Our study reveals that most evaluators are aware of the problematic aspects of CVSS, but they still see CVSS as a useful tool for vulnerability assessment. Finally, we discuss possible reasons for inconsistent evaluations and provide recommendations for improving the consistency of scoring.
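To make the 0 to 10 score concrete, here is a minimal sketch of how a CVSS v3.1 base score could be computed from a vector string, following the base-metric formulas and the qualitative severity scale published in the FIRST CVSS v3.1 specification. It is not code from the study; it covers base metrics only (no temporal or environmental metrics), and the function names `parse_vector`, `base_score`, `roundup`, and `severity` are hypothetical, chosen for illustration.

```python
import math

# Base-metric weights as listed in the CVSS v3.1 specification.
WEIGHTS = {
    "AV": {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.20},
    "AC": {"L": 0.77, "H": 0.44},
    "UI": {"N": 0.85, "R": 0.62},
    "CIA": {"H": 0.56, "L": 0.22, "N": 0.0},
}
# Privileges Required depends on Scope: (scope unchanged, scope changed).
PR_WEIGHTS = {"N": (0.85, 0.85), "L": (0.62, 0.68), "H": (0.27, 0.50)}


def roundup(value: float) -> float:
    """Smallest one-decimal number >= value, using the integer trick
    from the v3.1 specification to avoid floating-point artifacts."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def parse_vector(vector: str) -> dict:
    """Split 'CVSS:3.1/AV:N/AC:L/...' into {'AV': 'N', 'AC': 'L', ...}."""
    parts = vector.split("/")
    if parts[0] != "CVSS:3.1":
        raise ValueError("expected a CVSS:3.1 vector string")
    return dict(part.split(":", 1) for part in parts[1:])


def base_score(vector: str) -> float:
    m = parse_vector(vector)
    changed = m["S"] == "C"
    # Impact Sub-Score combines Confidentiality, Integrity, Availability.
    iss = 1 - ((1 - WEIGHTS["CIA"][m["C"]])
               * (1 - WEIGHTS["CIA"][m["I"]])
               * (1 - WEIGHTS["CIA"][m["A"]]))
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    exploitability = (8.22 * WEIGHTS["AV"][m["AV"]] * WEIGHTS["AC"][m["AC"]]
                      * PR_WEIGHTS[m["PR"]][1 if changed else 0]
                      * WEIGHTS["UI"][m["UI"]])
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


def severity(score: float) -> str:
    """Map a score to the v3.1 qualitative severity rating scale."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# Two evaluators who disagree only on Attack Vector (Network vs. Local):
a = base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
b = base_score("CVSS:3.1/AV:L/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
print(a, severity(a))  # 9.8 Critical
print(b, severity(b))  # 8.4 High
```

The usage lines illustrate, under these assumptions, why metric-level disagreement matters: a single differing metric choice is enough to move the same vulnerability from the Critical to the High severity band.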
