A Survey on Large Language Model based Autonomous Agents

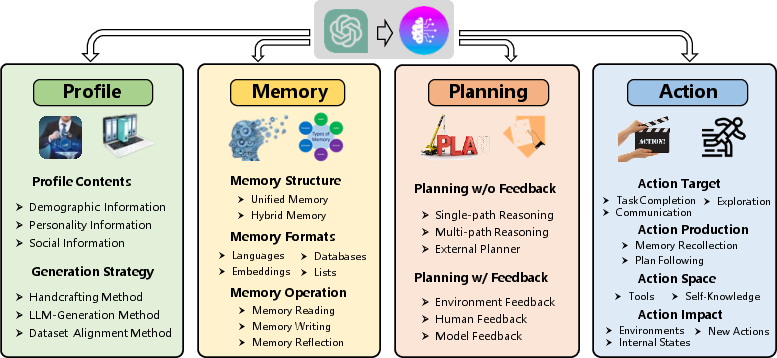

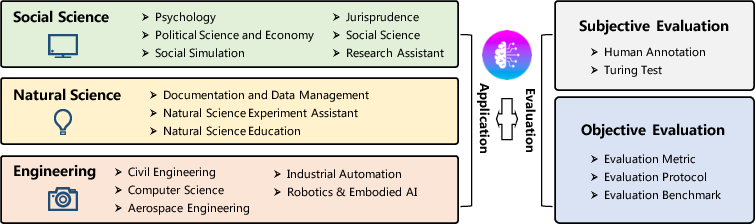

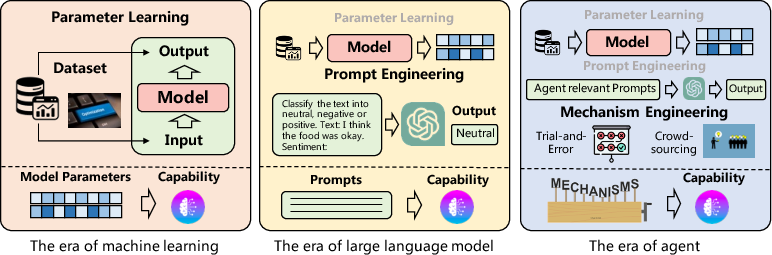

Abstract: Autonomous agents have long been a prominent research focus in both academia and industry. Previous research in this field has often focused on training agents with limited knowledge in isolated environments, which diverges significantly from human learning processes and makes it hard for the agents to reach human-like decisions. Recently, through the acquisition of vast amounts of web knowledge, large language models (LLMs) have demonstrated remarkable potential for achieving human-level intelligence. This has sparked an upsurge in studies investigating LLM-based autonomous agents. In this paper, we present a comprehensive survey of these studies, delivering a systematic review of the field of LLM-based autonomous agents from a holistic perspective. More specifically, we first discuss the construction of LLM-based autonomous agents, for which we propose a unified framework that encompasses a majority of the previous work. Then, we present a comprehensive overview of the diverse applications of LLM-based autonomous agents in the fields of social science, natural science, and engineering. Finally, we delve into the evaluation strategies commonly used for LLM-based autonomous agents. Based on the previous studies, we also present several challenges and future directions in this field. To keep track of this field and continuously update our survey, we maintain a repository of relevant references at https://github.com/Paitesanshi/LLM-Agent-Survey.

Glossary

Below is an alphabetical list of advanced domain-specific terms from the paper, each with a brief definition and a verbatim usage example.

- Admissible actions: Actions that satisfy the constraints or preconditions of a planning environment, used for selecting valid next steps. "then determine the final one based on their distances to admissible actions."

- Algorithm of Thoughts (AoT): A prompting strategy that embeds algorithmic examples to improve structured reasoning in LLMs. "In AoT~\cite{sel2023algorithm}, the authors design a novel method to enhance the reasoning processes of LLMs by incorporating algorithmic examples into the prompts."

- Artificial general intelligence (AGI): AI that aims for human-level generality across diverse tasks, here pursued through autonomous planning and action. "Autonomous agents have long been recognized as a promising approach to achieving artificial general intelligence (AGI), which is expected to accomplish tasks through self-directed planning and actions."

- Chain of Thought (CoT): A prompting technique that elicits step-by-step reasoning traces to solve complex problems. "Chain of Thought (CoT)~\cite{wei2022chain} proposes inputting reasoning steps for solving complex problems into the prompt."

- Context window: The maximum span of input tokens a transformer-based LLM can attend to at once. "short-term memory is analogous to the input information within the context window constrained by the transformer architecture."

- Embedding vectors: Numeric representations of text or memory items enabling efficient retrieval and similarity search. "memory information is encoded into embedding vectors, which can enhance the memory retrieval and reading efficiency."

- Embodied agent: An agent that plans and acts within a physical or simulated environment, often grounded in perception. "SayPlan~\cite{rana2023sayplan} is an embodied agent specifically designed for task planning."

- Environmental Feedback: Signals received from the environment (real or simulated) that inform subsequent planning or action. "Environmental Feedback. This feedback is obtained from the objective world or virtual environment."

- External planner: A specialized planning tool (often operating on formal representations) used to compute action sequences. "To address this challenge, researchers turn to external planners."

- FAISS: A library for efficient similarity search on high-dimensional vectors, commonly used for memory retrieval. "$s^{rel}(q,m)$ can be realized based on LSH, ANNOY, HNSW, FAISS and so on."

- Few-shot examples: A small set of labeled instances provided in prompts to guide LLMs in generating or classifying similar outputs. "Then, one can optionally specify several seed agent profiles to serve as few-shot examples."

- Graph of Thoughts (GoT): An extension of tree-based reasoning that structures multiple reasoning paths as a graph for richer exploration. "In GoT~\cite{besta2023graph}, the authors expand the tree-like reasoning structure in ToT to graph structures, resulting in more powerful prompting strategies."

- Grounded re-planning algorithm: A method that revises plans based on observed mismatches between planned and actual world states. "LLM-Planner~\cite{song2023llmplanner} introduces a grounded re-planning algorithm that dynamically updates plans generated by LLMs when encountering object mismatches and unattainable plans during task completion."

- Hallucination: The tendency of LLMs to generate factually incorrect or unfounded outputs. "In addition, LLMs may also encounter hallucination problems, which are hard to be resolved by themselves."

- Heuristic policy functions: Rule-of-thumb policies guiding agent actions, often used in simplified or restricted environments. "the agents are assumed to act based on simple and heuristic policy functions, and learned in isolated and restricted environments"

- HNSW: Hierarchical Navigable Small World graphs for fast approximate nearest neighbor search. "$s^{rel}(q,m)$ can be realized based on LSH, ANNOY, HNSW, FAISS and so on."

- Human Feedback: Guidance from human users that helps align agents with human preferences and correct errors in planning. "Human Feedback. In addition to obtaining feedback from the environment, directly interacting with humans is also a very intuitive strategy to enhance the agent planning capability."

- In-context learning: Conditioning LLM behavior via examples or instructions placed directly in the prompt without parameter updates. "This structure only simulates the human shot-term memory, which is usually realized by in-context learning, and the memory information is directly written into the prompts."

- Key-value list structure: A memory design storing items as key-value pairs (e.g., vector keys with natural-language values) to enable efficient retrieval. "A notable example is the memory module of GITM~\cite{zhu2023ghost}, which utilizes a key-value list structure."

- Locality-Sensitive Hashing (LSH): A hashing technique that preserves similarity, enabling fast retrieval of related items. "$s^{rel}(q,m)$ can be realized based on LSH, ANNOY, HNSW, FAISS and so on."

- Long-horizon planning: Planning that spans many steps to tackle complex tasks with extended reasoning chains. "In many real-world scenarios, the agents need to make long-horizon planning to solve complex tasks."

- Long-term memory: Persistent memory that consolidates and stores information over time for later retrieval. "The short-term memory temporarily buffers recent perceptions, while long-term memory consolidates important information over time."

- Memory reading: Retrieving relevant, recent, and important information from memory to guide current actions. "The objective of memory reading is to extract meaningful information from memory to enhance the agent's actions."

- Memory reflection: Summarizing and abstracting past experiences to derive higher-level insights that guide future behavior. "Memory reflection emulates humans' ability to witness and evaluate their own cognitive, emotional, and behavioral processes."

- Memory writing: Storing new information about observations or actions into memory, handling duplicates and overflows. "The purpose of memory writing is to store information about the perceived environment in memory."

- Monte Carlo Tree Search (MCTS): A simulation-based search algorithm used to evaluate and choose plans via sampled rollouts. "RAP~\cite{hao2023reasoning} builds a world model to simulate the potential benefits of different plans based on Monte Carlo Tree Search (MCTS), and then, the final plan is generated by aggregating multiple MCTS iterations."

- Planning Domain Definition Language (PDDL): A formal language for specifying planning problems and domains for automated planners. "LLM+P~\cite{liu2023llmp+} first transforms the task descriptions into formal Planning Domain Definition Languages (PDDL), and then it uses an external planner to deal with the PDDL."

- Scene graphs: Structured representations of entities and relations in a scene, used for grounded planning. "In this agent, the scene graphs and environment feedback serve as the agent's short-term memory, guiding its actions."

- Self-consistent CoT (CoT-SC): A technique that samples multiple CoT reasoning paths and selects the most consistent final answer. "Self-consistent CoT (CoT-SC)~\cite{wang2022self} believes that each complex problem has multiple ways of thinking to deduce the final answer."

- Short-term memory: Temporarily maintained information (often within the prompt or context window) that guides immediate actions. "short-term memory is analogous to the input information within the context window constrained by the transformer architecture."

- Sliding window: A bounded, moving window over recent history used to retain the latest information for decision-making. "Reflexion~\cite{shinn2023reflexion} utilizes a short-term sliding window to capture recent feedback and incorporates persistent long-term storage to retain condensed insights."

- Symbolic memory: Memory represented in structured, queryable forms (e.g., databases) enabling precise manipulation. "For example, ChatDB~\cite{hu2023chatdb} uses a database as a symbolic memory module."

- Transformer architecture: A neural network design built on self-attention mechanisms; the model can attend only to a bounded context window of input tokens. "short-term memory is analogous to the input information within the context window constrained by the transformer architecture."

- Tree of Thoughts (ToT): A reasoning framework that explores branching thought sequences as a tree, evaluated step by step. "Tree of Thoughts (ToT)~\cite{yao2023tree} is designed to generate plans using a tree-like reasoning structure."

- Vector database: A storage system for vector embeddings enabling efficient similarity search and retrieval. "the authors propose a long-term memory system that utilizes a vector database, facilitating efficient storage and retrieval."

- Vector storage: External storage of vectorized information for fast querying by similarity. "Long-term memory resembles the external vector storage that agents can rapidly query and retrieve from as needed."

- World model: An internal simulation or representation of the environment used to evaluate and choose plans. "RAP~\cite{hao2023reasoning} builds a world model to simulate the potential benefits of different plans..."

- Zero-shot-CoT: A prompting approach that induces step-by-step reasoning without examples using trigger phrases. "Zero-shot-CoT~\cite{kojima2022large} enables LLMs to generate task reasoning processes by prompting them with trigger sentences like "think step by step"."

- Zero-shot planner: Using an LLM to plan without task-specific training by prompting it to generate action sequences. "In~\cite{huang2022language}, the LLMs are leveraged as zero-shot planners."
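Several of the memory-related entries above (embedding vectors, memory reading, vector storage) fit together in a single retrieval step. The following is a minimal sketch of that step, not the paper's implementation. It assumes each memory item carries a precomputed embedding, an importance value in [0, 1], and a write timestamp, and it ranks items by a weighted sum of recency, relevance, and importance. The names `MemoryItem` and `read_memory`, the weights `alpha`, `beta`, `gamma`, the exponential recency decay, and the use of brute-force cosine similarity for $s^{rel}(q,m)$ are all illustrative assumptions; at scale, the relevance term would typically be served by an approximate index such as LSH, HNSW, or FAISS.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class MemoryItem:
    """Hypothetical memory record; field names are assumptions."""
    text: str
    embedding: List[float]  # assumed precomputed embedding vector
    importance: float       # assumed to lie in [0, 1]
    timestamp: float        # time step at which the item was written


def cosine_similarity(a, b):
    """Brute-force relevance score between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def read_memory(query_embedding, memory, now, top_k=1,
                alpha=1.0, beta=1.0, gamma=1.0, decay=0.1):
    """Return the top_k items by weighted recency, relevance, importance."""
    def score(item):
        # Assumed recency model: exponential decay with item age.
        recency = math.exp(-decay * (now - item.timestamp))
        relevance = cosine_similarity(query_embedding, item.embedding)
        return alpha * recency + beta * relevance + gamma * item.importance

    return sorted(memory, key=score, reverse=True)[:top_k]


memory = [
    MemoryItem("saw a red door", [1.0, 0.0], importance=0.2, timestamp=0.0),
    MemoryItem("found the key", [0.0, 1.0], importance=0.9, timestamp=9.0),
]
best = read_memory([0.0, 1.0], memory, now=10.0)[0]
print(best.text)
```

Setting `alpha` and `gamma` to zero reduces the sketch to pure similarity search, which is the part a vector database would accelerate.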
