- The paper demonstrates that balancing racial representation in small, controlled datasets improves both performance and fairness: in the CAFE simulations, F1-score rises by 27.2 percentage points and demographic parity by 15.7 percentage points on average.

- It employs systematic experimentation using the CAFE and AffectNet datasets and analyzes key metrics such as demographic parity and equality of odds.

- The paper highlights that dataset balancing helps in smaller, controlled settings but leaves fairness metrics largely unchanged on larger, more varied datasets such as AffectNet, so more robust mitigation strategies are needed there.

Addressing Racial Bias in Facial Emotion Recognition

Introduction

The paper "Addressing Racial Bias in Facial Emotion Recognition" (2308.04674) examines the persistent challenge of racial bias in facial emotion recognition (FER) systems. Unequal performance across racial groups poses ethical and practical obstacles to deploying such systems in real-world applications. The authors investigate the source and extent of these biases through systematic experiments with data subsampling and model training, varying the racial balance of the training data and evaluating its impact on fairness and performance metrics.

Methods

The study employs two major datasets: the Child Affective Facial Expression (CAFE) dataset and AffectNet. The authors filter and preprocess these datasets to align their emotion labels and formats so that both are suitable for the subsequent bias analyses. They then simulate different racial compositions of the training set by varying the proportion of one racial group at a time while holding the others constant, and measure the effect on test performance. The evaluation relies on fairness metrics such as the racial balance of F1-scores, demographic parity, and equality of odds. All metrics are computed for a ResNet-50 model fine-tuned on training data with each simulated racial composition.
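The composition simulation described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `simulate_composition`, the `"race"` key on each sample, and the use of a keep-fraction for the varied group are all assumptions made for the example, since the exact subsampling protocol is not given here.

```python
import random
from collections import Counter, defaultdict


def simulate_composition(samples, varied_race, fraction, seed=0):
    """Subsample one racial group and keep every other group unchanged.

    `samples` is a list of dicts with a "race" key (hypothetical schema).
    `fraction` is the share of the varied group's samples to keep (0.0 to 1.0).
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")

    rng = random.Random(seed)  # fixed seed so a simulation is repeatable

    # Bucket the samples by race label.
    by_race = defaultdict(list)
    for sample in samples:
        by_race[sample["race"]].append(sample)

    subset = []
    for race, group in by_race.items():
        if race == varied_race:
            # Only the simulated race is subsampled.
            keep = round(fraction * len(group))
            subset.extend(rng.sample(group, keep))
        else:
            # Non-simulated races are held constant.
            subset.extend(group)
    return subset


def composition(samples):
    """Count samples per race, useful for checking a simulated training set."""
    return Counter(sample["race"] for sample in samples)
```

Sweeping `fraction` over a grid for each race in turn, and fine-tuning one model per resulting subset, would give one cell per (race, proportion) pair in the style of the figures below.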

Results

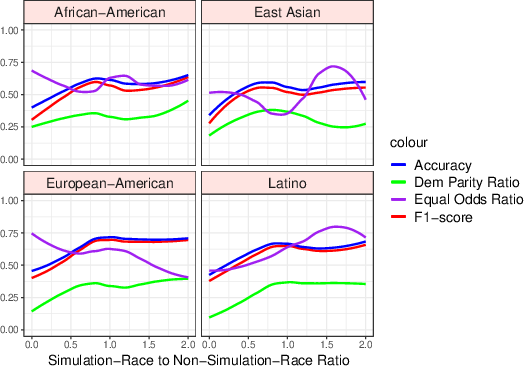

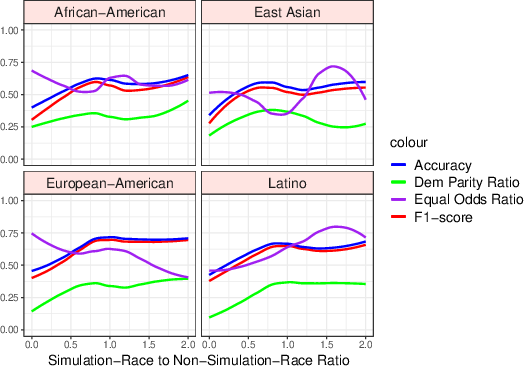

Figure 1: CAFE racial composition simulations with all test metrics. Each cell shows a varied simulated race with all non-simulated races held constant.

The experiments reveal a significant improvement in fairness and performance metrics for CAFE simulations when the datasets approach racial balance. For instance, the F1-score increases by 27.2 percentage points, and demographic parity improves by 15.7 percentage points on average. However, despite these encouraging trends in smaller datasets with posed images, larger datasets like AffectNet, which include more varied expressions, do not exhibit similar improvements. The fairness metrics in these larger datasets remain mostly unchanged, suggesting that simple racial balancing of datasets may be inadequate for bias mitigation.
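To make the metrics concrete, the sketch below computes per-group rates for a single emotion treated one-vs-rest and summarizes them as gaps between the best- and worst-served groups. It is an illustration under stated assumptions: the function names are hypothetical, and the gap formulation (lower is fairer) is one common convention. The paper reports demographic parity as a quantity that increases with fairness, so its exact definition likely differs from the gap used here.

```python
from collections import defaultdict


def _rate(numerator, denominator):
    """Safe division: an empty denominator yields 0.0 instead of an error."""
    return numerator / denominator if denominator else 0.0


def group_rates(y_true, y_pred, groups, positive):
    """Per-group selection rate, TPR, FPR and F1 for one emotion (one-vs-rest)."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if pred == positive and truth == positive:
            c["tp"] += 1
        elif pred == positive:
            c["fp"] += 1
        elif truth == positive:
            c["fn"] += 1
        else:
            c["tn"] += 1

    rates = {}
    for group, c in counts.items():
        total = c["tp"] + c["fp"] + c["fn"] + c["tn"]
        rates[group] = {
            # Share of the group predicted as the positive emotion.
            "selection": _rate(c["tp"] + c["fp"], total),
            "tpr": _rate(c["tp"], c["tp"] + c["fn"]),
            "fpr": _rate(c["fp"], c["fp"] + c["tn"]),
            "f1": _rate(2 * c["tp"], 2 * c["tp"] + c["fp"] + c["fn"]),
        }
    return rates


def fairness_gaps(y_true, y_pred, groups, positive):
    """Largest between-group differences; 0.0 means the groups are treated alike."""
    rates = group_rates(y_true, y_pred, groups, positive)

    def gap(key):
        values = [r[key] for r in rates.values()]
        return max(values) - min(values)

    return {
        # Demographic parity: selection rates should match across groups.
        "demographic_parity_gap": gap("selection"),
        # Equality of odds: both TPR and FPR should match across groups.
        "equalized_odds_gap": max(gap("tpr"), gap("fpr")),
        # Racial balance of F1: per-group F1-scores should match.
        "f1_gap": gap("f1"),
    }
```

Repeating the computation for each emotion class and averaging the gaps is one way to obtain a single number per simulated training composition.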

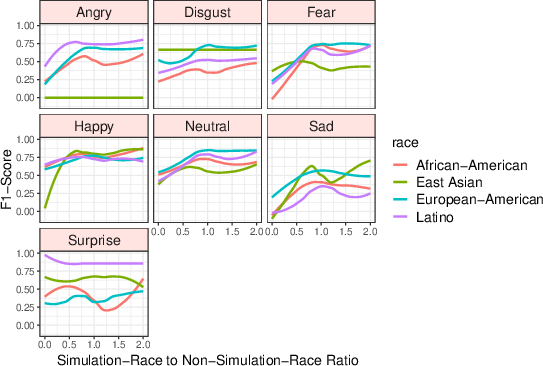

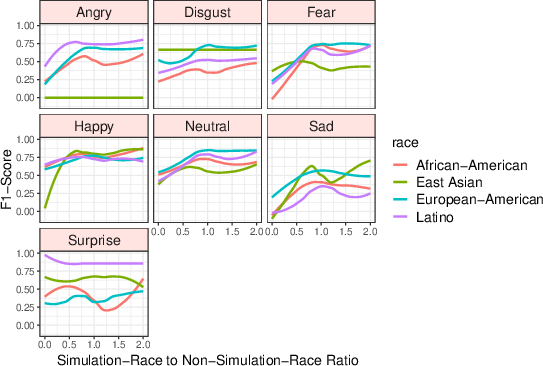

Figure 2: CAFE racial composition simulations with unaggregated F1-scores show racial balance is correlated with increases in most emotion-specific F1-scores.

Discussion

The findings highlight a complex interplay between dataset size, racial composition, and bias in FER. While racial balancing shows promise in smaller, more controlled settings, the persistence of bias in larger, more diverse datasets like AffectNet indicates that compositional adjustments alone are insufficient. Other potential sources of bias include errors in the race estimation models and annotator bias during dataset labeling. The AffectNet data, for example, suffers from race estimation inaccuracies, which could skew the fairness assessments. Annotator bias is also a concern: labelers who hold implicit, race-dependent biases may assign emotion labels subjectively.

Conclusion

This research underscores the necessity of comprehensive strategies for addressing racial bias in facial emotion recognition models. Simulating balanced racial compositions yields improvements on small datasets but not on larger ones. Future work needs to investigate other mitigation strategies, including more robust race estimation models and labeling processes that account for potential annotation biases.

This study calls for the research community to develop novel techniques to address the combined challenges of high-dimensional inputs and subjective labeling, thereby advancing the fairness and applicability of FER systems worldwide.