- The paper argues that large language models exhibit emergent behaviors similar to those of complex systems, where simple interactions lead to sophisticated global dynamics.

- It introduces a structured taxonomy to decompose and interpret model behaviors, enabling comparisons between new and established architectures.

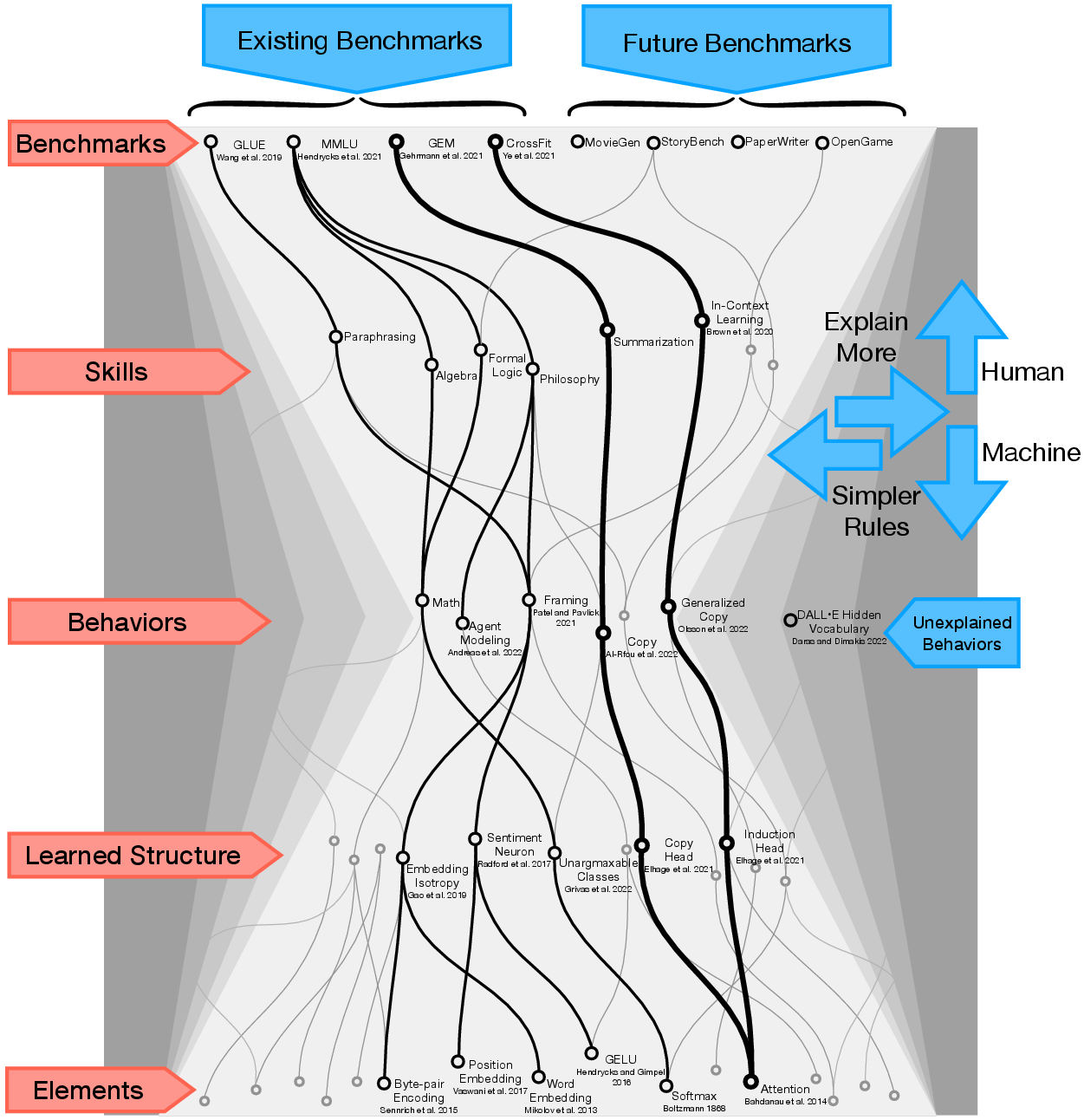

- The study emphasizes visualization techniques, such as behavioral subgraphs, to uncover behavioral regularities and inform future AI research benchmarks.

Generative Models as a Complex Systems Science

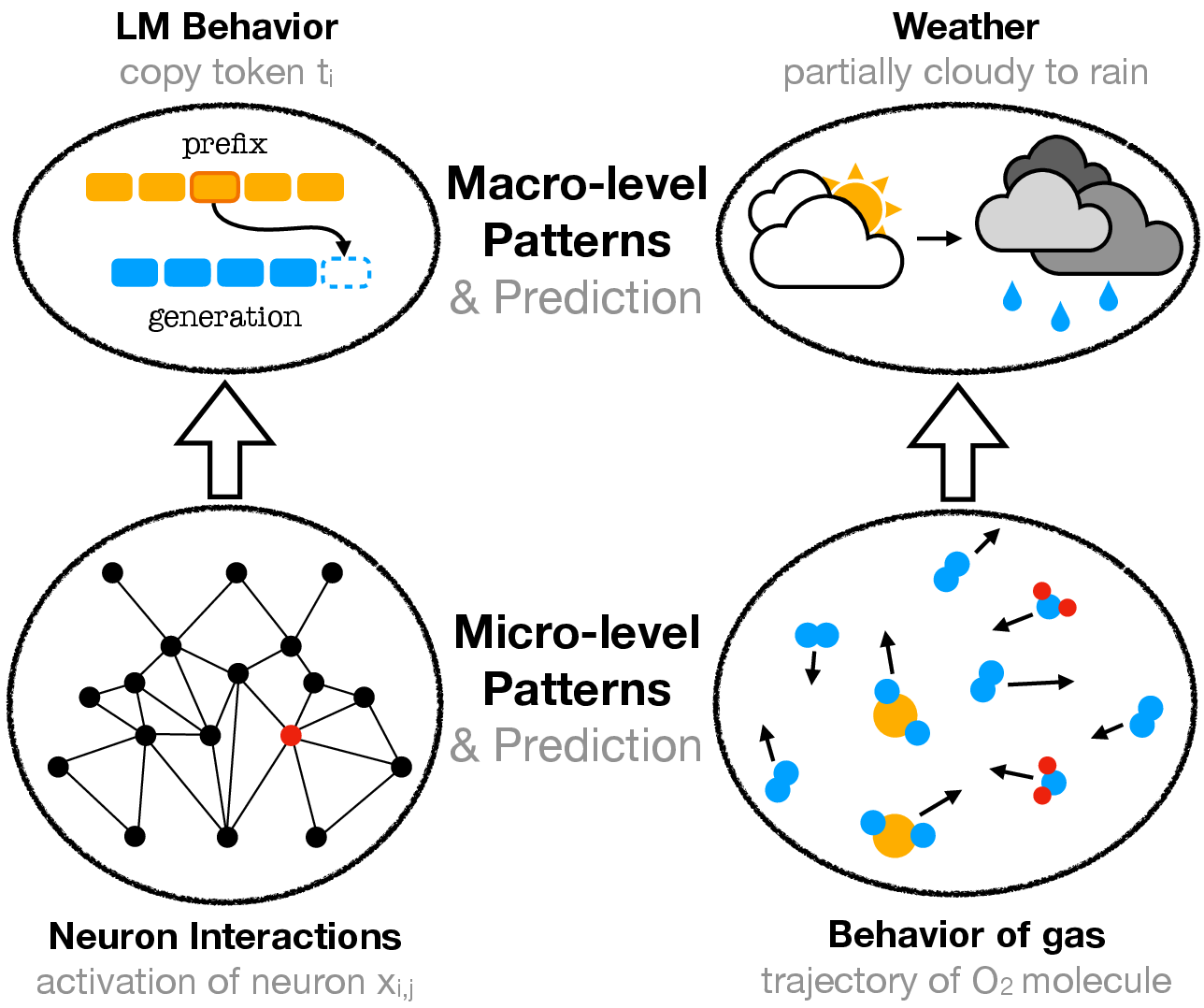

The paper "Generative Models as a Complex Systems Science: How can we make sense of LLM behavior?" presents a compelling argument for examining LLMs through the lens of complex systems science. This framing aims to characterize the emergent behaviors these models exhibit, going beyond simply measuring or predicting their outputs.

Generative Models and Complexity

The research suggests that the behavior of generative models reflects the principles of complex systems. In a complex system, emergent behavior arises from the interaction between simple components, leading to sophisticated global dynamics. Similarly, LLMs exhibit intricate behaviors resulting from the interplay of their fundamental components, such as neurons and layers.
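To make the idea of emergence from simple components concrete, here is a minimal sketch that is not taken from the paper: an elementary cellular automaton running Rule 110, a textbook complex system. Each cell updates using only itself and its two neighbors, yet the global pattern develops intricate, persistent structures. The function names `step` and `run`, the grid width, and the number of steps are illustrative choices, not anything the paper specifies.

```python
def step(cells: list[int], rule: int = 110) -> list[int]:
    """Apply one update of an elementary cellular automaton.

    Each new cell depends only on its left neighbor, itself, and its right
    neighbor (with wrap-around at the edges). The 3-cell neighborhood forms
    a number 0..7, which selects the corresponding bit of `rule`.
    """
    n = len(cells)
    out = []
    for i in range(n):
        left = cells[(i - 1) % n]
        center = cells[i]
        right = cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right
        out.append((rule >> index) & 1)
    return out


def run(width: int = 64, steps: int = 32, rule: int = 110) -> list[list[int]]:
    """Start from a single live cell and record every generation."""
    cells = [0] * width
    cells[-1] = 1  # one live cell at the right edge
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    # Render live cells as '#' and dead cells as '.'.
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Printing the history shows triangular structures spreading leftward from a single live cell, even though nothing in the three-cell update rule mentions them. That gap between a local rule and a global pattern is the kind of gap the paper suggests we face with LLMs.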

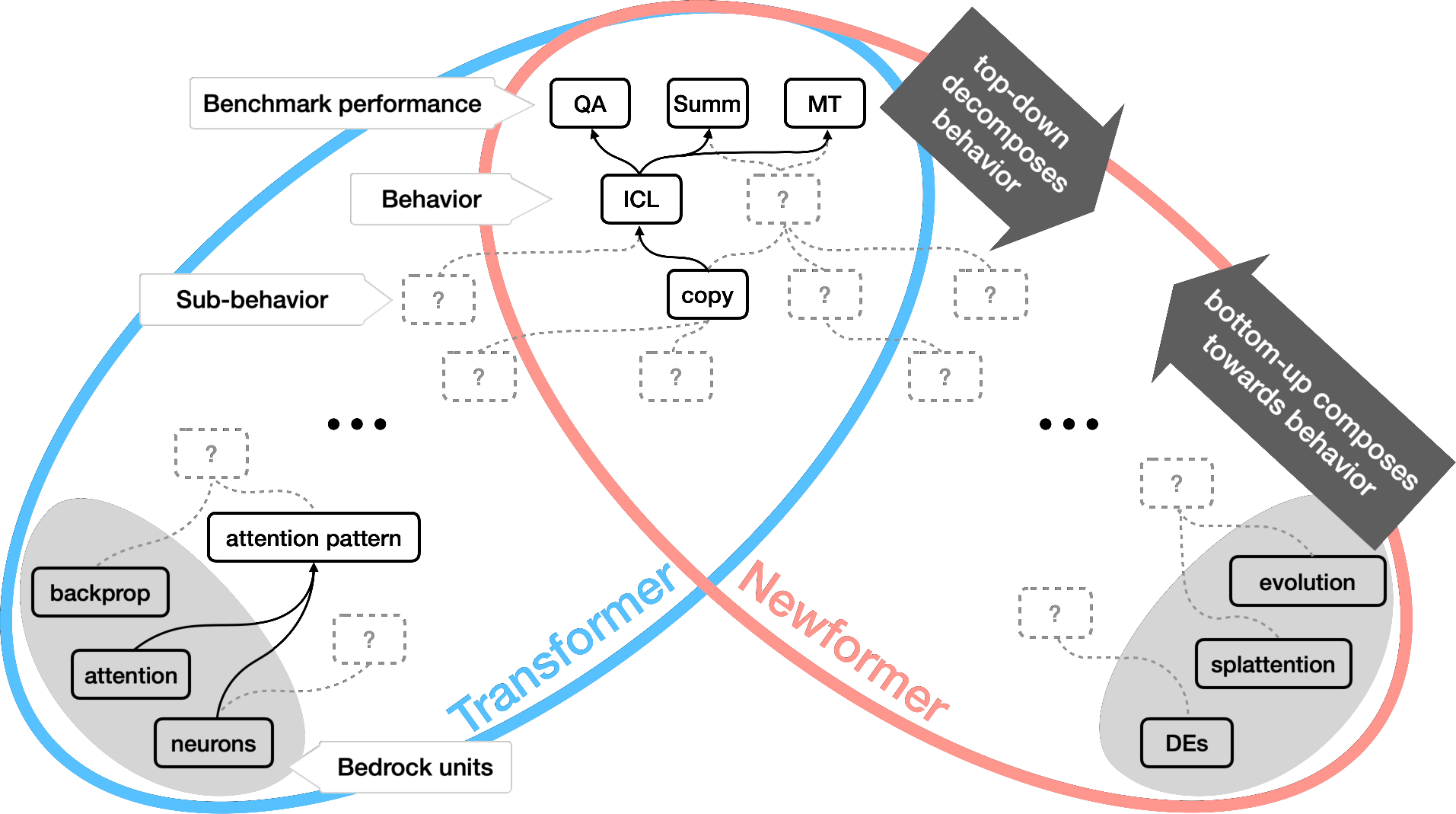

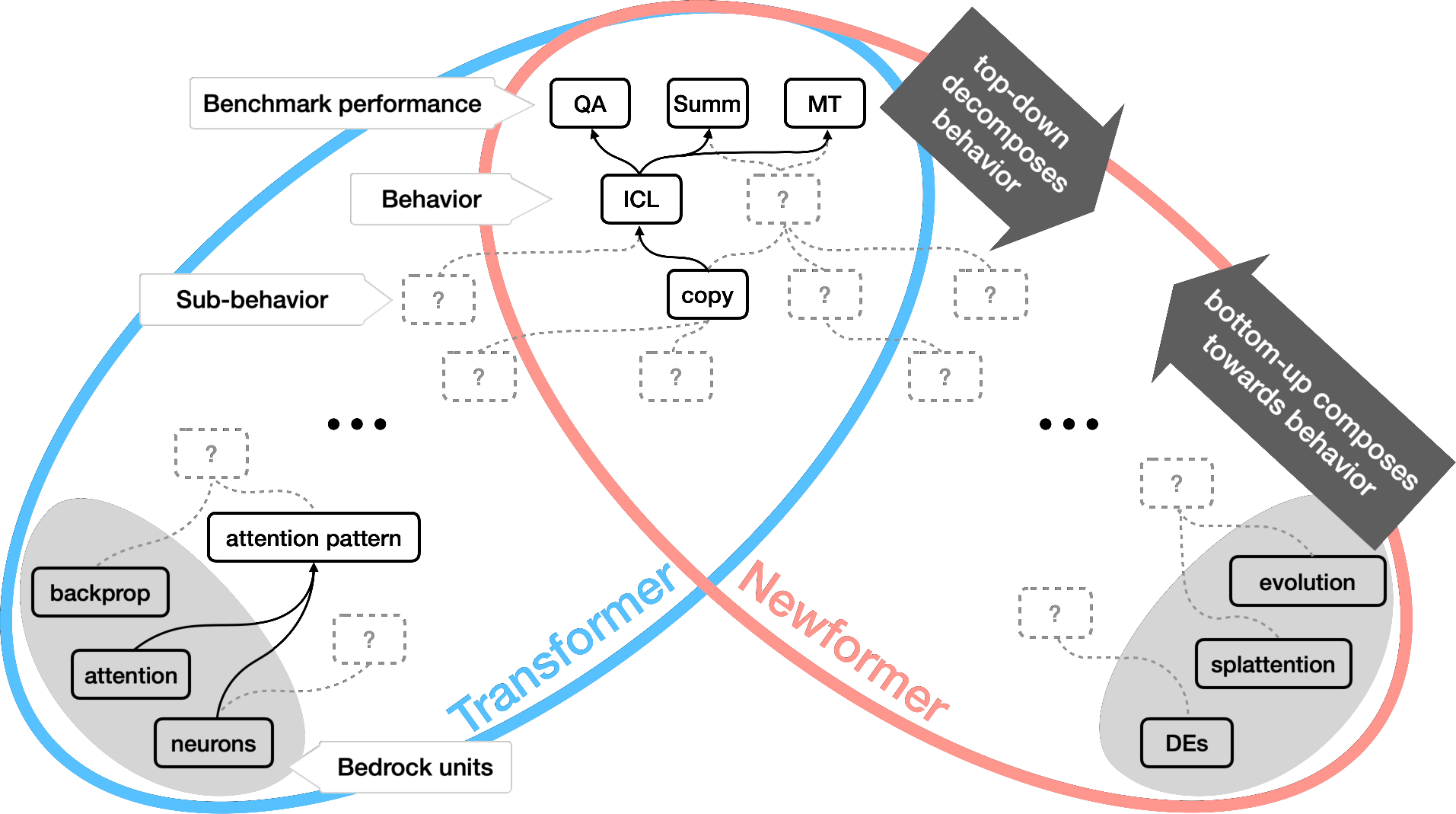

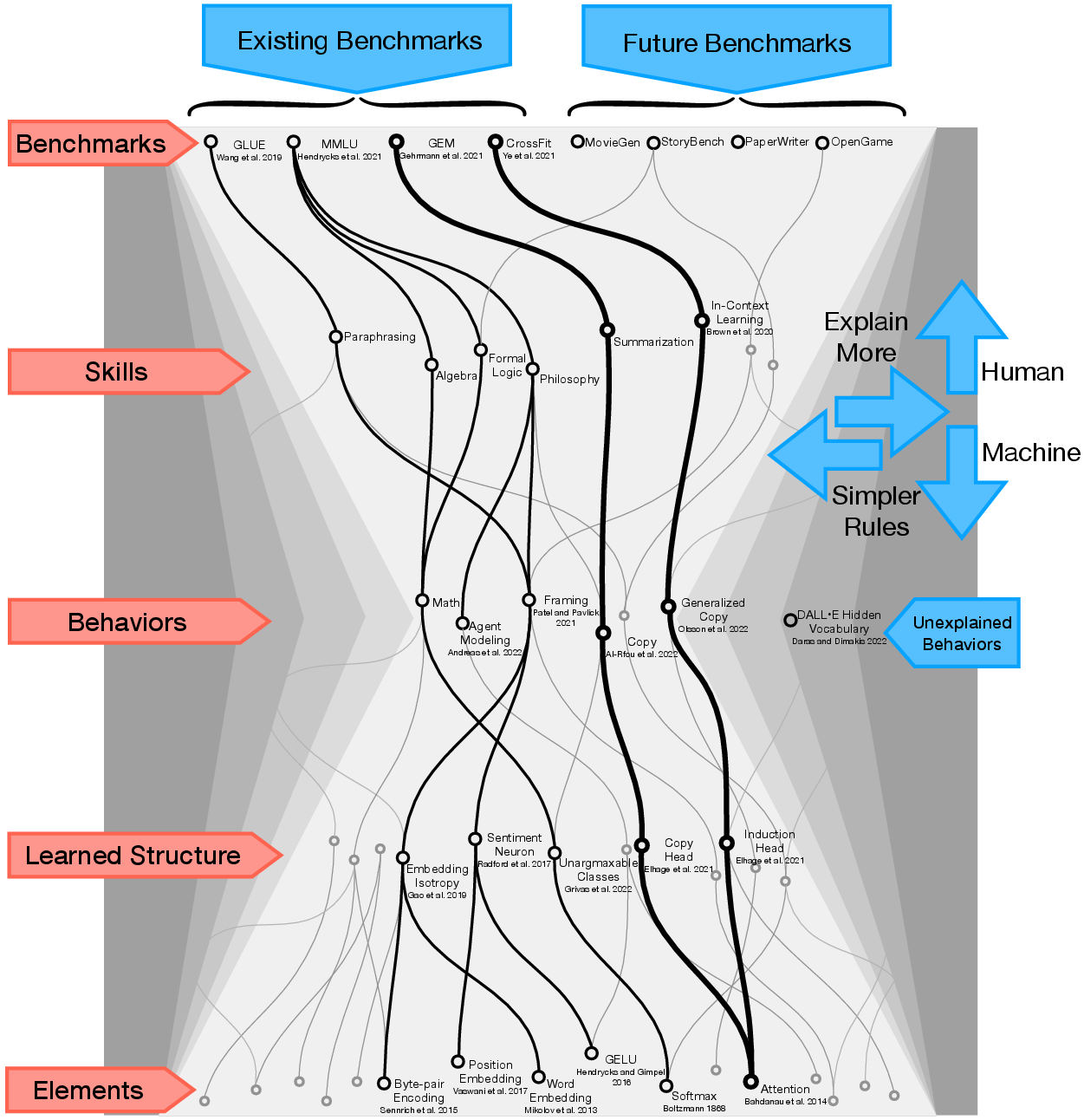

Figure 1: Top-down hierarchy of partially decomposed behaviors in learned models.

Behavioral Taxonomy for LLMs

A pivotal contribution of this study is the proposal of a structured taxonomy for decomposing the behavior of LMs. The paper stresses the need to categorize behaviors into explainable units so that research agendas focused on model interpretation can be organized around them. By identifying which behaviors new architectures share with established models like Transformers and which they do not, researchers can infer the low-level mechanisms responsible for task completion.

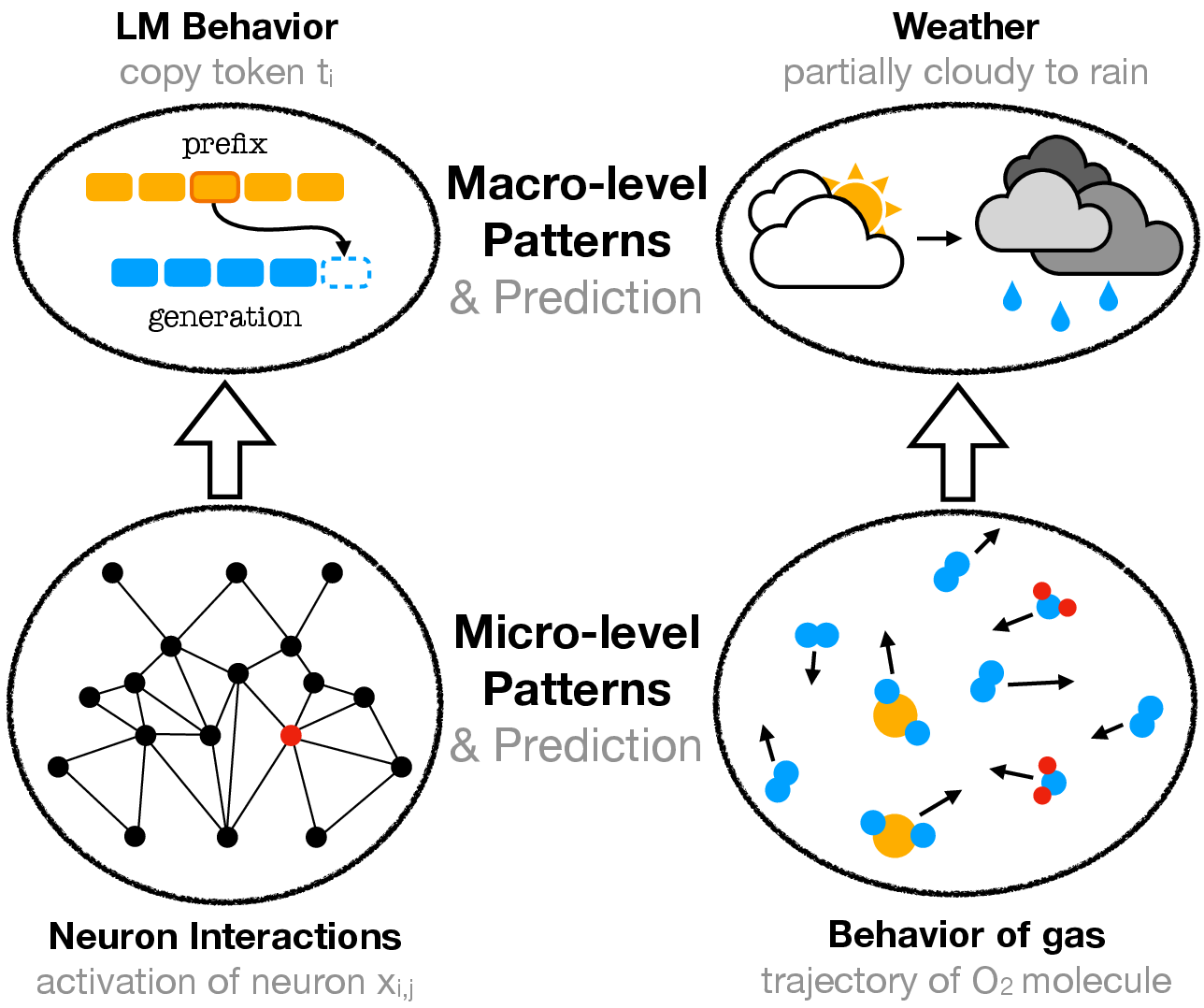

Figure 2: A visual representation capturing concepts essential for understanding phenomena like "copying" in Transformers.
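As an illustration of describing a behavior at the level of "what" rather than "how", the sketch below states copying as a purely behavioral rule: when the current token has appeared earlier in the context, predict the token that followed its most recent earlier occurrence. This is a simplifying assumption for illustration, not the paper's definition of copying. The names `copy_prediction`, `copy_agreement`, and the `predict_next` callable (a stand-in for any model's next-token output) are hypothetical.

```python
from typing import Callable, Optional, Sequence


def copy_prediction(tokens: Sequence[str]) -> Optional[str]:
    """Behavioral copy rule (hypothetical): find the most recent earlier
    occurrence of the last token and return the token that followed it.
    Returns None when the last token has not appeared before."""
    if not tokens:
        return None
    current = tokens[-1]
    # Scan earlier positions from right to left, skipping the last token itself.
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == current:
            return tokens[i + 1]
    return None


def copy_agreement(
    predict_next: Callable[[Sequence[str]], str],
    contexts: Sequence[Sequence[str]],
) -> float:
    """Fraction of contexts where the model's prediction matches the copy
    rule, counting only contexts where the rule makes a prediction."""
    applicable = [c for c in contexts if copy_prediction(c) is not None]
    if not applicable:
        return 0.0
    hits = sum(predict_next(c) == copy_prediction(c) for c in applicable)
    return hits / len(applicable)


if __name__ == "__main__":
    contexts = [["the", "cat", "sat", "on", "the"], ["a", "b", "c"]]
    # A dummy "model" that always predicts "cat".
    print(copy_agreement(lambda ctx: "cat", contexts))  # 1.0
```

A score like this says nothing about which components implement copying; it only pins down the behavior precisely enough that different architectures can be compared on it.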

Challenges in Predicting Behaviors

The paper also proposes visual subgraphs to help explain how LLMs behave when generating sequences. These subgraphs highlight behavioral regularities across tasks and model generations that any comprehensive account must explain. Figure 2 is one example used to illustrate these themes.

The paper presents a thought experiment about a hypothetical "Newformer" model that outperforms current Transformers on benchmarks while its architecture remains unknown. This scenario underscores the difficulty of studying novel neural architectures when direct interpretability is limited. The paper suggests that understanding "what" models do must precede unraveling "how" and "why" they do it.

Emergence and Complex Systems Analysis

Generative models qualify as complex systems because their behaviors are more often discovered after training than explicitly designed (Figure 3).

Figure 3: Complexity in systems arises from multiple levels of regularity, illustrated by micro-level and macro-level patterns.

Practical Implications and Future Directions

The paper's insights encourage a paradigm shift in AI research toward a foundational examination of model behaviors. This perspective is expected to inform the design of new benchmarks and experimental setups that home in on the emergent properties of LMs. The paper also stresses that most of the advantages of complex systems analysis depend on open-source models, which enable transparency and reproducibility.

Conclusion

In summary, the paper advocates studying the intricate behaviors of generative models with the tools of complex systems science. The resulting framework can help the research community understand the implications and potential of LMs more deeply and guide future advances in AI.