- The paper introduces CVQ-VAE, a clustered codebook approach that mitigates codebook collapse in vector quantization.

- The method leverages online running average updates and contrastive loss to dynamically adjust underutilized codevectors.

- Experimental results on datasets like MNIST and CIFAR10 show improved reconstruction quality and superior codebook utilization metrics.

Online Clustered Codebook

The paper "Online Clustered Codebook" proposes Clustering VQ-VAE (CVQ-VAE), a Vector Quantisation (VQ) technique designed to avoid codebook collapse when learning the high-capacity discrete representations that complex computer vision tasks require. This essay examines the proposed method, its implementation, and its applications.

Methodology

Clustering VQ-VAE

CVQ-VAE addresses codebook collapse, a common failure in VQ where only a small subset of codevectors is ever selected and optimized, leaving the rest underutilized or inactive ("dead"). The method selects encoded features as anchors and uses them to update these dead codevectors. Moving unoptimized codevectors closer to the distribution of the encoded features increases the chance that they are selected, and therefore optimized, in later iterations.
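Below is a minimal NumPy sketch of the anchor idea. It makes two assumptions. First, each codevector's anchor is its closest encoded feature in the current mini-batch. Second, each codevector is pulled toward its anchor with a weight that decays exponentially as its usage estimate grows (for example, the running average described below). The function name `anchor_update` and the `scale` parameter are hypothetical, and the paper's exact weighting may differ.

```python
import numpy as np

def anchor_update(codebook, features, usage, scale=10.0):
    """Pull under-used codevectors toward anchors drawn from encoded features.

    codebook: (K, D) array of codevectors e_k
    features: (M, D) array of encoded features from the current mini-batch
    usage:    (K,) usage estimate N_k per codevector (sums to about 1)
    scale:    hypothetical sharpness of the usage-to-weight mapping
    """
    K = codebook.shape[0]
    # Squared Euclidean distance between every codevector and every feature: (K, M).
    dist = ((codebook[:, None, :] - features[None, :, :]) ** 2).sum(axis=-1)
    # Assumed anchor choice: the closest encoded feature for each codevector.
    anchors = features[dist.argmin(axis=1)]
    # Weight is 1 for an unused entry (N_k = 0) and tiny for entries used at the
    # uniform rate 1/K or more. This mapping is an illustrative assumption.
    alpha = np.exp(-usage * K * scale)[:, None]
    # Interpolate each codevector toward its anchor by its own weight.
    return (1.0 - alpha) * codebook + alpha * anchors
```

With `scale=10`, an entry used at exactly the uniform rate 1/K moves only about 0.005% of the way toward its anchor (weight e^{-10} ≈ 4.5 × 10^{-5}). An entry with zero usage is replaced outright by its anchor. Well-used codevectors are therefore left essentially untouched.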

Running Average Updates

Deep-network features change as training progresses, so a static snapshot of features may not reflect the true data distribution. CVQ-VAE therefore uses an online sampling scheme that maintains running averages across training mini-batches. The update draws on both how frequently each codevector is used and the encoded features themselves. The usage frequency is tracked as:

$$N_k^{(t)} = N_k^{(t-1)} \cdot \gamma + \frac{n_k^{(t)}}{Bhw} \cdot (1 - \gamma)$$

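where $N_k^{(t)}$ is the running-average usage of codevector $e_k$ at step $t$, $\gamma$ is a decay hyperparameter, $n_k^{(t)}$ is the number of encoded features in the current mini-batch quantised to $e_k$, and $Bhw$ is the total number of encoded features in the mini-batch (batch size $B$ times feature-map height $h$ and width $w$).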
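This update is an exponential moving average of each codevector's share of the mini-batch assignments. The sketch below computes one step of it in NumPy. The function name `update_usage` and the default γ = 0.99 are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def update_usage(usage, assignments, gamma=0.99):
    """One step of N_k^(t) = gamma * N_k^(t-1) + (1 - gamma) * n_k^(t) / (B*h*w).

    usage:       (K,) running-average usage N_k^(t-1)
    assignments: integer array of codebook indices, any shape (e.g. B x h x w)
    gamma:       decay hyperparameter
    """
    K = usage.shape[0]
    # n_k^(t): how many features in this mini-batch were quantised to e_k.
    counts = np.bincount(assignments.ravel(), minlength=K)
    # B*h*w: total number of quantised features in the mini-batch.
    total = assignments.size
    return gamma * usage + (1.0 - gamma) * counts / total
```

The old estimate is scaled by γ at every step. A codevector that stops being selected therefore sees its usage shrink geometrically: after 100 steps with γ = 0.99 it keeps about 0.99^100 ≈ 0.37 of its previous value. This decay is what allows an under-used entry to be identified.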

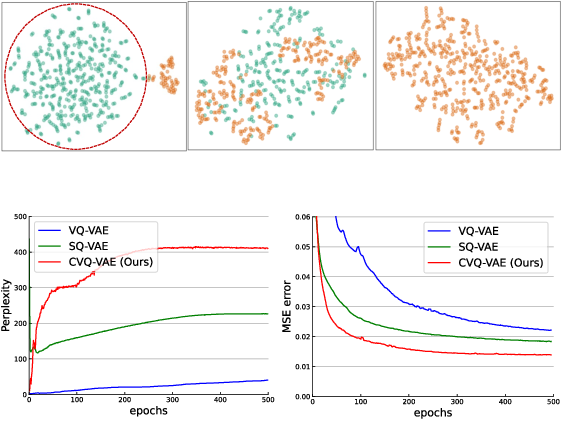

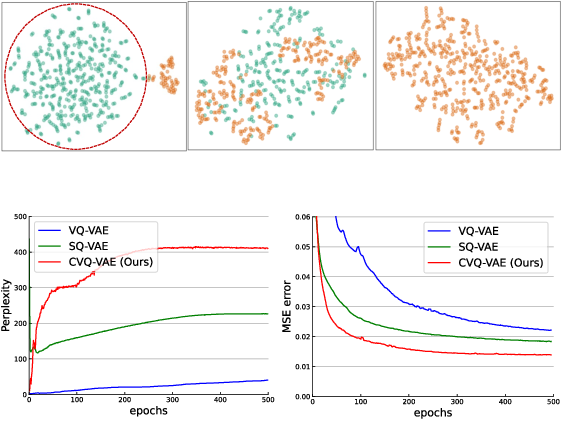

Figure 1: Codebook usage and reconstruction error illustrating the enhanced utilization of codebook vectors with CVQ-VAE.

Contrastive Loss

A contrastive loss helps CVQ-VAE encourage sparsity in the codebook, pushing codevectors toward distinct representations. For each codevector, the closest encoded feature serves as the positive pair and more distant features serve as negative pairs. Both are selected using the distance $D_{i,k}$ between encoded feature $i$ and codevector $e_k$.
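The sketch below shows one way such a loss could be computed from the distance matrix, using the standard InfoNCE form. Several choices are assumptions of this sketch: the similarity (negative squared distance), the temperature `tau`, the number of negatives `num_neg`, and the function name. The paper's exact formulation may differ.

```python
import numpy as np

def codebook_contrastive_loss(codebook, features, num_neg=4, tau=1.0):
    """InfoNCE-style loss over the distance matrix D[i, k] (illustrative sketch).

    codebook: (K, D) codevectors e_k
    features: (M, D) encoded features, with M > num_neg
    num_neg:  hypothetical number of farthest features used as negatives
    tau:      hypothetical temperature
    """
    # dist[i, k]: squared Euclidean distance between feature i and codevector k.
    dist = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    order = np.argsort(dist, axis=0)   # per codevector, features sorted near -> far
    pos_idx = order[0]                 # closest feature is the positive
    neg_idx = order[-num_neg:]         # farthest num_neg features are negatives
    cols = np.arange(codebook.shape[0])
    # Similarity is taken as negative distance (an assumption of this sketch).
    pos = -dist[pos_idx, cols] / tau   # (K,)
    neg = -dist[neg_idx, cols] / tau   # (num_neg, K)
    logits = np.concatenate([pos[None, :], neg], axis=0)  # (1 + num_neg, K)
    # Cross-entropy with the positive in row 0, via a numerically stable logsumexp.
    m = logits.max(axis=0)
    log_denominator = m + np.log(np.exp(logits - m).sum(axis=0))
    return float(np.mean(log_denominator - pos))
```

The loss approaches zero when each codevector is much closer to its positive than to its negatives. When all candidates are equidistant, it equals log(1 + num_neg).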

Implementation Considerations

Code Implementation

CVQ-VAE is simple to implement and can be added to existing models with minimal changes, typically a few lines of code in PyTorch. Its adjustable parameters, such as the decay rate γ and the codevector dimensionality, make it adaptable to a wide range of applications.
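The hypothetical class below illustrates how the method might slot into an existing quantiser. It wraps a plain nearest-neighbour quantiser and, after each batch, refreshes the codebook using a usage running average and closest-feature anchors. It uses NumPy rather than PyTorch so that it runs without a deep-learning framework. The class name, the `scale` parameter, and the anchor weighting are assumptions, and gradient handling (such as the straight-through estimator) is omitted.

```python
import numpy as np

class OnlineClusteredCodebook:
    """Hypothetical sketch: nearest-neighbour quantiser plus online codebook refresh.

    num_codes: codebook size K
    dim:       codevector dimensionality D
    decay:     running-average decay gamma
    scale:     assumed sharpness of the usage-to-update-weight mapping
    """

    def __init__(self, num_codes, dim, decay=0.99, scale=10.0, seed=0):
        rng = np.random.default_rng(seed)
        self.codebook = rng.normal(size=(num_codes, dim))
        # Starting from zero usage means early steps pull every entry toward the data.
        self.usage = np.zeros(num_codes)
        self.decay = decay
        self.scale = scale

    def quantise(self, z):
        """Map each row of z (N, D) to the index of its nearest codevector."""
        dist = ((z[:, None, :] - self.codebook[None, :, :]) ** 2).sum(axis=-1)
        return dist.argmin(axis=1)

    def step(self, z):
        """Quantise a batch of flattened features (N, D), then refresh the codebook."""
        idx = self.quantise(z)
        zq = self.codebook[idx]  # quantised vectors from the pre-refresh codebook
        K = self.codebook.shape[0]
        # Running-average usage: share of this batch assigned to each entry.
        counts = np.bincount(idx, minlength=K)
        self.usage = self.decay * self.usage + (1.0 - self.decay) * counts / len(z)
        # Closest feature to each codevector serves as its anchor (assumption).
        dist = ((self.codebook[:, None, :] - z[None, :, :]) ** 2).sum(axis=-1)
        anchors = z[dist.argmin(axis=1)]
        alpha = np.exp(-self.usage * K * self.scale)[:, None]
        self.codebook = (1.0 - alpha) * self.codebook + alpha * anchors
        return idx, zq
```

The forward pass uses the codebook from before the refresh. This keeps the returned quantised vectors consistent with the assignments just computed, and the updated codebook takes effect on the next batch.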

Experiments and Results

Quantitative Experiments: Results on datasets such as MNIST and CIFAR10 show that CVQ-VAE achieves higher codebook utilization and better reconstruction quality than state-of-the-art quantisation methods. Quantitative metrics such as SSIM and LPIPS corroborate these findings.
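Codebook utilization is commonly summarised in two ways: the fraction of entries used at least once, and the perplexity of the assignment distribution. The helper below computes both from a batch of code indices. It is an illustrative sketch, and the exact utilization metrics reported in the paper are not specified here.

```python
import numpy as np

def codebook_usage_stats(indices, num_codes):
    """Return (fraction of codebook entries used, perplexity of the assignments).

    indices:   integer array of assigned codebook indices (any shape)
    num_codes: codebook size K
    """
    counts = np.bincount(np.asarray(indices).ravel(), minlength=num_codes)
    probs = counts / counts.sum()
    used_fraction = float((counts > 0).mean())
    nonzero = probs[probs > 0]
    # Perplexity = exp(entropy); it equals K when every entry is used equally often.
    perplexity = float(np.exp(-(nonzero * np.log(nonzero)).sum()))
    return used_fraction, perplexity
```

A collapsed codebook that maps every feature to a single entry has perplexity 1. Uniform use of all K entries gives perplexity K.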

Figure 2: Codebook optimization showcasing CVQ-VAE’s method for dynamically initializing codevectors using online clustering.

Applications

Unsupervised Representation Learning

CVQ-VAE is well suited to unsupervised representation learning. It learns a discrete codebook that makes use of all its entries, yielding rich and expressive representations for tasks such as image compression and data generation.

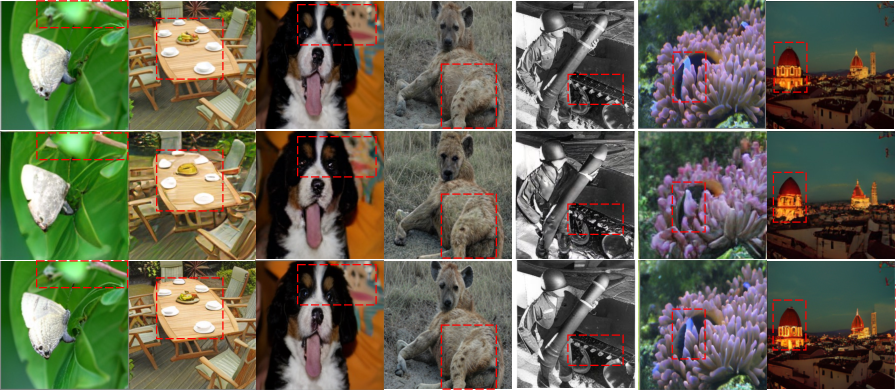

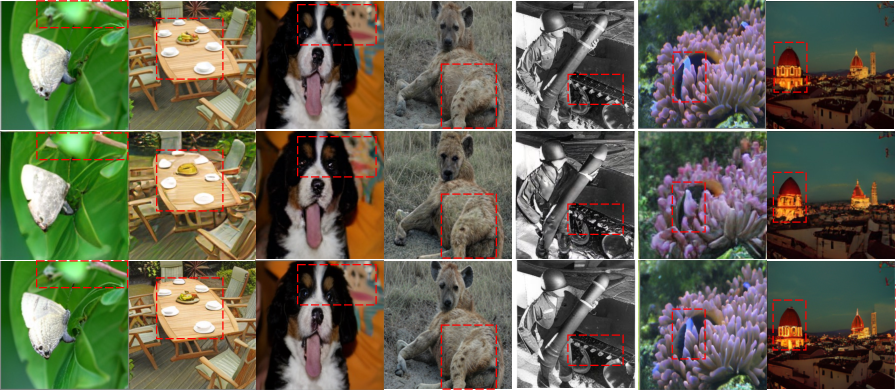

Image Generation: CVQ-VAE improves substantially over baseline models on tasks such as unconditional image generation. Its reconstructions also preserve intricate details under compression (Figure 3).

Figure 3: Reconstructions from different models, highlighting quality enhancements achieved through CVQ-VAE.

Conclusion

The online clustered codebook proposed in CVQ-VAE offers a robust solution to codebook collapse, a prevalent problem in VQ techniques. Online clustering keeps codevectors close to the distribution of encoded features. This improves both representation richness and reconstruction quality without significant additional computational overhead. The method's adaptability and output quality make it a valuable asset for the future development and application of vector quantisation models in machine learning.