Human Motion Generation: A Survey

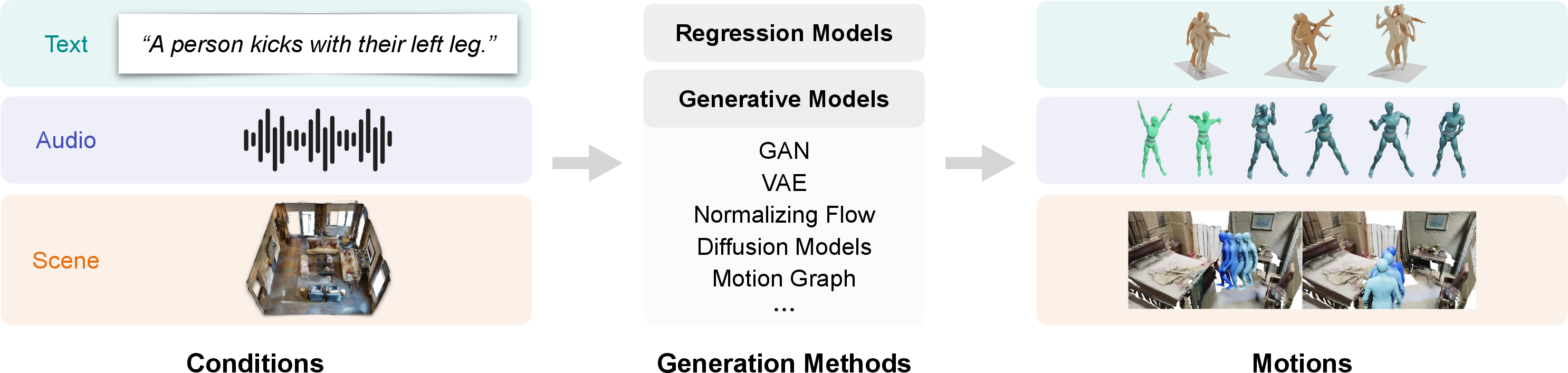

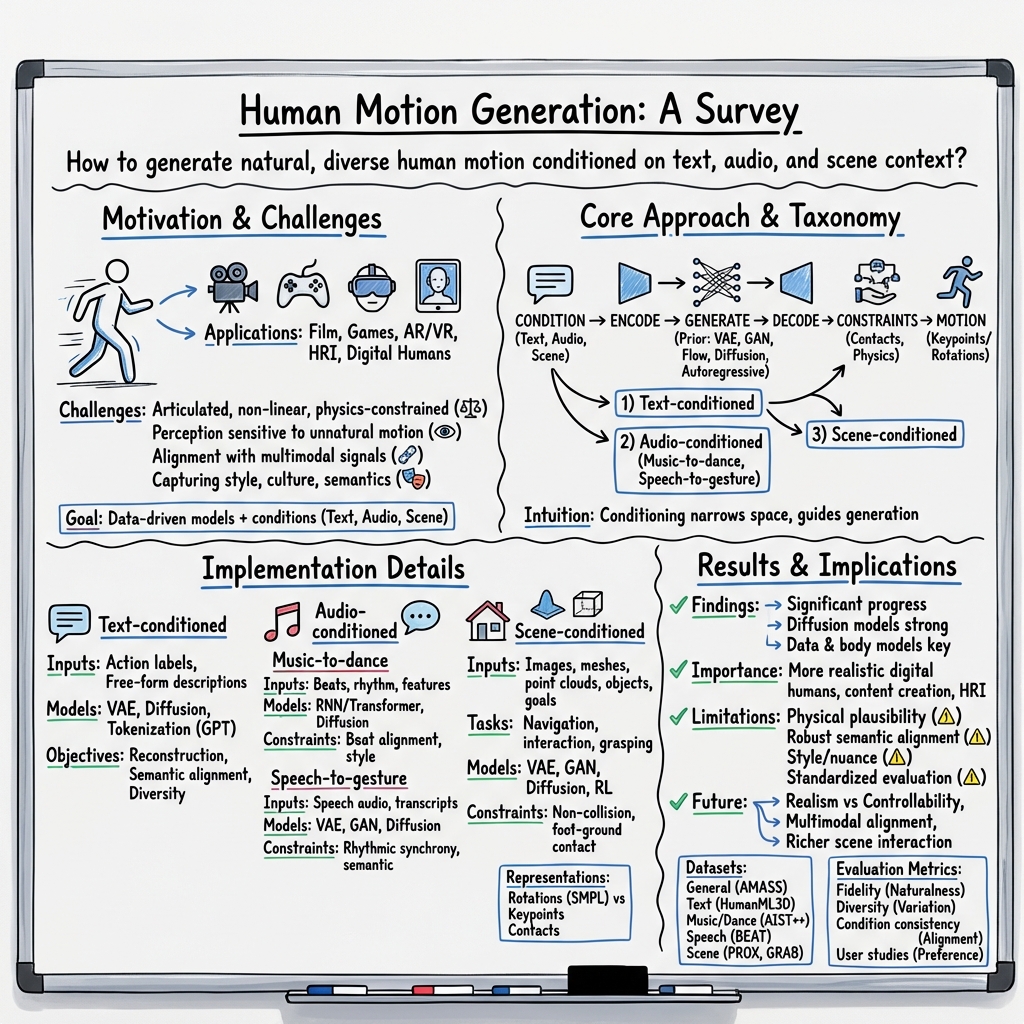

Abstract: Human motion generation aims to generate natural human pose sequences and shows immense potential for real-world applications. Substantial progress has been made recently in motion data collection technologies and generation methods, laying the foundation for increasing interest in human motion generation. Most research within this field focuses on generating human motions based on conditional signals, such as text, audio, and scene contexts. While significant advancements have been made in recent years, the task continues to pose challenges due to the intricate nature of human motion and its implicit relationship with conditional signals. In this survey, we present a comprehensive literature review of human motion generation, which, to the best of our knowledge, is the first of its kind in this field. We begin by introducing the background of human motion and generative models, followed by an examination of representative methods for three mainstream sub-tasks: text-conditioned, audio-conditioned, and scene-conditioned human motion generation. Additionally, we provide an overview of common datasets and evaluation metrics. Lastly, we discuss open problems and outline potential future research directions. We hope that this survey could provide the community with a comprehensive glimpse of this rapidly evolving field and inspire novel ideas that address the outstanding challenges.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

Human Motion Generation: A Survey — Explained Simply

What is this paper about?

This paper is a big, friendly “map” of a fast‑growing research area: teaching computers to create realistic human movements. Think of a digital puppet (a 3D character) that can walk, dance, gesture, or sit naturally. The survey collects and explains recent methods, datasets, and ways to judge quality, especially when the motion should match something else—like a sentence (“a person waves hello”), music (dance), or a 3D room (sitting on a chair without falling through it).

The Main Goal

The paper’s purpose is to summarize what’s been done so far in human motion generation, what works best, what data people use, how results are measured, and what problems still need solving. It focuses on three main “conditions” that guide motion:

- Text (e.g., “a person jumps twice and waves”)

- Audio (music for dance, speech for hand gestures)

- Scene (interacting with objects, walking through rooms)

Key Questions the Paper Answers

Here are the simple questions this survey focuses on:

- How do computers “store” and understand human motion?

- How can we make motion that looks natural and human‑like?

- How do we get a computer to follow instructions from text, music/speech, or the environment?

- What datasets (collections of examples) do researchers use to train these systems?

- How do we measure whether generated motions are good?

- What’s working well now, and what’s still hard?

How Do They Study It?

This is a survey, so the authors don’t run a single experiment. Instead, they:

- Review lots of past papers and organize them by the type of input (text, audio, scene) and by the kind of models used.

- Explain common ways to represent motion, collect data, and evaluate results.

- Compare methods and outline trends over time.

To make the technical parts approachable, here are the main ideas in everyday language:

How motion is represented

- Keypoints: Imagine a stick figure with dots at the joints (like shoulders, elbows, knees). The computer stores the 3D positions of these dots over time.

- Rotations: Instead of dots in space, store the angles of each joint (like how much the elbow is bent). This better respects how real bodies move.

- Body models (like SMPL/SMPL-X): A standard 3D human shape that can move realistically, like a digital mannequin.

How data is collected

- Motion capture (markers on a real person in a studio)

- Markerless capture (estimating motion from regular videos)

- Large datasets: Collections like Human3.6M, AMASS, HumanML3D, AIST++, TED-Gesture, BEAT, and PROX, which contain thousands of motion examples, often with text, music, speech, or scene info.

The main types of “generative” models

Think of these like different “recipe” styles for creating motion:

- Autoregressive models: Predict the next pose step‑by‑step (like writing a story one word at a time).

- VAEs (Variational Autoencoders): Compress motions into a small “code” and learn to decode new examples from that code (like learning the gist of a move and recreating variations).

- GANs (Generative Adversarial Networks): Two networks play a game—one makes fake motions, the other tries to spot fakes—until the fakes look real.

- Normalizing Flows: A flexible way to turn simple noise into realistic motion with exact probability math.

- Diffusion Models: Start from pure noise and “clean it up” step by step until a smooth, believable motion appears. These have recently become very powerful for motion.

What Did They Find?

1) Text‑to‑Motion

- Progress: Models can take sentences and produce matching motions (e.g., “a person waves then crouches”). Better language understanding (using tools like CLIP or LLMs) helps a lot.

- Challenges: Language is vague—“walk happily” vs. “walk tiredly” needs style, speed, and personality. Models must understand these subtleties and generate motions that are both accurate and natural.

2) Audio‑to‑Motion

- Dance from music: Systems can generate dances that follow the beat and style (hip‑hop vs. ballet). Ensuring rhythm sync and variety is key.

- Gestures from speech: Systems create hand and arm movements that match speech rhythm and meaning. Keeping the style of a specific speaker and avoiding repetitive motions is important.

3) Scene‑aware Motion

- Humans interacting with environments (e.g., sitting on a chair, walking around a table) is tricky. Motions must respect physics—no floating, no clipping through furniture, correct foot contact with the ground.

- Some methods combine planning (like “reach the sofa”) with generation, and they add checks for contact and collisions to keep things realistic.

What models work best right now?

- Diffusion models are a standout across many tasks because they make stable, realistic results and handle diversity well.

- VAEs and GANs are still useful, especially when you need speed, control, or certain styles.

- Adding strong text/audio encoders and physics cues improves results.

How do they measure “good”?

Researchers use a mix of automatic scores and human judgments:

- Fidelity: How natural and smooth does it look? Does it match real motion statistics?

- Diversity: Do we get different valid motions for the same input (not always the same walk)?

- Condition consistency: Does the motion truly match the text/music/scene?

- Physical plausibility: Are there proper contacts with the ground and objects? No foot sliding?

- User studies: People watch the motions and rate realism or pick their favorites—still very important.

Data and evaluation gaps

- Datasets are getting bigger, but they can be biased (few subjects, limited actions or cultures).

- Automatic metrics don’t fully capture human judgment; better evaluation standards are needed.

Why This Matters

- Entertainment and media: Quicker, more realistic animation for movies, games, and virtual influencers.

- AR/VR and digital humans: More believable avatars in virtual spaces and social apps.

- Education and culture: Capture and recreate dances and gestures from different traditions.

- Robotics and HCI: Robots and virtual assistants that move in human‑friendly ways and communicate better with body language.

What’s Next? (Implications and Future Impact)

- Combining signals: Systems that can use text + music + scene together for richer control.

- Style and personalization: Matching a user’s dance style or a speaker’s unique gestures.

- Longer, complex actions: Keeping motion believable over minutes, not just seconds.

- Better physics and safety: Motions that follow physical rules and respect surroundings automatically.

- Fairness and access: More diverse data to cover different ages, body types, cultures; easier tools for creators who aren’t experts.

In short, this survey shows that computers are getting much better at “moving like us,” especially with new models like diffusion. There’s still work to do, but the progress is opening doors to more natural digital characters, smarter robots, and creative tools that anyone can use.

Knowledge Gaps

Below is a single, concrete list of unresolved knowledge gaps, limitations, and open questions highlighted or implied by the survey and the current state of human motion generation research.

- Standardized evaluation: Lack of universally accepted, perceptually aligned metrics (across text/audio/scene conditions) and shared leaderboards with fixed splits and protocols that enable apples-to-apples comparisons.

- Human perception correlation: Insufficient evidence that common automatic metrics (FID-like, feature distances, beat scores) correlate with human judgments of naturalness, intent readability, and style.

- Long-horizon generation: Limited support and benchmarks for multi-minute, goal-driven motion with stable posture, stylistic consistency, and narrative structure over long timescales.

- Multi-person interactions: Sparse methods and datasets for coordinated multi-human motion (e.g., group dance, conversational turn-taking, proxemics, social norms, collisions between bodies).

- Compositional conditioning: Under-explored simultaneous conditioning on multiple signals (text + audio + scene + style + constraints) with conflict resolution, prioritization, and editability.

- Fine-grained control: Limited mechanisms to specify and reliably control intensity, tempo, emotion, style, and timing (including keyframe/trajectory constraints) without degrading realism.

- Physical plausibility: Need for integrated, differentiable physics or robust constraint enforcement to ensure balance, contacts, friction, non-penetration, and energetically plausible kinematics.

- Contact-rich interactions: Insufficient modeling of hand–object interaction, manipulation, tool use, and whole-body contacts with scene geometry; scarce datasets with dense contact labels.

- Full-body fidelity: Underrepresentation of high-quality hand, finger, and facial movements aligned with body motion; limited garment/cloth-aware motion models beyond SMPL/SMPL-X bodies.

- Cross-skeleton retargeting: Fragile transfer across skeletons and avatars with different topologies and proportions; missing standardized retargeting evaluation.

- Domain generalization: Poor robustness across datasets and capture modalities (marker-based vs. markerless vs. pseudo-labeled), and to noisy upstream inputs (ASR errors, audio noise, scene recon noise).

- Language ambiguity: Limited handling of compositional, temporal, and figurative language (e.g., multi-sentence narratives, adverbs/intensity modifiers, spatial prepositions) and their alignment to motion segments.

- Audio semantics beyond rhythm: Overfocus on beat/tempo alignment; under-explored alignment to semantics, mood, and phrasing, especially across music genres and speech styles.

- Scene understanding: Limited generalization to novel, cluttered, or partially observed scenes; incomplete exploitation of affordances and object functionality for action selection and planning.

- Uncertainty and calibration: Few models report calibrated uncertainty, multi-modal distributions, or confidence intervals; diversity metrics often fail to reflect true conditional multi-modality.

- Real-time, low-latency deployment: Lack of methods that guarantee interactive rates on-device (AR/VR, HRI), with predictable latency and graceful degradation under compute constraints.

- Personalization and continual learning: Limited frameworks for adaptive, privacy-preserving personalization to a user’s style and body, and for continual updates without catastrophic forgetting.

- Data scale and coverage: Gaps in diversity (culture, age, body shapes, abilities), multilingual text, and long, well-aligned multimodal recordings; licensing/privacy constraints limit open sharing.

- Bias and fairness: Insufficient analysis and mitigation of demographic/cultural biases in datasets and models; absence of fairness-aware evaluation protocols.

- Reproducibility: Inconsistent reporting of preprocessing, skeleton mappings, and hyperparameters; lack of standardized data formats and pipelines for training and evaluation.

- User-study standards: No community-agreed protocols for perceptual studies (sample sizes, criteria, tasks) that make results comparable and reproducible.

- Interpretability: Limited understanding and tooling to inspect generative latent spaces (e.g., factors for style, intent, emotion) or to provide explanations for generated motions.

- Safety for embodiment: Lack of metrics and tests for safety, comfort, and reliability when deploying generated motion on physical robots or assistive devices.

- Energy and compute cost: High training/inference costs for diffusion/LLM-based pipelines; little work on energy-efficient architectures and training strategies.

- Cross-modal retrieval benchmarks: Missing standardized benchmarks for motion–text and motion–audio retrieval/alignment tasks tightly coupled with generation.

- Non-anthropomorphic and stylized characters: Minimal exploration of generation for varied rigs and stylized skeletons (games/animation), including evaluation across topologies.

- Tooling and standards: Need for robust, open tools for skeleton conversion (keypoints ↔ rotations), contact annotations, and interoperable scene–motion formats to facilitate research and deployment.

Glossary

- 3D rotations: A representation of 3D orientation used to parameterize poses. "

Kpts.'' andRot.'' denotes keypoints and 3D rotations, respectively." - Anchor pose: A specified reference body pose used to constrain or guide generation. "Anchor pose"

- Articulated: Composed of linked rigid segments connected by joints (e.g., the human skeleton). "human motion is highly non-linear and articulated"

- Autoregressive models: Sequence models that generate each step conditioned on previous outputs. "Autoregressive models~\cite{AR}"

- Bio-mechanical constraints: Physical limits and anatomical rules governing feasible human movement. "physical and bio-mechanical constraints"

- Biological motion: Motion patterns of living beings as perceived by the visual system. "perceiving biological motion"

- Denoising Diffusion Probabilistic Models (DDPM): Generative models that learn to reverse a noising process to synthesize data. "Denoising Diffusion Probabilistic Models (DDPM)~\cite{DDPM}"

- Foot-ground contact: A constraint/metric concerning when feet make contact with the ground in motion. "Foot-ground contact"

- Foot sliding: An artifact where a planted foot unrealistically moves relative to the ground. "Foot sliding"

- Generative Adversarial Networks (GAN): Generative models trained via adversarial competition between generator and discriminator. "Generative Adversarial Networks (GAN)~\cite{gan}"

- Keypoints: Discrete skeletal joint locations used to represent human pose. "

Kpts.'' andRot.'' denotes keypoints and 3D rotations, respectively." - Kinematics: The study/description of motion (e.g., joint angles and velocities) without considering forces. "slightly unnatural kinematics"

- Marker-based: Motion capture that uses physical markers attached to the body for tracking. "Marker-based"

- Markerless: Motion capture from images/videos without physical markers. "Markerless"

- Mesh: A polygonal surface representation of 3D geometry used for scene conditioning. "Scene (mesh)"

- MoCap: Abbreviation for motion capture, the process of recording human movement. "Unifies 15 marker-based MoCap datasets"

- Motion graph: A graph-based method that stitches motion clips by matching compatible transitions. "Motion Graph"

- Multi-modality: The property that multiple distinct valid motions can satisfy the same condition. "Multi-modality"

- Non-collison score: A metric evaluating how well generated motion avoids collisions with the scene. "Non-collison score"

- Nonverbal communication: Conveying information through body movements, gestures, and posture. "nonverbal communication medium"

- Normalizing Flows: Invertible transformations that map simple distributions to complex ones for generative modeling. "Normalizing Flows~\cite{normalizingflows}"

- Past motion: Previously observed motion sequence provided as contextual input. "Past motion"

- Point cloud: A 3D representation as a set of points in space, often used to represent scenes. "Scene (point cloud)"

- Pseudo-labeling: Creating labels for unlabeled data using model predictions to enable training. "Pseudo-labeling"

- Reinforcement Learning (RL): Learning to act by maximizing cumulative reward, applied to motion planning/control. "RL"

- Scene-conditioned: Motion generation guided by scene context, geometry, or objects. "scene-conditioned human motion generation"

- Style code: A latent vector controlling stylistic attributes of generated motion. "Style code"

- Style prompt: A text instruction specifying desired style in motion generation. "Style prompt"

- Trajectory: The path of the body (e.g., root position) through space over time. "Trajectory"

- Variational Autoencoders (VAE): Probabilistic generative models that learn latent variables via variational inference. "Variational Autoencoders (VAE)~\cite{vae}"

Practical Applications

Overview

This survey consolidates methods, datasets, and evaluation metrics for human motion generation across three main conditioning paradigms: text, audio (speech and music), and scene. Building on these advances (e.g., VAEs, GANs, normalizing flows, diffusion models like MDM, EDGE, SceneDiffuser; human body models like SMPL/SMPL-X; and curated datasets such as HumanML3D, BABEL, AIST++, BEAT, PROX), the following applications translate the paper’s findings into deployable tools and workflows for industry, academia, policy, and daily life.

Immediate Applications

The following applications can be implemented now using surveyed methods and available datasets/tools.

- Human motion previsualization and animation drafting

- Sector: media/entertainment, gaming

- Use: Generate diverse placeholder motions from text prompts (e.g., “walk and wave,” “sit and read”) or action labels to accelerate blocking and previsualization.

- Tools/workflows: Integrate text-conditioned models (TEMOS, MDM, MotionCLIP, T2M-GPT) as plugins for Blender/Maya/Unreal/Unity; leverage HumanML3D/BABEL for prompt templates; use feature-space diversity metrics to QA variations.

- Assumptions/dependencies: Adequate GPU for diffusion models; motion retargeting to rig; editorial review for style consistency; licensing of datasets.

- Crowd and NPC behavior synthesis

- Sector: gaming, simulation

- Use: Automatically populate scenes with varied, natural motions conditioned by text or scene context (e.g., “idle in a lobby,” “browse shelves”).

- Tools/workflows: Batch generation with VAE/diffusion backends (ACTOR, MDM) and scene-conditioned pipelines (HUMANISE, SceneDiffuser); collision/contact checks; foot-sliding metrics for QA.

- Assumptions/dependencies: Path/goal definitions; collision handling; performance constraints in real time.

- Speech-driven gesture generation for virtual presenters and avatars

- Sector: software/communications, education, marketing

- Use: Animate upper-body gestures from live or recorded speech for webinars, lectures, and digital presenters.

- Tools/workflows: Audio-conditioned models (StyleGestures, DiffGesture, GestureDiffuCLIP, LDA) wrapped as OBS/Zoom plugins; style prompts for persona control; beat/semantic consistency metrics.

- Assumptions/dependencies: Low-latency inference (favor VAE/GAN over heavy diffusion for live); cultural appropriateness; microphone/audio quality.

- Music-driven dance synthesis for social media and fitness instruction

- Sector: media/entertainment, fitness

- Use: Generate choreographies from tracks with controllable style and difficulty for short-form content and workout demos.

- Tools/workflows: EDGE, Bailando, DanceFormer; integrate with mobile apps; auto-beat alignment; content libraries curated by genre (AIST++, PhantomDance).

- Assumptions/dependencies: Music licensing; style control for brand alignment; safety for fitness (coach oversight).

- Scene-aware motion for AR/VR staging and furniture/e-commerce demos

- Sector: e-commerce, real estate, AR/VR

- Use: Synthesize interactions with chairs, tables, and rooms (sitting, reaching, navigating) to showcase products or stage environments.

- Tools/workflows: Scene-conditioned pipelines (GOAL, SAGA, HUMANISE, SceneDiffuser); point-cloud/mesh inputs; non-collision and contact metrics.

- Assumptions/dependencies: Accurate scene reconstruction (point cloud/mesh); physics/collision systems; device AR capabilities.

- Motion augmentation and cleanup in post-production

- Sector: media/gaming/software tooling

- Use: Fill missing frames, smooth artifacts, and reduce foot sliding; harmonize motion with scene contact constraints.

- Tools/workflows: Diffusion-based inpainting/denoising; physical plausibility checks (contact/ground alignment); integration into animation editors.

- Assumptions/dependencies: Rig compatibility; evaluation metrics guiding acceptance; human-in-the-loop review.

- Synthetic motion dataset generation for model training

- Sector: academia, robotics, computer vision

- Use: Augment training sets for action recognition, pose estimation, and trajectory prediction.

- Tools/workflows: Action-conditioned generators (Action2Motion, ACTOR, PoseGPT); feature-space coverage/diversity metrics for dataset curation; domain randomization.

- Assumptions/dependencies: Domain gap vs. real-world; annotation schemas; ethical/data governance for synthetic content labeling.

- HRI behavior prototyping with text/audio prompts

- Sector: robotics

- Use: Rapidly prototype human-like movement patterns for social robots and digital twins in simulation.

- Tools/workflows: Cross-modal generators (LDA, UDE) with ROS/Gazebo integration; motion retargeting to robot kinematics; safety constraints.

- Assumptions/dependencies: Retargeting fidelity; task-specific fine-tuning; safety validation in real environments.

- Virtual meeting avatars with expressive gestures

- Sector: communications, enterprise software

- Use: Auto-gesture generation from live speech to improve engagement in video calls.

- Tools/workflows: Lightweight VAE/GAN gesture modules; speaker/style conditioning (BEAT, ZEGGS); latency control; client-side SDK.

- Assumptions/dependencies: Privacy and consent; cultural sensitivity; device performance.

- Educational content authoring with motion scaffolds

- Sector: education

- Use: Generate instructor avatars and Gesture-enhanced explanations aligned to speech/music for interactive lessons.

- Tools/workflows: Audio-conditioned gesture modules; quality checks via beat/semantic alignment; LMS integration.

- Assumptions/dependencies: Pedagogical review for correctness; accessibility compliance; language coverage.

- Marketing/product demo digital humans

- Sector: marketing/commerce

- Use: Scripted motion sequences aligned to narration and product interactions.

- Tools/workflows: Text/audio-conditioned generation; style prompts for brand persona; scene-conditioned object interactions.

- Assumptions/dependencies: Brand guidelines; legal review; content QA.

- Benchmarks and QA pipelines for motion fidelity and consistency

- Sector: academia, industry standards

- Use: Adopt survey’s metrics (feature-space realism, contact/foot sliding, text/audio/scene consistency, user studies) as internal QA gates.

- Tools/workflows: Automated metric suites; dashboards; regression testing for releases.

- Assumptions/dependencies: Metric calibration to specific domains; human raters for subjective checks.

Long-Term Applications

These applications require further research, scaling, standardized tooling, or validation.

- Generalist embodied agents with language-conditioned motion in real homes

- Sector: robotics, smart home

- Use: Agents performing complex tasks (e.g., “set the table,” “tidy the living room”) with robust contact and scene understanding.

- Tools/workflows: Combine diffusion planning (SceneDiffuser), RL for control (GAMMA), and scene-conditioned motion; multi-modal grounding; physics engines.

- Assumptions/dependencies: Large-scale interaction datasets; reliable perception; safety certification; on-device compute.

- Cross-cultural, emotionally aware gestures for social AI

- Sector: HCI/communications, policy

- Use: Generate gestures aligned to language, emotion, and cultural norms across locales and contexts.

- Tools/workflows: Expanded datasets (BEAT-like multilingual corpora), style prompt libraries, semantic alignment modules.

- Assumptions/dependencies: Ethical review; cultural data depth; personalization without bias.

- Clinical rehabilitation and ergonomics support

- Sector: healthcare, industrial safety

- Use: Personalized motion synthesis for exercise guidance; ergonomic posture training and risk assessment.

- Tools/workflows: Scene-conditioned contact modeling; physical plausibility checks; clinician-in-the-loop validation; sensor feedback.

- Assumptions/dependencies: Clinical trials; liability frameworks; integration with medical devices.

- Sign language generation and accessibility avatars

- Sector: accessibility, education

- Use: High-fidelity sign language avatars for media and live communication.

- Tools/workflows: Specialized linguistic datasets; hand/face/body integrated models (SMPL-X/MANO/FLAME); semantic correctness evaluators.

- Assumptions/dependencies: Extensive linguistic coverage; community validation; regulatory compliance.

- Human-robot co-working motion planning in industry

- Sector: manufacturing, logistics

- Use: Predictive human motion and safe co-manipulation planning to reduce collisions and improve throughput.

- Tools/workflows: Scene-conditioned prediction/generation blended with trajectory planners; safety monitors; digital twin simulation.

- Assumptions/dependencies: Standards for safety and certification; real-time guarantees; domain adaptation.

- End-to-end media pipelines from script/audio/scene to animation

- Sector: media/entertainment

- Use: Automated generation of blocking, gestures, dance, and scene interactions from script and audio, editable by artists.

- Tools/workflows: Orchestrated multi-model pipeline (text/audio/scene-conditioned modules) with editorial interfaces; standardized metrics for quality.

- Assumptions/dependencies: Creative control handoff; interoperability standards; compute costs and caching strategies.

- Virtual try-on and realistic product interaction

- Sector: commerce, fashion

- Use: Dynamic try-on experiences with realistic motions (walking, turning, interacting with accessories).

- Tools/workflows: Motion-conditioned garment simulation; avatar retargeting; scene-aware contact modeling.

- Assumptions/dependencies: Accurate body/garment models; physics; latency constraints for web/mobile.

- Telepresence robots with natural expressive motion

- Sector: robotics, enterprise, healthcare

- Use: Robots mirroring speech-driven gestures and scene-appropriate movements during remote interactions.

- Tools/workflows: Speech-conditioned gesture generation retargeted to robot kinematics; safe contact handling; user preference learning.

- Assumptions/dependencies: Hardware constraints; safety assurance; personalization.

- Policy and standards for synthetic motion governance

- Sector: policy, standards bodies

- Use: Watermarking synthetic motion, disclosure guidelines, dataset governance, and fairness audits.

- Tools/workflows: Provenance metadata; standardized evaluation suites; best-practice documentation.

- Assumptions/dependencies: Multi-stakeholder consensus; regulatory adoption; international harmonization.

- Lifelong personalization of digital humans

- Sector: software, AR/VR, gaming

- Use: Avatars that learn personal style over time across contexts (speech, music, scene).

- Tools/workflows: Continual learning frameworks; style code management; privacy-preserving personalization.

- Assumptions/dependencies: On-device training; consent and data retention policies; robustness to drift.

- Training simulations for soft skills and public speaking

- Sector: enterprise training, education

- Use: Practice environments with responsive audience avatars and feedback on gesture/speech alignment.

- Tools/workflows: Audio-conditioned gesture generation; consistency and preference metrics for feedback; scenario authoring tools.

- Assumptions/dependencies: Validated feedback metrics; user acceptance; domain-specific content libraries.

Common assumptions and dependencies across applications

- Data and models: Availability of high-quality motion/scene/speech datasets (e.g., BABEL, HumanML3D, AIST++, BEAT, PROX/HUMANISE), and robust generative backends (diffusion/VAEs/GANs) for target domains.

- Physical plausibility: Reliable contact and collision handling; foot-sliding mitigation; scene understanding fidelity.

- Performance: Real-time constraints favor lighter models or edge acceleration; diffusion models may need distillation or caching.

- Integration: Retargeting to rigs/avatars/robots; interoperability with engines (Unity/Unreal), ROS, and DCC tools.

- Ethics and policy: Cultural appropriateness, consent for personal data, labeling/watermarking of synthetic motion, and IP/licensing compliance.

- Evaluation: Adoption of standardized metrics (fidelity, diversity, condition consistency, physical plausibility) and human-in-the-loop quality checks.

Collections

Sign up for free to add this paper to one or more collections.