MorphPiece: A Linguistic Tokenizer for Large Language Models (2307.07262v2)

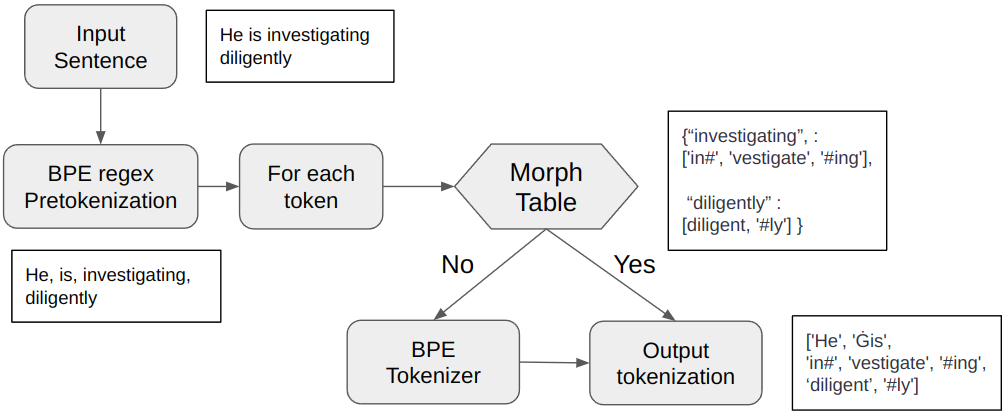

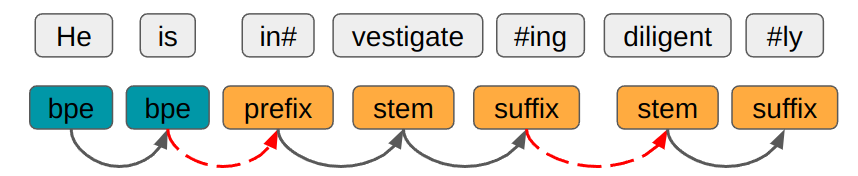

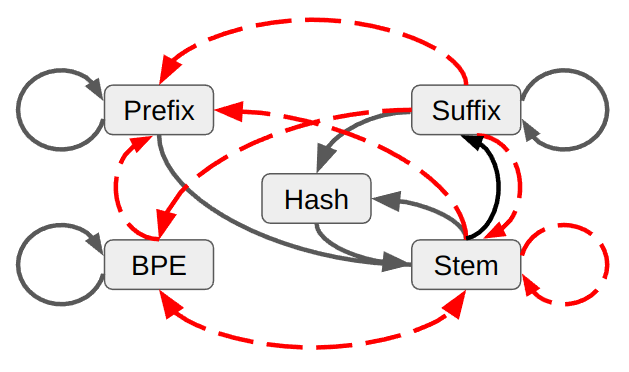

Abstract: Tokenization is a critical part of modern NLP pipelines. However, contemporary tokenizers for LLMs are based on statistical analysis of text corpora, with little consideration of linguistic features. I propose a linguistically motivated tokenization scheme, MorphPiece, which is based partly on morphological segmentation of the underlying text. A GPT-style causal LLM trained with this tokenizer (called MorphGPT) shows comparable or superior performance on a variety of supervised and unsupervised NLP tasks, compared to the OpenAI GPT-2 model. Specifically, I evaluated MorphGPT on language modeling tasks, on zero-shot performance on the GLUE benchmark with various prompt templates, and on the Massive Text Embedding Benchmark (MTEB) for supervised and unsupervised performance, and lastly compared it with another morphological tokenization scheme (FLOTA, Hoffmann et al., 2022). I find that the model trained on MorphPiece outperforms GPT-2 on most evaluations, at times by a considerable margin, despite being trained for about half the training iterations.

References:

- Evaluating various tokenizers for Arabic text classification. CoRR, abs/2106.07540, 2021. URL https://arxiv.org/abs/2106.07540.

- AraBERT: Transformer-based model for Arabic language understanding. CoRR, abs/2003.00104, 2020. URL https://arxiv.org/abs/2003.00104.

- An evaluation of two vocabulary reduction methods for neural machine translation. In Proceedings of the 13th Conference of the Association for Machine Translation in the Americas (Volume 1: Research Track), pp. 97–110, Boston, MA, March 2018. Association for Machine Translation in the Americas. URL https://aclanthology.org/W18-1810.

- PromptSource: An integrated development environment and repository for natural language prompts. In Basile, V., Kozareva, Z., and Stajner, S. (eds.), Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, pp. 93–104, Dublin, Ireland, May 2022. Association for Computational Linguistics. doi: 10.18653/v1/2022.acl-demo.9. URL https://aclanthology.org/2022.acl-demo.9.

- Meaningless yet meaningful: Morphology grounded subword-level NMT. In Proceedings of the Second Workshop on Subword/Character LEvel Models, pp. 55–60, New Orleans, June 2018. Association for Computational Linguistics. doi: 10.18653/v1/W18-1207. URL https://aclanthology.org/W18-1207.

- MorphyNet: a large multilingual database of derivational and inflectional morphology. In Proceedings of the 18th SIGMORPHON Workshop on Computational Research in Phonetics, Phonology, and Morphology, pp. 39–48, Online, August 2021. Association for Computational Linguistics. doi: 10.18653/v1/2021.sigmorphon-1.5. URL https://aclanthology.org/2021.sigmorphon-1.5.

- The SIGMORPHON 2022 shared task on morpheme segmentation. In Proceedings of the 19th SIGMORPHON Workshop on Computational Research in Phonetics, Phonology, and Morphology, pp. 103–116, Seattle, Washington, July 2022. Association for Computational Linguistics. doi: 10.18653/v1/2022.sigmorphon-1.11. URL https://aclanthology.org/2022.sigmorphon-1.11.

- Enriching word vectors with subword information. CoRR, abs/1607.04606, 2016. URL http://arxiv.org/abs/1607.04606.

- Byte pair encoding is suboptimal for language model pretraining. In Findings of the Association for Computational Linguistics: EMNLP 2020, pp. 4617–4624, Online, November 2020. Association for Computational Linguistics. doi: 10.18653/v1/2020.findings-emnlp.414. URL https://aclanthology.org/2020.findings-emnlp.414.

- Language models are few-shot learners. In Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., and Lin, H. (eds.), Advances in Neural Information Processing Systems, volume 33, pp. 1877–1901. Curran Associates, Inc., 2020. URL https://proceedings.neurips.cc/paper/2020/file/1457c0d6bfcb4967418bfb8ac142f64a-Paper.pdf.

- A joint model of orthography and morphological segmentation. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 664–669, San Diego, California, June 2016. Association for Computational Linguistics. doi: 10.18653/v1/N16-1080. URL https://aclanthology.org/N16-1080.

- Are all languages equally hard to language-model? In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), pp. 536–541, New Orleans, Louisiana, June 2018. Association for Computational Linguistics. doi: 10.18653/v1/N18-2085. URL https://aclanthology.org/N18-2085.

- Unsupervised morphology induction using Morfessor. In FSMNLP, volume 4002 of Lecture Notes in Computer Science, pp. 300–301. Springer, 2005.

- How much does tokenization affect neural machine translation? CoRR, abs/1812.08621, 2018. URL http://arxiv.org/abs/1812.08621.

- Falcon, W. and The PyTorch Lightning team. PyTorch Lightning, March 2019. URL https://github.com/Lightning-AI/lightning.

- OpenWebText corpus. http://Skylion007.github.io/OpenWebTextCorpus, 2019.

- Morfessor EM+Prune: Improved subword segmentation with expectation maximization and pruning. In Proceedings of the Twelfth Language Resources and Evaluation Conference, pp. 3944–3953, Marseille, France, May 2020. European Language Resources Association. ISBN 979-10-95546-34-4. URL https://aclanthology.org/2020.lrec-1.486.

- DagoBERT: Generating derivational morphology with a pretrained language model. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, 2020.

- Superbizarre is not superb: Improving BERT’s interpretations of complex words with derivational morphology. CoRR, abs/2101.00403, 2021. URL https://arxiv.org/abs/2101.00403.

- An embarrassingly simple method to mitigate undesirable properties of pretrained language model tokenizers. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, 2022.

- DNABERT: pre-trained Bidirectional Encoder Representations from Transformers model for DNA-language in genome. Bioinformatics, 37(15):2112–2120, February 2021. ISSN 1367-4803. doi: 10.1093/bioinformatics/btab083. URL https://doi.org/10.1093/bioinformatics/btab083.

- Adam: A method for stochastic optimization. In Bengio, Y. and LeCun, Y. (eds.), 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, 2015. URL http://arxiv.org/abs/1412.6980.

- Kudo, T. Subword regularization: Improving neural network translation models with multiple subword candidates. CoRR, abs/1804.10959, 2018. URL http://arxiv.org/abs/1804.10959.

- Morpho Challenge 2005–2010: Evaluations and results. In Proceedings of the 11th Meeting of the ACL Special Interest Group on Computational Morphology and Phonology, pp. 87–95, Uppsala, Sweden, July 2010. Association for Computational Linguistics. URL https://aclanthology.org/W10-2211.

- MEAL: Stable and active learning for few-shot prompting, 2023.

- Improving language model of human genome for DNA-protein binding prediction based on task-specific pre-training. Interdisciplinary Sciences: Computational Life Sciences, 15(1):32–43, March 2023. ISSN 1913-2751. doi: 10.1007/s12539-022-00537-9. URL https://doi.org/10.1007/s12539-022-00537-9.

- Morphological and language-agnostic word segmentation for NMT. CoRR, abs/1806.05482, 2018. URL http://arxiv.org/abs/1806.05482.

- Building a large annotated corpus of English: The Penn Treebank. Computational Linguistics, 19(2):313–330, 1993. URL https://aclanthology.org/J93-2004.

- Using morphological knowledge in open-vocabulary neural language models. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), pp. 1435–1445, New Orleans, Louisiana, June 2018. Association for Computational Linguistics. doi: 10.18653/v1/N18-1130. URL https://aclanthology.org/N18-1130.

- Distributed representations of words and phrases and their compositionality. CoRR, abs/1310.4546, 2013. URL http://arxiv.org/abs/1310.4546.

- MTEB: Massive text embedding benchmark. In Vlachos, A. and Augenstein, I. (eds.), Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics, pp. 2014–2037, Dubrovnik, Croatia, May 2023. Association for Computational Linguistics. doi: 10.18653/v1/2023.eacl-main.148. URL https://aclanthology.org/2023.eacl-main.148.

- Morphological word segmentation on agglutinative languages for neural machine translation. CoRR, abs/2001.01589, 2020. URL http://arxiv.org/abs/2001.01589.

- The LAMBADA dataset: Word prediction requiring a broad discourse context. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 1525–1534, Berlin, Germany, August 2016. Association for Computational Linguistics. doi: 10.18653/v1/P16-1144. URL https://aclanthology.org/P16-1144.

- GloVe: Global vectors for word representation. In Empirical Methods in Natural Language Processing (EMNLP), pp. 1532–1543, 2014. URL http://www.aclweb.org/anthology/D14-1162.

- Deep contextualized word representations. CoRR, abs/1802.05365, 2018. URL http://arxiv.org/abs/1802.05365.

- V-measure: A conditional entropy-based external cluster evaluation measure. In Eisner, J. (ed.), Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL), pp. 410–420, Prague, Czech Republic, June 2007. Association for Computational Linguistics. URL https://aclanthology.org/D07-1043.

- The effectiveness of morphology-aware segmentation in low-resource neural machine translation. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Student Research Workshop, pp. 164–174, Online, April 2021. Association for Computational Linguistics. doi: 10.18653/v1/2021.eacl-srw.22. URL https://aclanthology.org/2021.eacl-srw.22.

- Japanese and Korean voice search. In 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5149–5152, 2012.

- AlephBERT: Language model pre-training and evaluation from sub-word to sentence level. In Muresan, S., Nakov, P., and Villavicencio, A. (eds.), Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 46–56, Dublin, Ireland, May 2022. Association for Computational Linguistics. doi: 10.18653/v1/2022.acl-long.4. URL https://aclanthology.org/2022.acl-long.4.

- Neural machine translation of rare words with subword units. CoRR, abs/1508.07909, 2015. URL http://arxiv.org/abs/1508.07909.

- Morfessor 2.0: Toolkit for statistical morphological segmentation. In Proceedings of the Demonstrations at the 14th Conference of the European Chapter of the Association for Computational Linguistics, pp. 21–24, Gothenburg, Sweden, April 2014. Association for Computational Linguistics. doi: 10.3115/v1/E14-2006. URL https://aclanthology.org/E14-2006.

- Super-convergence: Very fast training of residual networks using large learning rates. CoRR, abs/1708.07120, 2017. URL http://arxiv.org/abs/1708.07120.

- Impact of tokenization on language models: An analysis for Turkish. ACM Trans. Asian Low-Resour. Lang. Inf. Process., 22(4), March 2023. ISSN 2375-4699. doi: 10.1145/3578707. URL https://doi.org/10.1145/3578707.

- GLUE: A multi-task benchmark and analysis platform for natural language understanding. CoRR, abs/1804.07461, 2018. URL http://arxiv.org/abs/1804.07461.

- HuggingFace’s Transformers: State-of-the-art natural language processing. CoRR, abs/1910.03771, 2019. URL http://arxiv.org/abs/1910.03771.

- Zhou, G. Morphological zero-shot neural machine translation. Master’s thesis, School of Informatics, University of Edinburgh, 2018.
