Prompt Middleware: Mapping Prompts for Large Language Models to UI Affordances

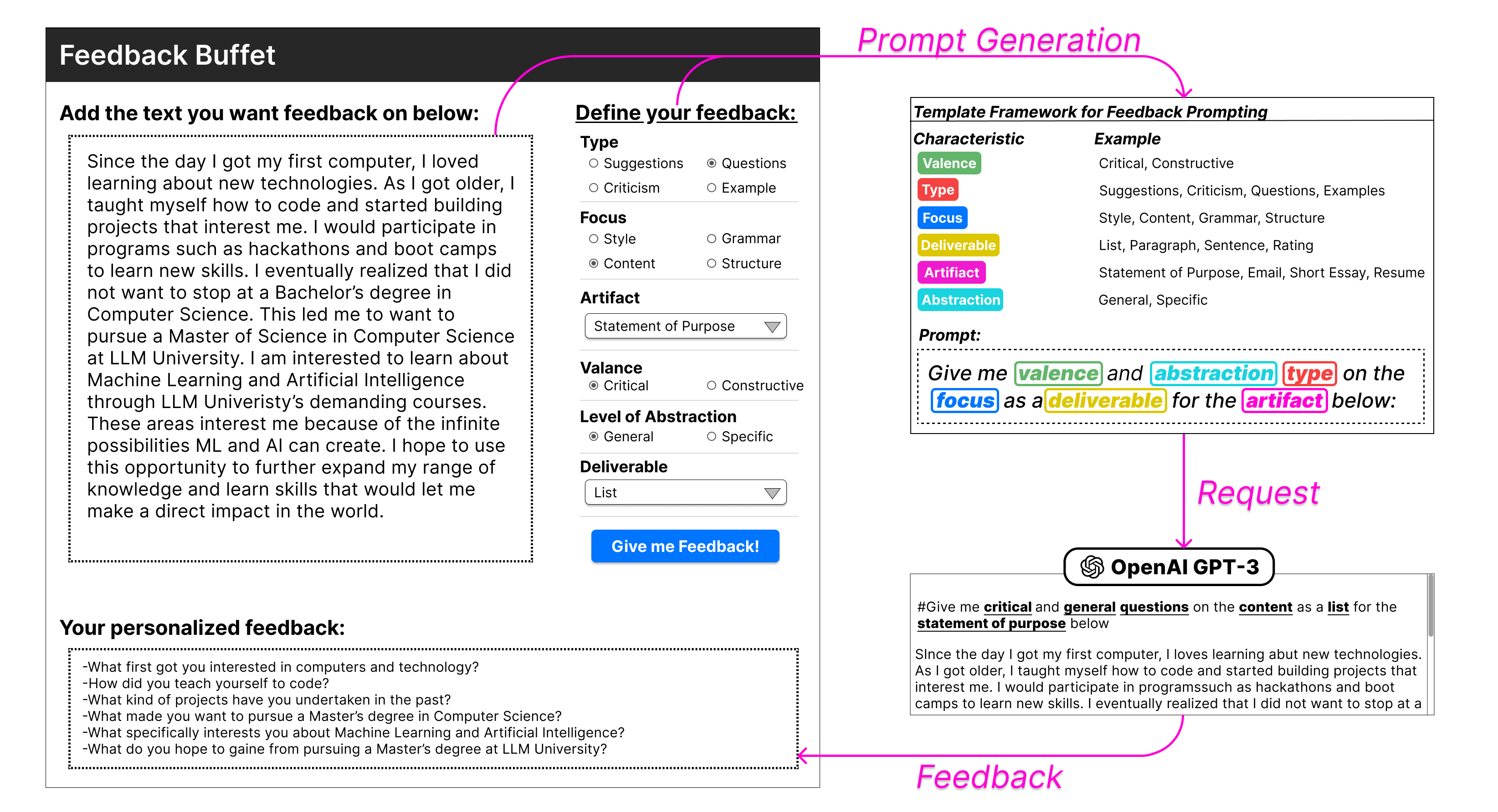

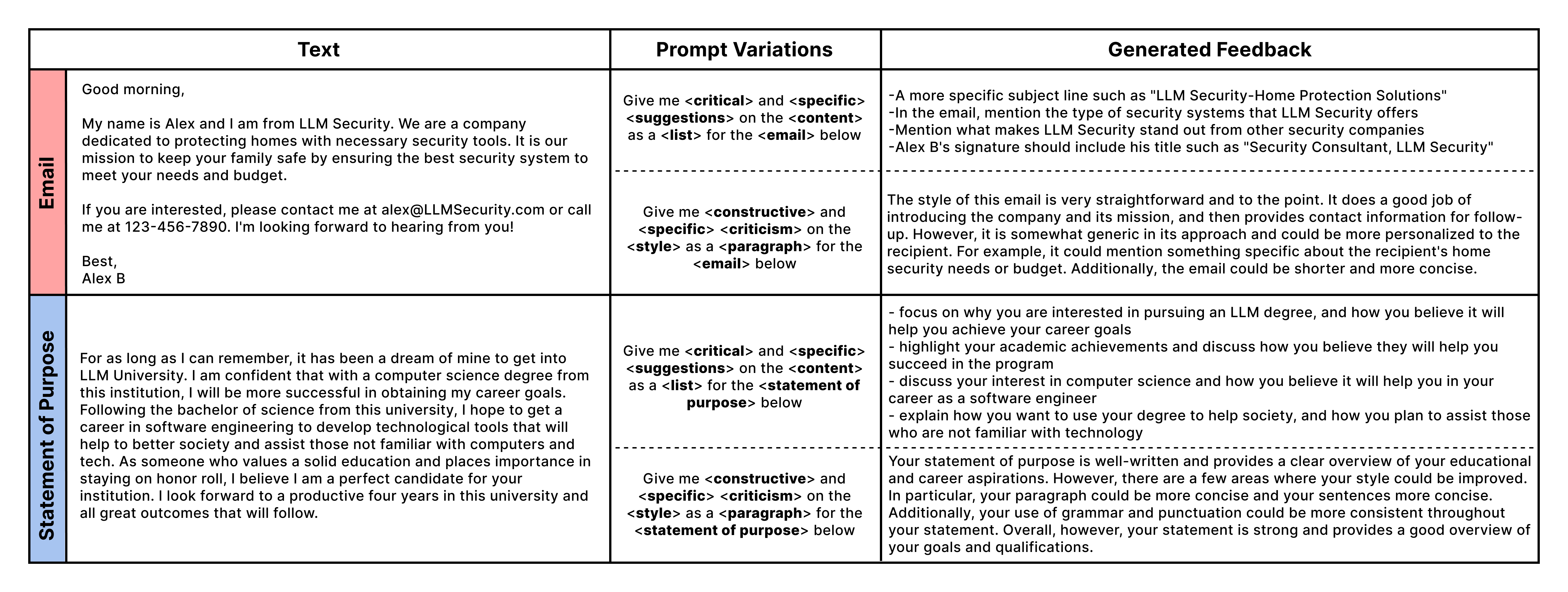

Abstract: To help users do complex work, researchers have developed techniques to integrate AI and human intelligence into user interfaces (UIs). The recent introduction of large language models (LLMs), which generate text in response to a natural language prompt, creates new opportunities to integrate them into UIs. We present Prompt Middleware, a framework for generating prompts for LLMs based on UI affordances. These include prompts that are predefined by experts (static prompts), generated from templates with fill-in options in the UI (template-based prompts), or created from scratch (free-form prompts). We demonstrate this framework with FeedbackBuffet, a writing assistant that automatically generates feedback based on a user's text input. Inspired by prior research showing that templates can help non-experts perform more like experts, FeedbackBuffet uses template-based prompt middleware to let feedback seekers specify the types of feedback they want to receive as options in a UI. These options are composed using a template to form a feedback request prompt to GPT-3. We conclude with a discussion of how Prompt Middleware can help developers integrate LLMs into UIs.
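To make the template-based case concrete, the following is a minimal sketch of how UI selections might be composed into a feedback request prompt. It is not FeedbackBuffet's actual implementation: the template wording, the option names (`tone`, `aspect`, `form`), their allowed values, and the function `build_prompt` are all assumptions made for illustration, and the call to the language model is left out.

```python
from string import Template
from typing import Mapping

# Hypothetical template text; the paper's real prompt wording is not given here.
FEEDBACK_TEMPLATE = Template(
    "Give $tone feedback on the $aspect of the following text, "
    "phrased as $form.\n\nText: $text"
)

# Hypothetical UI affordances, e.g. the choices offered by three dropdown menus.
UI_OPTIONS = {
    "tone": ["positive", "critical", "neutral"],
    "aspect": ["clarity", "structure", "argument"],
    "form": ["suggestions", "questions"],
}


def build_prompt(selections: Mapping[str, str], text: str) -> str:
    """Compose a prompt from UI selections, rejecting values the UI does not offer."""
    chosen = {}
    for field, allowed in UI_OPTIONS.items():
        value = selections.get(field)
        if value not in allowed:
            raise ValueError(f"invalid or missing option for {field!r}: {value!r}")
        chosen[field] = value
    # Only validated fields and the user's text reach the template.
    return FEEDBACK_TEMPLATE.substitute(text=text, **chosen)


if __name__ == "__main__":
    prompt = build_prompt(
        {"tone": "critical", "aspect": "clarity", "form": "questions"},
        "My draft paragraph.",
    )
    print(prompt)
```

In this sketch a static prompt would correspond to a fixed string with no fields, and a free-form prompt to passing the user's own text straight through. Restricting each field to the values the UI offers keeps the expert-written template in control of the prompt's structure.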

