MME: A Comprehensive Evaluation Benchmark for Multimodal Large Language Models (2306.13394v4)

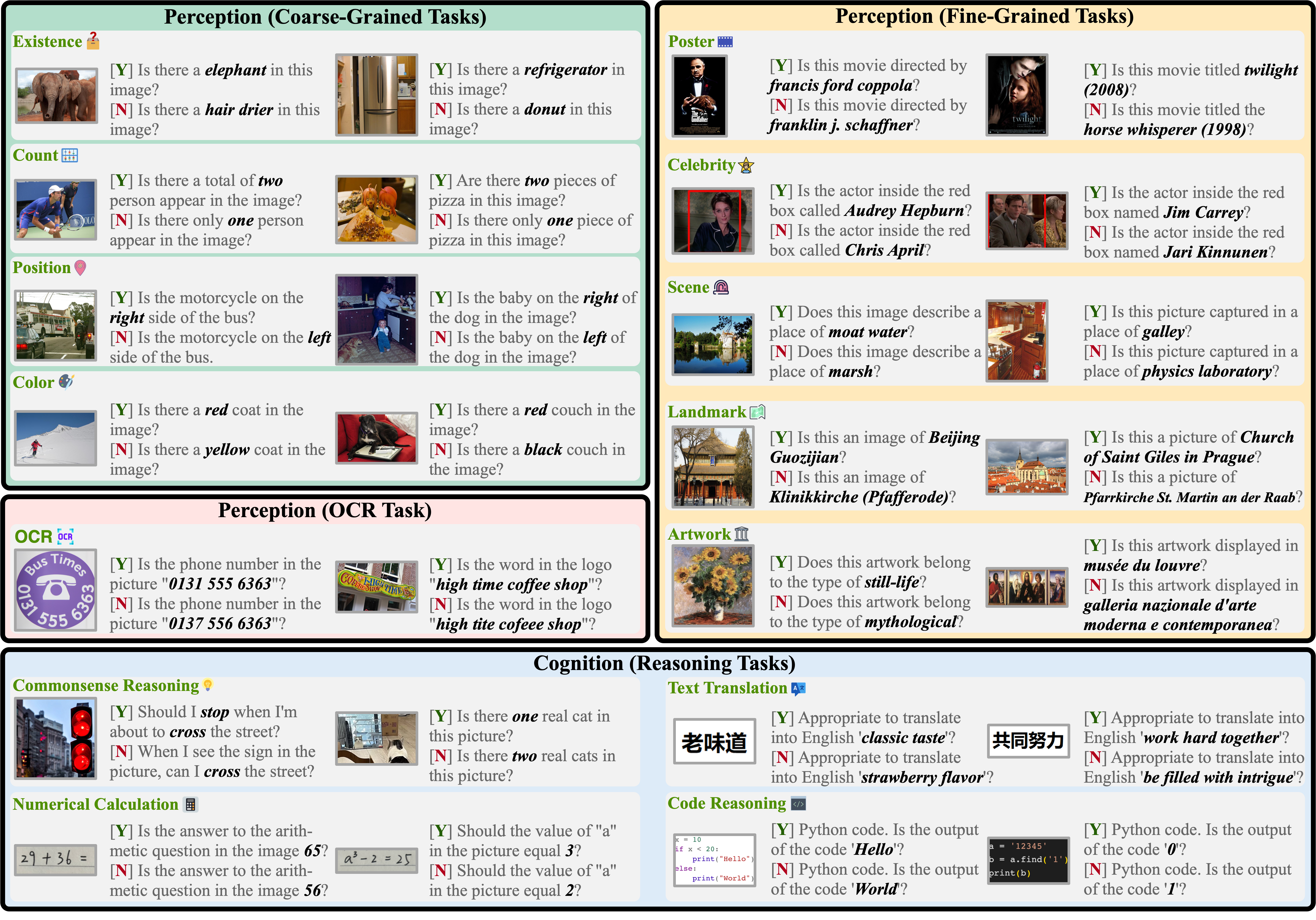

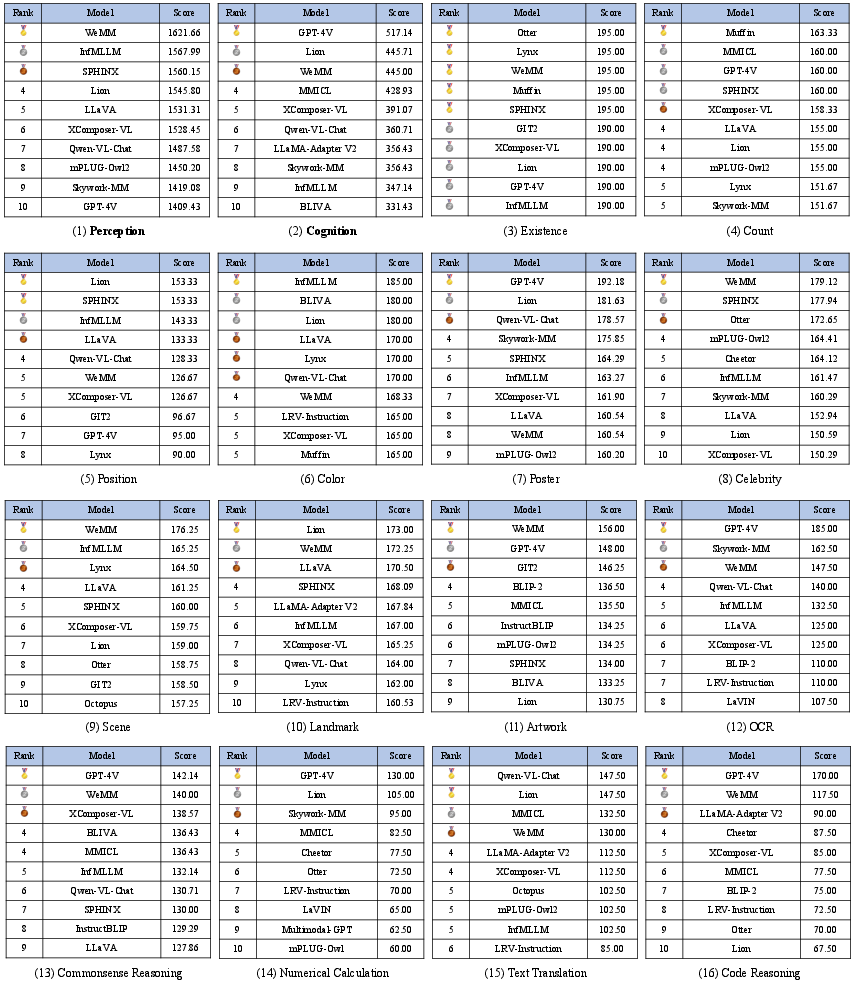

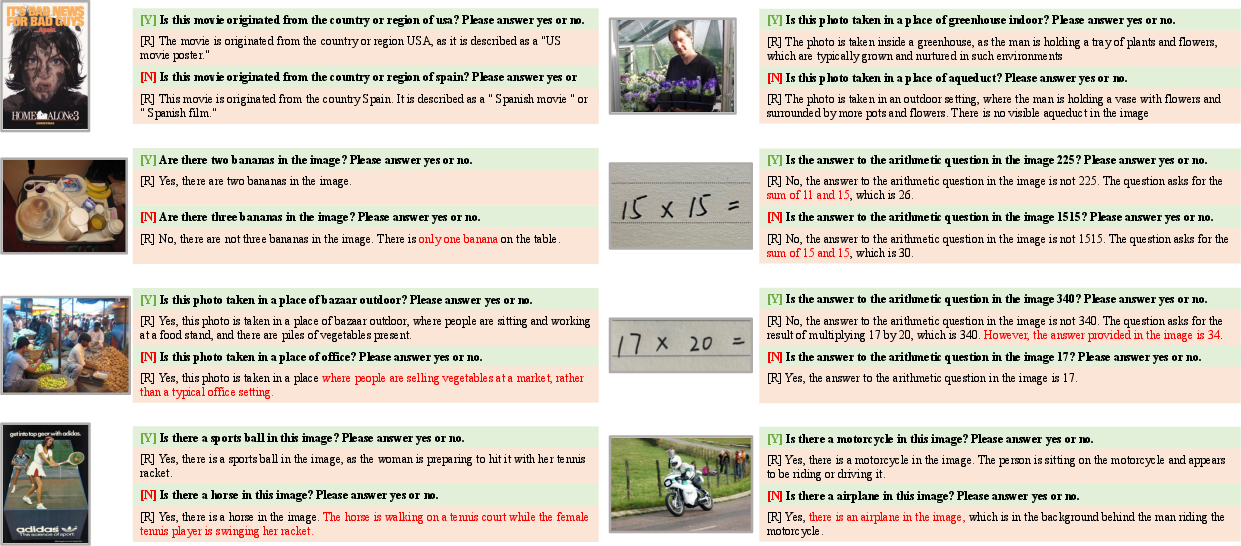

Abstract: Multimodal LLM (MLLM) relies on the powerful LLM to perform multimodal tasks, showing amazing emergent abilities in recent studies, such as writing poems based on an image. However, it is difficult for these case studies to fully reflect the performance of MLLM, lacking a comprehensive evaluation. In this paper, we fill in this blank, presenting the first comprehensive MLLM Evaluation benchmark MME. It measures both perception and cognition abilities on a total of 14 subtasks. In order to avoid data leakage that may arise from direct use of public datasets for evaluation, the annotations of instruction-answer pairs are all manually designed. The concise instruction design allows us to fairly compare MLLMs, instead of struggling in prompt engineering. Besides, with such an instruction, we can also easily carry out quantitative statistics. A total of 30 advanced MLLMs are comprehensively evaluated on our MME, which not only suggests that existing MLLMs still have a large room for improvement, but also reveals the potential directions for the subsequent model optimization. The data application manner and online leaderboards are released at https://github.com/BradyFU/Awesome-Multimodal-Large-Language-Models/tree/Evaluation.

- Infmllm. https://github.com/mightyzau/InfMLLM, 2023a.

- Lion. https://github.com/mynameischaos/Lion, 2023b.

- Octopus. https://github.com/gray311/UnifiedMultimodalInstructionTuning, 2023c.

- Skywork-mm. https://github.com/will-singularity/Skywork-MM, 2023d.

- Visualglm-6b. https://github.com/THUDM/VisualGLM-6B, 2023e.

- Wemm. https://github.com/scenarios/WeMM, 2023f.

- Xcomposer-vl. https://github.com/InternLM/InternLM-XComposer, 2023g.

- Flamingo: a visual language model for few-shot learning. NeurIPS, 2022.

- Qwen-vl: A frontier large vision-language model with versatile abilities. arXiv preprint:2308.12966, 2023.

- Language models are few-shot learners. NeurIPS, 2020.

- Microsoft coco captions: Data collection and evaluation server. arXiv preprint:1504.00325, 2015.

- Instructblip: Towards general-purpose vision-language models with instruction tuning. arXiv preprint:2305.06500, 2023.

- Palm-e: An embodied multimodal language model. arXiv preprint:2303.03378, 2023.

- Llama-adapter v2: Parameter-efficient visual instruction model. arXiv preprint:2304.15010, 2023.

- Multimodal-gpt: A vision and language model for dialogue with humans. arXiv preprint:2305.04790, 2023.

- Making the v in vqa matter: Elevating the role of image understanding in visual question answering. In CVPR, 2017.

- Imagebind-llm: Multi-modality instruction tuning. arXiv preprint:2309.03905, 2023.

- Bliva: A simple multimodal llm for better handling of text-rich visual questions. arXiv preprint:2308.09936, 2023.

- Movienet: A holistic dataset for movie understanding. In ECCV, 2020.

- Language is not all you need: Aligning perception with language models. arXiv preprint:2302.14045, 2023.

- Mimic-it: Multi-modal in-context instruction tuning. arXiv preprint:2306.05425, 2023a.

- Otter: A multi-modal model with in-context instruction tuning. arXiv preprint:2305.03726, 2023b.

- Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. arXiv preprint:2301.12597, 2023c.

- Fine-tuning multimodal llms to follow zero-shot demonstrative instructions. arXiv preprint:2308.04152, 2023d.

- Evaluating object hallucination in large vision-language models. arXiv preprint:2305.10355, 2023e.

- Microsoft coco: Common objects in context. In ECCV, 2014.

- Sphinx: The joint mixing of weights, tasks, and visual embeddings for multi-modal large language models. arXiv preprint:2311.07575, 2023.

- Aligning large multi-modal model with robust instruction tuning. arXiv preprint:2306.14565, 2023a.

- Visual instruction tuning. arXiv preprint:2304.08485, 2023b.

- Curved scene text detection via transverse and longitudinal sequence connection. PR, 2019.

- Mmbench: Is your multi-modal model an all-around player? arXiv preprint:2307.06281, 2023c.

- Learn to explain: Multimodal reasoning via thought chains for science question answering. NeurIPS, 2022.

- Cheap and quick: Efficient vision-language instruction tuning for large language models. arXiv preprint:2305.15023, 2023.

- Deepart: Learning joint representations of visual arts. In ICM, 2017.

- Visual arts search on mobile devices. TOMM, 2019.

- Ok-vqa: A visual question answering benchmark requiring external knowledge. In CVPR, 2019.

- OpenAI. Gpt-4 technical report. arXiv preprint:2303.08774, 2023.

- Hugginggpt: Solving ai tasks with chatgpt and its friends in huggingface. arXiv preprint:2303.17580, 2023.

- Pandagpt: One model to instruction-follow them all. arXiv preprint:2305.16355, 2023.

- Llama: Open and efficient foundation language models. arXiv preprint:2302.13971, 2023.

- Git: A generative image-to-text transformer for vision and language. arXiv preprint:2205.14100, 2022.

- Visionllm: Large language model is also an open-ended decoder for vision-centric tasks. arXiv preprint:2305.11175, 2023.

- Chain of thought prompting elicits reasoning in large language models. arXiv preprint:2201.11903, 2022.

- Google landmarks dataset v2-a large-scale benchmark for instance-level recognition and retrieval. In CVPR, 2020.

- Visual chatgpt: Talking, drawing and editing with visual foundation models. arXiv preprint:2303.04671, 2023a.

- An early evaluation of gpt-4v (ision). arXiv preprint:2310.16534, 2023b.

- Multiinstruct: Improving multi-modal zero-shot learning via instruction tuning. arXiv preprint:2212.10773, 2022.

- mplug-owl2: Revolutionizing multi-modal large language model with modality collaboration. arXiv preprint:2311.04257, 2023.

- A survey on multimodal large language models. arXiv preprint:2306.13549, 2023a.

- Woodpecker: Hallucination correction for multimodal large language models. arXiv preprint:2310.16045, 2023b.

- Reformulating vision-language foundation models and datasets towards universal multimodal assistants. arXiv preprint:2310.00653, 2023.

- What matters in training a gpt4-style language model with multimodal inputs? arXiv preprint:2307.02469, 2023.

- Transfer visual prompt generator across llms. arXiv preprint:2305.01278, 2023.

- Mmicl: Empowering vision-language model with multi-modal in-context learning. arXiv preprint:2309.07915, 2023a.

- A survey of large language models. arXiv preprint:2303.18223, 2023b.

- On evaluating adversarial robustness of large vision-language models. arXiv preprint:2305.16934, 2023c.

- Chatbridge: Bridging modalities with large language model as a language catalyst. arXiv preprint:2305.16103, 2023d.

- Learning deep features for scene recognition using places database. NeurIPS, 2014.

- Minigpt-4: Enhancing vision-language understanding with advanced large language models. arXiv preprint:2304.10592, 2023.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.