- The paper demonstrates that networks trained with the FF algorithm form sparse, specialized neuronal ensembles analogous to those in biological cortex.

- It employs a two-pass forward approach that contrasts positive and negative data to optimize a goodness function without traditional backpropagation.

- The study shows that FF-trained models exhibit zero-shot learning capabilities by creating coherent ensembles for unseen categories.

Emergent Representations in Networks Trained with the Forward-Forward Algorithm

The paper "Emergent Representations in Networks Trained with the Forward-Forward Algorithm" examines the internal representations of neural networks trained with the Forward-Forward (FF) algorithm and how they resemble features of biological cortical networks. Its main focus is internal network organization, in particular the emergence of sparse neuronal ensembles akin to those observed in biological sensory processing areas.

Introduction to the Forward-Forward Algorithm

The Forward-Forward algorithm is motivated by concerns about the biological plausibility of traditional Backpropagation (Backprop), whose backward error-propagation pass has no clear counterpart in biological neural activity. FF replaces the forward and backward passes of Backprop with two forward passes, one on positive data and one on negative data. During training, it aims to maximize a goodness function on positive data while minimizing it on negative data, similar to contrastive learning.
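The objective described above can be sketched for a single layer. This is a minimal illustration, not the authors' code: it assumes goodness is the sum of squared activations and that the layer is trained with a logistic loss around a threshold `theta`, as in the original FF proposal. The names `goodness`, `ff_layer_loss`, and `theta` are illustrative.

```python
import numpy as np

def goodness(activations):
    """Sum of squared activations per sample (assumed goodness measure)."""
    return np.sum(activations ** 2, axis=1)

def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)

def ff_layer_loss(h_pos, h_neg, theta):
    """Low when positive goodness is above theta and negative goodness is below it."""
    g_pos = goodness(h_pos)  # activations from the pass on positive data
    g_neg = goodness(h_neg)  # activations from the pass on negative data
    return np.mean(softplus(theta - g_pos)) + np.mean(softplus(g_neg - theta))

# Hypothetical activations: strong responses to positive data, none to negative data.
h_pos = np.full((4, 10), 2.0)
h_neg = np.zeros((4, 10))
loss = ff_layer_loss(h_pos, h_neg, theta=10.0)
```

Because the loss depends only on the layer's own activations, each layer can be updated locally, without propagating errors backward through the network.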

Activation Patterns

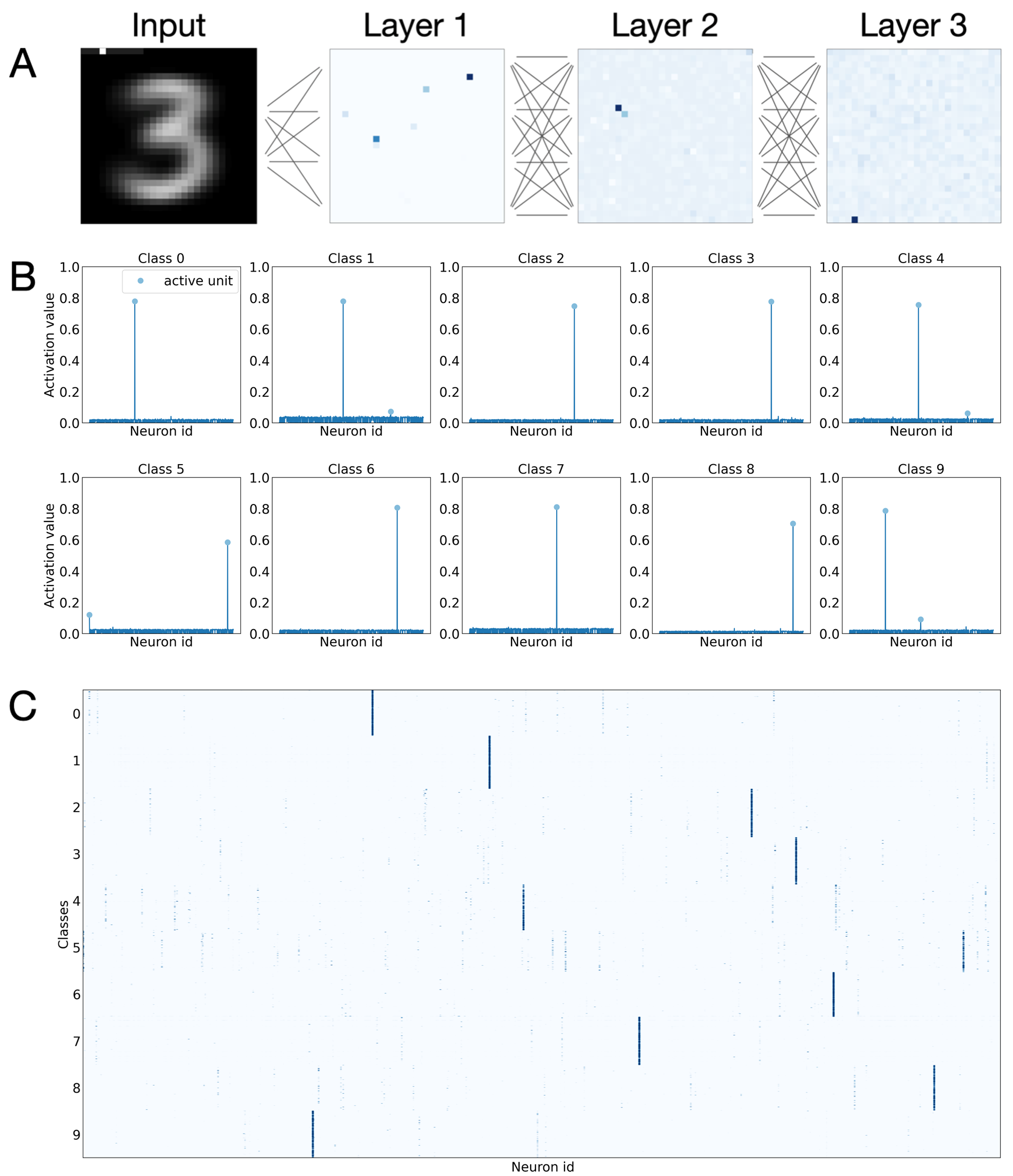

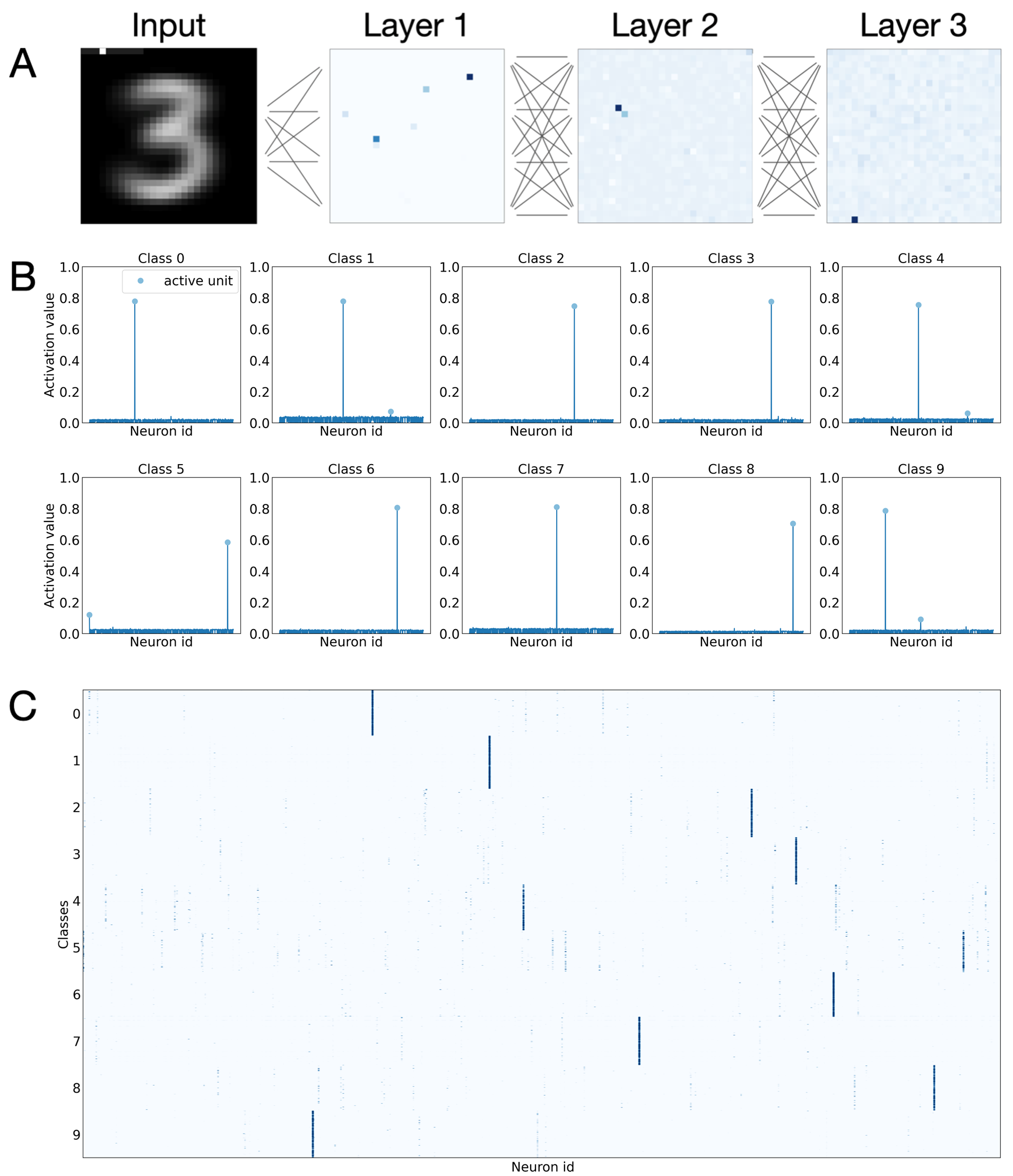

Figure 1: Activation patterns in a Multi-Layer Perceptron trained with the Forward-Forward algorithm on the MNIST dataset.

Notably, the FF algorithm encourages sparsity in internal representations, resulting in highly specialized neurons that form ensembles. These neurons activate selectively in response to positive data, exhibiting minimal responses to negative or unstructured stimuli. The sparsity observed in FF is reminiscent of observations in cortical regions, suggesting ensembles as functional building blocks for perception.

Implementation of Forward-Forward in Synthetic and Biological Ensembles

The paper explores the nature of ensembles formed under the FF algorithm, drawing parallels between artificially generated and biologically observed neuronal patterns.

Methodology and Models

The authors investigate ensemble formation by comparing three distinct models:

- FF Model: A network whose architecture follows the original FF proposal, trained with the FF algorithm.

- BP/FF Model: The same architecture as the FF model, trained end-to-end with Backprop to optimize the same goodness function.

- BP Model: A conventional neural network classifier trained using Backprop on categorical cross-entropy loss, serving as a baseline.

Neurophysiological Findings

The FF model shows activation patterns analogous to cortical ensembles found in early stages of sensory processing. The authors quantify activation sparsity with the Mean Absolute Deviation (MAD) and identify ensembles through a category-specific representation matrix; both analyses point to ensemble formation.
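The two measurements mentioned above can be sketched as follows. The summary does not give the paper's exact formulas, so this is an assumption-laden illustration: MAD is computed per sample around that sample's mean activation, the representation matrix holds the mean activation of each unit per class, and ensemble membership uses a hypothetical fixed `threshold`. All function names are illustrative.

```python
import numpy as np

def mean_absolute_deviation(activations):
    """MAD of each sample's activation vector around its own mean."""
    centered = activations - activations.mean(axis=1, keepdims=True)
    return np.abs(centered).mean(axis=1)

def representation_matrix(activations, labels, n_classes):
    """Row c holds the mean activation of every unit over samples of class c."""
    return np.stack(
        [activations[labels == c].mean(axis=0) for c in range(n_classes)]
    )

def find_ensembles(rep_matrix, threshold):
    """Per class, the units whose mean activation exceeds the (assumed) threshold."""
    return [set(np.flatnonzero(row > threshold).tolist()) for row in rep_matrix]

# Toy activations: 4 samples, 4 hidden units, 2 classes (made-up values).
acts = np.array([[1.0, 0.0, 0.0, 0.0],
                 [1.0, 0.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0, 1.0],
                 [0.0, 0.0, 1.0, 1.0]])
labels = np.array([0, 0, 1, 1])

mad = mean_absolute_deviation(acts)
rep = representation_matrix(acts, labels, n_classes=2)
ens = find_ensembles(rep, threshold=0.5)
```

In this toy case each class is carried by a small, disjoint set of units, which is the kind of pattern the summary describes as an ensemble.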

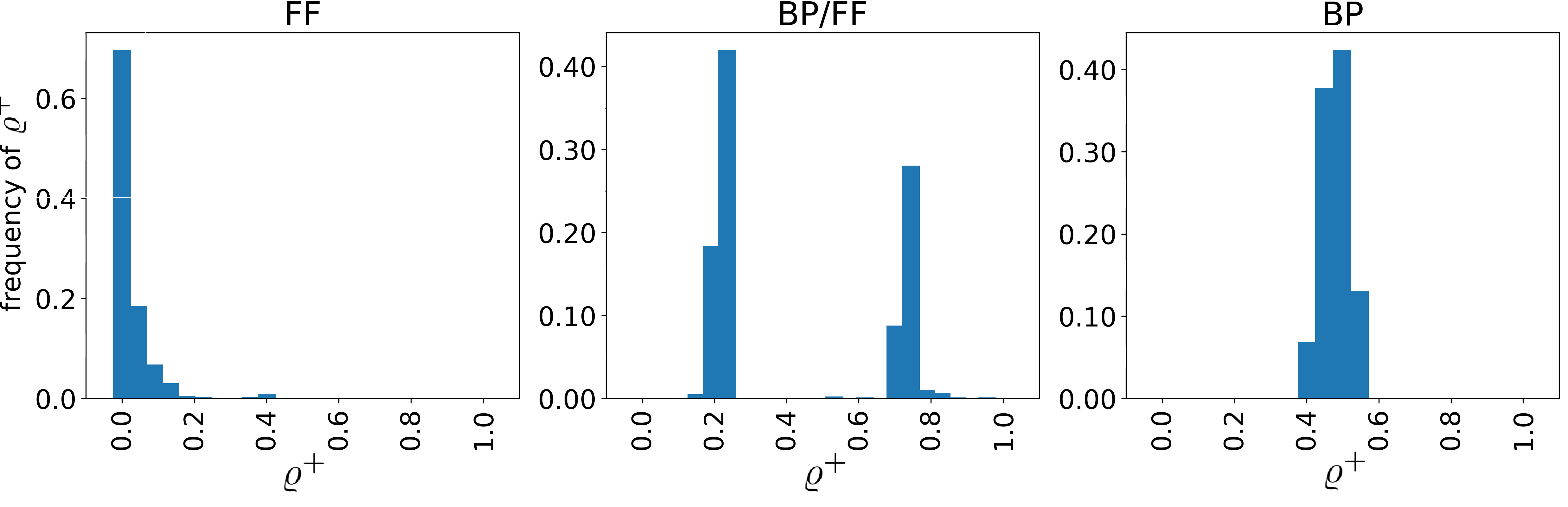

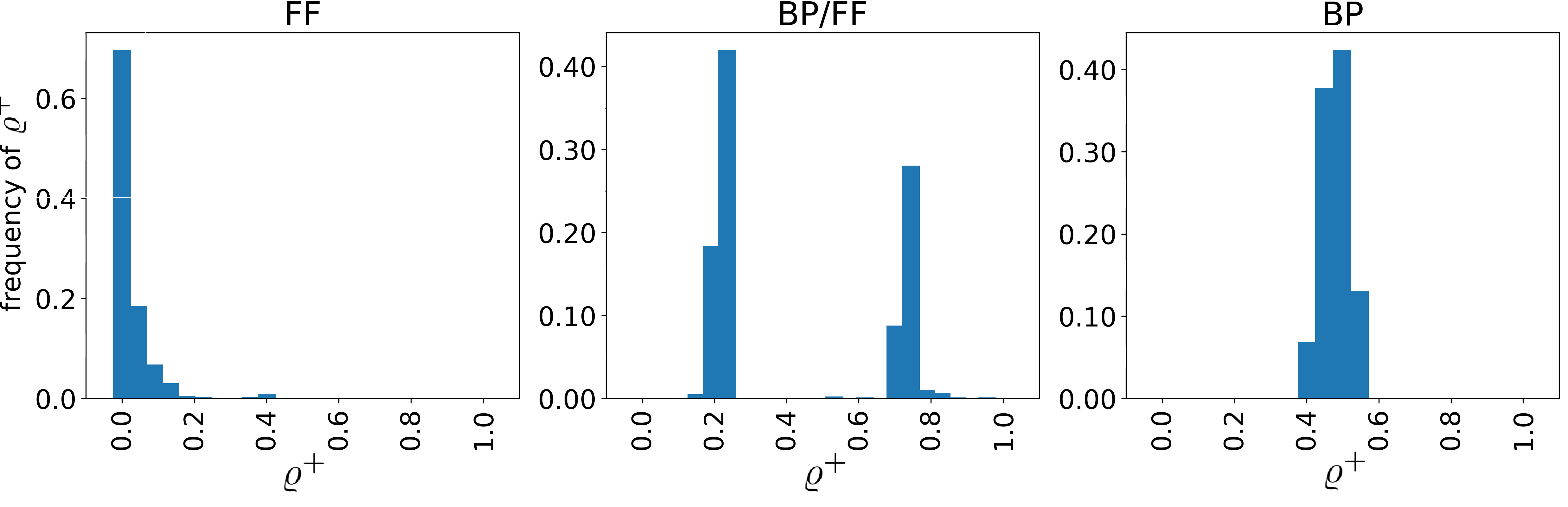

Figure 2: Distribution of excitatory and inhibitory connections for each neuron in Layer 2.

FF training yields not only sparsity but also a distinct balance between excitatory and inhibitory connections, one that differs from the balance observed in Backprop-trained models.
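One simple way to obtain per-neuron counts like those in Figure 2 is to look at the sign of each incoming weight. The sketch below assumes that positive weights are treated as excitatory and negative weights as inhibitory, and that the weight matrix has shape `(n_inputs, n_neurons)`. Neither convention is confirmed by the summary, and the function name is illustrative.

```python
import numpy as np

def excitatory_inhibitory_counts(weights):
    """Count positive and negative incoming weights for each neuron (column)."""
    excitatory = np.sum(weights > 0, axis=0)
    inhibitory = np.sum(weights < 0, axis=0)
    return excitatory, inhibitory

# Toy weight matrix: 3 inputs feeding 2 neurons (made-up values).
W = np.array([[0.5, -1.0],
              [0.2, -0.3],
              [-0.1, 0.0]])
exc, inh = excitatory_inhibitory_counts(W)
```

Comparing the distributions of `exc` and `inh` across neurons for differently trained models would show whether the excitation/inhibition balance differs between them.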

Emergent Ensemble Properties

Ensembles arising from FF training consist of few units that activate with high specificity, which aligns closely with neuronal ensembles observed in biological systems.

Semantic Overlap and Zero-shot Capabilities

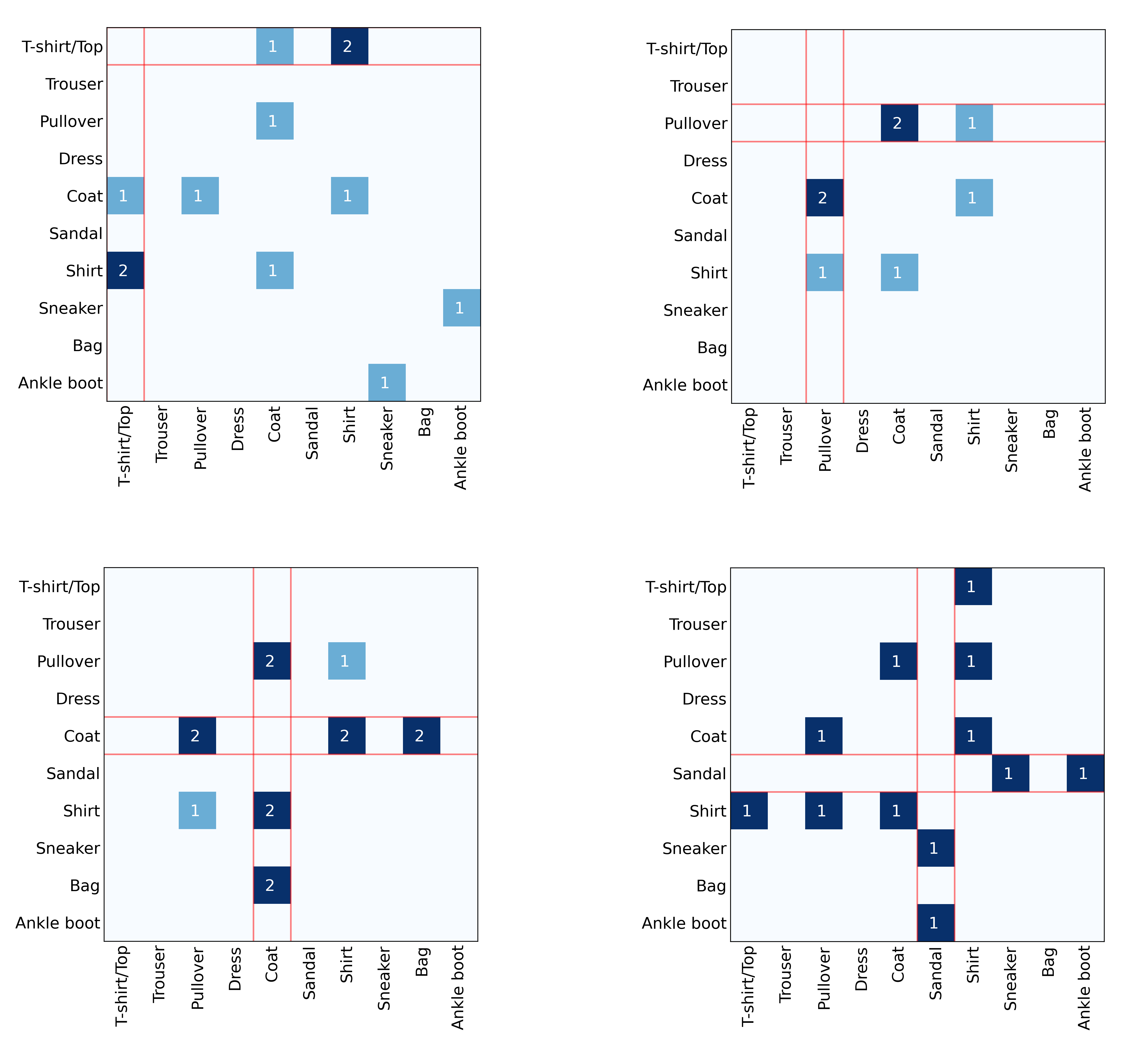

Ensembles elicited by semantically similar classes share neurons, an emergent property that is also observed in biological networks.

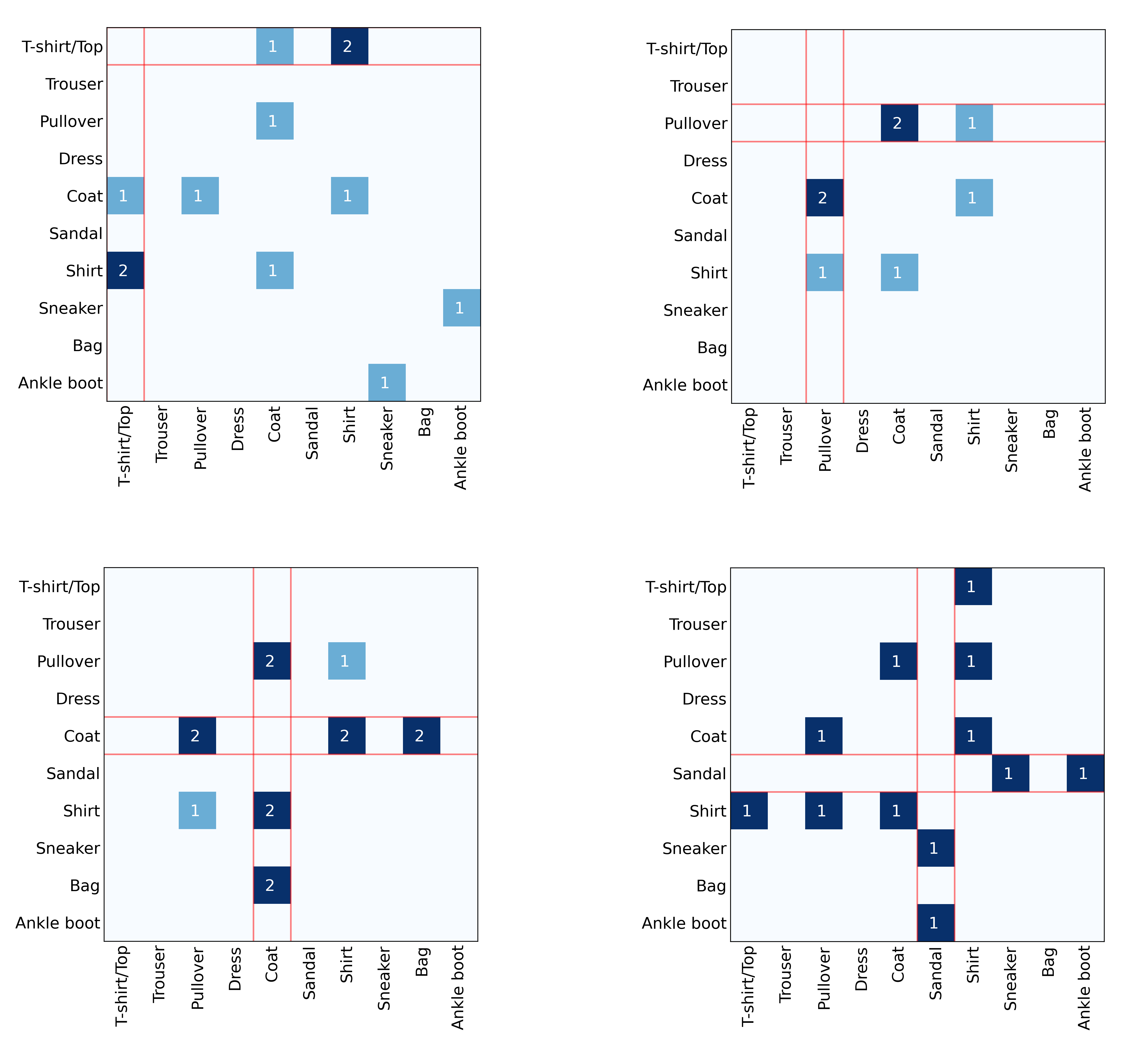

Figure 3: Shared units between ensembles of seen and unseen categories.

Further, the FF model formed coherent ensembles for categories unseen during training when presented with test data, which suggests a potential for zero-shot learning.
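Overlap between ensembles, whether for seen or unseen categories, can be quantified by counting shared units. The sketch below assumes each ensemble is already available as a set of unit indices. The category names and indices are made up for illustration and are not results from the paper.

```python
def shared_unit_counts(ensembles):
    """Number of units shared by every pair of category ensembles."""
    names = sorted(ensembles)
    return {
        (a, b): len(ensembles[a] & ensembles[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }

# Hypothetical ensembles, each given as a set of hidden-unit indices.
ensembles = {
    "cat": {1, 2, 3},
    "dog": {2, 3, 4},
    "truck": {7, 8},
}
overlap = shared_unit_counts(ensembles)
```

Under this measure, semantically related categories would show larger pairwise counts than unrelated ones.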

Conclusion and Future Directions

This research supports the view that FF may model biological cortical learning more closely than conventional Backprop. The emergent sparse, specialized neuronal ensembles and the distinct excitation/inhibition balance suggest its potential for modeling brain-like learning processes more accurately.

Future work could explore how ensemble dynamics evolve and persist in real-time learning contexts, which may inform how information is encoded and processed in artificial and biological systems alike.