BiomedGPT: A Generalist Vision-Language Foundation Model for Diverse Biomedical Tasks

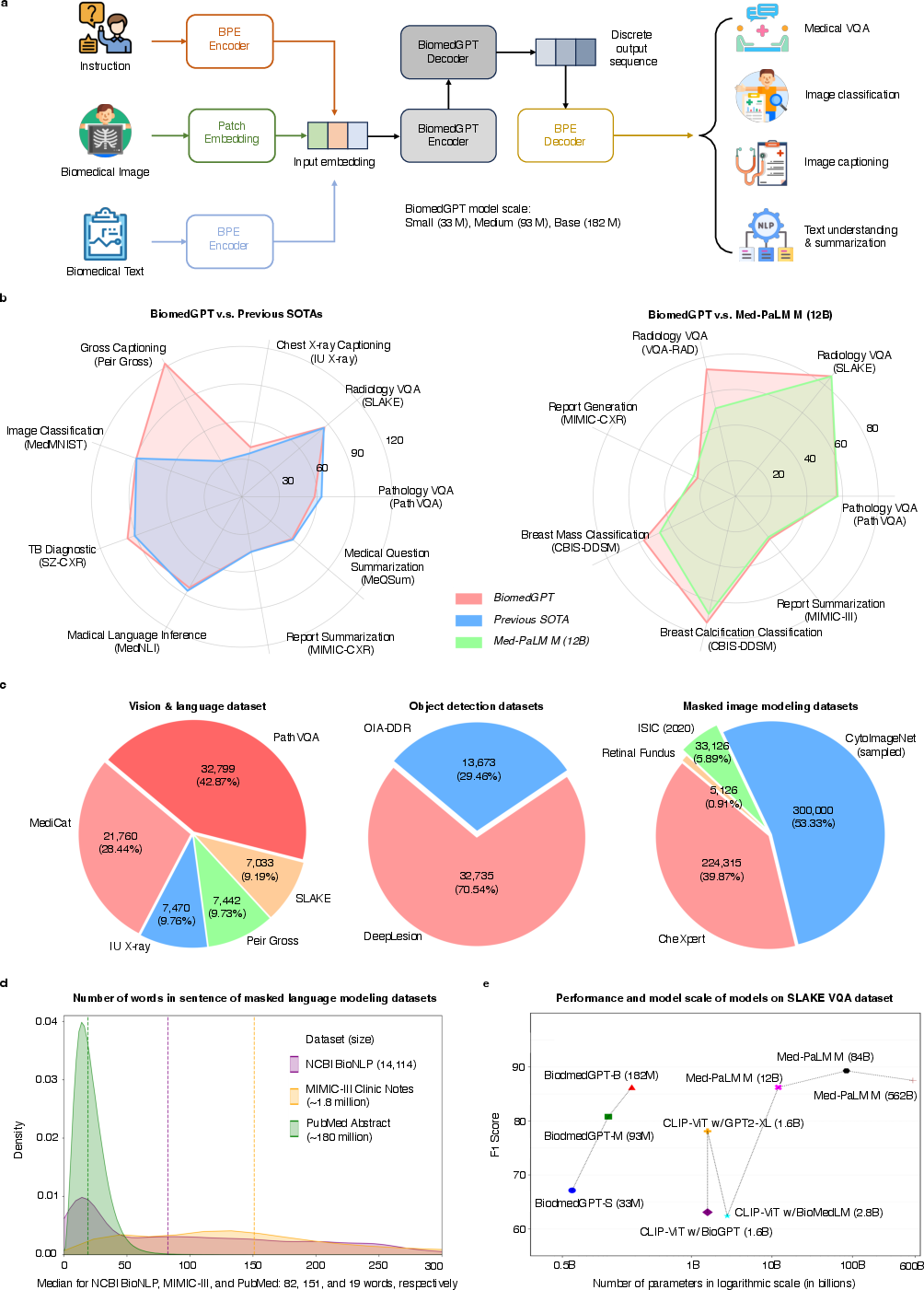

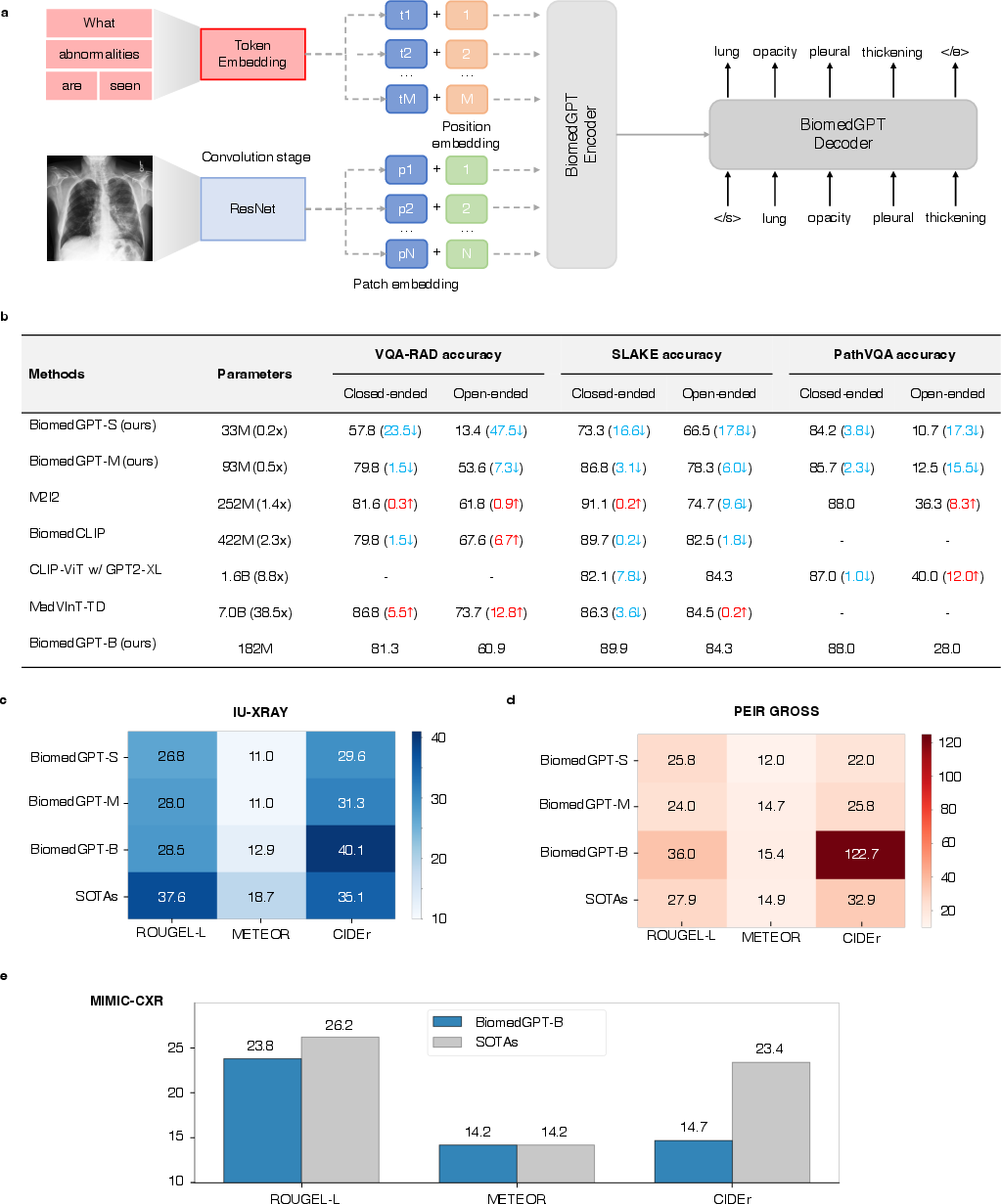

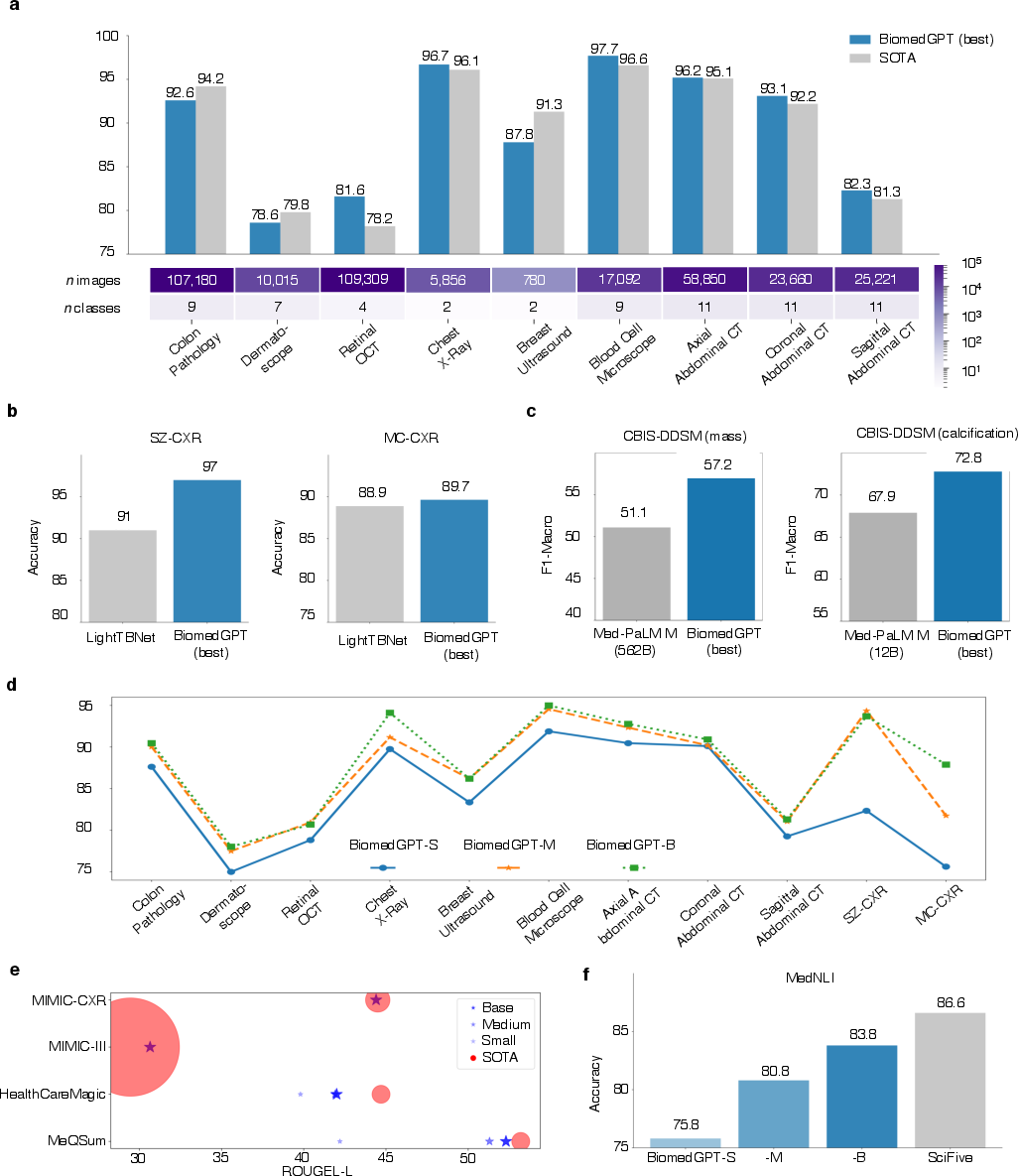

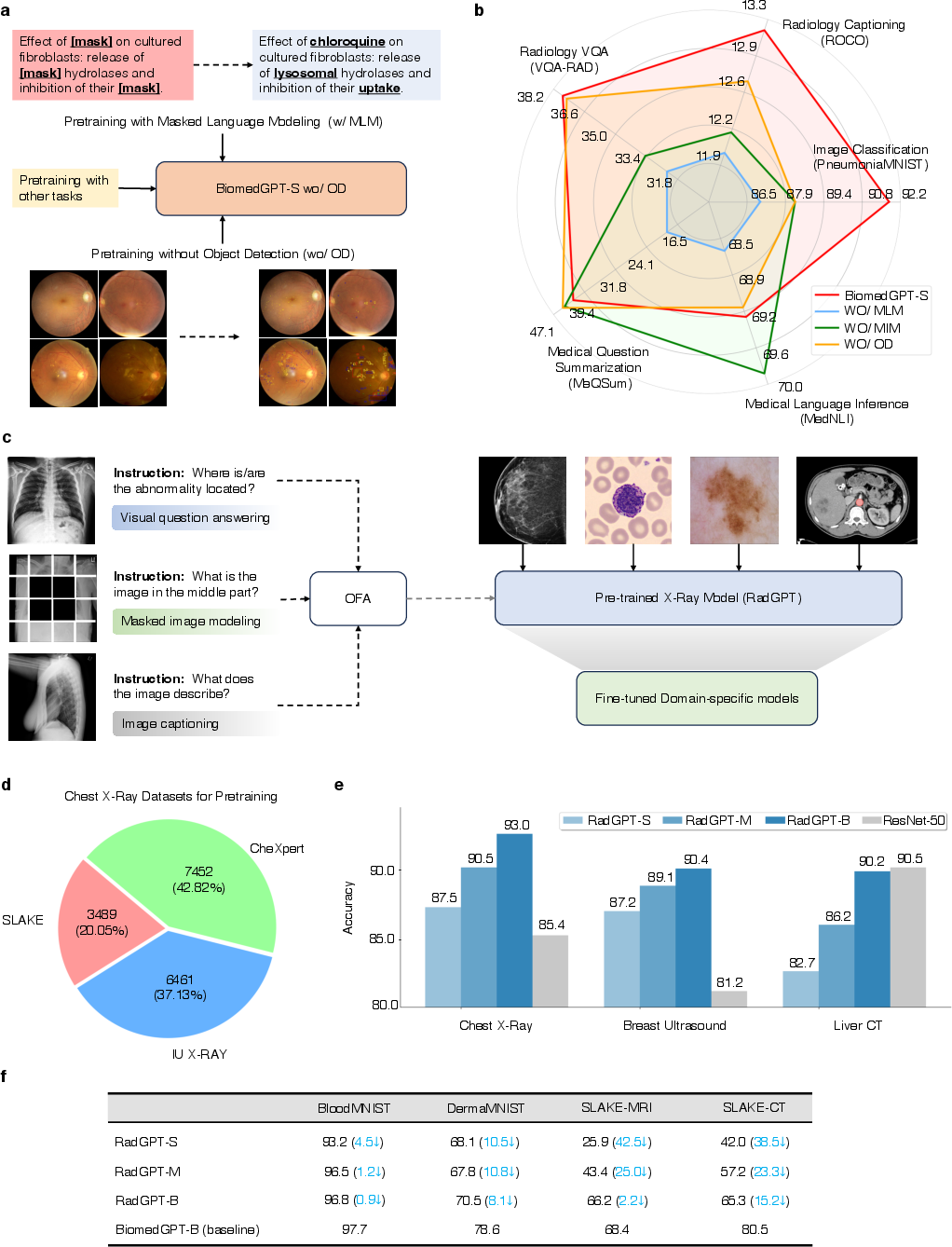

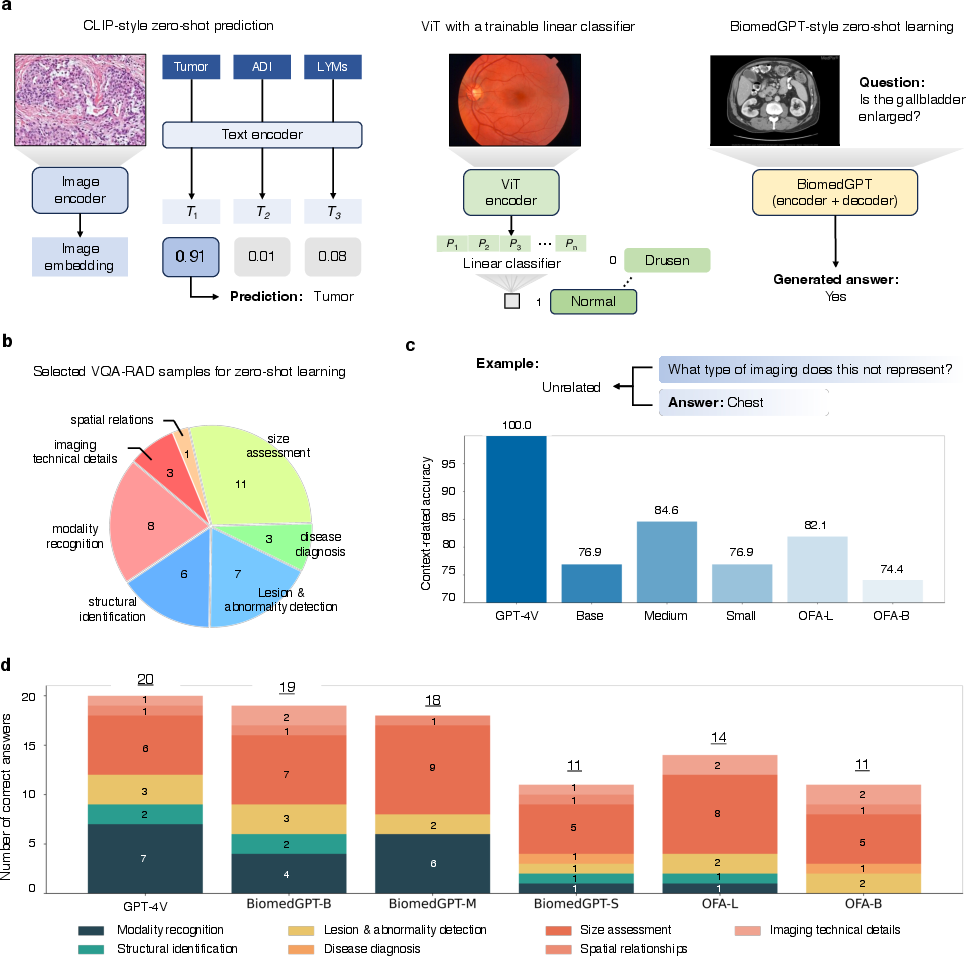

Abstract: Traditional biomedical AI models, designed for specific tasks or modalities, often exhibit limited flexibility in real-world deployment and struggle to utilize holistic information. Generalist AI holds the potential to address these limitations due to its versatility in interpreting different data types and generating tailored outputs for diverse needs. However, existing biomedical generalist AI solutions are typically heavyweight and closed source to researchers, practitioners, and patients. Here, we propose BiomedGPT, the first open-source and lightweight vision-language foundation model, designed as a generalist capable of performing various biomedical tasks. BiomedGPT achieved state-of-the-art results in 16 out of 25 experiments while maintaining a computing-friendly model scale. We also conducted human evaluations to assess the capabilities of BiomedGPT in radiology visual question answering, report generation, and summarization. BiomedGPT exhibits robust prediction ability with a low error rate of 3.8% in question answering, satisfactory performance with an error rate of 8.3% in writing complex radiology reports, and competitive summarization ability with a nearly equivalent preference score to human experts. Our method demonstrates that effective training with diverse data can lead to more practical biomedical AI for improving diagnosis and workflow efficiency.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Practical Applications

Immediate Applications

Below are applications that can be prototyped or deployed now given BiomedGPT’s open-source release, reported performance, and a successful hospital deployment for radiology VQA.

- Radiology question-answering at the point of care

- Sectors: healthcare, software (PACS/EHR)

- What: Side-panel assistant in PACS that answers targeted questions about chest X-rays (e.g., presence, location, severity, type of findings), supports checklists, and aids trainee education.

- Tools/workflows: PACS plugin with DICOM viewer integration; on-prem inference server; prompt templates for common queries.

- Assumptions/dependencies: On-prem deployment for PHI; clinician-in-the-loop review; institution-specific fine-tuning (e.g., MIMIC-CXR-like data) to address dataset shift; no autonomous diagnosis claims pending regulatory clearance.

- Radiology report drafting assistance (impression-from-findings, captioning)

- Sectors: healthcare, software

- What: Draft impressions from findings in radiology reports; generate preliminary image captions to accelerate report composition and teaching file creation.

- Tools/workflows: Smart-phrase generator in dictation software; report QA assistant to suggest clarifications; teaching-file builder.

- Assumptions/dependencies: Human review; local fine-tuning to match departmental style guides; guardrails to mitigate hallucinations.

- Clinical documentation summarization

- Sectors: healthcare, software

- What: Summarize patient-doctor dialogues and EHR notes to reduce administrative burden (e.g., MedQSum/MedDialog, MIMIC-III summarization-level performance).

- Tools/workflows: EHR-integrated summarizer for visit notes; after-visit summary generator with clinician sign-off.

- Assumptions/dependencies: PHI handling, audit logging; clinician oversight; configuration to maintain terminological precision and readability levels for patient-facing outputs.

- Breast imaging second-reader support (mass/calcification triage)

- Sectors: healthcare, medical devices

- What: Second-read prioritization or QA flags on mammography studies based on lesion-level classification performance.

- Tools/workflows: Worklist triage; discrepancy flagging; structured lesion lists for double-reading workflows.

- Assumptions/dependencies: Retrospective validation on local data; human adjudication; quality management system for software as a medical device; regulatory pathway planning.

- TB and pneumonia screening triage on chest X-rays

- Sectors: healthcare, public health

- What: Triage flags for suspected tuberculosis or pneumonia to prioritize reads, especially in resource-limited settings.

- Tools/workflows: Offline/on-device inference in screening programs; tele-radiology triage queues.

- Assumptions/dependencies: Local prevalence and data distribution validation; sensitivity/specificity tuning to clinical context; reporting of uncertainty.

- Medical education tutor for image-based learning

- Sectors: education

- What: Interactive VQA over radiology and pathology images; explanations of image findings; self-assessment quizzes.

- Tools/workflows: LMS plugin; case-based modules with image Q&A; curated datasets with feedback.

- Assumptions/dependencies: Curated, verified content; guardrails to prevent overconfident errors; faculty oversight.

- Dataset labeling acceleration and active learning

- Sectors: academia, healthcare, medtech

- What: Weak labels for classification; candidate captions/answers for human verification; rapid bootstrapping of new datasets.

- Tools/workflows: Annotation platforms with model-in-the-loop suggestions; uncertainty sampling; inter-rater adjudication workflows.

- Assumptions/dependencies: Clear annotation guidelines; tracking provenance of human corrections; bias checks.

- Research baseline for multimodal biomedical AI

- Sectors: academia, open-source ecosystem

- What: Reproducible baseline for VQA, captioning, classification, NLI, summarization; ablation-informed recipes (keep MLM; diversify modalities).

- Tools/workflows: Model zoo, training scripts, data preprocessing pipelines; shared benchmarks; collaborative reproducibility studies.

- Assumptions/dependencies: Respect dataset licenses (e.g., MIMIC); compute availability (single A100-class GPU suffices for fine-tuning).

- Patient-friendly explanation layer for imaging results (low-risk contexts)

- Sectors: healthcare, patient engagement

- What: Plain-language restatements of clinician-approved findings to support comprehension and shared decision-making.

- Tools/workflows: After-visit patient portal modules; glossary linking for terms; clinician pre-approval workflows.

- Assumptions/dependencies: Restricted to education; clinical approval before release; readability and accessibility testing.

- Quality control and report-image consistency checking

- Sectors: healthcare

- What: Flag inconsistencies between dictated findings and visual evidence (e.g., missing laterality, mismatched view).

- Tools/workflows: QA dashboard; nightly batch checks; feedback to radiologists for correction.

- Assumptions/dependencies: Risk-managed deployment as QA tool; explainability artifacts to justify flags; local tuning to reduce noise.

- Multimodal data governance and evaluation sandboxes

- Sectors: policy, hospital IT, academia

- What: On-prem testbeds to evaluate open-source, auditable models for safety, fairness, and performance before procurement.

- Tools/workflows: Standardized VQA/captioning test sets; human evaluation protocols; reporting templates (model cards).

- Assumptions/dependencies: Institutional governance; IRB where applicable; standardized metrics and acceptance thresholds.

- Cross-domain feasibility studies (transfer to new modalities)

- Sectors: academia, medtech R&D

- What: Rapid feasibility testing on ultrasound/MRI/CT using fine-tuning and ablation insights to budget data needs.

- Tools/workflows: Small-scale fine-tunes; performance monitoring by anatomy/modality; scaling plans if degradation observed.

- Assumptions/dependencies: Access to representative local data; awareness that transfer degrades without modality diversity—plan for incremental pretraining.

- Vendor-neutral integration kits

- Sectors: software, healthcare IT

- What: Reference adapters for DICOM, HL7/FHIR and EHR APIs to embed BiomedGPT into existing systems.

- Tools/workflows: Containerized services; REST/gRPC endpoints; RBAC and audit logs.

- Assumptions/dependencies: Security hardening; PHI compliance; change management with IT.

Long-Term Applications

These require additional research, scaling, validation, or regulatory approvals before routine clinical or commercial use.

- End-to-end radiology report generation from images

- Sectors: healthcare, medical devices

- What: Fully auto-generated structured reports with findings, impressions, and coded terminologies.

- Dependencies: Prospective, multi-site trials; calibration and uncertainty estimates; regulatory clearance; large-scale multi-modality pretraining.

- Generalist, patient-centric multimodal assistants

- Sectors: healthcare, patient services

- What: Assistants that integrate imaging, notes, labs, and patient goals to support shared decision-making.

- Dependencies: Multimodal EHR integration; reasoning over preferences; safety layers; fairness audits across demographics.

- Cross-modality clinical reasoning and tumor board copilot

- Sectors: healthcare

- What: Summarize cross-sectional imaging, pathology, and genomics; propose differential diagnoses and follow-up plans.

- Dependencies: Multimodal alignment (CT/MRI/US/path/genomics); causal reasoning; human oversight; medicolegal frameworks.

- Emergency and low-resource diagnostic triage across devices

- Sectors: public health, global health

- What: On-device triage on portable X-ray/ultrasound with robust offline models for rapid decisions.

- Dependencies: Edge optimization; robustness to variable equipment quality; localization and language support; field validation.

- Continual/federated learning across hospitals

- Sectors: healthcare, enterprise IT

- What: Privacy-preserving collaborative training to reduce site bias and improve generalization.

- Dependencies: Federated learning infrastructure; legal agreements; drift monitoring; secure aggregation.

- Clinical trial and eligibility matching using multimodal data

- Sectors: pharma, healthcare

- What: Match patients to trials based on imaging findings plus EHR text.

- Dependencies: Ontology mapping; harmonized data standards; explainability for eligibility rationales.

- Automated protocol selection and imaging quality assistance

- Sectors: medical devices, radiology operations

- What: Recommend protocols, detect positioning issues, and prompt repeat acquisitions before patient leaves scanner.

- Dependencies: Real-time integration with modality consoles; prospective validation; UI/UX for technologists.

- Safety-critical VQA for interventional guidance

- Sectors: healthcare, surgical robotics

- What: Answer targeted intra-procedural questions about device position or complications.

- Dependencies: Real-time constraints; rigorous reliability; certification; integration with fluoroscopy/US systems.

- Patient-facing conversational imaging education at scale

- Sectors: education, digital health

- What: Longitudinal messaging to explain imaging trajectories, expected findings, and recovery.

- Dependencies: Long-form, multi-visit summarization; personalization; content safety and clinical review.

- Insurance utilization review and coding support

- Sectors: insurance, health IT

- What: Extract imaging findings and suggested codes to support claims and medical necessity checks.

- Dependencies: Coding accuracy guarantees; auditability; stakeholder acceptance; regulatory compliance.

- National benchmarks and regulatory frameworks for multimodal foundation models

- Sectors: policy, standards bodies

- What: Standard test suites (VQA, captioning, classification), reporting requirements, post-market surveillance norms.

- Dependencies: Public-private partnerships; de-identified shared datasets; consensus on risk categories and evidence thresholds.

- Robust multilingual and cross-cultural adaptation

- Sectors: global health, education

- What: Support for non-English reports and patient materials; culturally appropriate explanations.

- Dependencies: Multilingual corpora; locale-specific clinical practice patterns; evaluation in diverse populations.

- Explainability, calibration, and liability-aware AI

- Sectors: healthcare, legal/policy

- What: Uncertainty-aware outputs, evidence tracing (e.g., heatmaps, rationale text), and liability-sharing models.

- Dependencies: Methods research; user interface standards; legal frameworks; clinician training.

Cross-cutting assumptions and dependencies

- Regulatory: Many clinical decision support functions require clearance (e.g., FDA/CE). Start with assistive, non-autonomous workflows.

- Data governance: HIPAA/GDPR-compliant, on-prem or VPC deployments; strict PHI handling; audit logs and RBAC.

- Generalization: Expect dataset shift; plan for local fine-tuning and monitoring; performance can degrade on unseen modalities without diverse pretraining.

- Safety: Human-in-the-loop, content moderation, uncertainty estimation, and fail-safe defaults are essential.

- Integration: APIs for DICOM, HL7/FHIR, and EHR; IT change control and security hardening.

- Bias and fairness: Evaluate across demographics and devices; document limitations; continuous post-deployment surveillance.

- Licensing and IP: Verify model and dataset licenses and institutional policies before commercial use.

- Compute: While lightweight, fine-tuning and on-prem inference still require GPU resources and MLOps readiness.

Collections

Sign up for free to add this paper to one or more collections.