- The paper introduces CEMAL, a framework using LLM-generated tailored exercises to address specific weaknesses in math word problem solvers.

- The methodology employs an iterative training process with problem and solution analogy strategies to enhance robustness and generalization.

- Empirical results demonstrate state-of-the-art performance on datasets like MAWPS, ASDiv-a, and SVAMP with reduced computational overhead.

Customized Exercise Generation for Math Word Problem Solvers

Introduction

The paper "Let GPT be a Math Tutor: Teaching Math Word Problem Solvers with Customized Exercise Generation" explores using large language models (LLMs) as tutors that improve how smaller Math Word Problem (MWP) solvers learn. The authors propose a framework called Customized Exercise for MAth Learning (CEMAL), which uses an LLM to generate exercises tailored to the specific weaknesses of the student model. The approach aims to distill the mathematical reasoning capabilities of LLMs into smaller models, achieving competitive performance with significantly less computational overhead.

Methodology

Iterative Training Framework

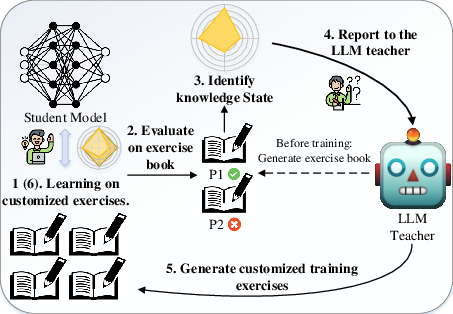

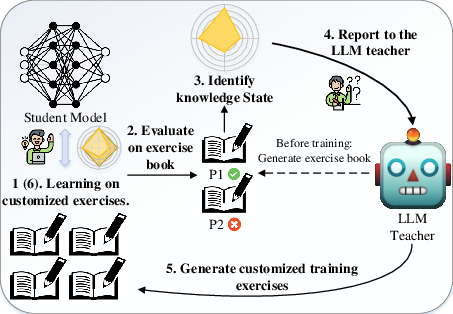

CEMAL employs an iterative framework wherein a student MWP solver is trained using exercises generated by an LLM, which acts as a tutor. The process involves an initial training phase using a set of augmented MWPs, followed by progressive refinement through exercises targeting specific weaknesses identified during model evaluation.

Figure 1: The overall iterative framework of CEMAL. After one round of training, the student, a small MWP solver, is evaluated on exercises provided by an LLM teacher. The LLM then generates customized exercises that target the student's knowledge state and weaknesses, so that each round of improvement is tailored to the student.
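The loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the `Problem` type, the `train`, `solve`, and `tutor` callables, and the exact-match check on equations are all hypothetical stand-ins for the student's training routine, its inference step, and the LLM tutor.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Problem:
    text: str      # natural-language problem statement
    equation: str  # reference solution equation


def tutoring_loop(
    train_set: List[Problem],
    exercise_book: List[Problem],
    train: Callable[[List[Problem]], None],    # hypothetical: updates the student
    solve: Callable[[Problem], str],           # hypothetical: student's predicted equation
    tutor: Callable[[Problem], List[Problem]], # hypothetical: LLM-generated exercises
    rounds: int = 3,
) -> List[Problem]:
    """Train, evaluate on the exercise book, then add exercises for the failures."""
    pool = list(train_set)
    train(pool)  # initial training phase
    for _ in range(rounds):
        # Assumption: a problem counts as failed when the predicted equation
        # differs from the reference string (a deliberately crude check).
        failed = [p for p in exercise_book if solve(p) != p.equation]
        if not failed:
            break
        for problem in failed:
            pool.extend(tutor(problem))  # exercises targeting this weakness
        train(pool)  # retrain on the enlarged pool
    return pool
```

The function returns the final training pool, so a caller can inspect how many exercises each round contributed. In practice the correctness check would more plausibly compare evaluated answers than raw equation strings.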

Exercise Generation Technique

The core component of CEMAL is its ability to generate exercises that are specifically tailored to address the shortcomings of the student model. This is accomplished by employing both problem analogy and solution analogy strategies. The LLM generates exercises with modified problem statements that maintain the same underlying mathematical structure, ensuring that the solver gains robustness through exposure to varied linguistic contexts.
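One way to make "the same underlying mathematical structure" concrete is to compare equation templates, where every number is replaced by a positional placeholder. The sketch below is an illustrative check under that assumption, not the paper's procedure; `equation_template` and `is_problem_analogy` are hypothetical names.

```python
import re

_NUMBER = re.compile(r"\d+(?:\.\d+)?")


def equation_template(equation: str) -> str:
    """Replace each numeric literal with a positional placeholder (n0, n1, ...)."""
    compact = equation.replace(" ", "")
    index = 0

    def placeholder(match: "re.Match[str]") -> str:
        nonlocal index
        name = f"n{index}"
        index += 1
        return name

    return _NUMBER.sub(placeholder, compact)


def is_problem_analogy(source_text: str, source_eq: str,
                       new_text: str, new_eq: str) -> bool:
    """True when the wording changed but the equation structure did not."""
    different_wording = new_text.strip() != source_text.strip()
    same_structure = equation_template(new_eq) == equation_template(source_eq)
    return different_wording and same_structure
```

For example, "(12 - 4) / 2" and "(30 - 6) / 3" share the template `(n0-n1)/n2`, so a reworded problem with the second equation would pass this check, while one solved by "30 - 6 / 3" would not.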

A critical part of this approach is the 'exercise book', a diverse validation set derived from the training data. Evaluating the solver on it, rather than on the training problems themselves, gives a more comprehensive picture of the model's strengths and weaknesses, because the solver cannot score well merely by recalling memorized solutions.

Empirical Results

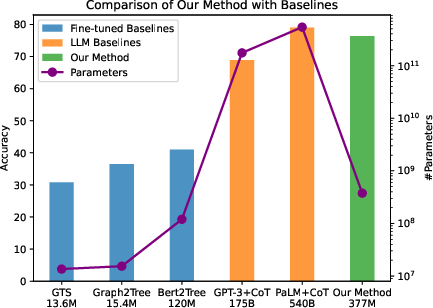

The experimental evaluation demonstrated that CEMAL achieves state-of-the-art results on multiple datasets, including MAWPS, ASDiv-a, and SVAMP. The method consistently outperformed traditional fine-tuning baselines and previous knowledge distillation techniques.

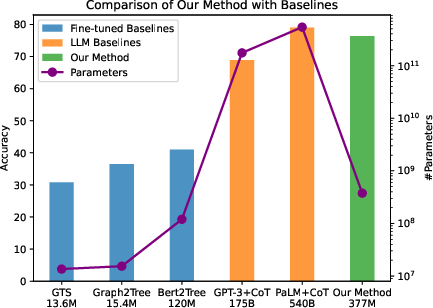

Figure 2: Accuracy versus model size for representative baselines and the CEMAL approach on the SVAMP dataset. The method achieves performance competitive with LLMs while using significantly fewer parameters.

A particularly noteworthy outcome is CEMAL's performance in out-of-distribution (OOD) scenarios, where the student solver's accuracy greatly benefited from the custom-tailored exercises. This suggests that the model's understanding generalizes beyond the specific distribution of its initial training data.

Analysis of Strategies and Components

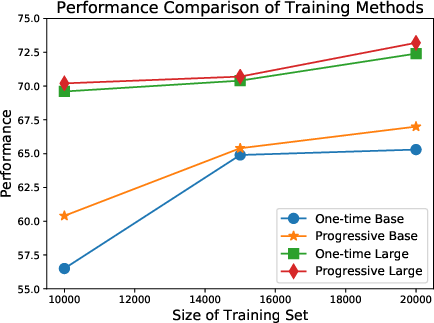

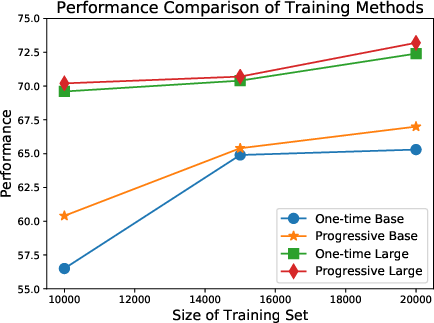

The paper investigates various problem generation strategies employed during training, explicitly comparing targeted versus random exercise generation. Targeted exercises, which directly address the solver's detected weaknesses, significantly enhance model performance, especially in OOD settings. Additionally, the importance of the exercise book in providing a more balanced and comprehensive evaluation is highlighted.
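The difference between the two strategies comes down to which problems are handed to the LLM as sources for new exercises. A minimal sketch, assuming problems are tracked by hypothetical string IDs and that the set of failed problems is already known from evaluation:

```python
import random
from typing import List, Sequence


def pick_sources(
    problem_ids: Sequence[str],
    failed_ids: Sequence[str],
    k: int,
    strategy: str,
    seed: int = 0,
) -> List[str]:
    """Choose up to k source problems for exercise generation.

    "targeted" samples only from problems the student got wrong;
    "random" samples from all problems regardless of the student's result.
    """
    rng = random.Random(seed)
    if strategy == "targeted":
        candidates = list(failed_ids)
    elif strategy == "random":
        candidates = list(problem_ids)
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return rng.sample(candidates, min(k, len(candidates)))
```

With the same generation budget `k`, the targeted strategy spends every generated exercise on a detected weakness, whereas the random strategy spends some of the budget on problems the student already solves.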

Figure 3: Performance comparison between one-time augmentation and progressive augmentation on SVAMP under the out-of-distribution setting.

Conclusion

The CEMAL framework presents a novel method for utilizing LLMs in a tutorial capacity to enhance smaller models' problem-solving capabilities. By systematically generating and integrating targeted exercises, CEMAL enables student solvers to attain high accuracy with reduced computational complexity compared to LLMs alone. The promising results, particularly in generalization and robustness, suggest that this approach can be expanded to other domains where LLMs can guide the learning process of smaller, more efficient AI systems.

Future work could explore autonomous exercise generation without reference problems and stronger quality control for generated problems, both of which could further extend the CEMAL framework.