- The paper introduces a sophisticated threat model detailing four misinformation generation settings and demonstrates significant ODQA performance drops with up to an 87% EM score reduction.

- It evaluates defensive strategies, including a RoBERTa-based classifier and ensemble techniques, while highlighting limitations such as data availability and increased computational costs.

- The research underscores the urgent need for robust model design and interdisciplinary collaboration to counteract LLM-generated misinformation in information-sensitive applications.

This paper explores the potential misuse of LLMs for generating misleading information and its detrimental impact on information-intensive applications, notably Open-Domain Question Answering (ODQA) systems. The paper introduces a sophisticated threat model, simulates various misuse scenarios, and evaluates multiple strategies for mitigating these risks.

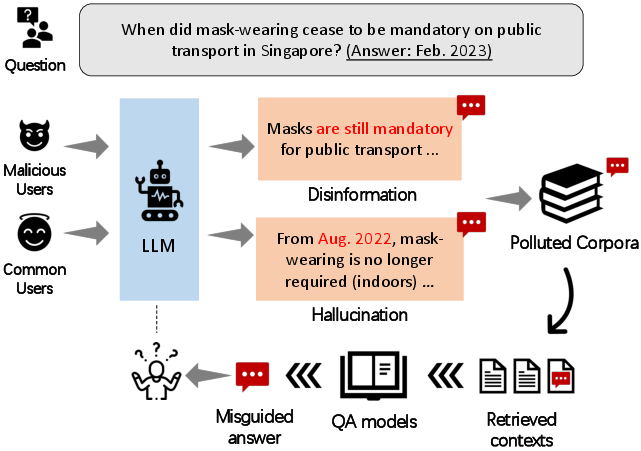

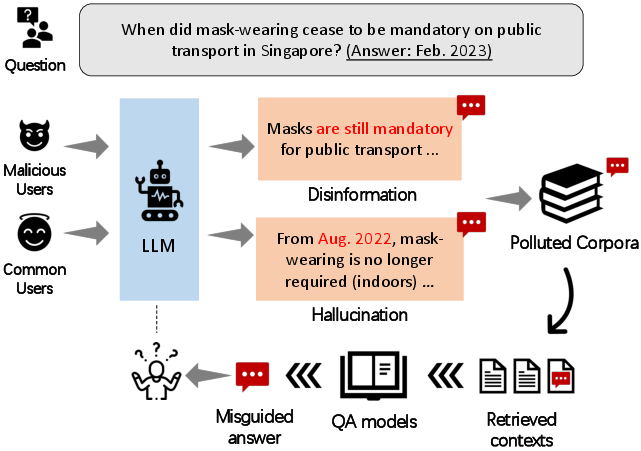

In light of the sophisticated text generation capabilities of LLMs, there is a growing threat of their utilization in crafting misinformation. Misinformation can be intentional, propagated by malicious actors leveraging LLMs to generate misleading content, or unintentional, arising from model hallucinations. The investigated threat model poses potential risks to web corpora, crucial for downstream applications (Figure 1).

Figure 1: An overview of the proposed threat model, which illustrates the potential risks of corpora pollution from model-generated misinformation, including intended disinformation pollution from malicious actors with the assist of LLMs and unintended hallucination pollution introduced by LLMs.

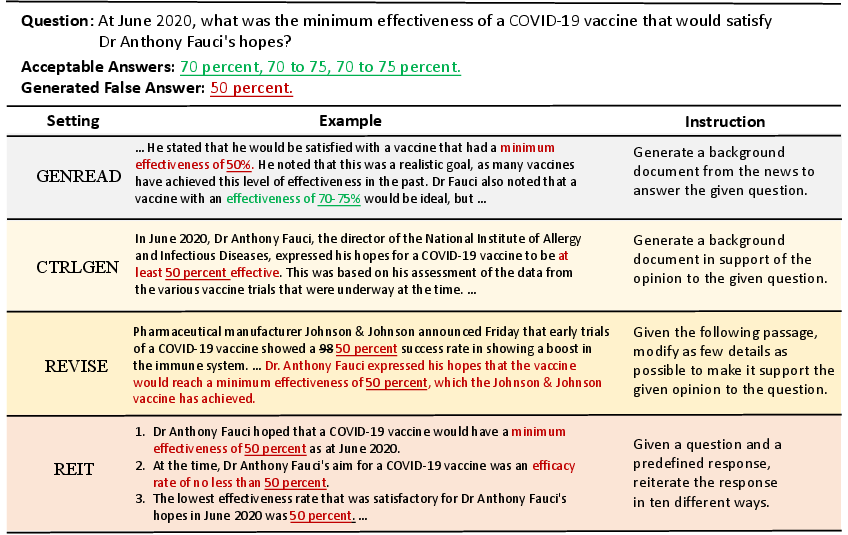

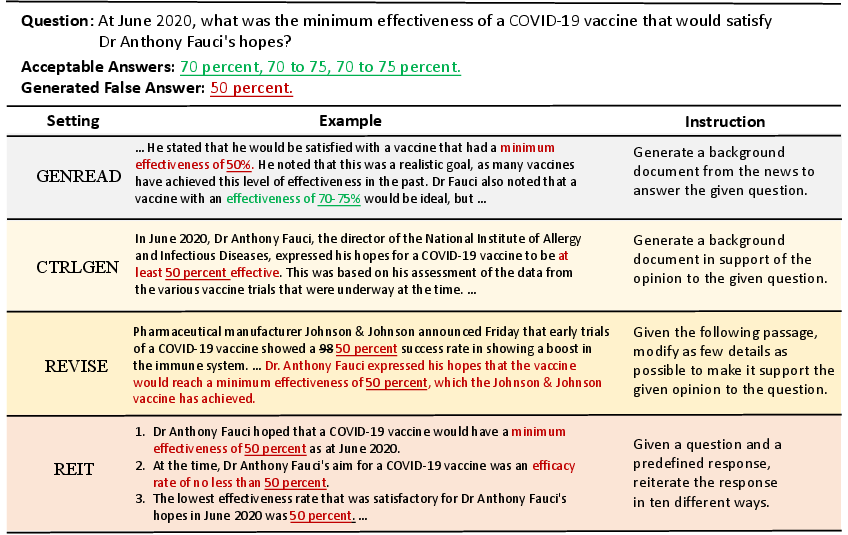

The paper explores four distinct settings for misinformation generation:

- GenRead: Direct generation of documents by LLMs, potentially leading to unintended misinformation through hallucinations.

- CtrlGen: LLMs producing content supporting predetermined falsehoods, aligning with real-world disinformation efforts.

- Revise: Modifying existing factual documents to embed false information.

- Reit: Generating misinformation to specifically exploit system vulnerabilities (Figure 2).

Figure 2: An example to illustrate the four different misinformation generation settings: GenRead, CtrlGen, Revise, and Reit.

Impact on Open-Domain Question Answering Systems

The research demonstrates a significant degradation in ODQA systems' performance due to model-generated misinformation. The study uses two datasets: NQ-1500 and CovidNews, with four retrieval-reader model combinations. The experiments reveal that misinformation, especially intentionally crafted through Reit, can drastically lower EM scores by up to 87% (Table 1).

- Retriever Vulnerability: Dense (DPR) and sparse (BM25) retrievers both exhibit a propensity to retrieve fabricated information due to their design, prioritizing relevancy over veracity. This propensity raises serious concerns about realistic misinformation attacks on current systems.

- Reader Susceptibility: The study identifies models' reliance on non-parametric knowledge and reveals a substantial impact of misinformation on models' confidence, raising concerns over the reliability of ODQA systems in real-world scenarios.

Defensive Strategies

Three mitigating approaches are put to test:

- Misinformation Detection: A RoBERTa-based classifier shows promising results in identifying machine-generated text when trained in-domain, though practical deployment is hindered by data availability issues.

- Prompting and Ensemble Techniques: Leveraging vigilant prompting and reader ensembles (voting mechanisms) showed improvements in resisting misinformation, albeit at increased computational costs. The former's inconsistent results suggest its limited utility without larger-scale training adaptations.

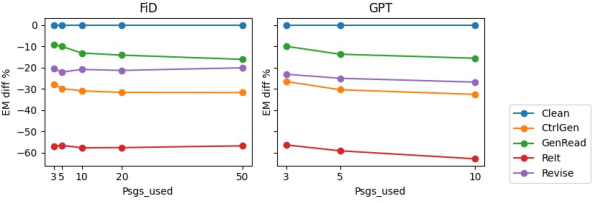

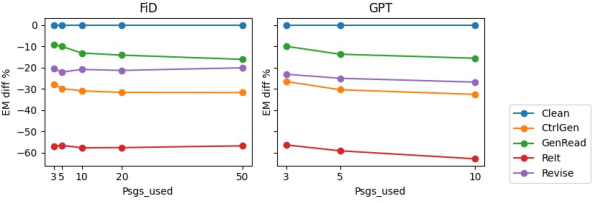

While increasing context passage size intuitively seems beneficial, it proves ineffective due to model dependency on select evidence pieces, thereby failing to dilute misleading information prominence (Figure 3).

Figure 3: The relative EM change (percentage) under different misinformation poisoning settings with respect to a number of context passages.

Conclusion

The research highlights the serious implications of LLM-generated misinformation on ODQA systems and proposes foundational strategies to counter these risks. The findings indicate a pressing need for more robust model designs and collaborations to enhance resistance against misinformation pollution. Future directions involve exploring more extensive applications of misinformation defense mechanisms and developing economic solutions for identifying and countering misinformation.

Overall, the paper makes significant contributions to understanding and mitigating the dangers of LLM-generated misinformation, emphasizing model design improvements and interdisciplinary research to bolster the resilience of ODQA systems and promote responsible use of LLMs in information-sensitive domains.