- The paper proposes ReFIT, which enhances retrieval recall by distilling reranker outputs to update query representations via KL divergence.

- It integrates an inference-time distillation process into a dual-step retrieval framework, yielding consistent improvements on benchmarks like BEIR and Mr.TyDi.

- The approach is lightweight and architecture-agnostic, and it applies across domains, languages, and modalities, including multilingual IR and text-to-video retrieval, with only a small increase in latency.

ReFIT: Relevance Feedback from a Reranker during Inference

The paper "ReFIT: Relevance Feedback from a Reranker during Inference" (2305.11744) introduces an approach to improving the recall of information retrieval (IR) systems. The method, termed ReFIT, extends the classic retrieve-and-rerank (R&R) framework by using the re-ranker's output as inference-time relevance feedback to update the retriever's query representation. This essay examines the methodology, experimental results, and implications of the approach.

Methodology

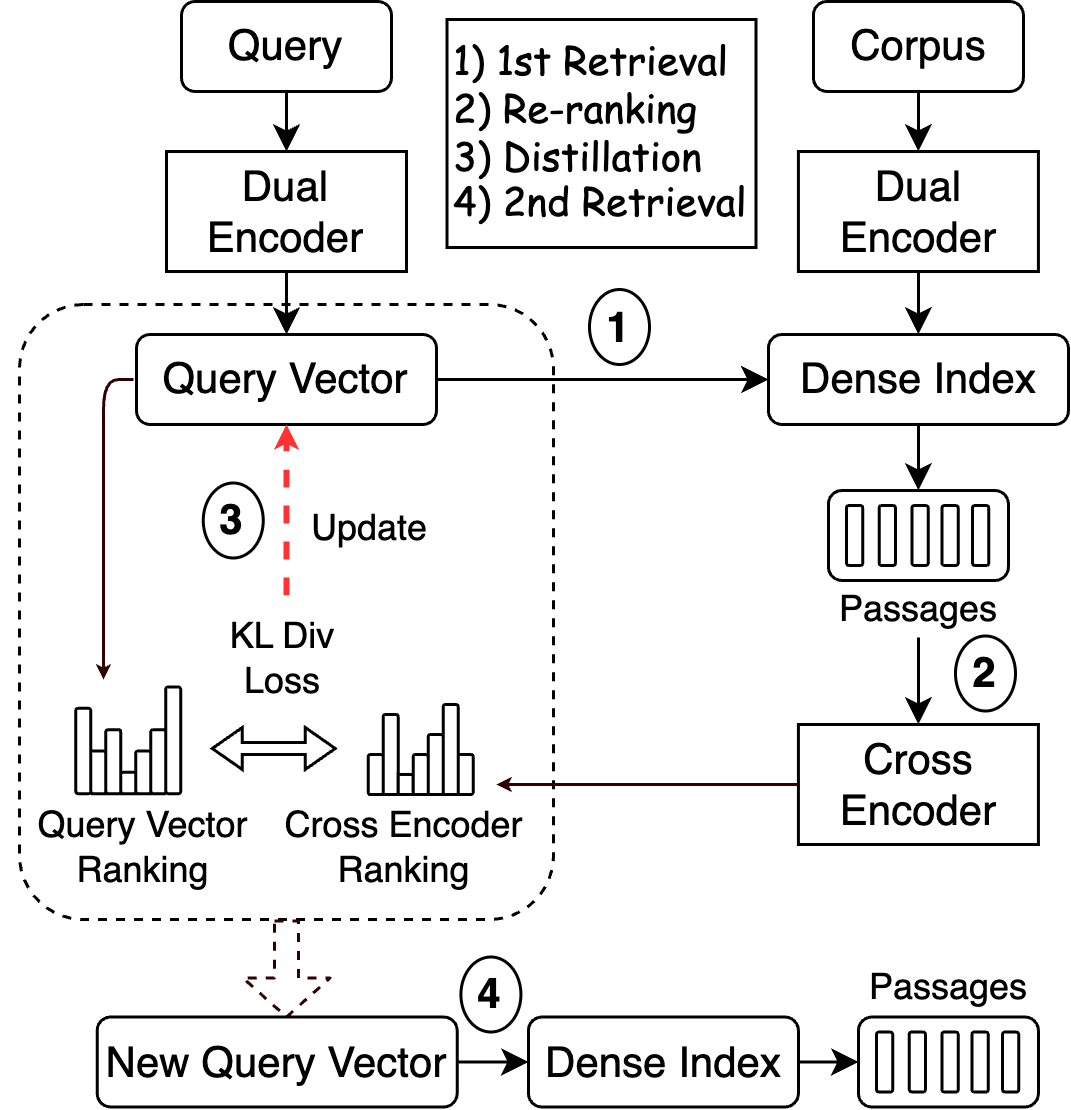

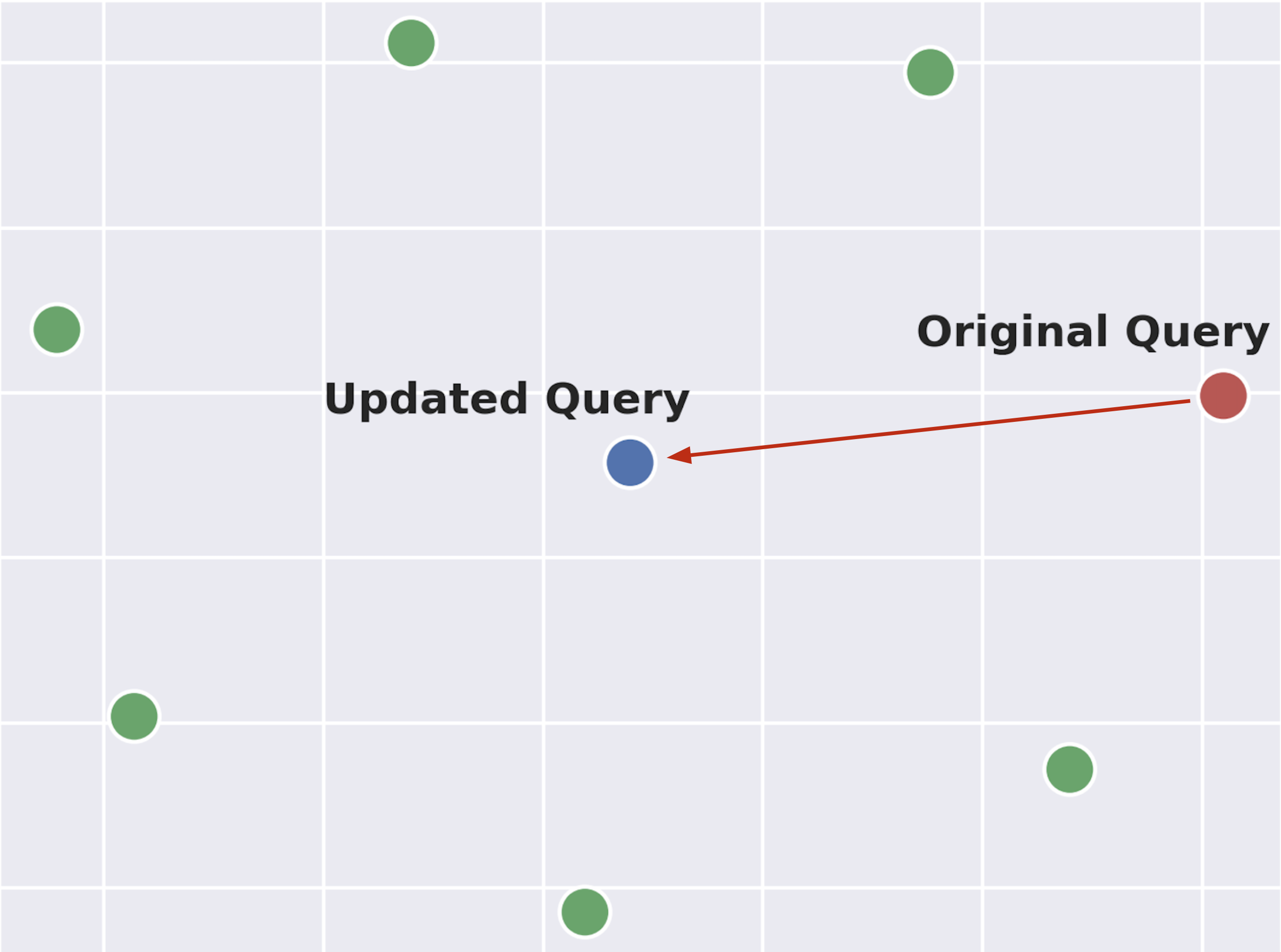

The ReFIT approach integrates a novel inference-time distillation process into the traditional retrieve-and-rerank (R&R) framework to compute a new query vector that improves recall when used in a second retrieval step. The key idea is to update the retriever's query representation using the output of a more powerful cross-encoder re-ranker. This update is performed by minimizing a distillation loss which aligns the retriever's score distribution with that of the re-ranker.
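The distillation objective can be written out as follows. This is a sketch under assumed notation rather than the paper's own formulation: $z$ is the query vector being updated, $d_1, \dots, d_K$ are the retriever's embeddings of the $K$ re-ranked passages, $s_i$ is the re-ranker's score for passage $i$, the retriever is assumed to score by dot product, and $\eta$ is a step size.

$$
P_{\mathrm{rr}}(i) = \frac{\exp(s_i)}{\sum_{j=1}^{K} \exp(s_j)}, \qquad
P_{\mathrm{ret}}(i; z) = \frac{\exp(z^{\top} d_i)}{\sum_{j=1}^{K} \exp(z^{\top} d_j)}
$$

$$
\mathcal{L}(z) = \mathrm{KL}\big(P_{\mathrm{rr}} \,\|\, P_{\mathrm{ret}}(\cdot\,; z)\big)
= \sum_{i=1}^{K} P_{\mathrm{rr}}(i) \log \frac{P_{\mathrm{rr}}(i)}{P_{\mathrm{ret}}(i; z)}
$$

$$
\nabla_z \mathcal{L}(z) = \sum_{i=1}^{K} \big(P_{\mathrm{ret}}(i; z) - P_{\mathrm{rr}}(i)\big)\, d_i, \qquad
z \leftarrow z - \eta \, \nabla_z \mathcal{L}(z)
$$

Under these assumptions the gradient moves $z$ toward passages the re-ranker scores higher than the retriever does, and away from passages the retriever overrates. The direction of the KL divergence, with the re-ranker distribution as the target, is itself an assumption here.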

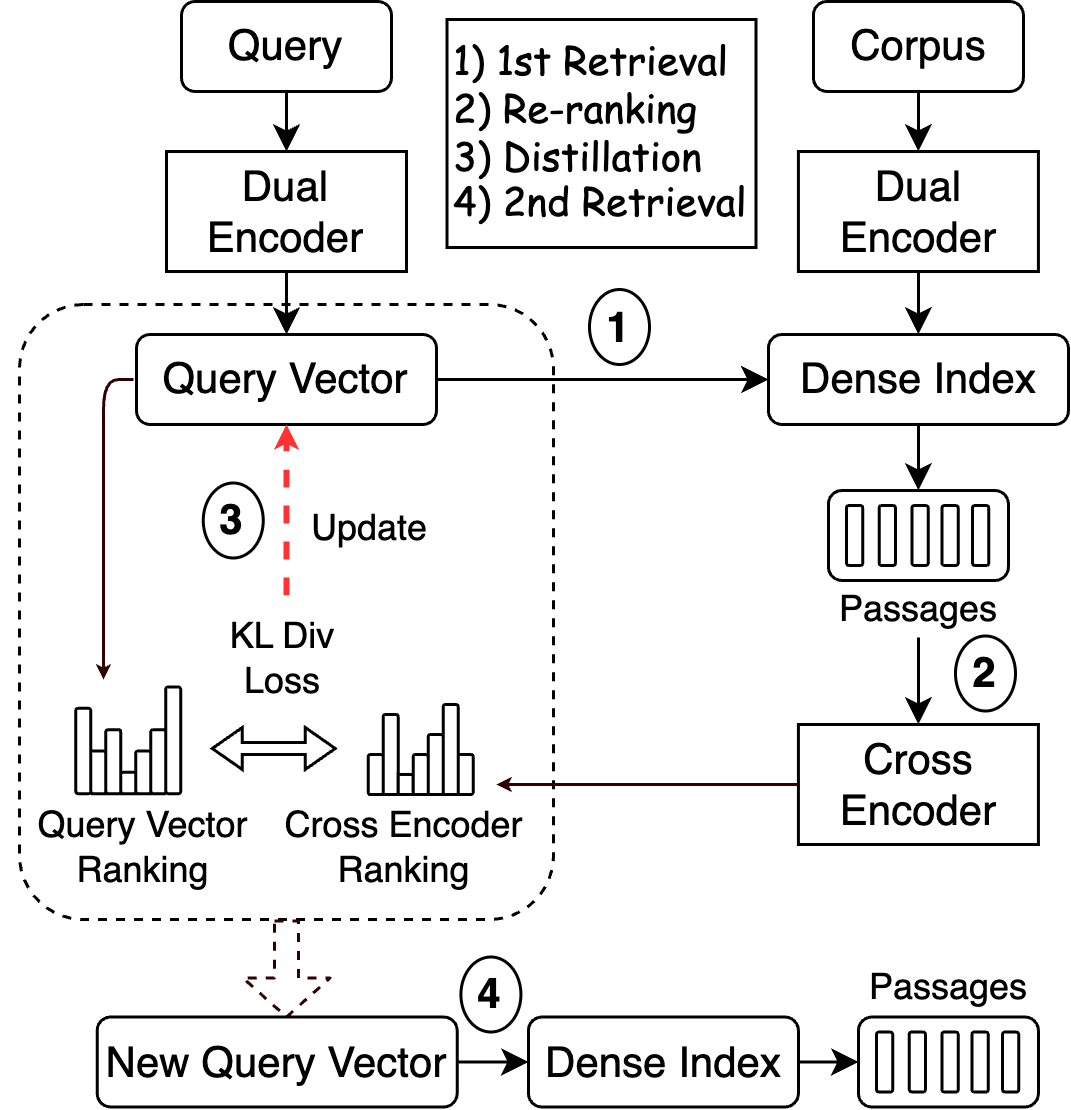

Figure 1: The proposed method for re-ranker relevance feedback. We introduce an inference-time distillation process (step 3) into the traditional retrieve-and-rerank framework (steps 1 and 2) to compute a new query vector, which improves recall when used for a second retrieval step (step 4).

The process begins with a standard R&R pipeline: a dual-encoder retrieves an initial set of candidates, and a cross-encoder re-ranks them. ReFIT then adds an inference-time component that distills the re-ranker's scores over those candidates into an updated query vector, by minimizing the Kullback-Leibler (KL) divergence between the re-ranker's and the retriever's score distributions. Only the query vector changes; the underlying models are left untouched. The updated query vector is then used for a second retrieval step, which improves recall.
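The four steps can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the retriever is assumed to score by dot product, the re-ranker scores are hard-coded stand-ins for a cross-encoder, the update is plain gradient descent on the KL divergence with the re-ranker distribution as the target, and every name here (`retrieve`, `refine_query`, `lr`, `steps`) is hypothetical.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same items."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def retrieve(query_vec, corpus_vecs, k):
    """Return indices of the k corpus vectors with the highest dot product."""
    order = sorted(range(len(corpus_vecs)),
                   key=lambda i: dot(query_vec, corpus_vecs[i]),
                   reverse=True)
    return order[:k]


def refine_query(query_vec, passage_vecs, reranker_scores, lr=0.5, steps=100):
    """Gradient descent on KL(reranker || retriever) with respect to the query.

    Passage vectors stay fixed; only the query vector is updated.
    """
    target = softmax(reranker_scores)
    z = list(query_vec)
    for _ in range(steps):
        pred = softmax([dot(z, d) for d in passage_vecs])
        # Gradient of the KL loss w.r.t. z: sum_i (pred_i - target_i) * d_i
        grad = [sum((pq - pr) * d[j]
                    for pq, pr, d in zip(pred, target, passage_vecs))
                for j in range(len(z))]
        z = [zj - lr * gj for zj, gj in zip(z, grad)]
    return z


# Toy data (made up for illustration): three passages in a 2-d embedding space.
corpus = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
query = [1.0, 0.0]

top = retrieve(query, corpus, k=3)                  # step 1: first retrieval
passages = [corpus[i] for i in top]
rerank_scores = [0.0, 3.0, 0.0]                     # step 2: stand-in re-ranker scores, aligned with `top`
new_query = refine_query(query, passages, rerank_scores)  # step 3: distillation
second = retrieve(new_query, corpus, k=3)           # step 4: second retrieval

kl_before = kl_divergence(softmax(rerank_scores),
                          softmax([dot(query, d) for d in passages]))
kl_after = kl_divergence(softmax(rerank_scores),
                         softmax([dot(new_query, d) for d in passages]))
```

In this toy setup the stand-in re-ranker prefers the second passage, so the refined query drifts toward it and the second retrieval ranks it first. In a real system the second retrieval would run against the full index, where the shifted query can surface relevant passages that the first retrieval missed.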

Experimental Results

Experimental evaluations demonstrate significant improvements in retrieval recall across multiple domains, languages, and modalities. The method yields consistent gains on the BEIR benchmark and the multilingual Mr.TyDi benchmark, as well as in a multimodal text-to-video retrieval setup.

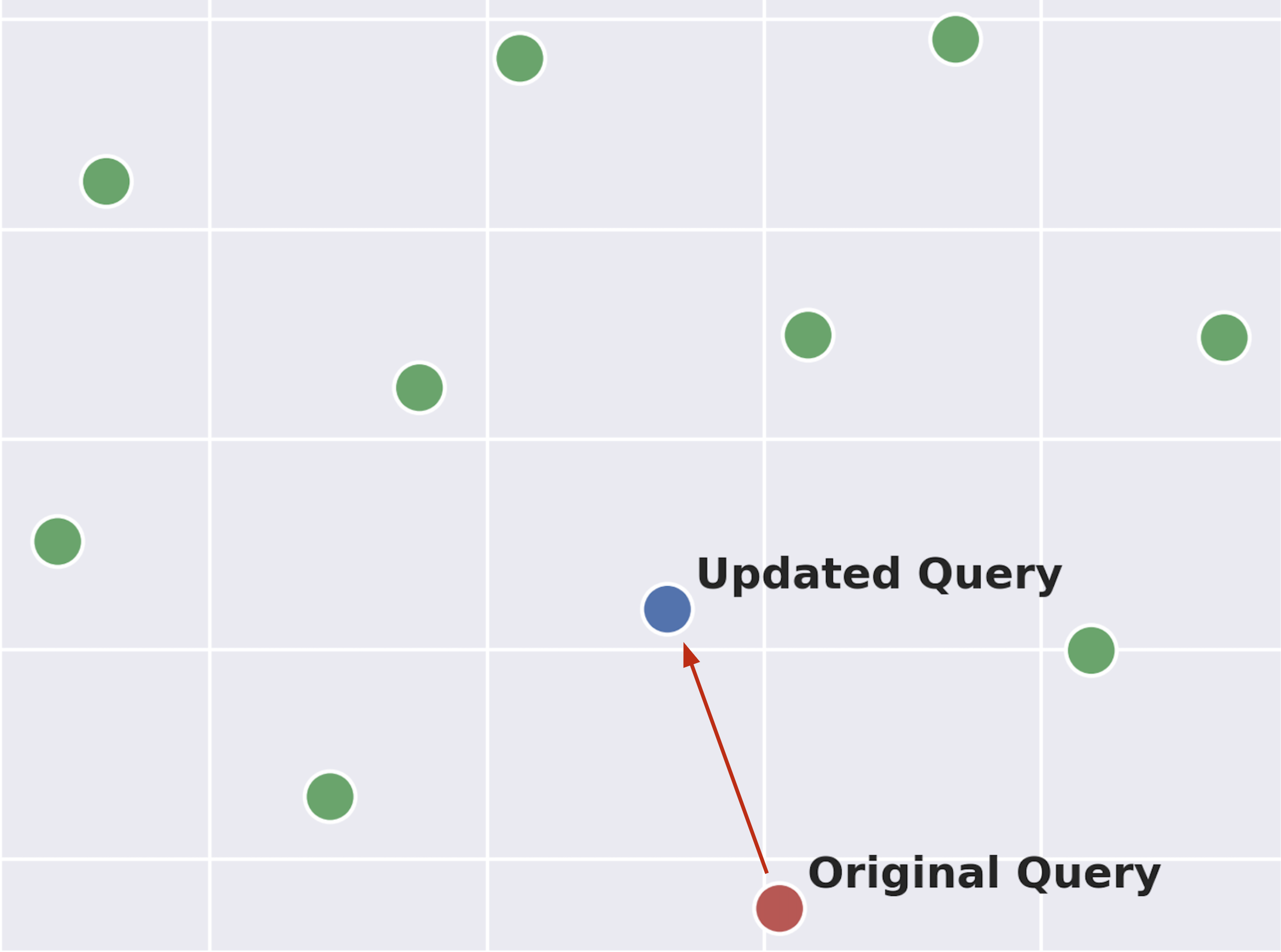

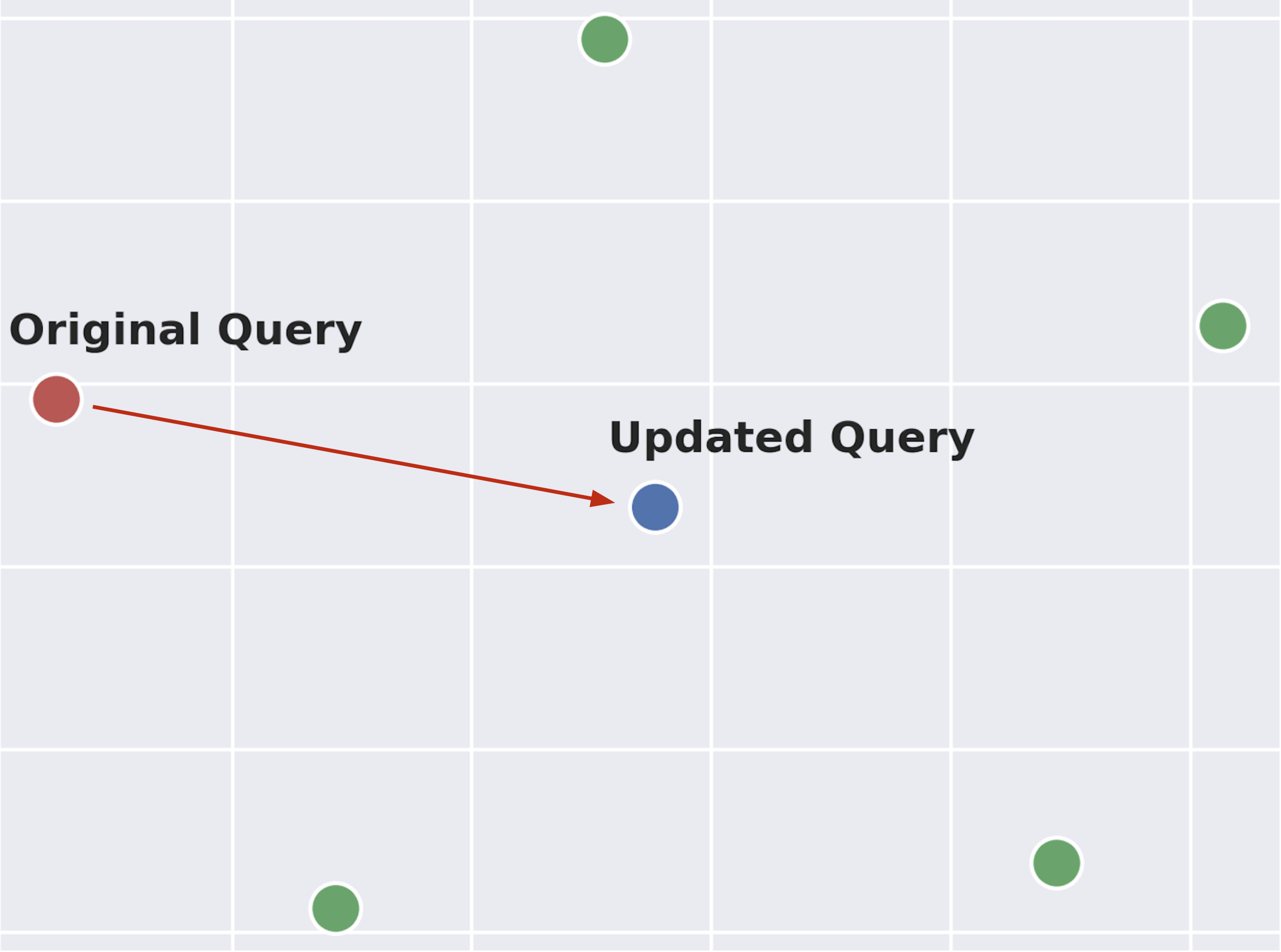

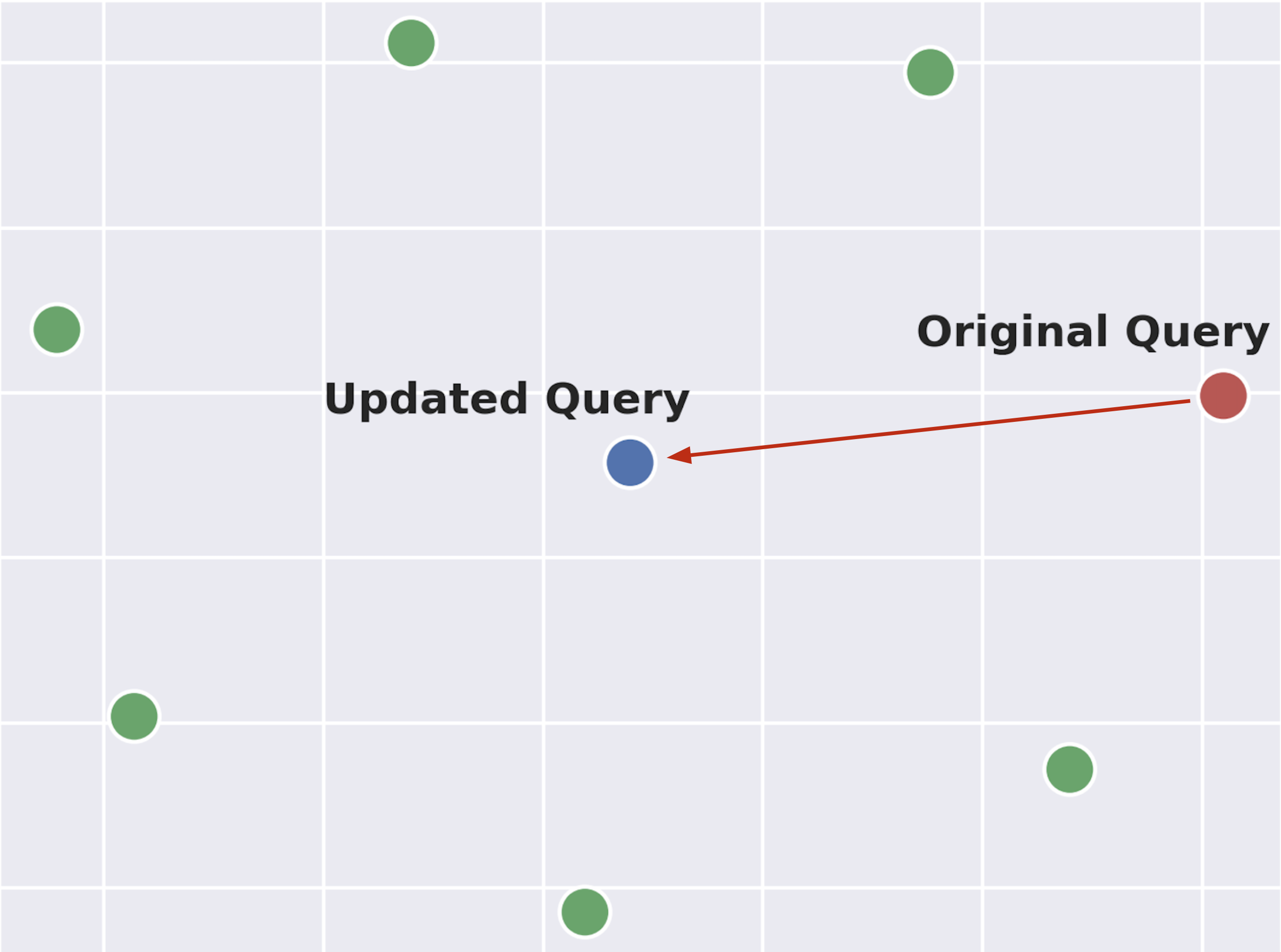

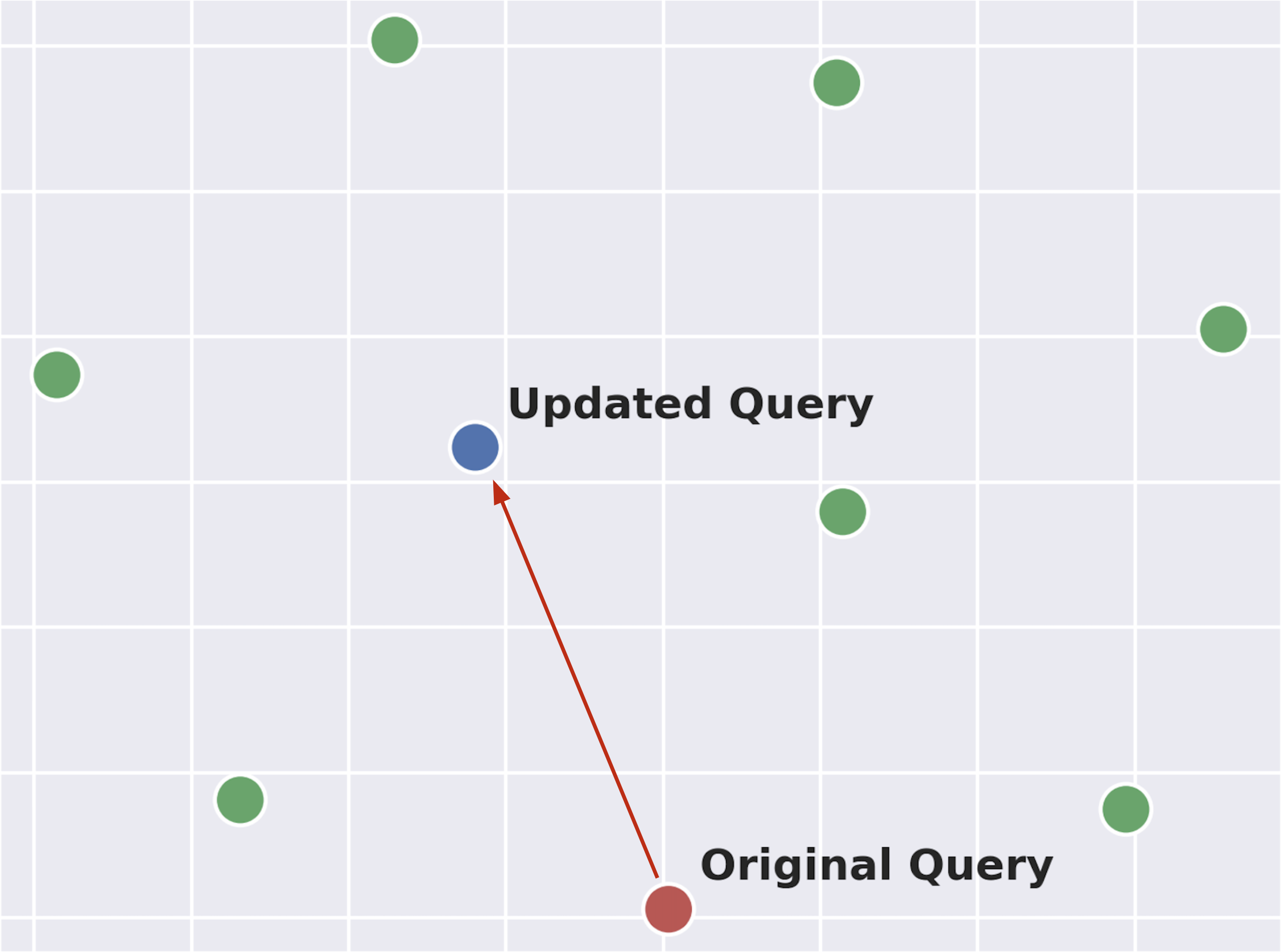

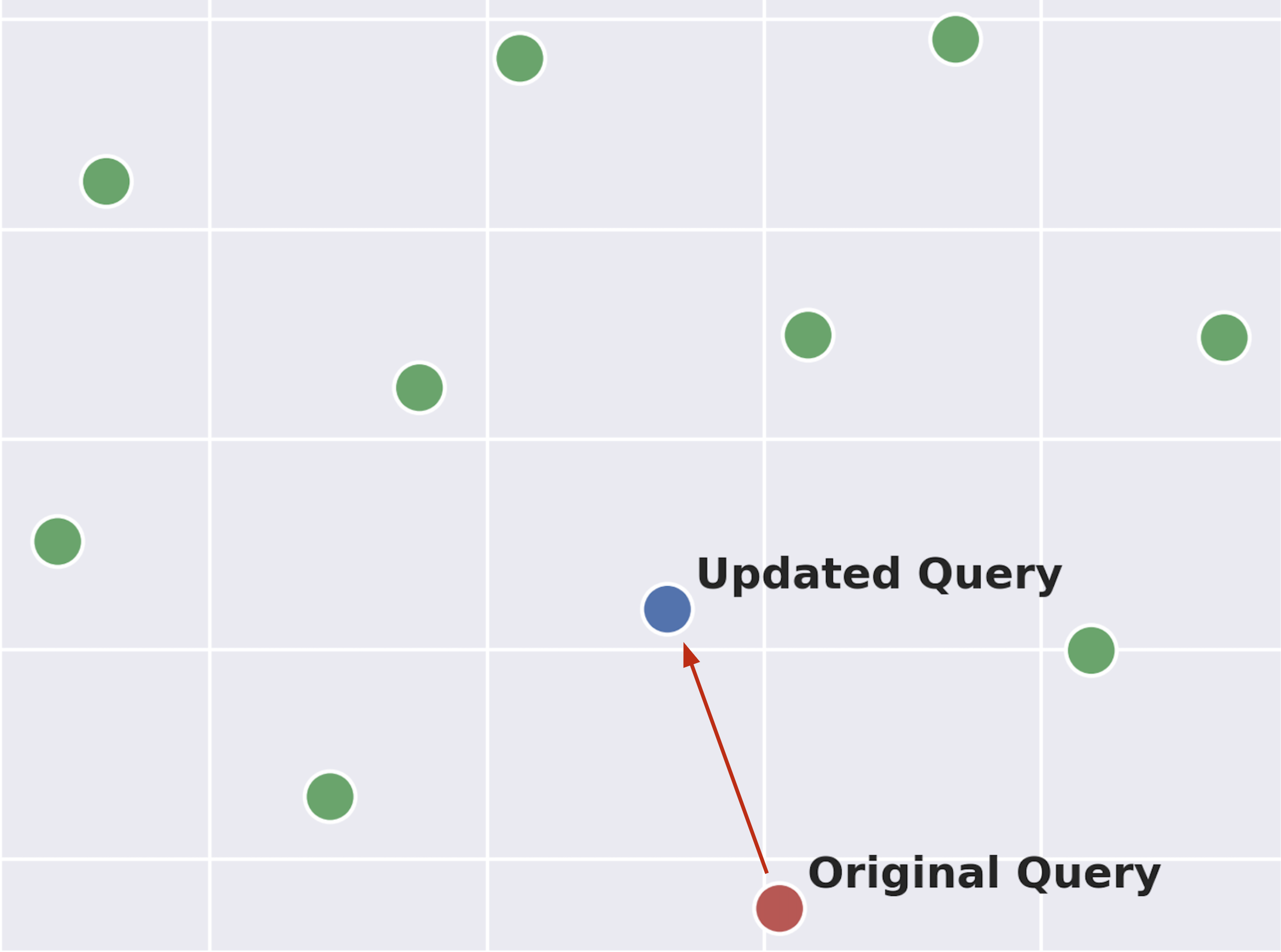

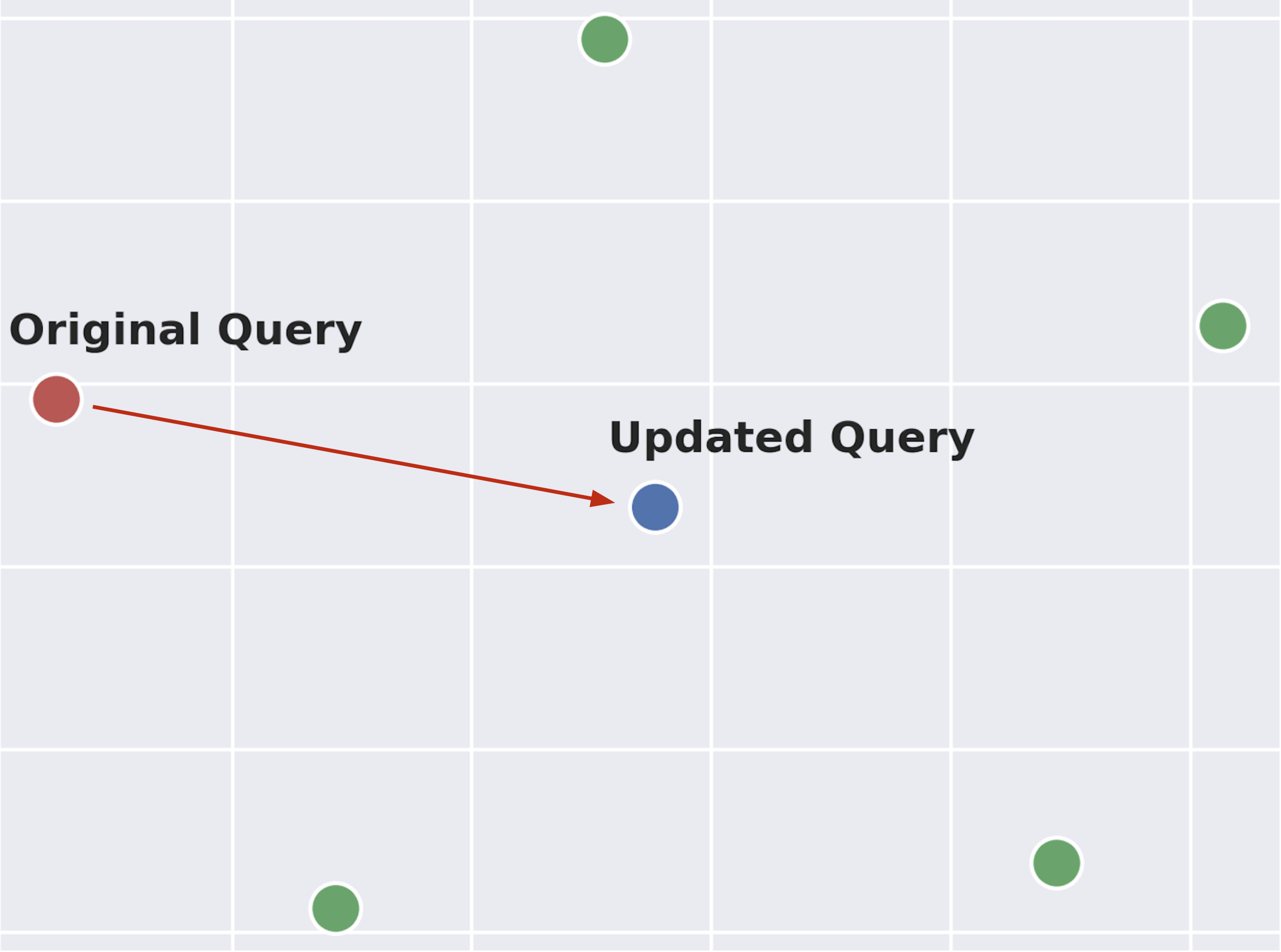

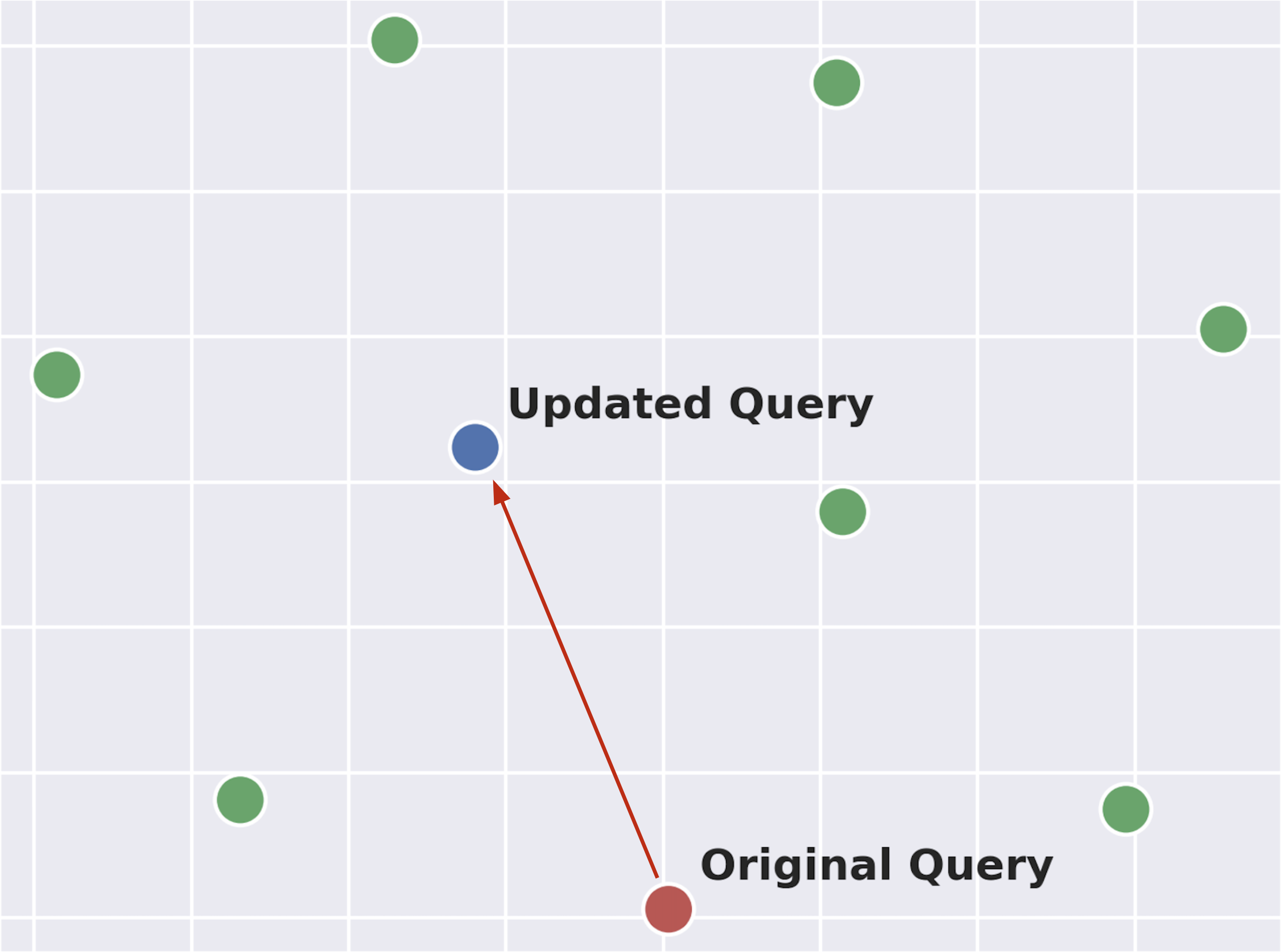

Figure 2: t-SNE plots for four examples from BEIR, with the query vectors shown alongside the corresponding positive passages. The updated query vectors (in blue) after our relevance feedback approach are closer to the positive passages (in green) on average compared to the original query vectors (in red).

The results show that ReFIT achieves higher Recall@100 than both retrieval-only baselines, such as BM25, DPR, and modern dual-encoders, and traditional R&R frameworks. Notably, it does so with only a marginal increase in latency, in contrast to the alternative of re-ranking a larger pool of candidates.
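For readers unfamiliar with the metric, Recall@k can be computed as below. This sketch assumes the common definition, the fraction of a query's relevant passages that appear in the top k results; benchmark toolkits sometimes use variants, and the function name and arguments are illustrative.

```python
def recall_at_k(ranked_ids, relevant_ids, k=100):
    """Fraction of relevant items that appear among the top-k ranked items."""
    relevant = set(relevant_ids)
    if not relevant:
        raise ValueError("recall is undefined when there are no relevant items")
    hits = relevant.intersection(ranked_ids[:k])
    return len(hits) / len(relevant)


# Toy example: one of the two relevant passages is in the top 2.
example = recall_at_k(["a", "b", "c"], {"a", "d"}, k=2)
```

A benchmark score is then typically the mean of this value over all queries.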

Discussion and Future Work

ReFIT provides a lightweight, parameter-free means of significantly improving the recall of existing retrieval frameworks by updating only the query representation at inference time. Because it is architecture-agnostic, it applies across domains, languages, and modalities without altering the underlying models. Future work could extend the relevance feedback concept to token-level query representations for better interpretability, and examine the potential of iterative rounds of relevance feedback.

The ability of ReFIT to leverage re-rankers' discriminative power at inference time positions it as a valuable tool for IR tasks where recall improvement is critical, such as open-domain question answering and dialog generation.

Conclusion

This paper presents a methodological advance in neural IR: it uses inference-time relevance feedback from a re-ranker to refine query representations within the retriever. ReFIT improves recall across multiple benchmarks while keeping inference times competitive. Its simplicity of implementation and its demonstrated effectiveness across different IR setups suggest potential for wide adoption in settings where retrieval accuracy and recall are paramount.