- The paper introduces SCoT prompting with RED strategy, integrating structured reasoning with programming constructs for improved code generation.

- It employs sequence, branch, and loop structures to transform intermediate reasoning into logically sound code.

- Results on benchmarks like HumanEval show up to a 13.79% improvement in Pass@1 scores, highlighting enhanced accuracy and clarity.

Structured Chain-of-Thought Prompting for Code Generation

This paper introduces a novel method called Structured Chain-of-Thought (SCoT) prompting, designed to improve the accuracy of LLMs in code generation tasks. The authors propose a specific technique known as RED prompting, which integrates structured reasoning steps with program structures to enhance the quality of code generated by models like ChatGPT and Codex.

Background and Motivation

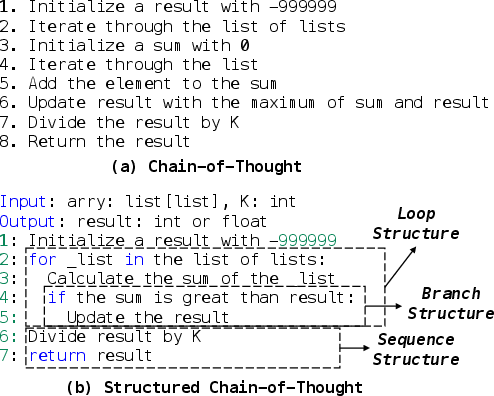

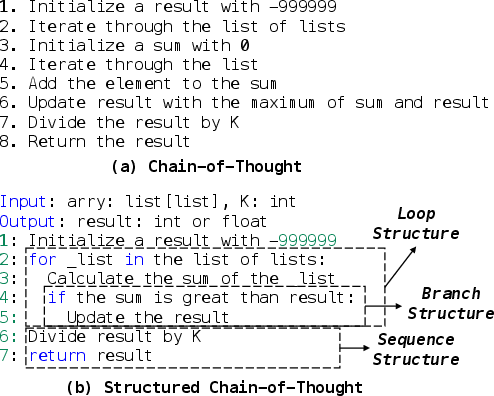

Existing Chain-of-Thought (CoT) prompting techniques have demonstrated marginal improvements in the accuracy of LLMs for code generation by inserting intermediate natural language reasoning steps. However, the CoT methodology was initially developed for natural language processing tasks, and thus these intermediate steps lack the structure often inherent in coding. The paper hypothesizes that employing program structures—such as sequence, branch, and loop structures—within these reasoning steps could lead to more accurate and structured code outputs.

Figure 1: The comparison of a Chain-of-Thoughts (CoT) and our Structured Chain-of-Thought (SCoT).

Methodology: Structured Chain-of-Thought (SCoT)

The core innovation of the paper is the incorporation of program structures within the intermediate reasoning steps, a method termed as SCoT. This involves encoding the intermediate reasoning process of LLMs using structural elements commonly found in programming, thus making the transition from thought to code more logical and seamless.

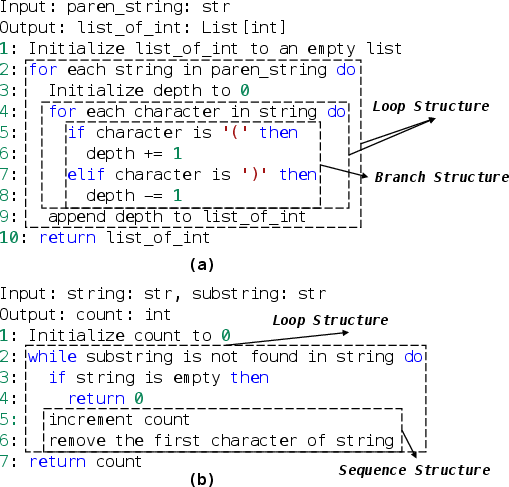

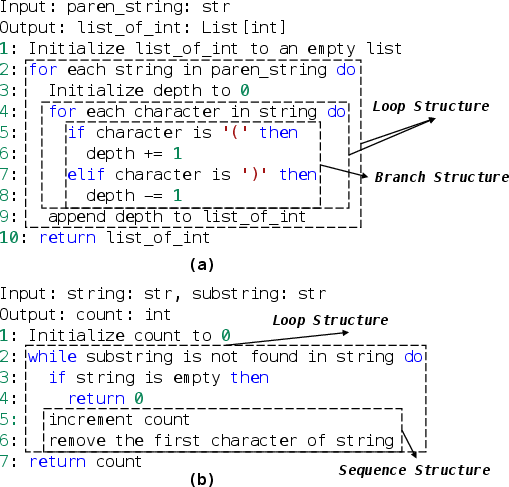

Figure 2: Examples of SCoT in code generation.

Detailed Implementation

- Definition of Structures: The SCoT prompting employs three foundational program structures:

- Sequence Structure: Linear flow of instructions.

- Branch Structure: Conditional pathways using if-else logic.

- Loop Structure: Iterative processes such as for-loops and while-loops.

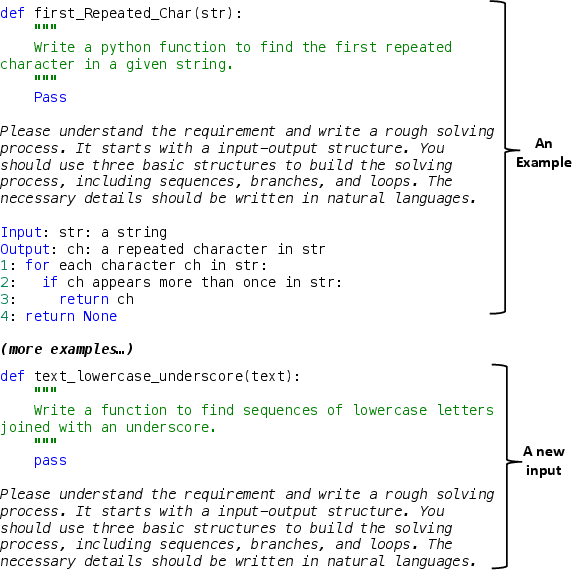

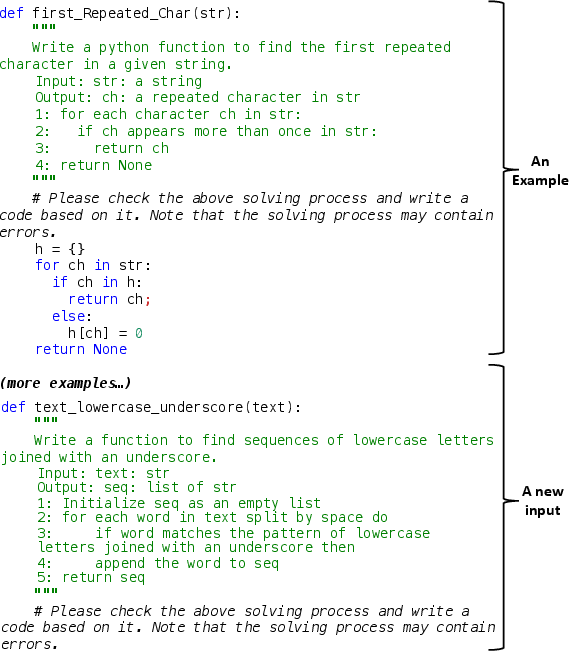

- Prompting Technique: RED prompting orchestrates a two-step generation process comprising:

Results

The effectiveness of RED prompting was evaluated using three prominent code generation benchmarks: HumanEval, MBPP, and MBCPP, where it demonstrated significant performance gains. In particular, RED prompting surpassed traditional CoT prompting approaches by up to 13.79% in Pass@1 scores for the HumanEval benchmark, marking a substantial leap in code accuracy.

Analysis and Implications

The study illustrated that the structured intermediate reasoning enabled by SCoT leads to clearer logic paths and more accurate code outputs. This structured approach aids LLMs in navigating through programming tasks by relying on familiar control structures, thus reducing ambiguity and enhancing code completion fidelity. Furthermore, human evaluations indicated a higher preference for programs generated by RED prompting due to clarity and maintainability.

Conclusion

SCoT prompting offers a promising advancement in the field of AI-driven code generation by leveraging the structural properties of programming languages to articulate intermediate reasoning in a more coherent manner. The significant improvements in various benchmarks underscore the potential of structured thought processes in enhancing LLM performance on complex coding tasks. This research paves the way for future explorations into integrating domain-specific structures into AI reasoning processes to further optimize and expand the capabilities of LLMs in specialized applications.