RelPose++: Recovering 6D Poses from Sparse-view Observations (2305.04926v2)

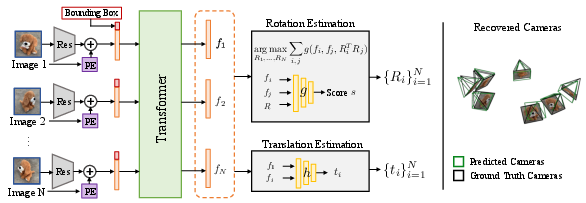

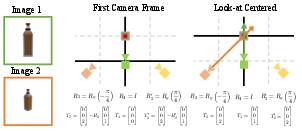

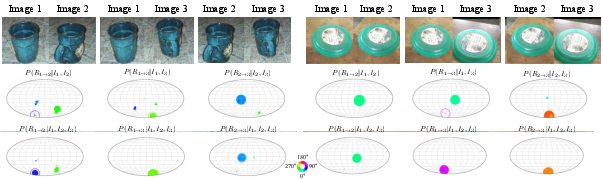

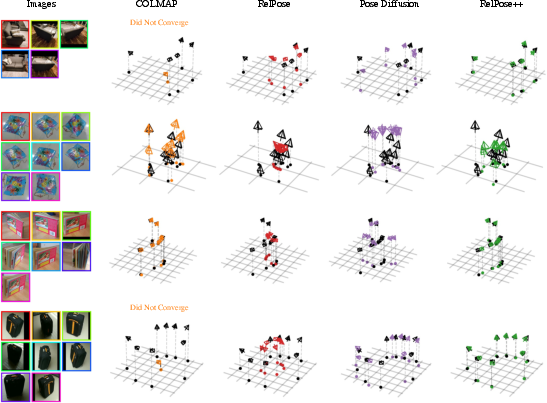

Abstract: We address the task of estimating 6D camera poses from sparse-view image sets (2-8 images). This task is a vital pre-processing stage for nearly all contemporary (neural) reconstruction algorithms but remains challenging given sparse views, especially for objects with visual symmetries and texture-less surfaces. We build on the recent RelPose framework, which learns a network that infers distributions over relative rotations for image pairs. We extend this approach in two key ways. First, we use attentional transformer layers to process multiple images jointly, since additional views of an object may resolve ambiguous symmetries in any given image pair (such as the handle of a mug that becomes visible in a third view). Second, we augment this network to also report camera translations by defining an appropriate coordinate system that decouples the ambiguity in rotation estimation from translation prediction. Our final system yields large improvements in 6D pose prediction over prior art on both seen and unseen object categories and also enables pose estimation and 3D reconstruction for in-the-wild objects.
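The abstract does not define the coordinate system used for translation prediction. The sketch below assumes one plausible object-centric convention: place the world origin at the point closest, in the least-squares sense, to every camera's optical axis, then re-express each camera translation in that frame. The world-to-camera convention `x_cam = R x_world + t` and all helper names (`camera_center_and_axis`, `closest_point_to_axes`, `recenter_translation`) are our assumptions for illustration, not the paper's code; scale normalization is not handled.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def _det3(m: Mat3) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a: Mat3, b: Sequence[float]) -> Vec3:
    """Solve the 3x3 linear system a x = b with Cramer's rule."""
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("optical axes are (nearly) parallel: no unique closest point")
    x = []
    for k in range(3):
        # Replace column k of `a` with `b`.
        a_k = [[b[r] if c == k else a[r][c] for c in range(3)] for r in range(3)]
        x.append(_det3(a_k) / det)
    return (x[0], x[1], x[2])


def camera_center_and_axis(R: Mat3, t: Sequence[float]) -> Tuple[Vec3, Vec3]:
    """Camera center c = -R^T t and optical axis R^T e_z in world coordinates,
    assuming the world-to-camera convention x_cam = R @ x_world + t."""
    c = tuple(-sum(R[r][k] * t[r] for r in range(3)) for k in range(3))
    axis = (R[2][0], R[2][1], R[2][2])  # R^T e_z is the third row of R
    return c, axis


def closest_point_to_axes(centers: Sequence[Vec3], axes: Sequence[Vec3]) -> Vec3:
    """Point p minimizing sum_i ||P_i (p - c_i)||^2, where P_i = I - d_i d_i^T
    projects onto the plane orthogonal to axis i. Normal equations:
    (sum_i P_i) p = sum_i P_i c_i."""
    A = [[0.0] * 3 for _ in range(3)]
    rhs = [0.0] * 3
    for c, d in zip(centers, axes):
        norm = math.sqrt(sum(v * v for v in d))
        u = [v / norm for v in d]  # unit direction of the optical axis
        for r in range(3):
            for s in range(3):
                p_rs = (1.0 if r == s else 0.0) - u[r] * u[s]
                A[r][s] += p_rs
                rhs[r] += p_rs * c[s]
    return _solve3(A, rhs)


def recenter_translation(R: Mat3, t: Sequence[float], origin: Vec3) -> Vec3:
    """Translation after moving the world origin to `origin`:
    x_cam = R (x_new + origin) + t, hence t_new = R @ origin + t."""
    return tuple(t[r] + sum(R[r][k] * origin[k] for k in range(3)) for r in range(3))


if __name__ == "__main__":
    # Two hypothetical cameras looking at the point (1, 1, 1) from different directions.
    R_a, t_a = [[1, 0, 0], [0, 1, 0], [0, 0, 1]], (-1.0, -1.0, 1.0)
    R_b, t_b = [[0, 0, 1], [0, 1, 0], [-1, 0, 0]], (-1.0, -1.0, 3.0)
    cams = [camera_center_and_axis(R_a, t_a), camera_center_and_axis(R_b, t_b)]
    origin = closest_point_to_axes([c for c, _ in cams], [d for _, d in cams])
    print("new origin:", origin)
    print("t_a':", recenter_translation(R_a, t_a, origin))
    print("t_b':", recenter_translation(R_b, t_b, origin))
```

In this toy setup both re-expressed translations come out as (0, 0, 2): when a camera's optical axis passes exactly through the new origin, its translation reduces to (0, 0, distance to origin) and no longer changes under rotations of that camera about its own optical axis. This illustrates how a coordinate choice can separate translation from part of the rotation, under the stated assumptions.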

- Objectron: A Large Scale Dataset of Object-Centric Videos in the Wild with Pose Annotations. In CVPR, 2021.

- NeMo: Neural Mesh Models of Contrastive Features for Robust 3D Pose Estimation. In ICLR, 2021.

- SURF: Speeded Up Robust Features. In ECCV, 2006.

- Extreme Rotation Estimation using Dense Correlation Volumes. In CVPR, 2021.

- ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual-Inertial and Multi-Map SLAM. T-RO, 2021.

- ShapeNet: An Information-Rich 3D Model Repository. arXiv:1512.03012, 2015.

- Wide-Baseline Relative Camera Pose Estimation with Directional Learning. In CVPR, 2021.

- Universal Correspondence Network. In NeurIPS, 2016.

- MonoSLAM: Real-time Single Camera SLAM. TPAMI, 2007.

- SuperPoint: Self-supervised Interest Point Detection and Description. In CVPR-W, 2018.

- Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Communications of the ACM, 1981.

- Deep Orientation Uncertainty Learning Based on a Bingham Loss. In ICLR, 2019.

- Multiple View Geometry in Computer Vision. Cambridge University Press, 2003.

- Rotation Averaging. IJCV, 2013.

- Deep Residual Learning for Image Recognition. In CVPR, 2016.

- Few-View Object Reconstruction with Unknown Categories and Camera Poses. arXiv:2212.04492, 2022.

- End-to-end Recovery of Human Shape and Pose. In CVPR, 2018.

- Learning 3D Human Dynamics from Video. In CVPR, 2019.

- VIBE: Video Inference for Human Body Pose and Shape Estimation. In CVPR, 2020.

- BARF: Bundle-Adjusting Neural Radiance Fields. In ICCV, 2021.

- SIFT Flow: Dense Correspondence Across Scenes and Its Applications. TPAMI, 2010.

- H. Christopher Longuet-Higgins. A Computer Algorithm for Reconstructing a Scene from Two Projections. Nature, 1981.

- David G. Lowe. Distinctive Image Features from Scale-invariant Keypoints. IJCV, 2004.

- An Iterative Image Registration Technique with an Application to Stereo Vision. In IJCAI, 1981.

- MediaPipe: A Framework for Building Perception Pipelines. arXiv:1906.08172, 2019.

- Virtual Correspondence: Humans as a Cue for Extreme-View Geometry. In CVPR, 2022.

- Explaining the Ambiguity of Object Detection and 6D Pose from Visual Data. In ICCV, 2019.

- VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera. TOG, 2017.

- Relative Camera Pose Estimation Using Convolutional Neural Networks. In ACIVS, 2017.

- NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. In ECCV, 2020.

- ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras. T-RO, 2017.

- ORB-SLAM: A Versatile and Accurate Monocular SLAM System. T-RO, 2015.

- Implicit-PDF: Non-Parametric Representation of Probability Distributions on the Rotation Manifold. In ICML, 2021.

- PIZZA: A Powerful Image-only Zero-Shot Zero-CAD Approach to 6 DoF Tracking. In 3DV, 2022.

- David Nistér. An Efficient Solution to the Five-point Relative Pose Problem. TPAMI, 2004.

- Learning 3D Object Categories by Looking Around Them. In ICCV, 2017.

- Learning Orientation Distributions for Object Pose Estimation. In IROS, 2020.

- ZePHyR: Zero-shot Pose Hypothesis Scoring. In ICRA, 2021.

- Common Objects in 3D: Large-Scale Learning and Evaluation of Real-life 3D Category Reconstruction. In ICCV, 2021.

- The 8-Point Algorithm as an Inductive Bias for Relative Pose Prediction by ViTs. In 3DV, 2022.

- From Coarse to Fine: Robust Hierarchical Localization at Large Scale. In CVPR, 2019.

- SuperGlue: Learning Feature Matching with Graph Neural Networks. In CVPR, 2020.

- Structure-from-Motion Revisited. In CVPR, 2016.

- Pixelwise View Selection for Unstructured Multi-View Stereo. In ECCV, 2016.

- SparsePose: Sparse-View Camera Pose Regression and Refinement. In CVPR, 2023.

- A Benchmark for the Evaluation of RGB-D SLAM Systems. In IROS, 2012.

- Canonical Capsules: Self-supervised Capsules in Canonical Pose. In NeurIPS, 2021.

- DROID-SLAM: Deep Visual SLAM for Monocular, Stereo, and RGB-D Cameras. In NeurIPS, 2021.

- Bundle Adjustment: A Modern Synthesis. In International Workshop on Vision Algorithms, 1999.

- Shinji Umeyama. Least-squares Estimation of Transformation Parameters Between Two Point Patterns. TPAMI, 1991.

- MetaPose: Fast 3D Pose from Multiple Views without 3D Supervision. In CVPR, 2022.

- Attention Is All You Need. In NeurIPS, 2017.

- PoseDiffusion: Solving Pose Estimation via Diffusion-aided Bundle Adjustment. In ICCV, 2023.

- DeepVO: Towards End-to-End Visual Odometry with Deep Recurrent Convolutional Neural Networks. In ICRA, 2017.

- SegICP: Integrated Deep Semantic Segmentation and Pose Estimation. In IROS, 2017.

- Pose from Shape: Deep Pose Estimation for Arbitrary 3D Objects. In BMVC, 2019.

- PoseContrast: Class-Agnostic Object Viewpoint Estimation in the Wild with Pose-Aware Contrastive Learning. In 3DV, 2021.

- D3VO: Deep Depth, Deep Pose and Deep Uncertainty for Monocular Visual Odometry. In CVPR, 2020.

- pixelNeRF: Neural Radiance Fields from One or Few Images. In CVPR, 2021.

- Perceiving 3D Human-Object Spatial Arrangements from a Single Image in the Wild. In ECCV, 2020.

- NeRS: Neural Reflectance Surfaces for Sparse-view 3D Reconstruction in the Wild. In NeurIPS, 2021.

- RelPose: Predicting Probabilistic Relative Rotation for Single Objects in the Wild. In ECCV, 2022.

- Richard Zhang. Making Convolutional Networks Shift-Invariant Again. In ICML, 2019.

- Stereo Magnification: Learning View Synthesis Using Multiplane Images. TOG 37 (SIGGRAPH), 2018.

- SparseFusion: Distilling View-conditioned Diffusion for 3D Reconstruction. In CVPR, 2023.
