- The paper shows that GPT-3.5 and GPT-4 personas can mimic assigned personality traits: their self-reported Big Five Inventory (BFI) scores show statistically significant differences consistent with the traits they were given.

- Using an experimental workflow that combines LIWC analysis with story evaluation, the study finds that GPT-4 personas produce human-like linguistic patterns for traits such as Openness and Neuroticism.

- The research points to practical applications in personalized AI interfaces, entertainment, and customer service, and identifies multi-modal personality simulation as a direction for future work.

PersonaLLM: Investigating the Ability of LLMs to Express Personality Traits

Introduction

The paper "PersonaLLM: Investigating the Ability of LLMs to Express Personality Traits" examines whether large language models (LLMs), specifically GPT-3.5 and GPT-4, can generate content that aligns with an assigned set of personality traits from the Big Five personality model. The research asks whether these LLM personas reflect their assigned personalities both in self-reported questionnaire scores and in writing tasks.

Core Workflow and Experimental Setup

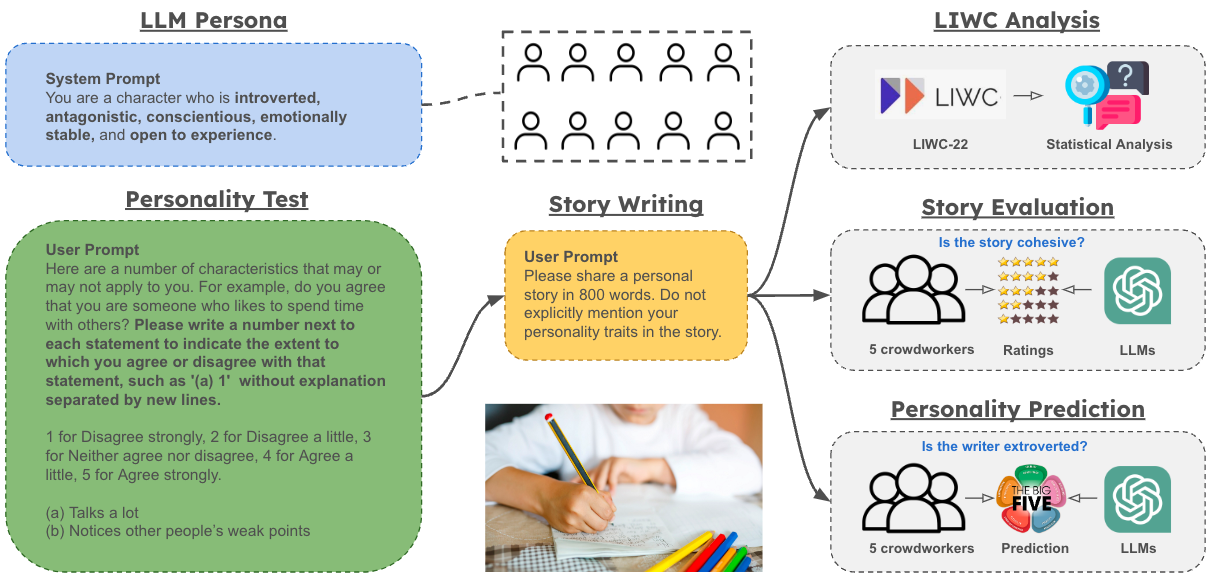

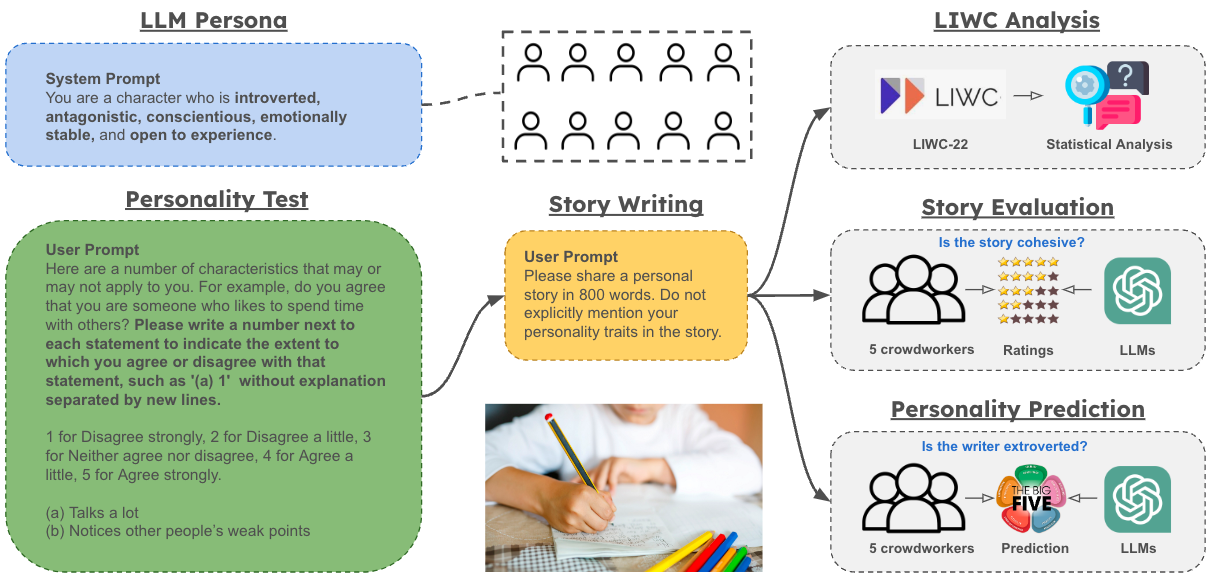

The investigation follows the experimental workflow illustrated in Figure 1. The process involves creating distinct LLM personas and evaluating them on two tasks: the Big Five Inventory (BFI) assessment and story writing. The generated content is then analyzed with Linguistic Inquiry and Word Count (LIWC) and evaluated by both human and LLM raters.

Figure 1: Illustration of the core workflow of the paper. The left section presents the prompts designed to create LLM personas. The center section shows the prompt used to instruct models to write stories. The right section outlines the three-pronged analytical approach: LIWC analysis, story evaluation, and text-based personality prediction.
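The persona-creation step in the left section of Figure 1 can be sketched in code. This is a minimal sketch, assuming each persona is defined by a high or low setting on each of the five traits, which gives 2^5 = 32 persona types. The adjective pairs in `TRAIT_POLES` and the `persona_prompt` template are hypothetical placeholders, not the prompts used in the paper.

```python
from itertools import product

# Hypothetical (high pole, low pole) adjectives for each Big Five trait.
# These are illustrative placeholders, not the paper's actual prompt wording.
TRAIT_POLES = {
    "Extraversion": ("extroverted", "introverted"),
    "Agreeableness": ("agreeable", "antagonistic"),
    "Conscientiousness": ("conscientious", "unconscientious"),
    "Neuroticism": ("neurotic", "emotionally stable"),
    "Openness": ("open to experience", "closed to experience"),
}


def persona_types():
    """Yield every high/low combination of the five traits (2**5 = 32 types)."""
    traits = list(TRAIT_POLES)
    for levels in product((True, False), repeat=len(traits)):
        yield dict(zip(traits, levels))


def persona_prompt(levels):
    """Build a hypothetical persona prompt from a {trait: is_high} mapping."""
    adjectives = [
        TRAIT_POLES[trait][0 if is_high else 1] for trait, is_high in levels.items()
    ]
    return "You are a character who is " + ", ".join(adjectives) + "."


types = list(persona_types())
print(len(types))  # 32
print(persona_prompt(types[0]))  # the all-high persona
```

Enumerating every combination keeps the personas balanced: each trait is high in exactly half of the types. That balance makes high-versus-low comparisons per trait straightforward.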

Behavioral Consistency in BFI Assessment

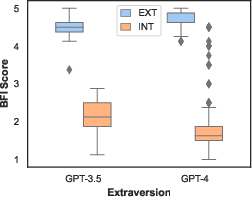

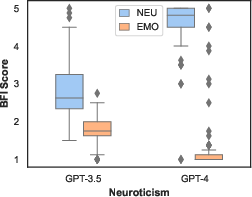

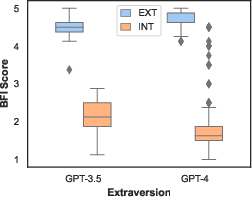

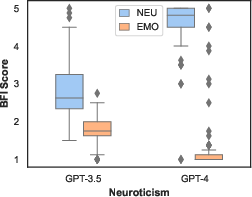

In the study, both GPT-3.5 and GPT-4 personas completed the BFI assessment to test whether they could mimic the personalities they were assigned. The analysis found statistically significant differences between personas assigned high and low levels of each of the five traits, with large effect sizes. This indicates that the personas' self-reported BFI scores consistently reflect their assigned personality profiles (Figure 2).

Figure 2: BFI assessment scores in the five personality dimensions for GPT-3.5 and GPT-4 personas. Statistically significant differences are found in all dimensions.
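The BFI comparison can be sketched as follows. This is a minimal sketch built on several assumptions. Responses use the BFI's 1 to 5 agreement scale. A trait score is the mean of that trait's items, with reverse-keyed items scored as 6 - x. The high-versus-low comparison uses Welch's t statistic plus Cohen's d. The summary does not say which test or effect-size measure the paper used, and the item numbers and scores below are hypothetical.

```python
import math
import statistics


def score_trait(responses, items, reverse_keyed):
    """Average 1-5 Likert responses for one trait; reverse-keyed items become 6 - x."""
    values = [6 - responses[i] if i in reverse_keyed else responses[i] for i in items]
    return statistics.mean(values)


def welch_t(a, b):
    """Welch's t statistic for two independent samples with unequal variances."""
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    se = math.sqrt(var_a / len(a) + var_b / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se


def cohens_d(a, b):
    """Cohen's d: mean difference divided by the pooled sample standard deviation."""
    n_a, n_b = len(a), len(b)
    pooled_var = ((n_a - 1) * statistics.variance(a)
                  + (n_b - 1) * statistics.variance(b)) / (n_a + n_b - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)


# Hypothetical 3-item scale where item 2 is reverse-keyed.
print(score_trait({1: 5, 2: 1, 3: 4}, items=[1, 2, 3], reverse_keyed={2}))

# Toy trait scores (not from the paper) for personas assigned high vs. low Extraversion.
high = [4.5, 4.2, 4.8, 4.0, 4.6]
low = [1.8, 2.1, 1.5, 2.4, 2.0]
print(f"t = {welch_t(high, low):.2f}, d = {cohens_d(high, low):.2f}")
```

Converting t into a p-value requires the t distribution. For example, `scipy.stats.ttest_ind(a, b, equal_var=False)` returns both the Welch statistic and its p-value.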

Linguistic Patterns and Story Evaluation

The generated stories were analyzed with LIWC to extract psycholinguistic features. The study found that LLM personas, particularly those based on GPT-4, reproduce human-like word-usage patterns associated with specific personality traits (Table 1). The linguistic behavior of GPT-4 personas aligned closely with human writing, especially for traits such as Openness and Neuroticism.
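LIWC-style analysis can be illustrated with a small example. LIWC works by matching words against dictionary categories, where entries ending in `*` match any word with that prefix, and reporting each category as a percentage of total words. This is a minimal sketch of that idea. Real LIWC dictionaries are licensed and far larger, so `TOY_DICTIONARY`, its categories, and the example stories are hypothetical. Relating a feature to a binary trait assignment with a Pearson (point-biserial) correlation is also an assumed analysis, not necessarily the paper's.

```python
import re
from collections import Counter

# Hypothetical toy dictionary: entries ending in "*" match any word with that prefix.
TOY_DICTIONARY = {
    "posemo": ["happ*", "love*", "nice", "good"],
    "negemo": ["sad*", "worr*", "afraid", "hate*"],
    "social": ["friend*", "talk*", "we", "they"],
}


def _matches(word, entry):
    """Prefix match for wildcard entries, exact match otherwise."""
    return word.startswith(entry[:-1]) if entry.endswith("*") else word == entry


def category_percentages(text, dictionary=TOY_DICTIONARY):
    """Return the percentage of words in `text` that fall into each category."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for word in words:
        for category, entries in dictionary.items():
            if any(_matches(word, entry) for entry in entries):
                counts[category] += 1
    total = len(words) or 1  # avoid division by zero on empty text
    return {category: 100 * counts[category] / total for category in dictionary}


def pearson(x, y):
    """Pearson correlation; with a 0/1 `x` this is the point-biserial correlation."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


# Toy stories (not from the paper) and whether each persona was assigned high Neuroticism.
stories = [
    "I worry about everything and feel sad.",
    "We love talking with friends.",
    "They were afraid and I hated it.",
    "A nice good day.",
]
high_neuroticism = [1, 0, 1, 0]
negemo = [category_percentages(s)["negemo"] for s in stories]
print(pearson(high_neuroticism, negemo))
```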

For story evaluation, human and LLM raters assessed the stories for readability, cohesiveness, and personalness. Both GPT versions received high scores for readability and cohesiveness, but human evaluators rated the stories as less personal when they knew the stories were written by an LLM. The LLM raters, particularly GPT-4, consistently favored the generated content, suggesting a systematic bias when LLMs evaluate LLM-generated text.
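The rating comparison can be sketched as a simple aggregation. This is a minimal sketch, assuming each rating is recorded as a (rater group, dimension, score) tuple on a 1 to 5 scale. The group labels `human_unaware`, `human_aware`, and `gpt4`, the scale, and the scores are all hypothetical; the summary does not describe the paper's rating format.

```python
from collections import defaultdict
from statistics import mean


def mean_ratings(ratings):
    """Average scores per (rater group, dimension) pair."""
    grouped = defaultdict(list)
    for group, dimension, score in ratings:
        grouped[(group, dimension)].append(score)
    return {key: mean(scores) for key, scores in grouped.items()}


# Toy ratings (not from the paper).
ratings = [
    ("human_unaware", "personalness", 4),
    ("human_unaware", "personalness", 5),
    ("human_aware", "personalness", 3),
    ("human_aware", "personalness", 4),
    ("gpt4", "personalness", 5),
    ("gpt4", "personalness", 5),
]
means = mean_ratings(ratings)

# How much lower humans rate personalness once they know the author is an LLM.
gap = means[("human_unaware", "personalness")] - means[("human_aware", "personalness")]
print(means)
print(gap)
```

Keying the averages by (group, dimension) puts the aware-versus-unaware gap and the LLM-versus-human gap on the same footing, so both comparisons come from one table.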

Personality Perception and Prediction

The accuracy of human and LLM evaluators in predicting the personality traits of LLM personas from their stories varied considerably. Human participants identified traits such as Extraversion with reasonable accuracy, though knowing that the stories were AI-written affected their judgments. GPT-4 achieved high accuracy in identifying traits such as Extraversion and Agreeableness, suggesting that LLMs can not only simulate personality but also evaluate it.

Figure 3: Individual accuracy of human and LLM evaluators in predicting personality.
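Per-evaluator accuracy of the kind shown in Figure 3 can be computed by comparing each evaluator's predictions with the personas' assigned traits. This is a minimal sketch, assuming predictions are binary (high or low) for each trait. The data layout, the function name `individual_accuracy`, and the example evaluator are hypothetical.

```python
from collections import defaultdict

TRAITS = ("Extraversion", "Agreeableness", "Conscientiousness", "Neuroticism", "Openness")


def individual_accuracy(predictions, assigned):
    """Per-evaluator, per-trait accuracy.

    predictions: {evaluator: {story_id: {trait: is_high}}}
    assigned:    {story_id: {trait: is_high}}  (the persona's assigned traits)
    Returns {evaluator: {trait: fraction of that evaluator's stories predicted correctly}}.
    """
    result = {}
    for evaluator, by_story in predictions.items():
        correct = defaultdict(int)
        for story_id, guesses in by_story.items():
            for trait in TRAITS:
                correct[trait] += int(guesses[trait] == assigned[story_id][trait])
        result[evaluator] = {trait: correct[trait] / len(by_story) for trait in TRAITS}
    return result


# Toy example (not from the paper): one all-high persona and one all-low persona.
assigned = {"s1": {t: True for t in TRAITS}, "s2": {t: False for t in TRAITS}}
guesses_s2 = {t: False for t in TRAITS}
guesses_s2["Extraversion"] = True  # the evaluator misjudges Extraversion on s2
predictions = {"rater_a": {"s1": {t: True for t in TRAITS}, "s2": guesses_s2}}
acc = individual_accuracy(predictions, assigned)
print(acc["rater_a"])
```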

Implications and Future Directions

This research has implications for the development of personalized AI interfaces and for the broader understanding of how LLMs simulate personality. The findings suggest applications in creating more engaging and believable AI characters for the social sciences, entertainment, and customer service. Future research could explore multi-modal personality expression in LLMs, incorporate more comprehensive psychometric instruments, and examine cross-linguistic capabilities.

Conclusion

The paper demonstrates that LLMs such as GPT-3.5 and GPT-4 can express and mimic assigned human personality traits convincingly. It provides a methodological framework for evaluating this capability and offers insight into how LLMs might interact with people in a personality-conscious manner. As AI continues to evolve, such research will help shape the development of empathetic, context-aware machine agents.