OpenAGI: When LLM Meets Domain Experts (2304.04370v6)

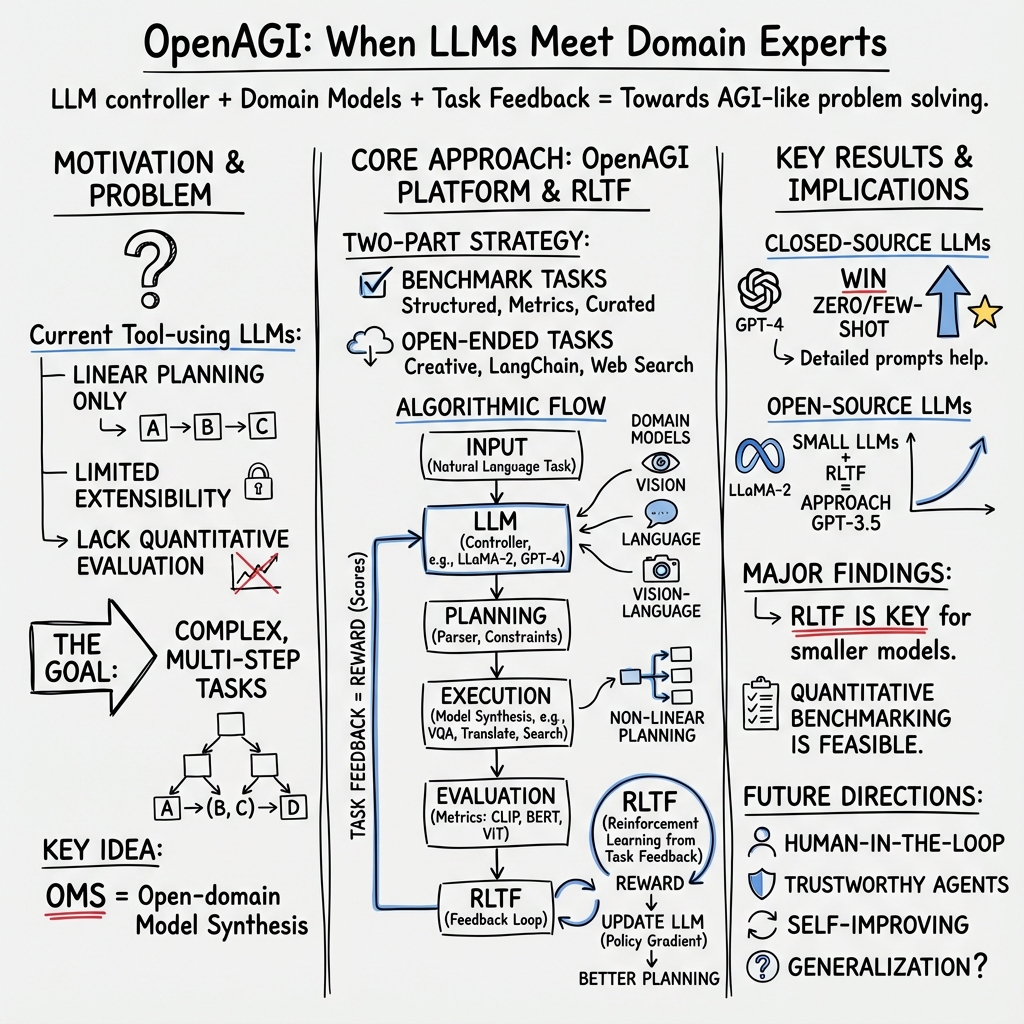

Abstract: Human Intelligence (HI) excels at combining basic skills to solve complex tasks. This capability is vital for AI and should be embedded in comprehensive AI Agents, enabling them to harness expert models for complex task-solving towards AGI. LLMs show promising learning and reasoning abilities, and can effectively use external models, tools, plugins, or APIs to tackle complex problems. In this work, we introduce OpenAGI, an open-source AGI research and development platform designed for solving multi-step, real-world tasks. Specifically, OpenAGI uses a dual strategy, integrating standard benchmark tasks for benchmarking and evaluation, and open-ended tasks including more expandable models, tools, plugins, or APIs for creative problem-solving. Tasks are presented as natural language queries to the LLM, which then selects and executes appropriate models. We also propose a Reinforcement Learning from Task Feedback (RLTF) mechanism that uses task results to improve the LLM's task-solving ability, which creates a self-improving AI feedback loop. While we acknowledge that AGI is a broad and multifaceted research challenge with no singularly defined solution path, the integration of LLMs with domain-specific expert models, inspired by mirroring the blend of general and specialized intelligence in humans, offers a promising approach towards AGI. We are open-sourcing the OpenAGI project's code, dataset, benchmarks, evaluation methods, and the UI demo to foster community involvement in AGI advancement: https://github.com/agiresearch/OpenAGI.

Explain it Like I'm 14

Overview

This paper introduces OpenAGI, a free and open platform that helps AI systems solve complex, real-world problems by combining a general “smart” LLM (like GPT) with many small expert tools (like image enhancers, translators, or calculators). The goal is to move toward AGI (AI that can handle many different tasks) by letting an LLM plan, use the right tools to get the job done, and learn from the results to get better over time.

Key Objectives

The authors set out to:

- Build a platform (OpenAGI) where an LLM acts like a team captain, choosing and coordinating the right expert tools to solve multi-step tasks.

- Provide two kinds of tasks:

  - Benchmark tasks with datasets and scores so researchers can fairly compare different AI systems.

  - Open-ended tasks that encourage creativity, like making art or researching online.

- Create a new training method called RLTF (Reinforcement Learning from Task Feedback) so the LLM can improve using simple task results (like scores), not just human-labeled data.

- Test different LLMs (both open-source and closed-source) to see how well they plan, select tools, and solve complex tasks.

Methods and Approach

Think of the LLM as a coach, and the expert models as specialist players. The coach reads a problem described in plain language, makes a playbook (a plan), picks the right players (tools), and runs the plays (executes the tools in order).

Here’s how OpenAGI works in everyday terms:

- Task input: A problem is written in natural language (for example: “Clean up this blurry, noisy photo and describe what’s in it”).

- Planning: The LLM designs a step-by-step plan. This can be linear (one step after another) or non-linear (some steps can happen in parallel or in a branching structure).

- Tool selection and execution: The LLM picks expert tools from a growing library—like image denoising, super-resolution, translation, question answering, or text-to-image generation—and runs them in the planned order.

- Evaluation: The platform measures how well the solution worked using standard scores:

  - BERT Score: Checks how similar the output text is to a “good” text.

  - CLIP Score: Rates how well an image matches a text description.

  - ViT Score: Measures how similar two images are.

- Learning from feedback (RLTF): This is like playing a video game and looking at your score after each level. The LLM uses the task results (scores) to tweak its future plans. Unlike RLHF (Reinforcement Learning from Human Feedback), RLTF doesn’t need people to label every answer—it learns from how well it did.
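
The plan-then-execute flow in the steps above can be sketched in a few lines. This is only an illustration under stated assumptions: the three "tools" are hypothetical string-manipulating stand-ins for real expert models, and the plan is hard-coded where OpenAGI would obtain it from the LLM.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for expert models. Real tools would wrap
# denoising, deblurring, or captioning models instead of editing strings.
def denoise(x: str) -> str:
    return x.replace("noisy ", "")

def deblur(x: str) -> str:
    return x.replace("blurry ", "")

def caption(x: str) -> str:
    return f"a caption describing: {x}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "denoise": denoise,
    "deblur": deblur,
    "caption": caption,
}

def run_plan(plan: List[str], task_input: str) -> str:
    """Execute tools in the planned order, feeding each output to the next."""
    data = task_input
    for name in plan:
        if name not in TOOLS:
            raise KeyError(f"unknown tool: {name}")
        data = TOOLS[name](data)
    return data

# Assumption: in OpenAGI this list would come from the LLM's plan.
plan = ["denoise", "deblur", "caption"]
result = run_plan(plan, "noisy blurry photo of a cat")
print(result)  # a caption describing: photo of a cat
```

The dictionary lookup is the only place where the controller touches the tool library, so adding a tool means adding one entry.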

They also:

- Built 185 multi-step tasks across text, images, and combined text+image inputs.

- Included a parser that helps turn the LLM’s written plan into a clear list of tools to run.

- Tested with different prompting styles (short vs detailed prompts) to see how they affect performance.
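
To make the parser idea concrete, here is a minimal sketch that pulls known tool names out of free-form plan text. It is an assumption-laden substitute, not the paper's parser (which, as noted later in this summary, relies on GPT-3.5): the tool names in `KNOWN_TOOLS` and the function `parse_plan` are hypothetical, and matching is plain case-insensitive string search.

```python
import re
from typing import List

# Hypothetical tool catalog, used only for illustration.
KNOWN_TOOLS = [
    "image denoising",
    "image deblurring",
    "image super-resolution",
    "image captioning",
    "machine translation",
]

def parse_plan(llm_text: str, known_tools: List[str] = KNOWN_TOOLS) -> List[str]:
    """Return known tool names in the order they first appear in the text."""
    text = llm_text.lower()
    hits = []
    for tool in known_tools:
        match = re.search(re.escape(tool.lower()), text)
        if match:
            hits.append((match.start(), tool))
    # Sort by position so the list follows the order of the written plan.
    return [tool for _, tool in sorted(hits)]

plan_text = (
    "Step 1: apply Image Deblurring. "
    "Step 2: run image super-resolution, then Image Captioning on the result."
)
parsed = parse_plan(plan_text)
print(parsed)
```

A matcher like this only records the first mention of each tool, so it cannot represent plans that reuse a tool; that limitation is one reason a learned parser may be preferable.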

Main Findings and Why They Matter

What they discovered:

- Closed-source LLMs (like GPT-4) generally perform best out of the box (especially with “zero-shot” or “few-shot” prompts).

- Open-source LLMs (like LLaMA-2) improve a lot when they are fine-tuned and especially when trained with RLTF. With RLTF, some smaller open models got close to GPT-3.5’s performance on these tasks.

- Detailed prompts can help strong models, but may confuse smaller open-source models during tool selection.

- The LLM can handle non-linear plans (not just simple step-by-step), which is important for real-world problems that need branching or parallel steps.

- Everything—code, datasets, tasks, benchmarks, and a demo—is open-sourced, making it easy for the community to extend and test new ideas.
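
A non-linear plan, as mentioned in the findings above, can be pictured as a dependency graph rather than a list. The sketch below is an illustration only: the four step names are hypothetical, and it uses Python's standard `graphlib.TopologicalSorter` to group steps into "waves" that could run in parallel. It does not reflect how OpenAGI represents plans internally.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical plan: each step maps to the set of steps it depends on.
plan: Dict[str, Set[str]] = {
    "denoise": set(),
    "caption": {"denoise"},
    "detect_objects": {"denoise"},
    "summarize": {"caption", "detect_objects"},
}

def execution_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group steps into waves; steps in one wave do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the plan has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves

waves = execution_waves(plan)
print(waves)  # [['denoise'], ['caption', 'detect_objects'], ['summarize']]
```

Here `caption` and `detect_objects` land in the same wave because both need only the denoised image, which is the kind of branching a purely linear plan cannot express.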

Why it matters:

- Combining a general LLM with many expert tools is a powerful, practical path toward more capable AI.

- RLTF shows a way to make AI self-improve using task scores, reducing the need for expensive human labeling.

- Standard benchmarks make it easier to compare systems and push progress forward.
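
The idea of learning from task scores can be illustrated with a toy version of REINFORCE, the algorithm this summary says the paper uses for RLTF. The sketch makes strong simplifying assumptions: instead of updating an LLM, it updates three logits over three fixed candidate plans, and the scores in `plan_scores` are made-up numbers, not results from the paper.

```python
import math
import random
from typing import List

def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce(rewards: List[float], steps: int = 5000,
              lr: float = 0.1, seed: int = 0) -> List[float]:
    """Toy REINFORCE: learn a softmax policy over a fixed set of plans."""
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)
    baseline = 0.0  # running average of rewards, used to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        advantage = rewards[action] - baseline
        for k in range(len(logits)):
            # Gradient of log pi(action) with respect to logit k.
            grad_log_pi = (1.0 if k == action else 0.0) - probs[k]
            logits[k] += lr * advantage * grad_log_pi
        baseline += 0.1 * (rewards[action] - baseline)
    return softmax(logits)

# Made-up task scores for three hypothetical candidate plans.
plan_scores = [0.2, 0.9, 0.5]
final_probs = reinforce(plan_scores)
print([round(p, 3) for p in final_probs])
```

After training, most of the probability mass should sit on the plan with the highest score, which is the "self-improvement from scores" loop in miniature.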

Implications and Potential Impact

OpenAGI points toward AI that can:

- Plan and use the right tools to solve complex, multi-step problems across different kinds of data (text, images, and more).

- Learn from its own results and get better over time without constant human supervision.

- Support creative tasks (like making art with a theme) and practical tasks (like cleaning up images and answering questions about them).

The authors also suggest future directions:

- Human-in-the-loop agents: When a tool is missing or unclear, the AI could ask a human expert as part of its plan.

- Trustworthy agents: Build safety and ethical checks into the planning process.

- Self-improving agents: Let the AI generate new tasks to practice, reflect on mistakes, and improve by itself.

In short, OpenAGI provides a flexible, community-friendly platform and a learning method (RLTF) that together help LLMs act more like smart, adaptable problem solvers—an important step on the path to AGI.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise, actionable list of what remains missing, uncertain, or unexplored in the paper that future researchers could address.

- Reward design in RLTF:

- It is unspecified how “task feedback” is computed across heterogeneous tasks (e.g., weighting and normalization of CLIP/BERTScore/ViT vs. task-specific metrics) and how multi-objective rewards are combined.

- Sensitivity of RLTF performance to reward shaping, normalization, and credit assignment across steps remains unstudied.

- Risk of reward hacking (optimizing proxy metrics while degrading true task utility) is unaddressed.

- RL algorithm choices and stability:

- Only REINFORCE is used; no comparisons to lower-variance or more sample-efficient on-policy methods (e.g., PPO, A2C) or to off-policy approaches (e.g., SAC).

- No analysis of RLTF variance, convergence behavior, sample efficiency, or training stability across seeds and task mixes.

- Lack of ablations isolating the contribution of constrained beam search, parser quality, and RLTF components.

- Generalization and transfer:

- OOD generalization is asserted but not systematically measured (e.g., to unseen tools, unseen compositions, or novel task structures).

- No analysis of transfer to tasks that differ in composition depth, branching factor, or modality from training tasks.

- No curriculum learning or difficulty-aware training to assess scaling with task complexity.

- Plan optimality and non-linear planning quality:

- No quantitative metric for plan optimality or minimality (e.g., unnecessary tools invoked, cost-aware planning, or execution latency).

- Non-linear planning is demonstrated qualitatively but lacks systematic evaluation against linear baselines across tasks that truly require non-linearity.

- Absence of diagnostics on failure modes in planning (e.g., ordering mistakes, missing prerequisites, redundant steps).

- Evaluation metrics and coverage:

- Heavy reliance on CLIP/BERTScore/ViT limits validity for many tasks where task-specific metrics would be more appropriate (e.g., mAP for detection, accuracy for VQA, ROUGE/CIDEr for summarization and captioning, task success for QA/NLU).

- No human evaluation for open-ended or creative outputs, and no inter-annotator agreement for qualitative assessments.

- No statistical significance testing or confidence intervals to validate reported improvements.

- Benchmark design and representativeness:

- The 185 tasks are largely constructed through synthetic augmentations; their ecological validity (vs. real-world degradations and compositions) is untested.

- Task coverage is limited (e.g., no speech, time-series, tabular reasoning, code execution, robotics/embodied tasks, multi-turn interactive tasks).

- The necessity of non-linear strategies for the 68 “non-linear” tasks is not validated with ground-truth dependency graphs.

- Dataset splits and sampling:

- Training and test splits use 10% each without detailed stratification by modality, difficulty, or composition complexity; no cross-validation is provided.

- Potential leakage and alignment issues (datasets chosen to match model training distributions) may inflate performance; not systematically controlled.

- Tool/model integration at scale:

- Extensibility is claimed but not stress-tested with large tool catalogs (scaling behavior, search complexity, tool discovery, and selection accuracy).

- No evaluation of failure handling (tool/API downtime, inconsistent outputs, version drift), fallback strategies, or re-planning mechanisms.

- No formal type system or schema checking for tool I/O compatibility, pre/post-conditions, or data validation beyond prompt-based constraints.

- Execution engine, reliability, and parsing:

- The “planning solution parser” relies on GPT-3.5, introducing a dependency and potential error propagation; parser robustness and error rates are unmeasured.

- Lack of instrumentation and observability (step-level logs, tracing, dependency graphs) for debugging plan execution failures.

- Comparisons to alternative planners/agents:

- No quantitative comparisons to structured planning baselines (e.g., PDDL planners, program synthesis) or to agent frameworks (AutoGPT, HuggingGPT) under identical conditions.

- No analysis of hybrid approaches that combine symbolic planning or search with LLMs for tool orchestration.

- Fairness of model comparisons:

- Open-source LLMs are fine-tuned/RLTF-tuned while closed-source models are not; fairness and comparability of results are unclear.

- Using a closed-source LLM (GPT-3.5) as a parser may advantage closed-source pipelines; impact on results is unquantified.

- Open-ended task evaluation:

- Creative multi-output generation (e.g., art, poem, music) is shown only qualitatively; no framework for evaluating coherence, aesthetics, or cultural fidelity.

- RLTF is not demonstrated on open-ended tasks, leaving reward definition and optimization for such tasks unresolved.

- Cost, latency, and environmental impact:

- No measurement of computational cost, wall-clock latency per task/plan, energy usage, or carbon footprint—especially important for RLTF that requires repeated tool calls.

- No cost-aware planning (e.g., penalizing expensive or slow tools), budget constraints, or resource allocation policies.

- Data, labels, and reproducibility:

- “Manually curated, feasible solutions” used for few-shot/fine-tuning are not fully specified (annotation protocol, inter-annotator agreement, release plans).

- Reproducibility risks due to closed-source models, non-deterministic APIs, and tool versioning are not mitigated (version pinning, seeds, exact execution environment).

- Safety, security, and ethics:

- Use of external tools/APIs lacks guardrails for data privacy, prompt injection, output sanitization, and harmful content mitigation.

- No safety constraints or ethical compliance checks are applied during planning/execution; future work mentions “trustworthy agents”, but no safeguards exist today.

- Robustness to non-stationarity:

- Tools/models evolve over time; robustness to version changes, deprecations, and interface drift is untested.

- No continuous evaluation or monitoring to detect and adapt to environment changes.

- Configuration and parameter selection:

- Planning only selects tool sequences; it does not optimize tool hyperparameters or control settings that critically affect performance.

- No exploration of conditional branching, loops, or dynamic re-parameterization based on intermediate results.

- Multi-agent and human-in-the-loop aspects:

- Only a single-controller LLM is studied; potential benefits of specialized sub-controllers or multi-agent collaboration are unexplored.

- Human-in-the-loop interventions are listed as future work; no interface, protocol, or efficacy evaluation exists yet.

- Legal and licensing considerations:

- Use of third-party datasets/tools/APIs may have licensing/usage constraints; compliance and redistribution policies are not clarified.

- Coverage of languages and domains:

- Multilingual capabilities are hinted at (e.g., English/German) but not systematically evaluated across languages, scripts, or domain-specific jargon.

- Domain transfer (e.g., biomedical, finance, law) via appropriate expert tools is not tested.

- Failure mode taxonomy:

- No systematic error analysis categorizing failures (e.g., tool selection vs. plan structure vs. data handling), which would guide targeted improvements.

- Self-improvement loop design:

- The claimed “self-improving AI feedback loop” lacks formalization of continual learning protocols, catastrophic forgetting mitigation, or safety during self-modification.

- Benchmark governance and evolution:

- Procedures for community contributions, task/tool validation, difficulty calibration, and benchmark versioning are not defined.

- Theoretical framing:

- No formalism for OpenAGI planning (e.g., as hierarchical MDPs or task graphs), which limits theoretical analysis of guarantees or bounds.

Each item above can be directly operationalized into experiments, ablations, infrastructure features, or evaluation protocols to strengthen the platform’s rigor and real-world applicability.
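
As one example of turning a gap into an experiment, the missing confidence intervals could be addressed with a paired bootstrap over per-task scores. The following is a minimal sketch under stated assumptions: `paired_bootstrap_ci` is a hypothetical helper, and the two score lists are invented for illustration, not taken from the paper.

```python
import random
from typing import List, Tuple

def paired_bootstrap_ci(scores_a: List[float], scores_b: List[float],
                        n_resamples: int = 2000, alpha: float = 0.05,
                        seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI for the mean per-task difference (b - a)."""
    if len(scores_a) != len(scores_b):
        raise ValueError("score lists must be paired per task")
    rng = random.Random(seed)
    diffs = [b - a for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    means = []
    for _ in range(n_resamples):
        # Resample tasks with replacement and record the mean difference.
        sample = [diffs[rng.randrange(n)] for _ in range(n)]
        means.append(sum(sample) / n)
    means.sort()
    low = means[int((alpha / 2) * n_resamples)]
    high = means[int((1 - alpha / 2) * n_resamples) - 1]
    return low, high

# Invented per-task scores for a baseline and a tuned model.
before = [0.50, 0.55, 0.60, 0.52, 0.58, 0.61, 0.49, 0.57]
after = [0.55, 0.63, 0.63, 0.58, 0.65, 0.65, 0.58, 0.62]
low, high = paired_bootstrap_ci(before, after)
print(f"95% CI for mean improvement: [{low:.3f}, {high:.3f}]")
```

If the interval excludes zero, the improvement is unlikely to be an artifact of which tasks happened to be sampled; with only a handful of test tasks the interval will be wide, which is itself useful to report.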

Glossary

- AGI: The goal of building systems with general, human-like problem-solving abilities across tasks. "towards AGI."

- Augmented LLMs (ALMs): LLMs enhanced with external tools or resources to improve reasoning and task execution. "the emerging field of Augmented LLMs (ALMs) focuses on addressing the limitations of conventional LLMs ... by equipping them with enhanced reasoning capabilities and the ability to employ external resources"

- AutoGPT: An autonomous agent that decomposes objectives into tasks and iteratively executes them. "Notably, AutoGPT ... is an automated agent, which is designed to set multiple objectives, break them down into relevant tasks, and iterate on these tasks until the objectives are achieved."

- BERT Score: A text evaluation metric that measures similarity using BERT embeddings. "the BERT Score is utilized to assess tasks with text outputs"

- CLIP Score: A metric assessing alignment between text and images using CLIP embeddings. "we employ the CLIP Score only for Text-to-Image Generation-based tasks"

- Constrained beam search: A decoding method that enforces constraints to produce feasible outputs. "and use constrained beam search ... to reduce the likelihood of producing infeasible solutions"

- Diffusers: A Hugging Face library for diffusion-based generative models. "Hugging Face's transformers and diffusers"

- Few-shot Learning: Guiding a model with a small set of input-output examples for a target task. "Few-shot Learning (Few) presents a set of high-quality demonstrations, each consisting of both input and desired output, on the target task."

- Human-in-the-loop agents: Systems that incorporate human expertise as part of the decision or execution process. "Human-in-the-loop agents, where LLM may prompt human experts for answers as one step of the task-solving plan when a suitable model is unavailable"

- Hugging Face: An ecosystem and hub for models, datasets, and libraries like Transformers and Diffusers. "Hugging Face's transformers and diffusers"

- Instruction fine-tuning: Fine-tuning models on instruction-following datasets to improve adherence to prompts. "fine-tuned using a technique called instruction fine-tuning."

- LangChain: A framework that connects LLMs to external tools, APIs, and data sources. "we further include LangChain to provide additional expert models, such as Google Search, Wikipedia, Wolfram Alpha and so on."

- LLMs: Scaled transformer-based models trained on vast corpora with strong language and reasoning capabilities. "Recent advances in LLMs have showcased exceptional learning and reasoning capabilities"

- Low-Rank Adaptation (LoRA): A parameter-efficient method for fine-tuning large models by learning low-rank updates. "We employ Low-Rank Adaptation (LoRA) ... to optimize all open-source LLMs across both the Fine-tuning and RLTF learning schema."

- Multi-modality data: Data combining multiple modalities like text, images, or audio. "complex tasks involving multi-modality data, such as image and text processing"

- Nonlinear Task Planning: Planning where steps can occur concurrently or in complex orders rather than a strict sequence. "Nonlinear Task Planning: The majority of current research is limited to solving tasks with linear task planning solutions"

- OpenAGI: An open-source platform for AGI research enabling LLM-driven planning and model synthesis. "We introduce OpenAGI, an open-source AGI research and development platform designed for solving multi-step, real-world tasks."

- Open-domain Model Synthesis (OMS): Composing domain expert models across varied tasks based on natural language instructions. "Open-domain Model Synthesis (OMS) holds the potential to drive the development of artificial general intelligence (AGI)"

- Out-of-Distribution (OOD) generalization: A model’s ability to perform well on data that differ from its training distribution. "address challenges on out-of-distribution (OOD) generalization"

- Proximal Policy Optimization (PPO): A reinforcement learning algorithm that optimizes policies with clipped objectives for stability. "notably Proximal Policy Optimization (PPO) ... to tailor LLMs to this feedback via a reward model."

- Prompt engineering: Crafting and structuring prompts to guide LLM behavior and outputs. "through strategic prompt engineering."

- REINFORCE: A policy-gradient RL algorithm using sampled returns to update parameters. "We choose to use REINFORCE ... in this work"

- Reinforcement Learning from Human Feedback (RLHF): Training models with human-labeled preferences via RL to align outputs with human values. "Reinforcement Learning from Human Feedback (RLHF) has been introduced"

- Reinforcement Learning from Task Feedback (RLTF): The paper’s method that uses task outcomes as feedback to improve LLM planning via RL. "we introduce a mechanism referred to as Reinforcement Learning from Task Feedback (RLTF)."

- Reward model: A learned model that scores outputs/actions to guide reinforcement learning updates. "to tailor LLMs to this feedback via a reward model."

- Trustworthy agents: Agents designed to ensure safety and ethical behavior during task execution. "Trustworthy agents, which guarantee the safety and the ethical standard of agents during task-solving"

- ViT Score: An image similarity metric computed using Vision Transformer embeddings. "and the ViT score is applied to measure image similarity for the remaining tasks with image outputs."

- Visual Foundation Models (VFMs): Broadly trained vision models generalizing across tasks (e.g., Stable Diffusion, ControlNet). "Visual Foundation Models (VFMs) such as Transformers, ControlNet, and Stable Diffusion"

- Visual Question Answering (VQA): A multimodal task where a system answers questions about visual content. "Visual Question Answering (VQA) involves answering questions based on an image"

- Zero-shot Learning: Prompting a model without task-specific examples to perform a new task. "Zero-shot Learning (Zero) directly inputs the prompt to the LLM."
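
To illustrate how an embedding-based metric such as BERT Score works, the sketch below implements the greedy-matching precision, recall, and F1 from the BERTScore formulation on toy vectors. It is a simplification under stated assumptions: real BERTScore uses contextual BERT token embeddings and optional IDF weighting, whereas `greedy_match_f1` here takes hand-written 2-D vectors, and this summary does not specify the exact configuration OpenAGI uses.

```python
import math
from typing import List

Vector = List[float]

def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def greedy_match_f1(candidate: List[Vector], reference: List[Vector]) -> float:
    """F1 from greedy token matching on cosine similarity."""
    # Precision: each candidate token is matched to its best reference token.
    precision = sum(max(cosine(c, r) for r in reference)
                    for c in candidate) / len(candidate)
    # Recall: each reference token is matched to its best candidate token.
    recall = sum(max(cosine(r, c) for c in candidate)
                 for r in reference) / len(reference)
    if precision + recall == 0.0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Toy "token embeddings": the candidate covers one of two reference tokens.
f1 = greedy_match_f1([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]])
print(round(f1, 4))  # 0.6667
```

In the example, precision is 1.0 (the single candidate token has an exact match) and recall is 0.5 (one of two reference tokens is unmatched), giving an F1 of 2/3.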

Practical Applications

Overview

Below are actionable, real-world applications that follow directly from OpenAGI’s core contributions: an open-source platform that lets an LLM act as a controller to select, synthesize, and execute domain expert models/tools/APIs for complex multi-step tasks, including non-linear planning; benchmarked, multimodal task suites and evaluation metrics; and the RLTF (Reinforcement Learning from Task Feedback) mechanism for self-improving planning.

Each application is categorized as Immediate (deployable now) or Long-Term (requires further research, scaling, or development), includes relevant sectors, potential tools/products/workflows, and notes assumptions or dependencies affecting feasibility.

Immediate Applications

The following use cases can be built today using OpenAGI’s codebase, model integrations (Hugging Face, Diffusers, LangChain), evaluation metrics (CLIP/BERT/ViT), and RLTF for iterative improvement.

- Multimodal customer support triage (Software, E-commerce)

- Workflow: Automatically route complex tickets containing text and images through sentiment analysis, translation, object detection, summarization, and escalation.

- Tools: Hugging Face sentiment/translation/QA models, object detection; OpenAGI’s LLM controller; LangChain search when needed.

- Assumptions/Dependencies: Data privacy/compliance; model accuracy on domain content; GPU capacity for image tasks; stable tool APIs.

- Media asset restoration and enhancement (Media, Retail)

- Workflow: Denoise, deblur, colorize, and super-resolve product or marketing images; auto-generate captions for cataloging.

- Tools: Restormer (denoise/deblur), Swin2SR (super-resolution), colorization; image captioning; RLTF to optimize pipeline quality (via ViT-based similarity).

- Assumptions/Dependencies: Compute availability; objective quality metrics for RLTF; consistent image domains.

- Marketing and creative content generation (Marketing, Creative Studios)

- Workflow: Open-ended pipelines to produce themed images, poems, and music across languages, coordinated via non-linear planning.

- Tools: Diffusers (e.g., Stable Diffusion for text-to-image), LLMs for text/music prompts; LangChain for research.

- Assumptions/Dependencies: Brand-safe prompt engineering; IP/licensing and content provenance; human review.

- Multilingual document operations (Finance, Legal, Government)

- Workflow: Translate, summarize, and perform QA extraction on contracts, filings, and reports; assemble multilingual summaries.

- Tools: BART/T5 for summarization/translation; QA models; BERTScore for quality control; OpenAGI task plans.

- Assumptions/Dependencies: Domain adaptation; confidentiality and audit trails; language coverage and accuracy.

- Accessibility aids for images and documents (Public sector, Education)

- Workflow: Generate image captions, answer VQA queries about photos/graphics, and translate materials for accessibility.

- Tools: GIT-based VQA; captioning models; translation; OpenAGI non-linear planning to sequence tasks.

- Assumptions/Dependencies: Robustness to varied user content; on-device vs. cloud compute; accessibility standards.

- E-commerce listing automation (E-commerce)

- Workflow: Detect objects in product photos, enhance images, generate multi-language titles/descriptions, and classify attributes.

- Tools: Object detection, captioning, translation, classification; RLTF to refine sequencing based on conversion metrics.

- Assumptions/Dependencies: Domain-specific taxonomy alignment; measurement of downstream business impact; scaling for catalog volume.

- Research benchmarking for AI agents (Academia)

- Workflow: Use OpenAGI’s benchmark tasks, datasets, and metrics to evaluate planning and tool-use across LLMs; test non-linear plans and multimodal inputs.

- Tools: OpenAGI repository, datasets, CLIP/BERT/ViT metrics; LoRA fine-tuning; RLTF with REINFORCE; planning solution parser.

- Assumptions/Dependencies: Compute and reproducibility; fair comparisons across models; standardized prompts.

- MLOps agent improvement loop (Software)

- Workflow: Integrate RLTF into CI/CD: run task suites, collect metrics as rewards, auto-update LLM controller policies; log/audit plans and outcomes.

- Tools: RLTF implementation (REINFORCE), constrained beam search for feasible plan generation; experiment tracking.

- Assumptions/Dependencies: Well-defined reward functions tied to business KPIs; rollback mechanisms; data versioning.

- Education content support (Education)

- Workflow: Summarize readings, generate illustrative images, and assist with VQA-based homework help across languages.

- Tools: Summarization (BART/T5), text-to-image, VQA; LangChain search for references.

- Assumptions/Dependencies: Age-appropriate guardrails; academic integrity; content accuracy.

- Everyday photo fixer and explainer (Daily life)

- Workflow: A consumer app to denoise/deblur/super-resolve photos and auto-caption or explain image content (e.g., scenes or receipts).

- Tools: Restormer, Swin2SR, captioning; simple non-linear plans; lightweight UI.

- Assumptions/Dependencies: Cloud vs. device processing; privacy; user-friendly interfaces.

- Compliance and reporting assistant (Government, Finance)

- Workflow: Aggregate public information via Google Search/Wikipedia, summarize findings, and produce multilingual reports with citations.

- Tools: LangChain search connectors; LLM summarization; translation; OpenAGI planning; BERTScore for quality checks.

- Assumptions/Dependencies: Source reliability; fact-checking; governance/records management.
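
Several workflows above depend on generating only feasible tool sequences, for which the list mentions constrained beam search. The sketch below shows the general idea at the tool level, under loudly stated assumptions: the catalog `TOOL_TYPES`, the transition scores in `LOGP`, and the type-matching constraint are all hypothetical, and the paper's constrained beam search operates on LLM decoding rather than on a lookup table like this.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical tool catalog: name -> (input type, output type).
TOOL_TYPES: Dict[str, Tuple[str, str]] = {
    "denoise": ("image", "image"),
    "super_resolve": ("image", "image"),
    "caption": ("image", "text"),
    "translate": ("text", "text"),
}

# Hypothetical log p(next tool | previous tool), standing in for LLM scores.
LOGP: Dict[Tuple[str, str], float] = {
    ("<start>", "denoise"): -0.2,
    ("<start>", "super_resolve"): -1.8,
    ("<start>", "caption"): -2.0,
    ("denoise", "super_resolve"): -0.4,
    ("denoise", "caption"): -1.5,
    ("super_resolve", "caption"): -0.3,
    ("caption", "translate"): -0.6,
}
DEFAULT_LOGP = -3.0  # score for transitions not listed above

def constrained_beam_search(start_type: str, goal_type: str,
                            max_len: int = 3,
                            beam_width: int = 2) -> Optional[List[str]]:
    # Each beam entry: (plan, last tool, current data type, total score).
    beams = [([], "<start>", start_type, 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for plan, last, cur_type, score in beams:
            for name, (in_type, out_type) in TOOL_TYPES.items():
                # Constraints: input type must match, and no tool is repeated.
                if in_type != cur_type or name in plan:
                    continue
                step = LOGP.get((last, name), DEFAULT_LOGP)
                candidates.append((plan + [name], name, out_type, score + step))
        candidates.sort(key=lambda c: c[3], reverse=True)
        beams = candidates[:beam_width]
        finished.extend(b for b in beams if b[2] == goal_type)
    if not finished:
        return None
    return max(finished, key=lambda b: b[3])[0]

best_plan = constrained_beam_search("image", "text")
print(best_plan)  # ['denoise', 'super_resolve', 'caption']
```

Because infeasible extensions are filtered before scoring, the beam never spends capacity on plans that could not execute, such as translating an image.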

Long-Term Applications

The following applications are plausible extensions that require improved reliability, safety, domain adaptation, governance, and/or integration with external systems.

- General-purpose enterprise agent orchestrator (Cross-sector platform)

- Product: A managed “Agent Orchestrator” that uses LLMs to plan, select, and execute expert tools/APIs for complex, non-linear workflows across departments.

- Dependencies: Robust planning under uncertainty; traceability/audit; cost management; standardized tool schemas and observability.

- Clinical imaging and reporting assistant (Healthcare)

- Workflow: Enhance medical images (denoise/deblur/super-resolution), extract features, and draft structured radiology reports in multiple languages.

- Dependencies: Regulatory approval (e.g., FDA/CE), domain-specific model training and validation, clinical-grade metrics, bias/safety monitoring, secure data pipelines.

- Robotics perception and planning loop (Robotics, Manufacturing)

- Workflow: LLM-controlled orchestration of perception models (object detection, VQA on camera feeds) and downstream task planning, integrated with motion controllers.

- Dependencies: Real-time constraints; safety certification; deterministic behavior; hardware integration; robust non-linear planning under physical risk.

- Scientific discovery assistant (Academia, R&D)

- Workflow: Integrate literature retrieval (LangChain), symbolic computation (Wolfram Alpha), simulation tools, and experiment planning; use RLTF for iterative refinement.

- Dependencies: Trustworthy reasoning; human-in-the-loop oversight; domain-specific evaluation metrics; provenance tracking.

- Self-improving agents via automated task generation (Software/AI)

- Workflow: Agents that generate new tasks, evaluate themselves using RLTF, and improve planning/skills autonomously (self-reflection, self-prompting).

- Dependencies: Safe reward shaping; preventing reward hacking/model collapse; scalable training pipelines; ethical constraints.

- Policy drafting and multilingual citizen outreach (Government)

- Workflow: Agents synthesize evidence, draft policy briefs, generate multilingual outreach materials, and track public feedback via multimodal inputs.

- Dependencies: Transparency, bias mitigation, factual accuracy, public records compliance, stakeholder review loops.

- Personalized curriculum planning and tutoring (Education)

- Workflow: Non-linear agent planning to adaptively select tools and content per learner profile, including multimodal tasks and assessments.

- Dependencies: Data privacy and consent; validated pedagogical outcomes; alignment with standards; long-term efficacy studies.

- Financial risk analysis and reporting pipeline (Finance)

- Workflow: Combine market data, filings, news (search), multimodal chart/image analysis, summarization, and multilingual reports with explainability.

- Dependencies: Licensed data access; rigorous auditability; model risk management; governance for tool and plan selection.

- Energy/utility monitoring and maintenance (Energy)

- Workflow: Analyze sensor images/videos and maintenance logs to produce alerts, summaries, and work orders; optimize multi-step diagnostics via RLTF.

- Dependencies: Domain-specific perception models, real-time processing, integration with SCADA/CMMS, reliability in safety-critical contexts.

- Expert model marketplace and economy (Software platform)

- Product: A marketplace where domain experts publish models/tools with standardized interfaces; LLM controllers (via OpenAGI-like pipelines) select and pay per use.

- Dependencies: Interoperability standards, security/sandboxing, billing and governance, quality certification/evaluation frameworks.

- Agent safety, ethics, and governance frameworks (Policy/AI governance)

- Workflow: Red-teaming, guardrails, and trust guarantees for LLM-planned tool use; standardized evaluation beyond CLIP/BERT/ViT for agent behavior and outcomes.

- Dependencies: Cross-industry standards, third-party audits, incident response processes, ongoing monitoring and alignment techniques (e.g., RLTF plus safety constraints).
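
Several of the long-term items above list standardized tool schemas or interfaces as a dependency. A minimal sketch of what such a schema check might look like follows; `ToolSpec`, `REGISTRY`, and `validate_plan` are hypothetical names introduced for illustration and are not part of OpenAGI.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical declaration of a tool's input and output data types."""
    name: str
    input_type: str
    output_type: str

REGISTRY: Dict[str, ToolSpec] = {
    spec.name: spec
    for spec in [
        ToolSpec("denoise", "image", "image"),
        ToolSpec("caption", "image", "text"),
        ToolSpec("translate", "text", "text"),
    ]
}

def validate_plan(plan: List[str], input_type: str,
                  registry: Dict[str, ToolSpec] = REGISTRY) -> List[str]:
    """Return a list of human-readable errors; an empty list means valid."""
    errors = []
    current = input_type
    for step, name in enumerate(plan, start=1):
        spec = registry.get(name)
        if spec is None:
            errors.append(f"step {step}: unknown tool '{name}'")
            continue
        if spec.input_type != current:
            errors.append(
                f"step {step}: '{name}' expects {spec.input_type}, got {current}"
            )
        current = spec.output_type
    return errors

ok_errors = validate_plan(["denoise", "caption", "translate"], "image")
bad_errors = validate_plan(["translate", "caption"], "image")
print(ok_errors)
print(bad_errors)
```

Checking a plan before execution lets an orchestrator reject or repair it without spending compute on tool calls that would fail.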
