Training-Free Layout Control with Cross-Attention Guidance (2304.03373v2)

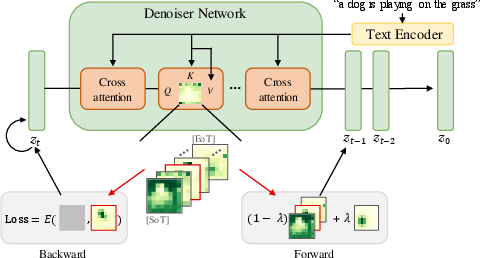

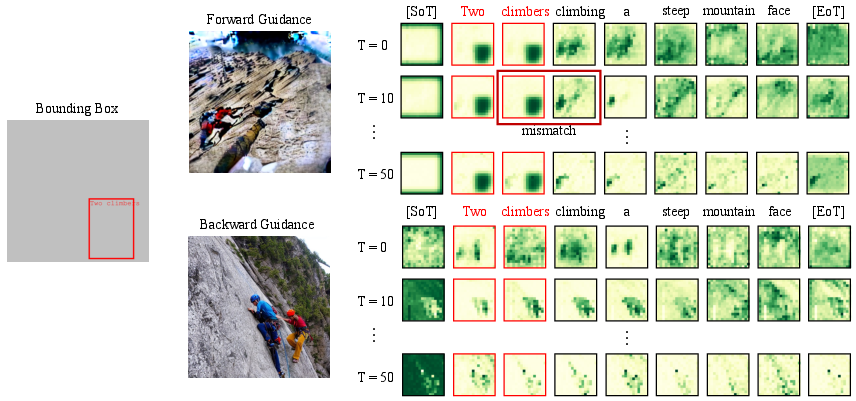

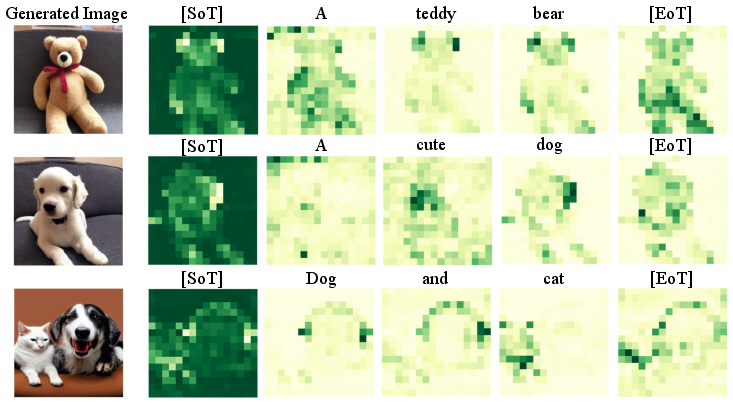

Abstract: Recent diffusion-based generators can produce high-quality images from textual prompts. However, they often disregard textual instructions that specify the spatial layout of the composition. We propose a simple approach that achieves robust layout control without the need for training or fine-tuning of the image generator. Our technique manipulates the cross-attention layers that the model uses to interface textual and visual information and steers the generation in the desired direction given, e.g., a user-specified layout. To determine how to best guide attention, we study the role of attention maps and explore two alternative strategies, forward and backward guidance. We thoroughly evaluate our approach on three benchmarks and provide several qualitative examples and a comparative analysis of the two strategies that demonstrate the superiority of backward guidance compared to forward guidance, as well as prior work. We further demonstrate the versatility of layout guidance by extending it to applications such as editing the layout and context of real images.
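As a rough illustration of what "guiding attention" toward a user-specified layout could look like, the sketch below scores how much of one token's cross-attention map falls inside a target bounding box. The abstract does not give a formula, so the function name `box_energy`, the squared-complement form of the score, and the box convention `(top, left, bottom, right)` are all assumptions made for this example rather than the authors' stated definition.

```python
def box_energy(attn, box):
    """Hypothetical layout score for one text token (an assumption, not the paper's stated formula).

    attn: 2D list of non-negative attention weights, shape (H, W).
    box:  (top, left, bottom, right) with half-open bounds, in attention-map cells.
    Returns a value in [0, 1]; 0 means all attention mass lies inside the box.
    """
    top, left, bottom, right = box
    total = sum(sum(row) for row in attn)
    if total <= 0:
        # No attention mass at all: treat as maximally misaligned.
        return 1.0
    inside = sum(sum(row[left:right]) for row in attn[top:bottom])
    return (1.0 - inside / total) ** 2


# Toy 4x4 map with half of the mass in the top-left 2x2 region.
attn = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
print(box_energy(attn, (0, 0, 2, 2)))  # half the mass is outside the box
print(box_energy(attn, (0, 0, 4, 4)))  # the box covers the whole map
```

Under this reading, a backward-style strategy would presumably differentiate such a score with respect to the diffusion latent and take a step that lowers it, while a forward-style strategy would edit the attention map directly; the toy above only evaluates the score and does not touch a real model.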
