- The paper introduces SCB-Dataset, a benchmark for student and teacher classroom behavior recognition that covers both object detection and image classification.

- It employs detailed annotation protocols and tailored sampling strategies to address class imbalance and capture diverse classroom scenarios.

- YOLOv7 reaches mAP@0.5 of up to 94% on teacher behaviors, and a LoRA fine-tuned Qwen2.5-VL-7B-instruct attains F1 scores above 80% on most classification classes, underscoring the dataset's potential to drive AI-supported educational analytics.

SCB-Dataset: Benchmarking Student and Teacher Classroom Behavior Recognition

Motivation and Context

Automated detection and analysis of student and teacher classroom behaviors is critical for advancing AI-supported educational analytics, pedagogical research, and adaptive learning environments. Prior efforts in this domain have been constrained by a shortage of large-scale, high-fidelity, publicly available datasets that cover real-world classroom complexity, a rich set of action types, and heterogeneous learning environments. "SCB-Dataset: A Dataset for Detecting Student and Teacher Classroom Behavior" (2304.02488) addresses this gap by introducing a comprehensive multi-task dataset that spans both object detection and image-level classification. It captures nuanced student and teacher activities in authentic classroom scenarios across diverse educational stages, ethnicities, and school subjects.

SCB-Dataset Construction

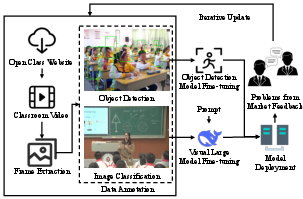

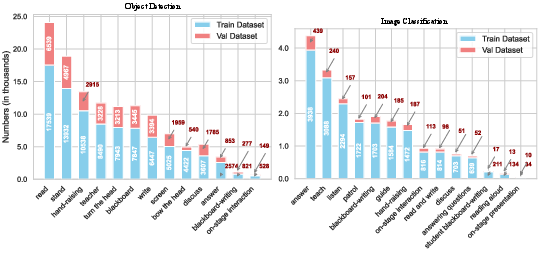

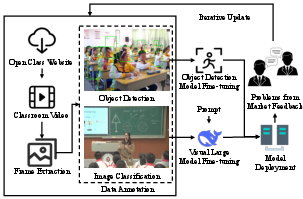

The dataset was generated by sourcing classroom video material from multiple Chinese educational platforms, including those targeting ethnic minority groups. Frames were extracted and annotated both for object detection and image classification, following detailed labeling standards for behavior types. Sampling strategies were designed to address class imbalance, e.g., oversampling rare behaviors ("discuss," "student blackboard-writing") and downsampling common ones ("read," "write").
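The rebalancing idea described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' pipeline: the function name `rebalance`, the `target_per_class` parameter, and the choice to oversample by duplication are all assumptions, since the source does not say how rare behaviors were oversampled (it may well have been done by extracting more frames rather than repeating them).

```python
import random
from collections import defaultdict

def rebalance(samples, target_per_class, seed=0):
    """Return a list with exactly target_per_class samples per label.

    samples: list of (image_id, label) pairs.
    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for image_id, label in samples:
        by_label[label].append((image_id, label))

    balanced = []
    for label, items in sorted(by_label.items()):
        if len(items) >= target_per_class:
            # Common class (e.g. "read", "write"): downsample without replacement.
            balanced.extend(rng.sample(items, target_per_class))
        else:
            # Rare class (e.g. "discuss"): keep every sample, then
            # top up by drawing duplicates with replacement.
            extra = rng.choices(items, k=target_per_class - len(items))
            balanced.extend(items + extra)
    rng.shuffle(balanced)
    return balanced

# Toy input: 100 frames of a common class, 5 of a rare one.
samples = [(f"read_{i}", "read") for i in range(100)]
samples += [(f"discuss_{i}", "discuss") for i in range(5)]
balanced = rebalance(samples, target_per_class=20)
```

Duplicating rare samples is the simplest option but can encourage overfitting to those few images, which is one reason a real pipeline might prefer collecting additional frames or applying augmentation instead.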

Figure 1: The production process of SCB-Dataset illustrates the end-to-end pipeline from raw video acquisition to iterative feedback-driven annotation refinement.

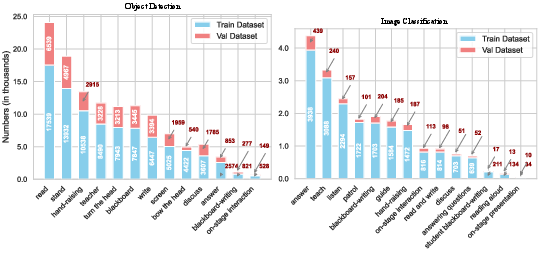

The object detection subset contains 13,330 images with 122,977 bounding box labels across 12 behavior classes (plus supporting categories such as "screen" and "blackboard"). The image classification subset provides 21,019 images labeled with one of 14 group- or individual-level behaviors. The annotation protocol emphasizes dominant behavior selection per image, supports future extensibility, and leverages custom interfaces for annotation efficiency and quality control.
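As a quick sanity check on scene density, the figures quoted above imply roughly nine labeled boxes per detection image. The short sketch below just does that arithmetic; the helper name `boxes_per_image` is hypothetical, and the count of 122,977 labels is taken as given from the text.

```python
def boxes_per_image(num_boxes, num_images):
    """Average number of bounding-box labels per image."""
    return num_boxes / num_images

# Figures quoted in the text for the object detection subset.
density = boxes_per_image(num_boxes=122_977, num_images=13_330)
print(f"{density:.2f}")  # prints 9.23
```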

Behavior Taxonomy and Sample Diversity

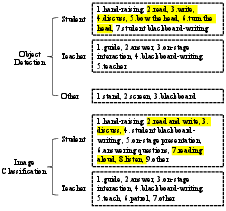

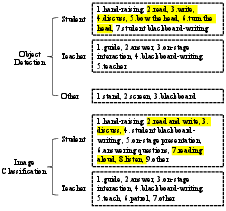

SCB-Dataset encompasses behaviors relevant for both fine-grained and holistic classroom analysis. Student behaviors include "hand-raising," "read and write," "discuss," "bow the head," and composite activities, while teacher behaviors range from "teach" and "patrol" to "on-stage interaction." Some classes are uniquely present in either object detection or classification, yielding a union of 19 distinct behavior types.

Figure 2: Examples of behavior classes in SCB-Dataset, covering typical and edge-case classroom activities for both students and teachers.

Figure 3: SCB-Dataset Class Count Statistics highlighting the persistent challenge of data imbalance, particularly for rare behaviors.

Figure 4: The behavior classification of SCB-Dataset details the mapping between annotated actions and their relevance to educational process analytics.

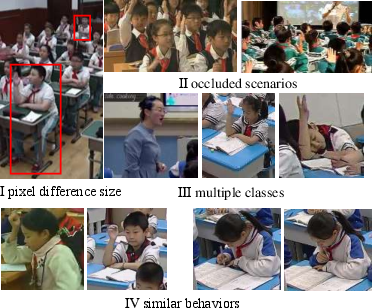

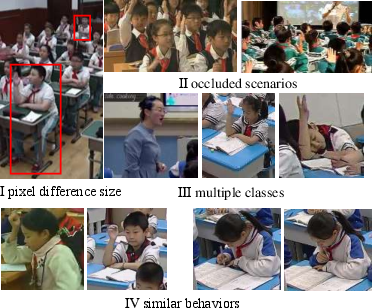

Recognizing classroom diversity, the dataset is purposefully constructed to include a spectrum of shooting angles, classroom layouts, age groups from kindergarten to university, and ethnic backgrounds. This heterogeneity significantly elevates the dataset’s realism and applicability for robust deployment scenarios.

Figure 5: Challenges in the SCB-Dataset include pixel-level differences, high-density occlusion, behavioral ambiguity, and co-occurrence of multiple classes.

Figure 6: The diversity of the SCB-Dataset includes varying shooting angles, disciplinary settings, developmental stages, and ethnic contexts.

Annotation Infrastructure and Quality Control

The authors extend the VIA (VGG Image Annotator) labeling tool through several progressively enhanced versions. These add in-box class display, rapid navigation between annotation boxes, dense-scene management, and automatic frame-to-frame annotation transfer, all aimed at reducing tedium and error. Annotation review is complemented by post-hoc validation tools that check completeness, correct categorization, and bounding-box fidelity.
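To make the two ideas in this section concrete, the sketch below shows one plausible shape for frame-to-frame transfer and post-hoc validation. It is an assumption-laden illustration, not the authors' tooling: the `Box` type, the `propagate` and `validate` functions, and the specific checks are hypothetical, and the real tools operate on VIA project files rather than in-memory objects.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixels: top-left corner plus width and height."""
    x: float
    y: float
    w: float
    h: float
    label: str

def propagate(prev_boxes):
    """Seed the next frame with a copy of the previous frame's boxes.

    The annotator then only adjusts what changed between frames.
    Box is immutable, so a shallow copy of the list is enough.
    """
    return list(prev_boxes)

def validate(boxes, img_w, img_h, allowed_labels):
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    if not boxes:
        # Completeness: every image should carry at least one annotation.
        problems.append("image has no annotations")
    for i, b in enumerate(boxes):
        if b.label not in allowed_labels:
            # Categorization: label must belong to the agreed class list.
            problems.append(f"box {i}: unknown label {b.label!r}")
        if b.w <= 0 or b.h <= 0:
            # Fidelity: degenerate boxes are almost always mis-clicks.
            problems.append(f"box {i}: non-positive size")
        elif b.x < 0 or b.y < 0 or b.x + b.w > img_w or b.y + b.h > img_h:
            problems.append(f"box {i}: extends outside the image")
    return problems

allowed = {"hand-raising", "teach"}
frame_1 = [Box(10, 10, 50, 80, "hand-raising")]
frame_2 = propagate(frame_1)
issues = validate(frame_2, img_w=640, img_h=480, allowed_labels=allowed)
```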

Benchmarking: Detection and Classification Results

Object Detection

YOLO series architectures (v5–v13) were benchmarked on the object detection data, with YOLOv7 demonstrating superior performance across most categories.

Figure 7: Example of YOLOv7 detection results for complex classroom scenes, indicating simultaneous multi-class activity localization.

Figure 8: Training and testing results of the SCB-Dataset (teacher behavior part) on YOLO series models confirm YOLOv7’s empirical superiority (mAP@0.5 up to 94%).

Numerically, YOLOv7 achieves mAP@0.5 scores exceeding 90% for half of the behavior classes, including "teacher," "blackboard-writing," and "on-stage interaction," which are considered deployment-ready. Detection of behaviors such as "bow the head" and "turn the head" remains challenging (mAP@0.5 < 40%), pointing to a need for dedicated architectural or data augmentation advances.
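For readers unfamiliar with the metric, mAP@0.5 counts a detection as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5, computes average precision (AP) per class from the resulting precision-recall curve, and then averages AP over classes. The sketch below is a simplified single-class version under stated assumptions: it uses greedy matching in descending score order and all-point interpolation, and the function names are illustrative rather than taken from any YOLO codebase, whose evaluation details may differ.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def average_precision(detections, ground_truth, iou_thr=0.5):
    """AP for one class. detections: list of (score, box); ground_truth: list of boxes."""
    if not ground_truth:
        return 0.0
    matched = set()
    hits = []  # 1 for true positive, 0 for false positive, in score order
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(ground_truth):
            if j in matched:
                continue  # each ground-truth box can be matched only once
            v = iou(box, gt)
            if v > best_iou:
                best_j, best_iou = j, v
        if best_iou >= iou_thr:
            matched.add(best_j)
            hits.append(1)
        else:
            hits.append(0)

    # Cumulative precision and recall after each detection.
    tp, precisions, recalls = 0, [], []
    for k, h in enumerate(hits, start=1):
        tp += h
        precisions.append(tp / k)
        recalls.append(tp / len(ground_truth))

    # Make precision non-increasing from right to left (interpolation).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Area under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

def mean_ap(ap_per_class):
    """mAP is the unweighted mean of per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

Because mAP is an unweighted mean over classes, a few hard classes with AP below 40% pull the overall score down sharply even when the other classes exceed 90%.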

Image-Level Classification

For image-level classification, a large vision-language model (LVLM), specifically Qwen2.5-VL-7B-instruct, is fine-tuned with LoRA via LLaMA Factory on the image classification subset. Most behavior classes attain F1 scores above 80%, and some (such as "blackboard-writing") approach 99% precision and recall, indicating that the model learns salient, context-dependent features well.
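The per-class precision, recall, and F1 figures reported here follow the standard single-label definitions. The sketch below shows how they could be computed from true and predicted labels; the function name `per_class_prf` is an assumption for illustration, and the toy labels are not results from the paper.

```python
from collections import Counter

def per_class_prf(y_true, y_pred):
    """Return {label: (precision, recall, f1)} for single-label predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = (precision, recall, f1)
    return scores

# Toy example with two behavior labels.
y_true = ["teach", "teach", "discuss", "discuss"]
y_pred = ["teach", "discuss", "discuss", "discuss"]
scores = per_class_prf(y_true, y_pred)
```

Reporting the scores per class, rather than as a single accuracy figure, matters for an imbalanced dataset like this one, because overall accuracy can look strong while rare behaviors are still misclassified.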

Figure 9: Loss of Qwen2.5-VL-7B-instruct during the training iteration process demonstrates rapid initial convergence and strong final stability.

Figure 10: Using the LLaMA Factory framework to test the Qwen2.5-VL-7B-instruct example, validating model deployment for image-based prompt-driven behavior classification.

Limitations and Opportunities

SCB-Dataset deliberately pushes the envelope in classroom scene realism, annotation granularity, and typological breadth. It confronts significant technical obstacles: persistent class imbalance, substantial occlusion in dense scenes, inter-class behavioral ambiguity, and dependency on global context for group behaviors. These issues, manifested in both detection and classification metrics, necessitate ongoing investigation into sample-efficient learning, improved multi-label approaches, stronger modeling of behavior temporal dynamics, and bias mitigation strategies.

Implications and Speculation

Practically, SCB-Dataset sets a new standard for automated classroom analytics infrastructure, enabling fine-grained feedback for teacher performance, student engagement, and personalized learning trajectory assessment. Theoretically, its scale and diversity facilitate rigorous benchmarking for robust vision algorithms under occlusion, action co-occurrence, and environmental heterogeneity. Future research will likely integrate video-based temporal action recognition, explore the synergy between pose-estimation and detection, and couple multimodal input streams (audio, text) for richer pedagogical inference. Importantly, the continuing expansion and iterative refinement of SCB-Dataset—guided by real-world feedback—will further catalyze advances in educational AI.

Conclusion

SCB-Dataset constitutes a comprehensive, technically challenging benchmark for classroom behavior analysis, supporting both object detection and image-level classification. Systematic annotation, diverse scenario coverage, and robust baseline results validate its strong utility for both applied and theoretical research in educational computer vision. Its ongoing development will substantially enhance the frontier of AI-powered classroom understanding.