- The paper proposes a novel integration of implicit occupancy fields with gradient-based next-best-view (NBV) planning for efficient 3D object reconstruction.

- It uses stratified ray sampling and free-ray supervision to improve reconstruction accuracy and reduce geometric ambiguity.

- In simulated and real-world experiments, the method achieves higher reconstruction fidelity and better computational efficiency than the baseline methods.

Active Implicit Object Reconstruction using Uncertainty-guided Next-Best-View Optimization

Abstract and Introduction

The paper "Active Implicit Object Reconstruction using Uncertainty-guided Next-Best-View Optimization" addresses active object reconstruction with implicit neural representations. The authors aim to reconstruct object geometry from as few sensor views as possible. They choose each next-best view (NBV) using uncertainty measured directly in an implicit occupancy field. They do not voxelize the field or score a fixed set of candidate poses. Instead, they select views by gradient-based optimization on a continuous view manifold, which keeps planning both efficient and effective.

Methodology

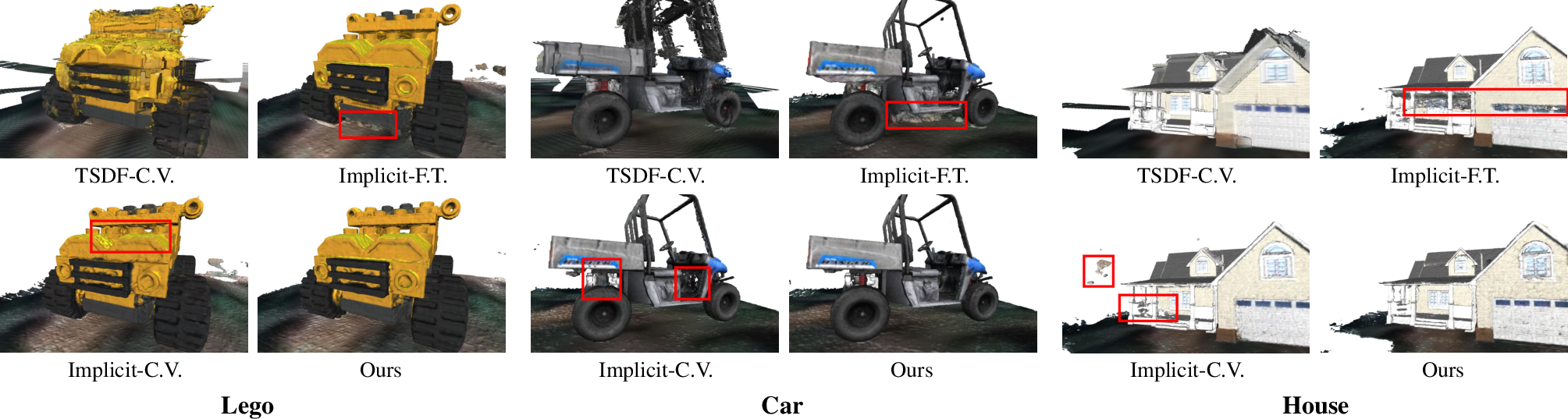

Implicit Occupancy Field Construction

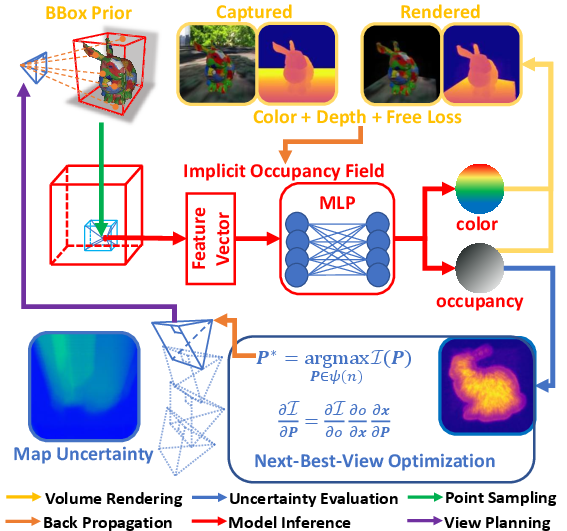

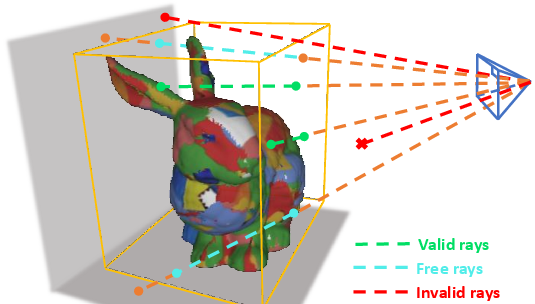

The method represents scene geometry as an implicit occupancy field. The field is trained through volume rendering with color and depth supervision, augmented by free-ray sampling. Because the focus is object-level reconstruction, an object bounding-box prior guides ray sampling and reduces spatial ambiguity in the model (Figure 1).

Figure 1: The architecture of our method.
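The summary does not give the rendering equation. One common formulation for occupancy fields, used for example by UNISURF, treats the occupancy o_i of each ray sample as its opacity. Under that formulation, the compositing weight is w_i = o_i · Π_{j<i}(1 - o_j). Rendered depth and color are the weighted sums of sample depths and colors, and these are what the depth and color losses compare against the sensor. The sketch below assumes that formulation. `render_ray` is a hypothetical name, not the authors' code.

```python
from typing import Sequence, Tuple


def render_ray(
    t_vals: Sequence[float],
    occupancy: Sequence[float],
    colors: Sequence[Tuple[float, float, float]],
) -> Tuple[float, Tuple[float, float, float], float]:
    """Composite per-sample occupancy into rendered depth, color and opacity.

    Assumes occupancy-as-opacity rendering: w_i = o_i * prod_{j<i} (1 - o_j).
    Samples must be sorted by increasing t.
    """
    transmittance = 1.0  # probability the ray has not been stopped yet
    depth = 0.0
    rgb = [0.0, 0.0, 0.0]
    acc = 0.0  # total weight; 1 - acc is the probability the ray escapes
    for t, o, c in zip(t_vals, occupancy, colors):
        w = o * transmittance
        depth += w * t
        for k in range(3):
            rgb[k] += w * c[k]
        acc += w
        transmittance *= 1.0 - o
    return depth, (rgb[0], rgb[1], rgb[2]), acc
```

For example, take samples at depths 1, 2 and 3 with occupancies 0, 1 and 0.5. They render a depth of exactly 2, because the fully occupied second sample absorbs all remaining weight.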

Following Instant-NGP, the field is parameterized by a multi-resolution hash encoding followed by a shallow MLP. This design speeds up training enough for real-time use. The multi-resolution encoding also lets the network represent high-frequency signals, so the implicit function can capture fine geometric detail.
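To make the encoding concrete, here is a simplified pure-Python sketch of an Instant-NGP-style multi-resolution hash encoding. It feeds a one-hidden-layer MLP with a sigmoid occupancy output. The level count, table size, resolutions and function names are illustrative assumptions, since the paper's actual configuration is not given in this summary. A real system would use a GPU implementation with trained rather than random weights.

```python
import math
import random
from typing import List, Sequence

# Spatial-hash primes from the Instant-NGP paper (the first dimension uses 1).
PRIMES = (1, 2654435761, 805459861)


class HashEncoding:
    """Simplified multi-resolution hash encoding for points in [0, 1]^3.

    Simplification: every level is hashed, whereas Instant-NGP indexes coarse
    levels densely when the grid fits in the table.
    """

    def __init__(self, n_levels=4, n_features=2, log2_table_size=12,
                 base_res=4, max_res=64, seed=0):
        rng = random.Random(seed)
        self.n_features = n_features
        self.table_size = 2 ** log2_table_size
        growth = math.exp((math.log(max_res) - math.log(base_res)) / (n_levels - 1))
        self.resolutions = [math.floor(base_res * growth ** level) for level in range(n_levels)]
        # One trainable feature table per level, initialised near zero.
        self.tables = [
            [[rng.uniform(-1e-4, 1e-4) for _ in range(n_features)]
             for _ in range(self.table_size)]
            for _ in self.resolutions
        ]

    def _hash(self, ix: int, iy: int, iz: int) -> int:
        return ((ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])) % self.table_size

    def encode(self, p: Sequence[float]) -> List[float]:
        """Concatenate trilinearly interpolated features from every level."""
        out: List[float] = []
        for res, table in zip(self.resolutions, self.tables):
            scaled = [c * res for c in p]
            base = [math.floor(s) for s in scaled]
            frac = [s - b for s, b in zip(scaled, base)]
            feat = [0.0] * self.n_features
            # Blend the features stored at the 8 corners of the enclosing voxel.
            for dx in (0, 1):
                for dy in (0, 1):
                    for dz in (0, 1):
                        w = ((frac[0] if dx else 1.0 - frac[0])
                             * (frac[1] if dy else 1.0 - frac[1])
                             * (frac[2] if dz else 1.0 - frac[2]))
                        entry = table[self._hash(base[0] + dx, base[1] + dy, base[2] + dz)]
                        for k in range(self.n_features):
                            feat[k] += w * entry[k]
            out.extend(feat)
        return out


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def occupancy_mlp(features, w1, b1, w2, b2) -> float:
    """Shallow MLP: one ReLU hidden layer, sigmoid output as occupancy probability."""
    hidden = [max(0.0, sum(w * f for w, f in zip(row, features)) + b)
              for row, b in zip(w1, b1)]
    return sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)
```

Each level looks up the eight corners of the voxel containing the point, hashes them into a small table, and trilinearly interpolates. Coarse levels capture overall shape, and fine levels capture high-frequency detail.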

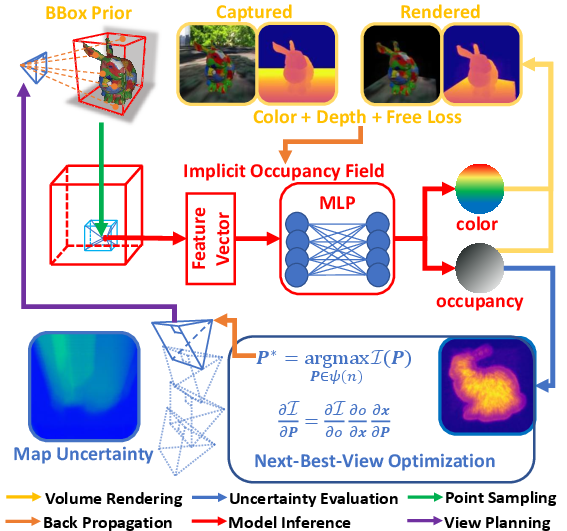

Sampling and Supervision Strategies

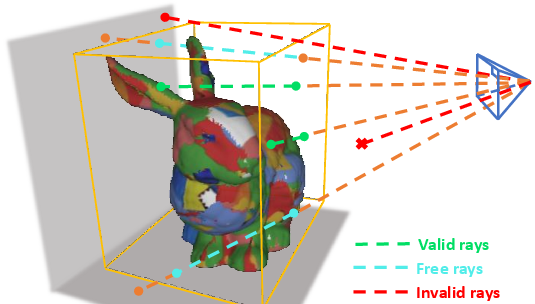

Each ray is classified as invalid, valid, or free according to how it intersects the object bounding box. This classification decides which rays supervise the object surface and which supervise empty space. Only informative observations then guide training, which yields more detailed and accurate reconstructions (Figure 2).

Figure 2: Illustration of ray types defined in our reconstruction method.
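The summary does not define the three ray types precisely. The sketch below assumes one plausible reading:

- A ray that misses the bounding box is invalid.
- A ray whose measured depth falls inside the box segment is valid, because it observes the object surface.
- A ray that crosses the box but returns a depth beyond it, or no depth at all, is free, because its whole in-box segment is empty.

Depth is assumed to be measured in the same units as the ray parameter t, which holds for a unit-length direction. `ray_aabb` uses the standard slab test. `classify_ray` and its rules are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def ray_aabb(origin: Sequence[float], direction: Sequence[float],
             box_min: Sequence[float], box_max: Sequence[float]) -> Optional[Tuple[float, float]]:
    """Slab test: the (t_near, t_far) segment of the ray inside the box, or None."""
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                return None  # parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
    t_near = max(t_near, 0.0)  # ignore the part of the segment behind the camera
    if t_far < t_near:
        return None
    return t_near, t_far


def classify_ray(origin, direction, depth: Optional[float],
                 box_min, box_max, eps: float = 1e-6) -> str:
    """Hypothetical invalid / valid / free labelling based on the bounding box."""
    hit = ray_aabb(origin, direction, box_min, box_max)
    if hit is None:
        return "invalid"  # never enters the object's bounding box
    t_near, t_far = hit
    if depth is None or depth > t_far + eps:
        return "free"  # crosses the box without hitting anything inside it
    if depth >= t_near - eps:
        return "valid"  # observed surface lies inside the box
    return "invalid"  # an occluder in front of the box hides it
```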

Along each ray, the method combines stratified sampling with normally distributed samples concentrated around likely occupied regions. Free rays, in turn, provide evidence of unoccupied space. Samples on free rays are supervised with a binary cross-entropy loss toward zero occupancy. The model therefore learns both where the object is and where it is not, which makes learning efficient and the reconstruction complete.
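Here is a sketch of the sampling and free-ray loss, under the following assumptions:

- Stratified samples cover the ray's segment inside the bounding box.
- On valid rays, extra Gaussian samples are drawn around the measured depth and clipped to the box segment. The standard deviation `sigma` is a hypothetical parameter.
- Every sample on a free ray is pushed toward zero occupancy with binary cross-entropy.

Sample counts and function names are illustrative, not taken from the paper.

```python
import math
import random
from typing import List, Optional


def stratified_samples(t_near: float, t_far: float, n: int, rng: random.Random) -> List[float]:
    """One uniform sample inside each of n equal-width bins of [t_near, t_far)."""
    width = (t_far - t_near) / n
    return [t_near + (i + rng.random()) * width for i in range(n)]


def surface_samples(depth: float, sigma: float, n: int, t_near: float, t_far: float,
                    rng: random.Random) -> List[float]:
    """Gaussian samples around the measured depth, clipped to the in-box segment."""
    return [min(max(rng.gauss(depth, sigma), t_near), t_far) for _ in range(n)]


def sample_ray(t_near: float, t_far: float, depth: Optional[float], n_strat: int = 32,
               n_surf: int = 16, sigma: float = 0.02, seed: int = 0) -> List[float]:
    """Sorted sample depths for one ray; valid rays get extra near-surface samples."""
    rng = random.Random(seed)
    ts = stratified_samples(t_near, t_far, n_strat, rng)
    if depth is not None:
        ts += surface_samples(depth, sigma, n_surf, t_near, t_far, rng)
    return sorted(ts)


def free_ray_bce(occupancy: List[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy against target 0 (empty) for samples on a free ray."""
    return -sum(math.log(1.0 - min(max(o, eps), 1.0 - eps)) for o in occupancy) / len(occupancy)
```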

Next-Best-View Optimization

View Uncertainty Evaluation

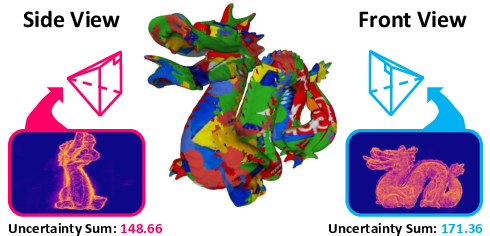

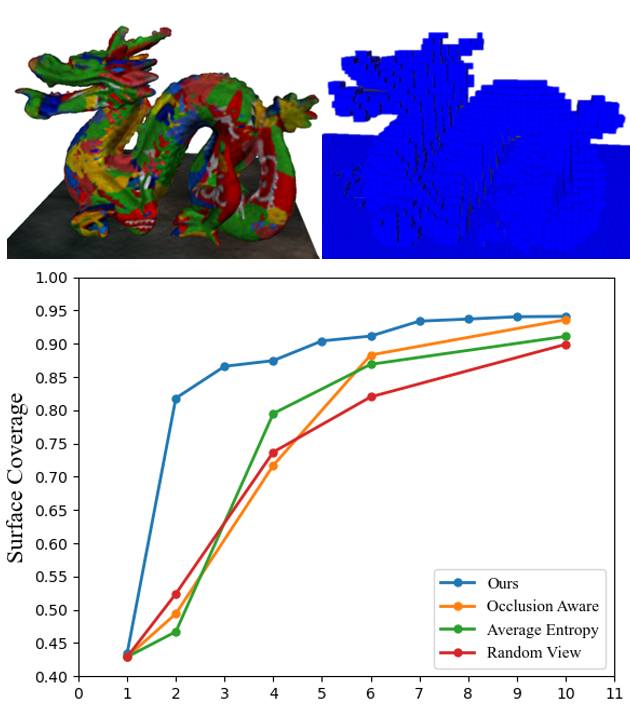

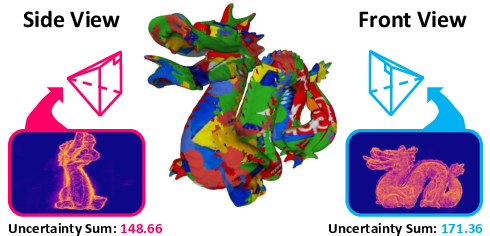

NBV selection uses a sampling-based uncertainty measure. For each candidate view, rays are sampled, and the entropy of the predicted occupancy probabilities along them quantifies the model's uncertainty about what that view would observe. The view's information gain is the sum of these uncertainties across its sampled rays. To address the unfair evaluation illustrated in Figure 3, a novel top-N strategy scores each view by its N most uncertain rays. N is adjusted to match the reconstruction stage, shifting attention from global coverage early on to local detail refinement later.

Figure 3: Illustration of the unfair evaluation in the dragon's scene.
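The view score can be sketched as follows, under stated assumptions:

- Per-sample uncertainty is the binary entropy H(o) = -o log o - (1 - o) log(1 - o), which peaks at o = 0.5.
- A ray's uncertainty sums H over its samples.
- A view's score sums its top-N ray uncertainties.

The linear schedule for N is a hypothetical illustration of shifting from global to local attention. The paper's actual schedule is not given here.

```python
import math
from typing import Sequence


def binary_entropy(p: float, eps: float = 1e-7) -> float:
    """Entropy (in nats) of a Bernoulli occupancy probability p."""
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def ray_uncertainty(occupancies: Sequence[float]) -> float:
    """Sum of per-sample occupancy entropies along one ray."""
    return sum(binary_entropy(o) for o in occupancies)


def view_score(rays: Sequence[Sequence[float]], top_n: int) -> float:
    """Sum of the top_n largest ray uncertainties among the rays sampled for a view."""
    scores = sorted((ray_uncertainty(r) for r in rays), reverse=True)
    return sum(scores[:top_n])


def top_n_schedule(step: int, total_steps: int, n_max: int, n_min: int) -> int:
    """Hypothetical linear schedule: global (n_max rays) early, local (n_min rays) late."""
    frac = step / max(1, total_steps - 1)
    return max(n_min, round(n_max * (1.0 - frac) + n_min * frac))
```

With every ray counted, a view with many mildly uncertain rays can outscore a view with a few highly uncertain ones. Restricting the sum to the top-N rays reverses that ranking. This is one plausible reading of the imbalance a top-N score is meant to correct.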

Gradient-Based NBV Planning

The central feature of the system is that NBV selection is posed as gradient-based optimization on a continuous view manifold rather than as a search over predefined candidate views. Because the implicit model is differentiable, the view pose can be updated directly along the gradient of the uncertainty objective. The paper reports that this makes planning of future sensor movements more robust and precise across scenarios.
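The summary does not spell out the optimizer, so the following is only a sketch of the general idea: gradient ascent over a continuous view manifold. It assumes the camera sits on a sphere around the object center and looks inward, so a view is a unit direction v. A real implementation would differentiate the rendered uncertainty of the implicit field, for example with autograd. Here that is replaced by a toy analytic surrogate: a sum of von Mises-Fisher-style lobes pointing at hypothetical uncertain regions. The optimizer is plain Riemannian gradient ascent on the sphere, which projects the gradient onto the tangent plane, takes a step and renormalizes. All names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Lobe = Tuple[float, Vec]  # (weight, unit direction of a hypothetical uncertain region)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def normalize(v: Sequence[float]) -> Vec:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def surrogate_score(v: Vec, lobes: Sequence[Lobe], kappa: float) -> float:
    """Toy smooth stand-in for the rendered uncertainty of the view along v."""
    return sum(a * math.exp(kappa * (dot(v, u) - 1.0)) for a, u in lobes)


def surrogate_grad(v: Vec, lobes: Sequence[Lobe], kappa: float) -> Vec:
    """Analytic Euclidean gradient of surrogate_score with respect to v."""
    g = [0.0, 0.0, 0.0]
    for a, u in lobes:
        s = a * kappa * math.exp(kappa * (dot(v, u) - 1.0))
        for k in range(3):
            g[k] += s * u[k]
    return (g[0], g[1], g[2])


def optimize_view(v0: Sequence[float], lobes: Sequence[Lobe], kappa: float = 4.0,
                  lr: float = 0.1, steps: int = 200) -> Tuple[Vec, List[float]]:
    """Riemannian gradient ascent on the unit sphere of viewing directions."""
    v = normalize(v0)
    history = [surrogate_score(v, lobes, kappa)]
    for _ in range(steps):
        g = surrogate_grad(v, lobes, kappa)
        radial = dot(g, v)
        # Drop the radial component so the step stays tangent to the sphere.
        tangent = [gk - radial * vk for gk, vk in zip(g, v)]
        # Retraction: step in the tangent plane, then renormalise onto the sphere.
        v = normalize([vk + lr * tk for vk, tk in zip(v, tangent)])
        history.append(surrogate_score(v, lobes, kappa))
    return v, history
```

Under the same assumption, the camera for the optimized direction v would be placed at center + radius · v, looking at the center. No candidate set is enumerated: the pose moves continuously toward higher uncertainty.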

Experimental Results

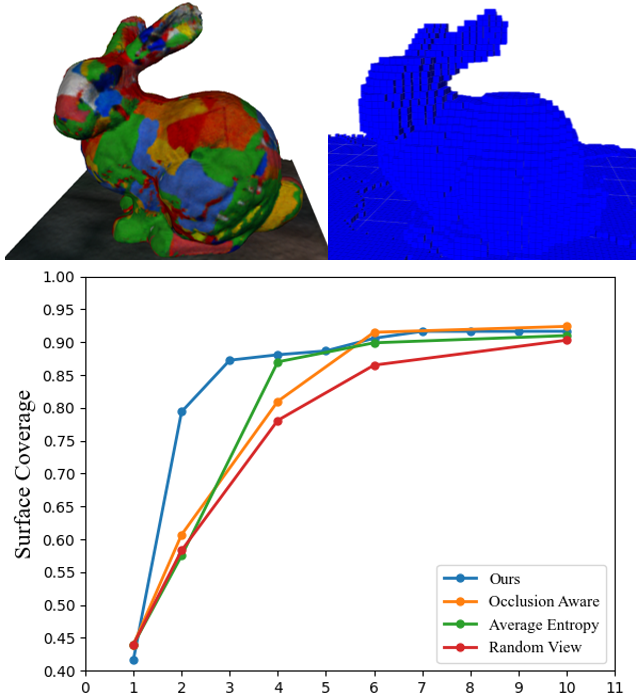

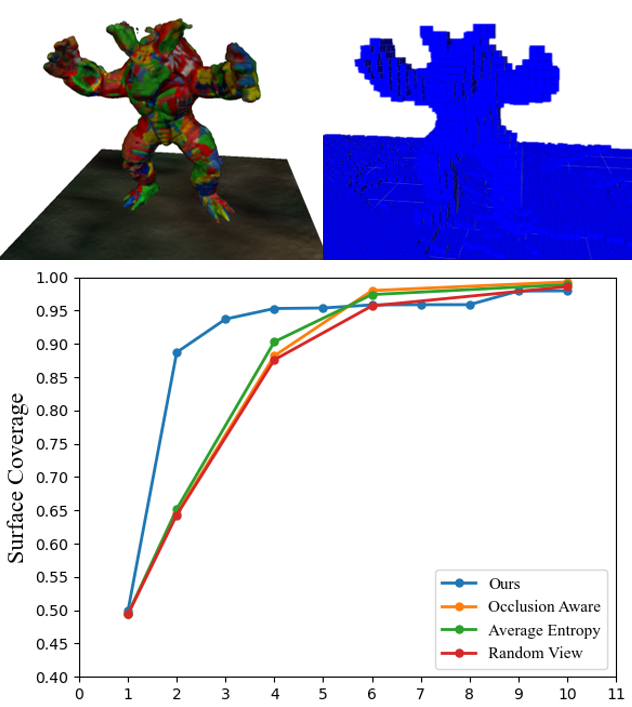

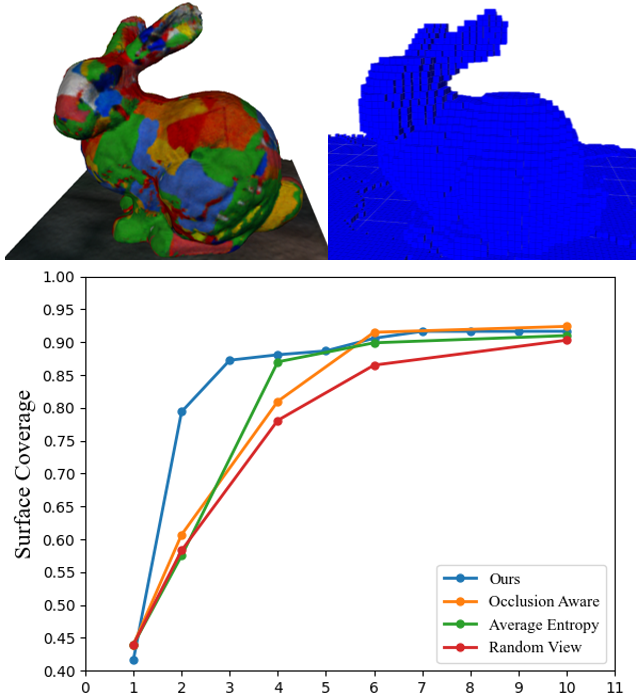

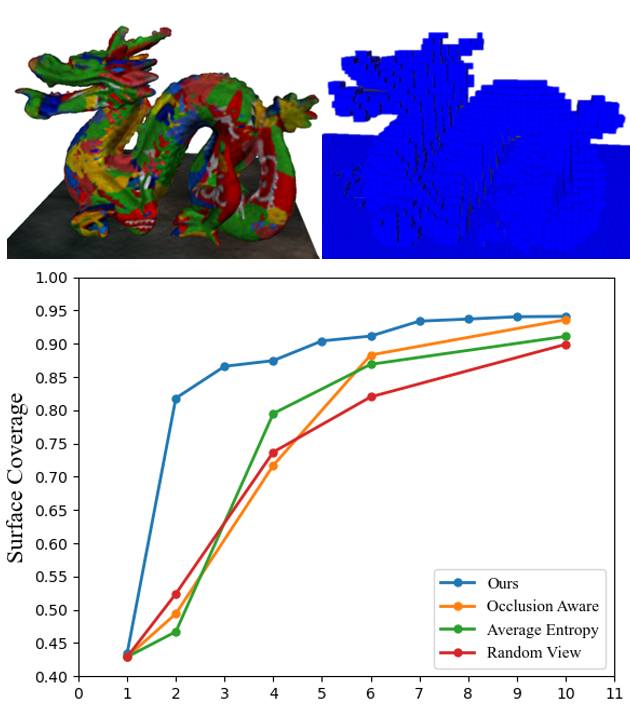

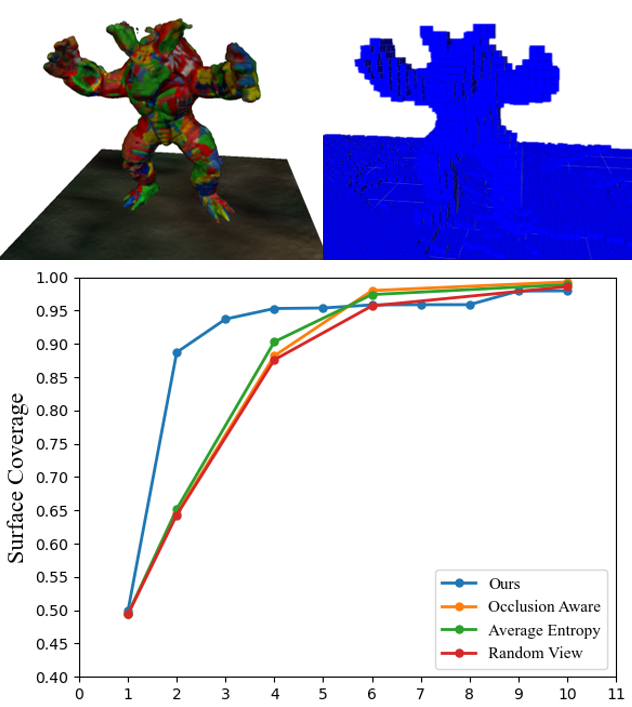

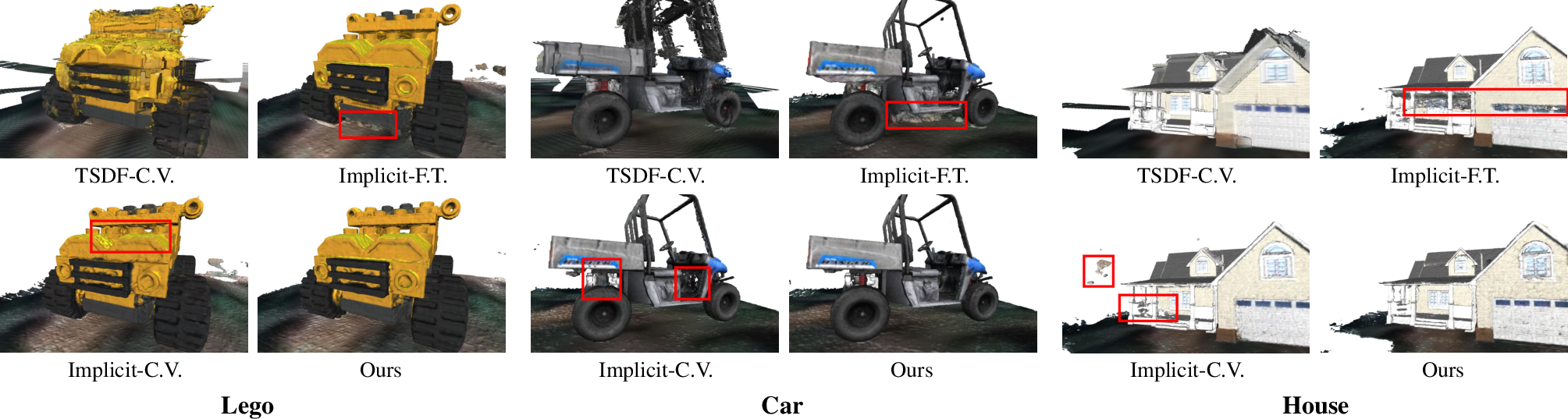

The method's efficacy was validated through both simulation and real-world experiments, showing considerable improvements in reconstruction accuracy and computational efficiency (Figure 4, Figure 5).

Figure 4: Reconstructed model and surface coverage curve of Stanford Bunny, Dragon, and Armadillo.

Figure 5: Qualitative comparison results of Lego, Car, and House.

Compared with the baseline methods, the reconstructed models show higher geometric fidelity and converge faster toward complete surface coverage. This suggests the method could be adapted to robotics applications that require high-quality 3D reconstruction in real time.
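Figure 4 reports surface coverage curves. This summary does not state the paper's exact definition or distance threshold. A common definition, assumed here, is the fraction of ground-truth surface points that lie within a distance threshold of the reconstruction. Evaluating it after each new view gives a coverage curve. The function names are hypothetical, and the brute-force search would be replaced by a k-d tree for real point clouds.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def surface_coverage(gt_points: Sequence[Point], recon_points: Sequence[Point],
                     threshold: float) -> float:
    """Fraction of ground-truth points within `threshold` of some reconstructed point."""
    if not gt_points:
        return 0.0
    thr2 = threshold * threshold
    covered = sum(
        1 for g in gt_points
        if any(sum((a - b) ** 2 for a, b in zip(g, r)) <= thr2 for r in recon_points)
    )
    return covered / len(gt_points)


def coverage_curve(gt_points: Sequence[Point], recon_per_view: Sequence[Sequence[Point]],
                   threshold: float) -> List[float]:
    """Coverage after each view, given the reconstruction extracted after that view."""
    return [surface_coverage(gt_points, r, threshold) for r in recon_per_view]
```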

Conclusion and Future Work

The main contribution is the integration of implicit representations with optimization-based NBV planning. This points toward more intelligent robotic systems that update their understanding of the environment from minimal input data. Future work could extend the approach to more complex scenes and incorporate higher-level semantic context using similar implicit representations. More broadly, uncertainty-guided perception of this kind could make autonomous systems more capable and reliable.