- The paper introduces a framework that couples BERT's 9216 fine-grained artificial neurons (ANs) with biological neurons (BNs), defined as fMRI-derived functional brain networks, to study linguistic processing.

- It measures AN activations with the element-wise product of query and key vectors and maps ANs to BNs via the Pearson correlation of their temporal activations.

- Experimental results on the 'Narratives' fMRI dataset reveal significant coupling between deep-layer ANs and BNs, highlighting neurolinguistic integration.

Overview of the Framework

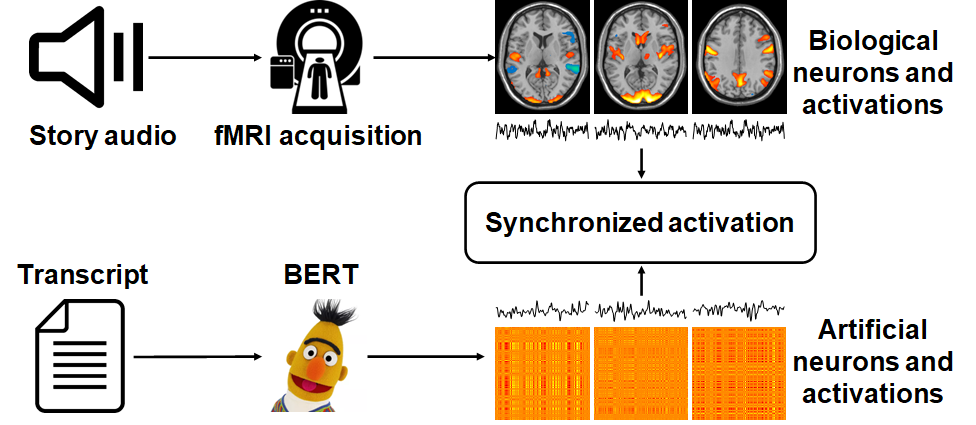

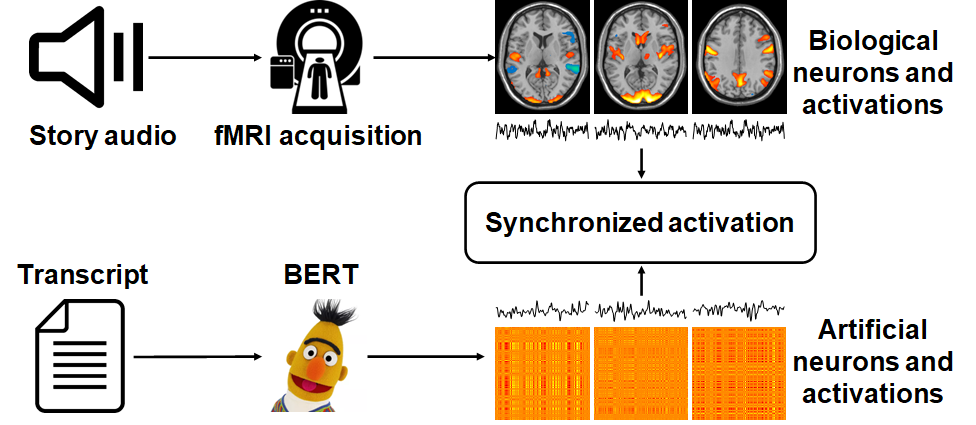

The paper "Coupling Artificial Neurons in BERT and Biological Neurons in the Human Brain" introduces a framework that links artificial neurons (ANs) in transformer-based NLP models, such as BERT, with biological neurons (BNs) in the human brain. It addresses two limitations of earlier work: ANs are defined at too coarse a granularity, and BNs are analyzed without regard to their functional interactions. By embedding the input text with a pre-trained BERT and analyzing functional magnetic resonance imaging (fMRI) data, the study deepens our understanding of linguistic processing at the neural level.

Defining Artificial and Biological Neurons

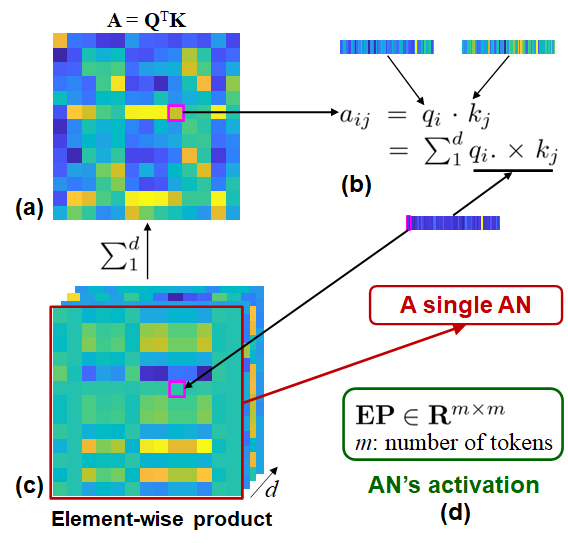

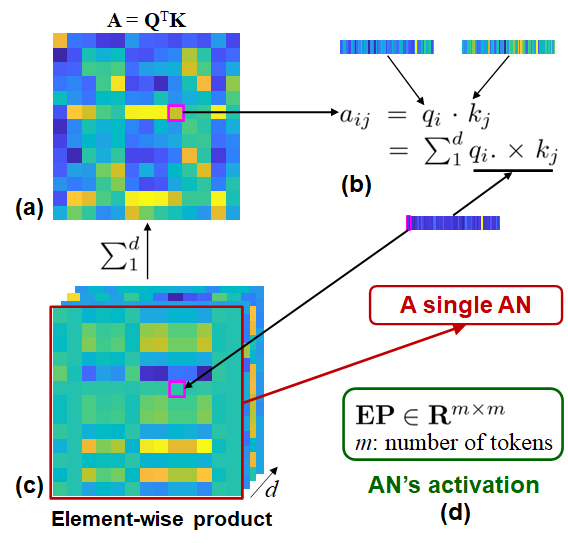

The paper addresses the coarse granularity of existing models by treating each dimension of the query/key vectors in BERT's multi-head self-attention (MSA) as an individual AN. This yields a large number of ANs: 9216 in BERT-base (12 layers × 12 heads × 64 dimensions). The temporal activation of each AN is quantified by the element-wise product of queries and keys. This shows how individual dimensions contribute to attention during language perception.
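The following NumPy sketch illustrates these definitions. Random matrices stand in for real BERT queries and keys, with shapes that follow BERT-base (12 layers, 12 heads of 64 dimensions, so 768 query/key dimensions per layer). It checks that, once the softmax is dropped, a head's score matrix is the sum of per-dimension contributions. It then computes one activation per token and dimension as the product of that token's own query and key. Pairing each token with itself is an assumption of this sketch; the paper may aggregate the products differently, and the helper names are hypothetical.

```python
import numpy as np

N_LAYERS, N_HEADS, HEAD_DIM = 12, 12, 64  # BERT-base shapes
DIMS_PER_LAYER = N_HEADS * HEAD_DIM       # 768 query/key dimensions per layer
N_ANS = N_LAYERS * DIMS_PER_LAYER         # one AN per dimension: 9216

def per_dimension_contributions(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Return C with C[d] = outer(q[:, d], k[:, d]) for one head.

    q, k: (n_tokens, head_dim). Summing C over d recovers q @ k.T,
    i.e. the attention scores before scaling and softmax.
    """
    return np.einsum("id,jd->dij", q, k)

def an_activations(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Hypothetical per-token AN activation: element-wise product q_t * k_t.

    q, k: (n_tokens, dims) for one layer (all heads concatenated);
    returns (n_tokens, dims), one column per AN in that layer.
    """
    return q * k

rng = np.random.default_rng(0)
n_tokens = 5
q_head = rng.standard_normal((n_tokens, HEAD_DIM))
k_head = rng.standard_normal((n_tokens, HEAD_DIM))

contrib = per_dimension_contributions(q_head, k_head)
scores = q_head @ k_head.T                       # softmax removed, no 1/sqrt(d_k) scaling
assert np.allclose(contrib.sum(axis=0), scores)  # each dimension adds a rank-1 term

q_layer = rng.standard_normal((n_tokens, DIMS_PER_LAYER))
k_layer = rng.standard_normal((n_tokens, DIMS_PER_LAYER))
acts = an_activations(q_layer, k_layer)
print(N_ANS, acts.shape)  # 9216 (5, 768)
```

Under this pairing, a token's activation for dimension d is the diagonal entry `contrib[d, t, t]`: the dimension's contribution to that token's attention on itself.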

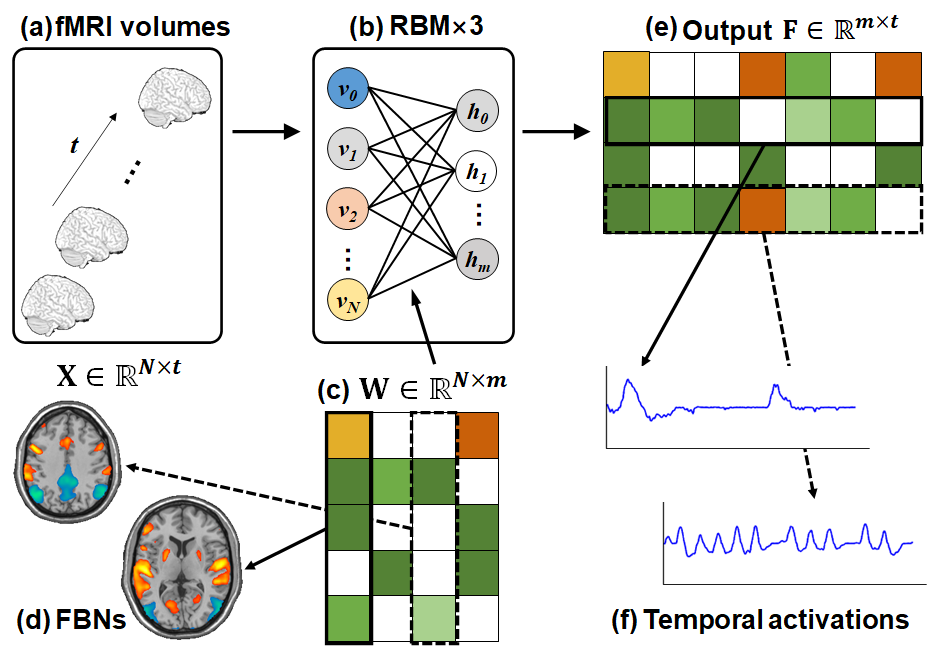

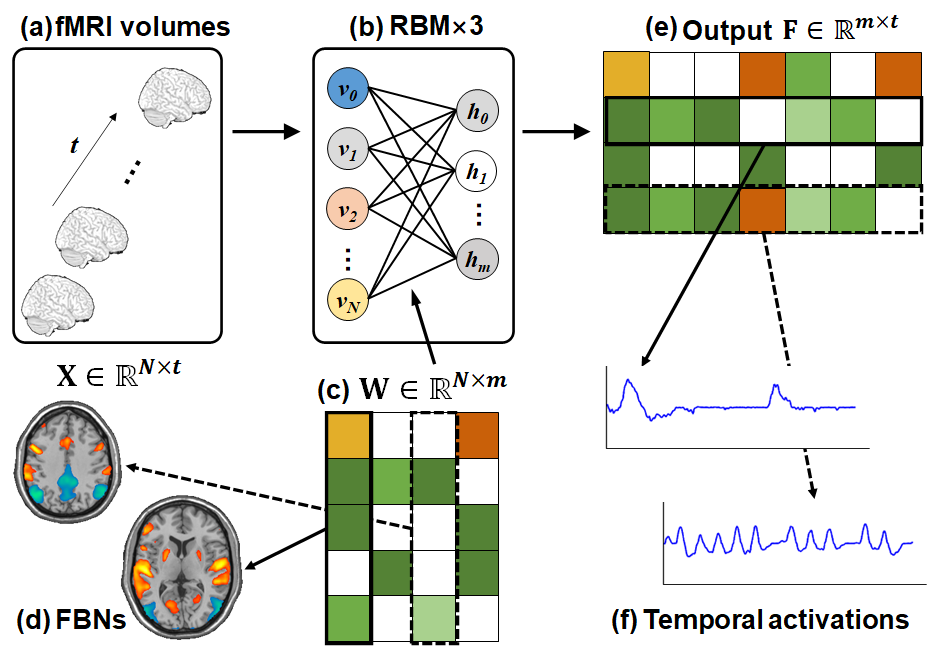

Biological neurons (BNs), in turn, are defined as functional brain networks (FBNs), extracted from fMRI data with a volumetric sparse deep belief network (VS-DBN). This model captures both the integration and the segregation of brain activity. As a result, it allows the functional interactions among brain regions during linguistic stimulation to be analyzed at a fine grain.
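Training a VS-DBN is beyond a short example, but the readout shown in Figure 3(c)-(e) can be sketched under explicit assumptions:

- The trained network is a stack of three weight matrices (random stand-ins here).
- These are composed by matrix product into a global latent variable W, whose columns are read as FBN spatial maps.
- Each FBN's temporal activation is estimated by ordinary least-squares regression of the fMRI volumes onto those maps.

The composition rule, the regression choice, and the layer sizes are assumptions of this sketch, not the paper's stated procedure; a sparse regression, for instance, would be an equally plausible readout.

```python
import numpy as np

def global_latent_variable(layer_weights: list[np.ndarray]) -> np.ndarray:
    """Compose per-layer weights W1 (voxels x h1), W2 (h1 x h2), ... into one
    (voxels x n_fbns) matrix whose columns serve as FBN spatial maps."""
    w = layer_weights[0]
    for w_next in layer_weights[1:]:
        w = w @ w_next
    return w

def temporal_activations(fmri: np.ndarray, spatial_maps: np.ndarray) -> np.ndarray:
    """Least-squares fit of fmri (time x voxels) ~= T @ spatial_maps.T.

    Returns T with shape (time, n_fbns): one time course per FBN.
    """
    # Solve spatial_maps @ T.T ~= fmri.T for T.T (one column per time point).
    t_transposed, *_ = np.linalg.lstsq(spatial_maps, fmri.T, rcond=None)
    return t_transposed.T

rng = np.random.default_rng(0)
n_voxels, n_time = 2000, 120
# Hypothetical layer sizes for the three stacked RBMs.
weights = [rng.standard_normal((n_voxels, 256)),
           rng.standard_normal((256, 128)),
           rng.standard_normal((128, 64))]
W = global_latent_variable(weights)       # (2000, 64): 64 FBN maps
true_T = rng.standard_normal((n_time, W.shape[1]))
fmri = true_T @ W.T                       # synthetic, noise-free volumes
T_hat = temporal_activations(fmri, W)
print(W.shape, T_hat.shape)               # (2000, 64) (120, 64)
```

Because the synthetic volumes are generated exactly from the maps, the regression recovers the time courses. Real fMRI is noisy, and the fit would only approximate them.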

Figure 1: The framework for coupling artificial neurons in BERT and biological neurons in the brain.

Synchronization and Experiments

ANs and BNs are coupled in two steps. First, their temporal activations are aligned in time. Then their synchronization is measured with the Pearson correlation coefficient (PCC), and each AN is paired with the BN whose temporal activation correlates most strongly with its own.
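Here is a minimal sketch of the synchronization step. It rests on two assumptions:

- Token-level AN activations are brought onto the fMRI sampling grid by averaging the tokens whose onsets fall in each repetition-time (TR) window. Real pipelines typically also convolve with a haemodynamic response function, which is omitted here.
- Each AN is coupled with the single BN of highest PCC.

The function names and the per-TR averaging are hypothetical, not taken from the paper.

```python
import numpy as np

def align_to_tr(token_acts, token_onsets, tr, n_trs):
    """Average token activations (n_tokens, n_ans) within each TR window.

    token_onsets are in seconds; TRs without tokens stay zero.
    Returns an array of shape (n_trs, n_ans).
    """
    bins = np.floor(np.asarray(token_onsets) / tr).astype(int)
    out = np.zeros((n_trs, token_acts.shape[1]))
    counts = np.zeros(n_trs)
    valid = (bins >= 0) & (bins < n_trs)
    np.add.at(out, bins[valid], token_acts[valid])  # unbuffered sum per TR
    np.add.at(counts, bins[valid], 1)
    filled = counts > 0
    out[filled] /= counts[filled, None]
    return out

def pcc_matrix(a, b):
    """Pearson correlation between columns of a (T, n_a) and b (T, n_b).

    Constant columns have zero variance and would yield NaN.
    """
    a = (a - a.mean(axis=0)) / a.std(axis=0)
    b = (b - b.mean(axis=0)) / b.std(axis=0)
    return a.T @ b / a.shape[0]

def couple(an_series, bn_series):
    """Index of the best-correlated BN for every AN, plus that PCC."""
    r = pcc_matrix(an_series, bn_series)  # (n_ans, n_bns)
    best = r.argmax(axis=1)
    return best, r[np.arange(r.shape[0]), best]

# Toy data: three BN time courses sampled once per TR.
n_trs, tr = 50, 1.5
t = np.arange(n_trs)
bn = np.stack([np.sin(0.2 * t), np.cos(0.35 * t), np.sin(0.5 * t + 1.0)], axis=1)
# Two tokens per TR; AN 0 tracks BN 2 and AN 1 tracks BN 0 (up to scale and offset).
onsets = np.repeat(t * tr, 2) + np.tile([0.1, 0.8], n_trs)
per_tr = np.stack([2.0 * bn[:, 2] + 1.0, 0.5 * bn[:, 0] - 3.0], axis=1)
token_acts = np.repeat(per_tr, 2, axis=0)
an = align_to_tr(token_acts, onsets, tr, n_trs)
best, r = couple(an, bn)
print(best)  # [2 0]
```

Since PCC ignores scale and offset, each toy AN correlates perfectly with the BN it was built from.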

The paper demonstrates the framework on the "Narratives" fMRI dataset, recorded while participants listened to spoken stories. The results show that a significant portion of ANs in BERT's deep layers is synchronized with BNs, pointing to an association between linguistic perception and neural activation.
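A statement such as "a significant portion of ANs in deep layers" can be made concrete by tabulating, per BERT layer, the fraction of ANs whose best PCC is significant. The sketch below rests on these assumptions:

- ANs are indexed layer by layer (768 per layer in BERT-base).
- The p-value of a correlation over `n_trs` time points is approximated with the Fisher z-transform.
- A Bonferroni correction is applied over all AN-BN comparisons.

The paper's actual test and threshold may differ, and the data below are synthetic.

```python
import math
import numpy as np

DIMS_PER_LAYER = 768  # BERT-base: 12 heads x 64 dimensions

def fisher_p_value(r: np.ndarray, n: int) -> np.ndarray:
    """Approximate two-sided p-value for Pearson r over n samples (Fisher z)."""
    z = np.arctanh(np.clip(r, -0.999999, 0.999999)) * math.sqrt(n - 3)
    erfc = np.vectorize(math.erfc)
    return erfc(np.abs(z) / math.sqrt(2))

def significant_fraction_per_layer(best_r, n_trs, n_bns, alpha=0.05):
    """Fraction of ANs per layer whose best PCC survives Bonferroni correction."""
    n_tests = best_r.size * n_bns  # every AN was compared with every BN
    significant = fisher_p_value(best_r, n_trs) < alpha / n_tests
    return significant.reshape(-1, DIMS_PER_LAYER).mean(axis=1)

rng = np.random.default_rng(0)
n_layers, n_trs, n_bns = 12, 300, 64
best_r = rng.uniform(0.0, 0.1, size=n_layers * DIMS_PER_LAYER)  # mostly weak
best_r[-DIMS_PER_LAYER // 2:] = 0.6  # pretend half of the last layer is strongly coupled
frac = significant_fraction_per_layer(best_r, n_trs, n_bns)
print(frac.round(2))
```

With this synthetic input, only the final layer shows a non-zero fraction.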

Figure 2: The definitions of AN and its activation in BERT. (a) The simplified attention matrix, obtained by removing the softmax operation. (b) The contribution of each dimension in query/key to the attention matrix. (c) The element-wise product of queries and keys. (d) The AN's activation is measured by each dimension of the element-wise product.
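The decomposition behind panels (a)-(c) can be written out for a single head. Take query and key matrices $Q, K \in \mathbb{R}^{n \times d_k}$, with rows $\mathbf{q}_i, \mathbf{k}_j$ (notation ours). Dropping the softmax leaves the scaled score matrix, and each of its entries is a sum of per-dimension terms, so each dimension $d$ contributes one rank-one matrix:

$$
S = \frac{QK^\top}{\sqrt{d_k}} = \frac{1}{\sqrt{d_k}} \sum_{d=1}^{d_k} \mathbf{q}_{:,d}\,\mathbf{k}_{:,d}^\top,
\qquad
S_{ij} = \frac{1}{\sqrt{d_k}} \sum_{d=1}^{d_k} q_{i,d}\,k_{j,d} = \frac{1}{\sqrt{d_k}} \sum_{d=1}^{d_k} \left(\mathbf{q}_i \odot \mathbf{k}_j\right)_d
$$

Dimension $d$ therefore enters every score only through the product $q_{i,d}\,k_{j,d}$. This is why that product can serve as the activation of the AN associated with $d$.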

Interpretation of Results

The significant synchronization between ANs and BNs suggests that the framework captures meaningful linguistic and semantic information. The distinct spatial distributions of the coupled BNs indicate that these networks are not merely passive responders but are involved in linguistic integration and perception. The study also observed different activation patterns for different story stimuli, offering insight into the dynamic nature of linguistic processing in the brain.

Figure 3: The definitions of BN and its activation in fMRI. (a) The model input is fMRI volumes. (b) The VS-DBN consists of three RBM layers. (c) The global latent variable W. (d) The spatial distribution of FBNs. (e) The temporal activations of FBNs.

Conclusion and Future Directions

The framework shows promise for linking computational models of language with neural activity, suggesting that transformer-based NLP models can serve as tools for exploring the neural basis of language processing. It has limitations, such as its reliance on the attention dynamics of a single bidirectional model (BERT) and a simplistic measure of AN activation that drops the softmax. Even so, it lays a foundation for future research. Future work could examine other linguistic tasks or models, such as GPT-3, to test whether the AN-BN coupling patterns hold, further enriching the understanding of neurolinguistic interactions.