Abstract Visual Reasoning Enabled by Language

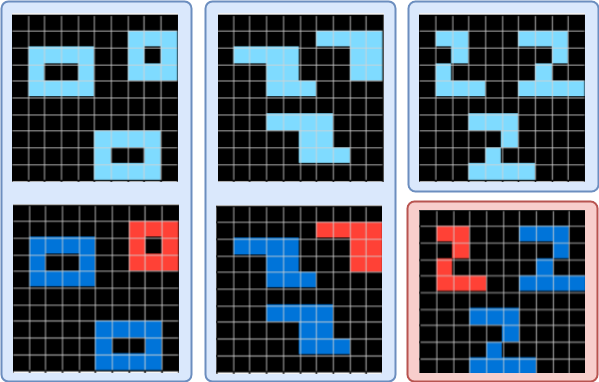

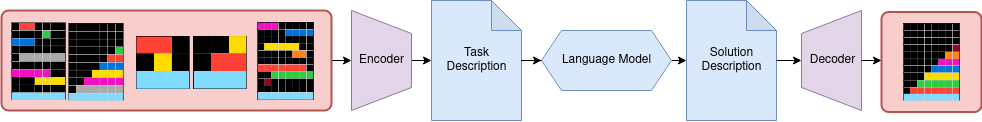

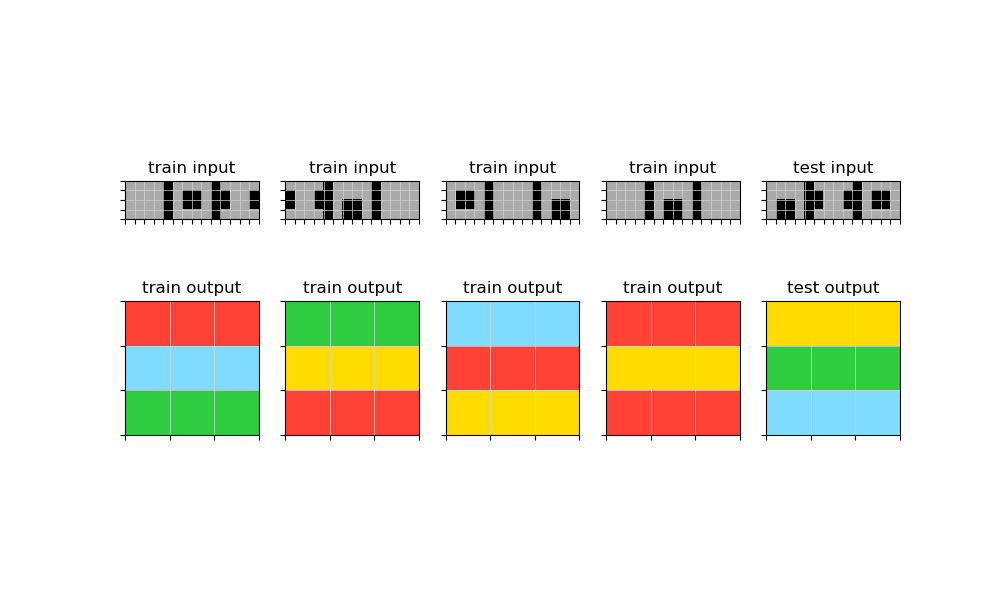

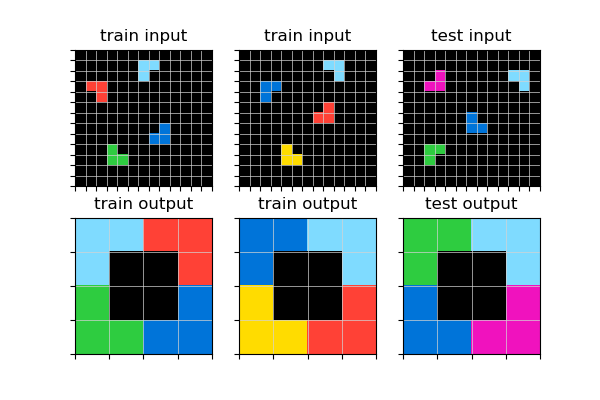

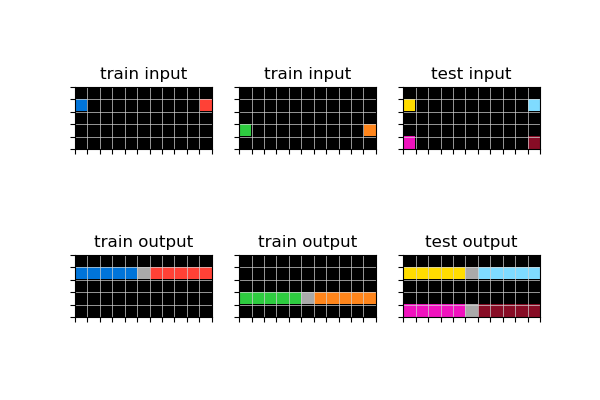

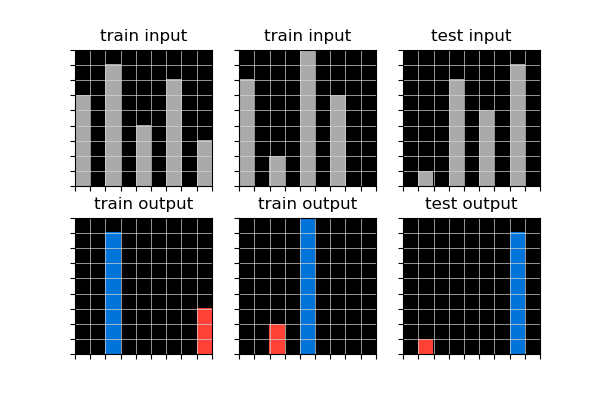

Abstract: While AI models have achieved human-level or even superhuman performance in many well-defined applications, they still struggle to show signs of broad and flexible intelligence. The Abstraction and Reasoning Corpus (ARC), a visual intelligence benchmark introduced by François Chollet, aims to assess how close AI systems are to human-like cognitive abilities. Most current approaches rely on carefully handcrafted, domain-specific program search to brute-force solutions for the tasks in ARC. In this work, we propose a general learning-based framework for solving ARC. It centers on translating tasks from the visual domain to the language domain. This composition of language and vision allows pre-trained models to be leveraged at each stage, enabling a shift from handcrafted priors towards the learned priors of the models. Although our approach does not yet beat state-of-the-art models on ARC, we demonstrate its potential, for instance by solving some ARC tasks that had not been solved previously.
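To make the idea of moving a task from the visual to the language domain concrete, the following is a minimal sketch of how an ARC task could be serialized into text. It assumes the public ARC JSON layout (a `train` list and a `test` list of pairs, each holding `input` and `output` grids of integers 0 to 9). The row-by-row encoding and the helper names `grid_to_text` and `task_to_prompt` are illustrative assumptions; the abstract does not specify the encoding the authors actually use.

```python
from typing import Dict, List

Grid = List[List[int]]


def grid_to_text(grid: Grid) -> str:
    """Serialize a grid row by row: one line per row, cells separated by spaces.

    Hypothetical encoding, chosen only for illustration.
    """
    return "\n".join(" ".join(str(cell) for cell in row) for row in grid)


def task_to_prompt(task: Dict[str, List[Dict[str, Grid]]]) -> str:
    """Turn the demonstration pairs and the first test input into a text prompt.

    Hypothetical helper; the prompt layout is an assumption, not the paper's.
    """
    parts = []
    for i, pair in enumerate(task["train"], start=1):
        parts.append(f"Example {i} input:\n{grid_to_text(pair['input'])}")
        parts.append(f"Example {i} output:\n{grid_to_text(pair['output'])}")
    parts.append(f"Test input:\n{grid_to_text(task['test'][0]['input'])}")
    parts.append("Test output:")
    return "\n\n".join(parts)


# Toy task made up for this example (not taken from ARC).
toy_task = {
    "train": [{"input": [[0, 1], [1, 0]], "output": [[1, 0], [0, 1]]}],
    "test": [{"input": [[0, 0], [1, 1]]}],
}

prompt = task_to_prompt(toy_task)
print(prompt)
```

A prompt built this way could be passed to a pre-trained language model, whose text completion would then be parsed back into a grid. That round trip is where learned priors could stand in for handcrafted ones.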
