- The paper demonstrates that in-context learning enables pretrained models to make predictions using few-shot examples without updating parameters.

- It outlines various training strategies, including supervised and self-supervised methods, to enhance the model's ability through optimized demonstration design.

- The study highlights challenges like sensitivity to demonstration design and scalability, offering insights into robust scoring functions and normalization techniques.

A Survey on In-Context Learning

Introduction

In recent years, LLMs have demonstrated remarkable abilities through in-context learning (ICL), a paradigm that allows models to make predictions by leveraging a context supplemented with a few examples. This survey paper provides a comprehensive examination of the development and challenges associated with ICL, aiming to clarify its definition, explore advanced techniques, and discuss potential research directions.

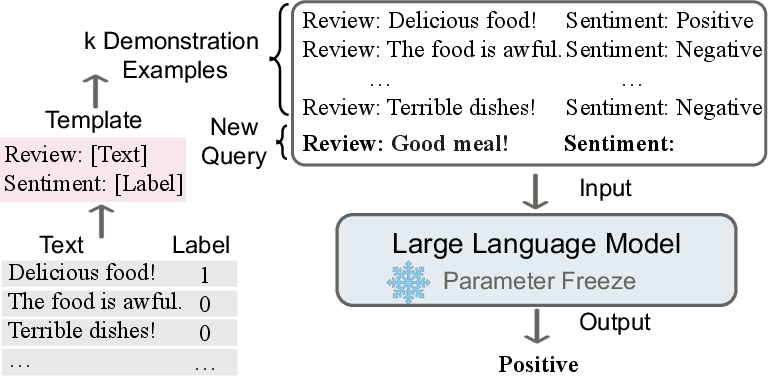

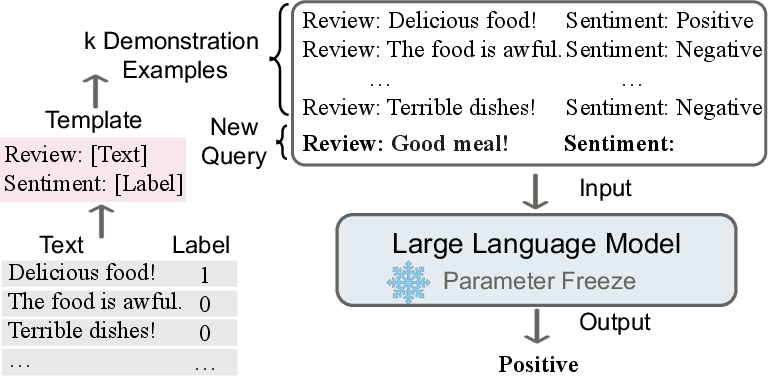

ICL facilitates learning from analogy by providing demonstration contexts composed of a few examples in natural language format. Unlike traditional supervised learning, which requires parameter updates during a training stage, ICL operates directly on pretrained models without altering their parameters. This paradigm promises data efficiency and ease of knowledge integration by modifying demonstration templates and human-understandable prompts.

Figure 1: Illustration of in-context learning. ICL requires a piece of demonstration context containing a few examples written in natural language templates. Taking the demonstration and a query as the input, LLMs are responsible for making predictions.

ICL can be formally defined as estimating the likelihood of a potential answer conditioned on a demonstration set using a pretrained LLM. Given a query text, the model selects the candidate answer with the highest estimated probability, determined by a scoring function. The demonstration set includes optional task instructions and demonstration examples, formatted in natural language.

Researchers have explored various methods to enhance ICL capabilities beyond standard language modeling objectives. Supervised In-context Training leverages tasks with contextually framed examples to refine model performance in understanding analogies. Prominent methods such as MetaICL and Symbol Tuning illustrate innovations in utilizing transformed label representations to enhance context-based reasoning. Meanwhile, Self-supervised In-context Training leverages raw corpora to construct self-supervised data, thus bridging the pretraining-inference gap and promoting task generalization (2301.00234).

Demonstration Designing

Demonstration design is central to ICL performance. It spans multiple strategies:

- Organization: Selecting and ordering demonstration examples significantly impacts model predictions. Techniques range from kNN-based unsupervised retrievers to supervised approaches that utilize a scoring mechanism for retrieving optimal examples.

- Formatting: Proper formatting enhances understanding in complex reasoning tasks. Instruction formatting and chain-of-thought processes are instrumental in guiding models through intermediate reasoning steps, thereby improving comprehension and task execution (2301.00234).

Challenges and Future Directions

While promising, ICL presents challenges in robustness, efficiency, and scalability. The performance of ICL is highly sensitive to demonstration design, prompting research into more consistent scoring functions and normalization strategies. Moreover, as computational demands increase, optimizing prompting strategies and investigating novel pretraining techniques that enhance ICL-specific capabilities are crucial future directions.

Conclusion

In-context learning has emerged as a powerful tool in the arsenal of AI research, offering a unique approach to deploying LLMs in various domains. This survey underscores the ongoing efforts to refine ICL methodologies and provides insights into future research paths that could bridge gaps in understanding and application, ushering in advancements in both theoretical constructs and practical implementations.