- The paper introduces a framework that leverages neural radiance fields and consumer-grade hardware to capture and render photorealistic objects.

- It employs a two-layer volumetric representation with density-guided sample filtering and shader code transpilation for real-time rendering optimization.

- Results show improved rendering speed and quality over state-of-the-art methods, enabling seamless integration into interactive game and VR environments.

Neural Assets: Volumetric Object Capture and Rendering for Interactive Environments

Introduction and Method Overview

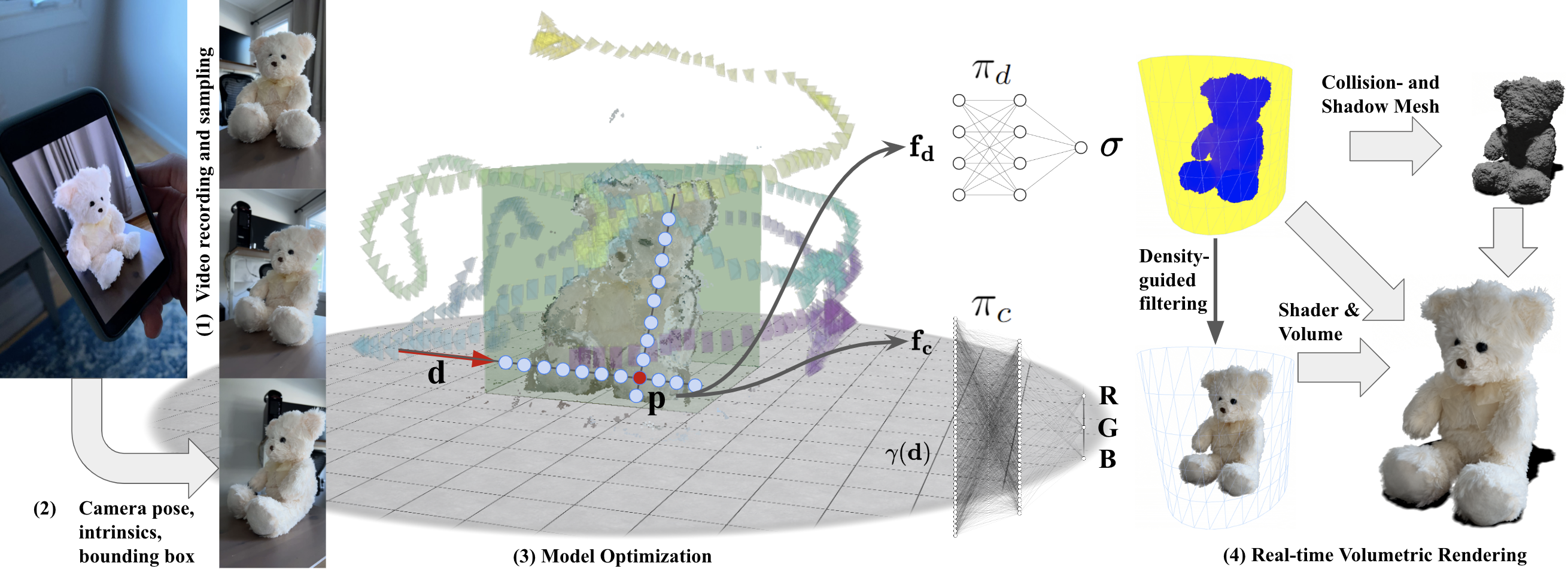

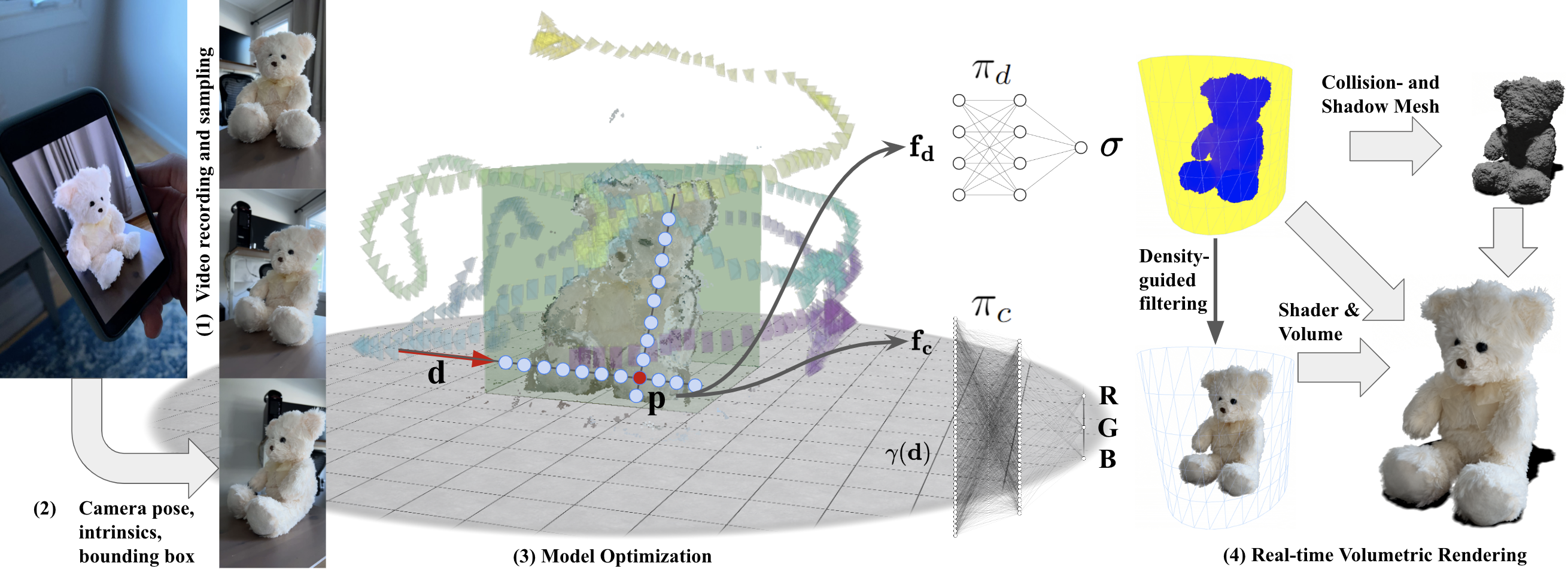

The paper "Neural Assets: Volumetric Object Capture and Rendering for Interactive Environments" presents a framework for capturing, reconstructing, and rendering real-world objects in virtual environments using consumer-grade hardware such as mobile phones. The approach builds on neural radiance fields (NeRFs) to represent objects photorealistically, including complex volumetric effects such as translucency and fur, which increases realism in interactive environments.

Figure 1: A streamlined method for object recording, 3D reconstruction using MLPs, and shader code transpilation for real-time rendering.

Object Capture and 3D Reconstruction

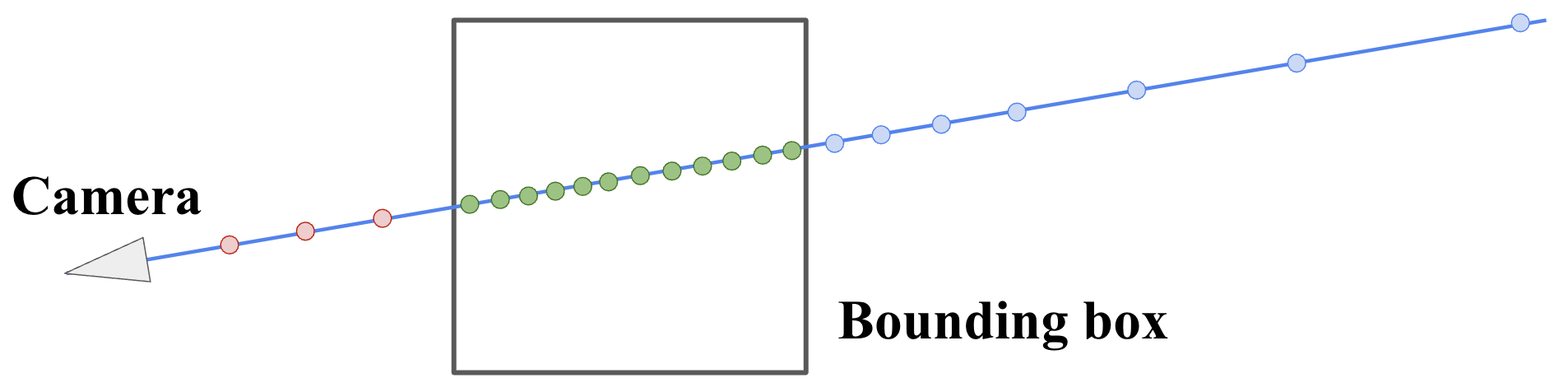

The process begins with capturing images or video using a standard mobile device. The capture workflow lets the user adjust camera exposure and other settings, and is followed by an optimization phase that fits a two-layer volumetric representation. This representation models foreground and background separately, which sharpens object boundaries and preserves translucent effects without requiring specialized setups such as green screens.
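The idea of separate foreground and background layers can be illustrated with standard volume rendering quadrature. The sketch below is a minimal example, not the paper's formulation: it assumes each layer is sampled independently along a ray and that the background is blended in wherever the foreground leaves light unabsorbed. The function names `composite_ray` and `composite_two_layers` are hypothetical.

```python
import math

def composite_ray(densities, colors, deltas):
    """Volume rendering quadrature along one ray.

    densities: per-sample density values (sigma)
    colors:    per-sample RGB triples
    deltas:    per-sample step lengths
    Returns (rgb, accumulated_alpha).
    """
    transmittance = 1.0
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha           # blending weight of the sample
        rgb = [acc + weight * c for acc, c in zip(rgb, color)]
        transmittance *= 1.0 - alpha             # light remaining after the segment
    return rgb, 1.0 - transmittance

def composite_two_layers(foreground, background):
    """Blend a foreground layer over a background layer (assumed scheme)."""
    fg_rgb, fg_alpha = composite_ray(*foreground)
    bg_rgb, _ = composite_ray(*background)
    # The background only contributes where the foreground is not opaque.
    return [f + (1.0 - fg_alpha) * b for f, b in zip(fg_rgb, bg_rgb)]

# An empty foreground lets the opaque blue background through unchanged.
empty_fg = ([0.0, 0.0], [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]], [0.1, 0.1])
blue_bg = ([1e6], [[0.0, 0.0, 1.0]], [1.0])
pixel = composite_two_layers(empty_fg, blue_bg)
```

Because the foreground's accumulated alpha is a continuous value, semi-transparent regions blend smoothly with whatever lies behind them, which is one plausible reason a layered model helps with translucent boundaries.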

Real-time Rendering Optimization

To address the computational cost of rendering neural radiance fields in real time, the paper proposes three techniques:

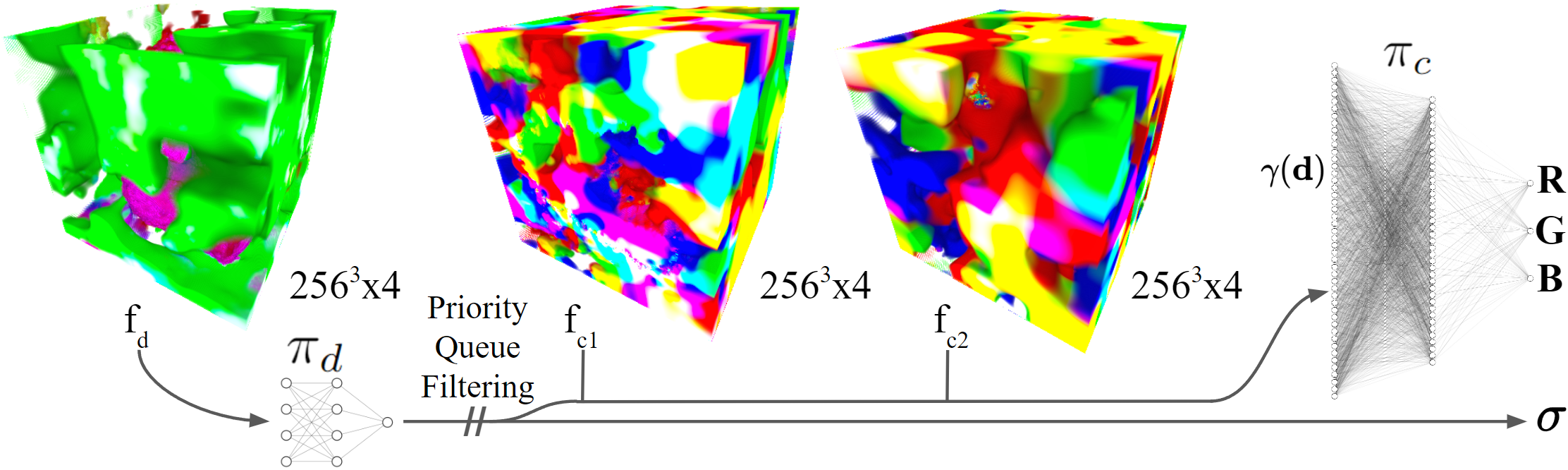

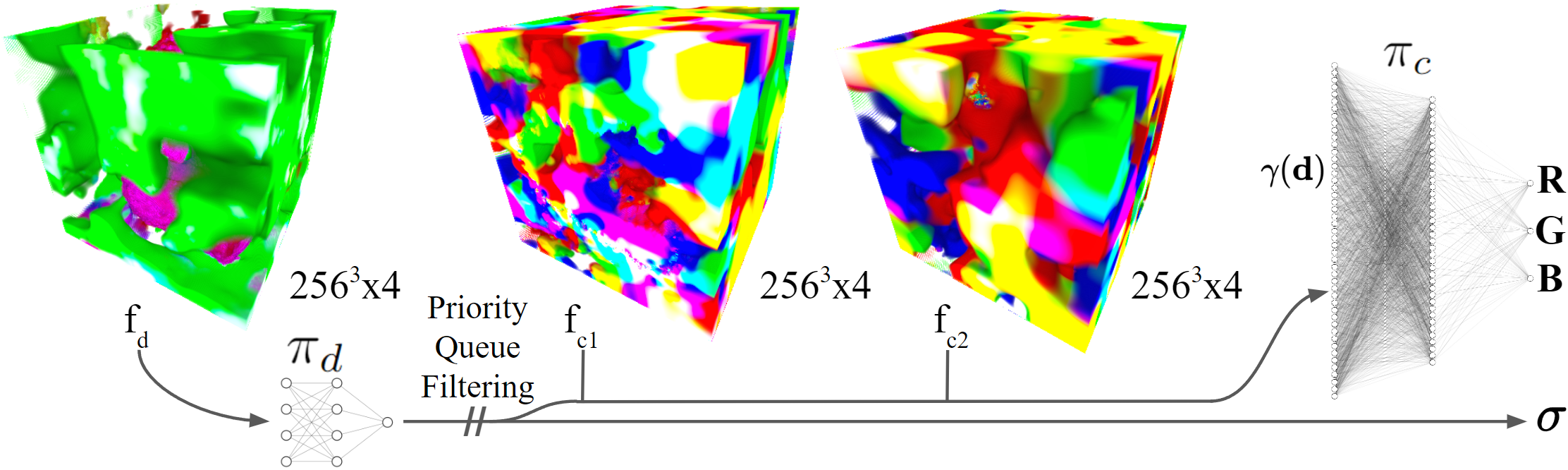

- Hardware-Conscious Model Design: Uses GPU texture reads and MLPs to predict density and color efficiently, balancing memory usage against computational cost.

- Density-Guided Sample Filtering: Prioritizes samples with significant blending weights, decreasing computational costs while maintaining visual fidelity.

- Shader Code Transpilation: Translates model configurations into optimized GLSL and HLSL shader code, facilitating integration across diverse platforms.
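To make the transpilation idea concrete, the sketch below emits GLSL source for one fully connected layer with its weights baked in as constants. It is an illustration under stated assumptions, not the paper's transpiler: the function `transpile_dense_layer`, the generated function signature, and the choice of ReLU are all hypothetical, and an HLSL backend would need its own syntax.

```python
def transpile_dense_layer(name, weights, biases, activation="relu"):
    """Emit GLSL source computing activation(W x + b) for a fixed layer.

    weights: list of rows, shape (out_dim, in_dim)
    biases:  list of length out_dim
    """
    out_dim, in_dim = len(weights), len(weights[0])
    lines = [f"void {name}(in float x[{in_dim}], out float y[{out_dim}]) {{"]
    for o, (row, bias) in enumerate(zip(weights, biases)):
        # Unroll the dot product so the shader compiler sees plain arithmetic.
        terms = " + ".join(f"{w:.6f} * x[{i}]" for i, w in enumerate(row))
        expr = f"{terms} + {bias:.6f}"
        if activation == "relu":
            expr = f"max({expr}, 0.0)"
        lines.append(f"    y[{o}] = {expr};")
    lines.append("}")
    return "\n".join(lines)

# Hypothetical 2x2 layer used only for illustration.
shader = transpile_dense_layer(
    "layer0",
    weights=[[1.0, -0.5], [0.25, 2.0]],
    biases=[0.0, 0.1],
)
print(shader)
```

Baking weights into the shader text avoids a separate weight upload path at the cost of recompiling the shader whenever the model changes; whether the paper makes the same trade-off is not stated in this summary.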

Figure 2: Execution graph illustrating efficient texture reads and priority filtering in rendering processes.
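Density-guided filtering can be sketched as a two-pass loop: compute cheap blending weights from density first, then run the expensive color evaluation only for samples whose weight is significant. The example below is a minimal sketch assuming a fixed weight threshold; the names `blending_weights`, `render_filtered`, `color_fn`, and the threshold value are hypothetical and are not taken from the paper.

```python
import math

def blending_weights(densities, deltas):
    """Per-sample weights w_i = T_i * alpha_i from density alone."""
    weights = []
    transmittance = 1.0
    for sigma, delta in zip(densities, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights

def render_filtered(densities, deltas, positions, color_fn, threshold=0.01):
    """Accumulate color only for samples whose weight reaches the threshold.

    color_fn stands in for the costly color network.
    Returns (rgb, number_of_color_evaluations).
    """
    weights = blending_weights(densities, deltas)
    rgb = [0.0, 0.0, 0.0]
    evaluated = 0
    for weight, position in zip(weights, positions):
        if weight < threshold:
            continue  # negligible contribution: skip the color evaluation
        evaluated += 1
        color = color_fn(position)
        rgb = [acc + weight * c for acc, c in zip(rgb, color)]
    return rgb, evaluated

# Four samples: empty space, near-empty space, then two dense samples.
densities = [0.0, 0.001, 50.0, 50.0]
deltas = [0.1, 0.1, 0.1, 0.1]
positions = [0.0, 0.1, 0.2, 0.3]
rgb, evaluated = render_filtered(
    densities, deltas, positions, color_fn=lambda p: (1.0, 0.5, 0.0)
)
```

In this toy ray only the first dense sample passes the threshold: the samples before it have almost no opacity, and the sample behind it is nearly fully occluded, so three of the four color evaluations are skipped.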

Integration and Practical Applications

Once integrated into a game engine, neural assets take part in standard engine features such as physics-based collision detection and shadow mapping. Objects rendered from the neural representation remain compatible with existing engine logic, which allows realistic interactions such as hand-object collisions in VR.

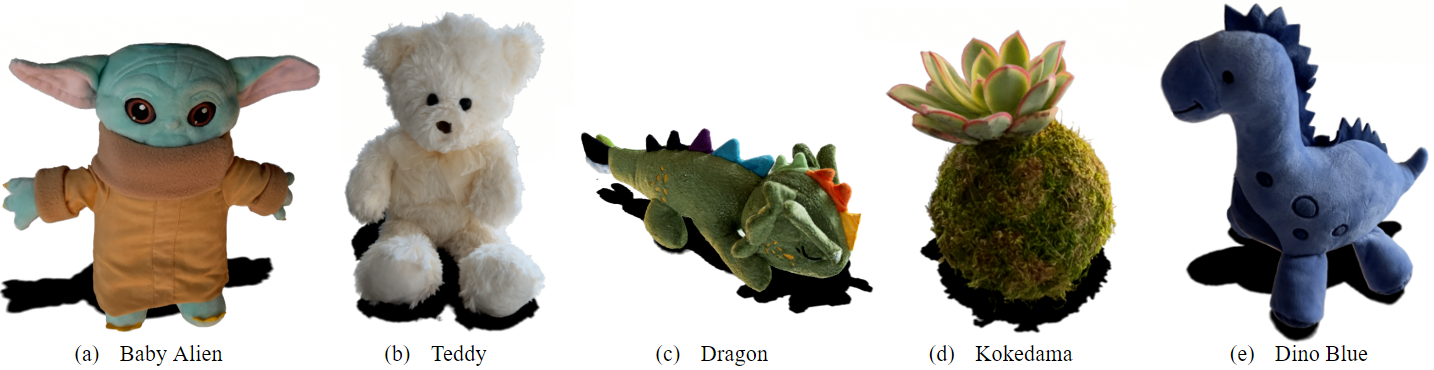

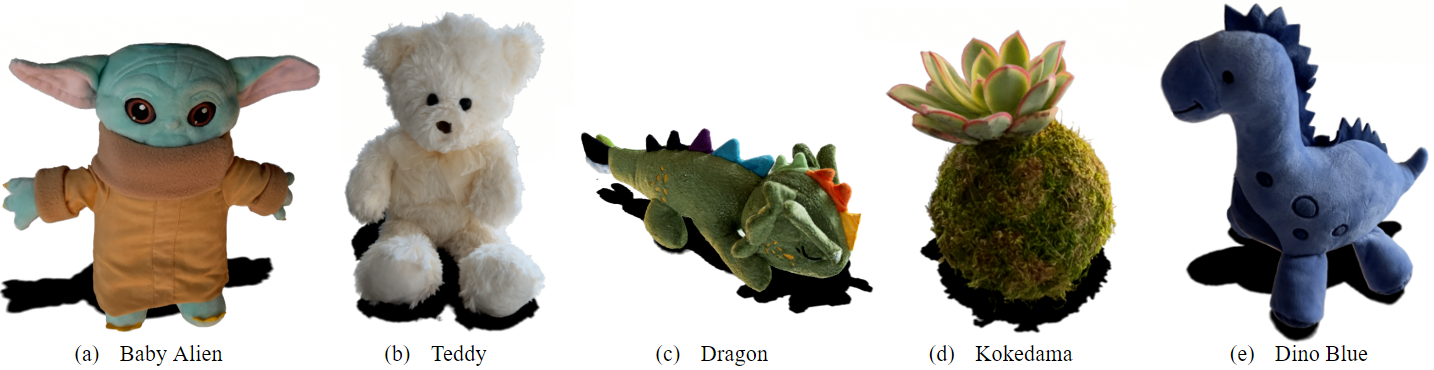

Figure 3: Challenging test objects showcasing diverse material applications and viewpoint-dependent effects using mobile recordings.

Results

The paper reports improvements in rendering speed and quality over existing methods, including ReLU-Fields and KiloNeRF, and achieves competitive frame rates in real-world applications. Rendering quality remains high in stereoscopic settings, which extends the approach to augmented and virtual reality.

Figure 4: Comparisons of reconstruction quality with other state-of-the-art methods based on CO3D dataset evaluations.

Limitations and Future Directions

Despite the method's high performance, its feature grids require more memory than mesh-based representations. Suggested future directions include feature compression and deformation modeling, aimed at improving interactive realism and object editability.
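A back-of-the-envelope calculation shows why grid-based features can outweigh a mesh. Every number below is a hypothetical assumption chosen for illustration (a dense 256-cubed grid, 8 one-byte channels, and a mesh with 50,000 vertices); none of these figures come from the paper, and the paper's actual grid layout may differ.

```python
def dense_grid_bytes(resolution, channels, bytes_per_channel=1):
    """Memory of a dense cubic feature grid (assumed layout)."""
    return resolution ** 3 * channels * bytes_per_channel

def mesh_bytes(num_vertices, num_triangles, bytes_per_vertex=32, bytes_per_index=4):
    """Memory of an indexed triangle mesh (assumed vertex format)."""
    return num_vertices * bytes_per_vertex + num_triangles * 3 * bytes_per_index

grid = dense_grid_bytes(resolution=256, channels=8)            # hypothetical grid
mesh = mesh_bytes(num_vertices=50_000, num_triangles=100_000)  # hypothetical mesh

print(f"grid: {grid / 2**20:.1f} MiB, mesh: {mesh / 2**20:.1f} MiB")
```

Grid memory grows with the cube of the resolution, so doubling the resolution multiplies the footprint by eight, which is why feature compression is a natural direction for follow-up work.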

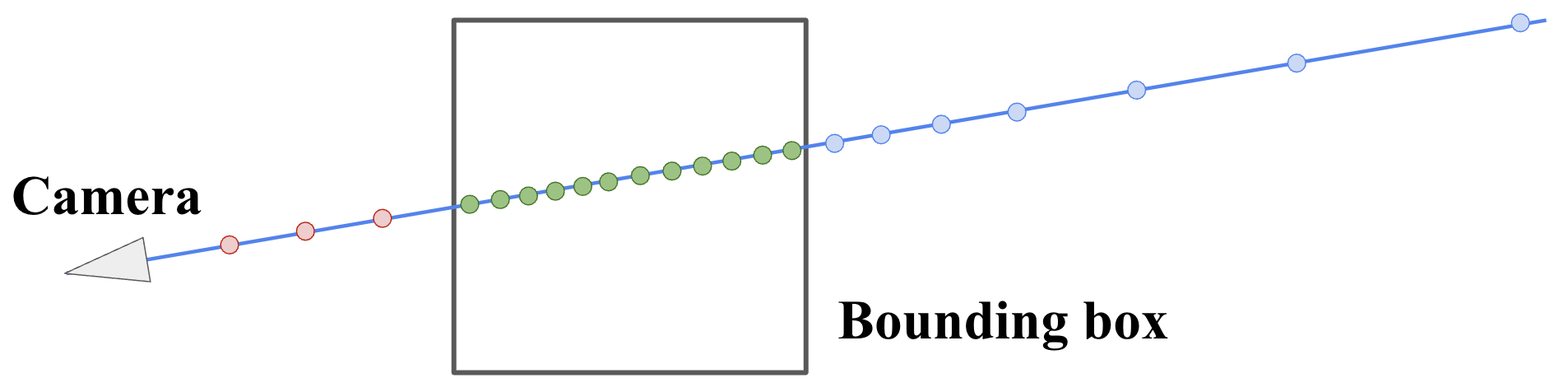

Figure 5: Adaptive point sampling differentiating sample locations for effective rendering.

Conclusion

Neural Assets connects real-world object capture with interactive virtual experiences. By combining high-fidelity object reconstruction with efficient rendering, it lays groundwork for further work on consumer-grade immersive technologies.

Figure 6: Hand interactions demonstrating practical neural asset integration in virtual environments.

Overall, the paper positions neural assets as a practical tool for creating realistic environments in gaming and simulation contexts.