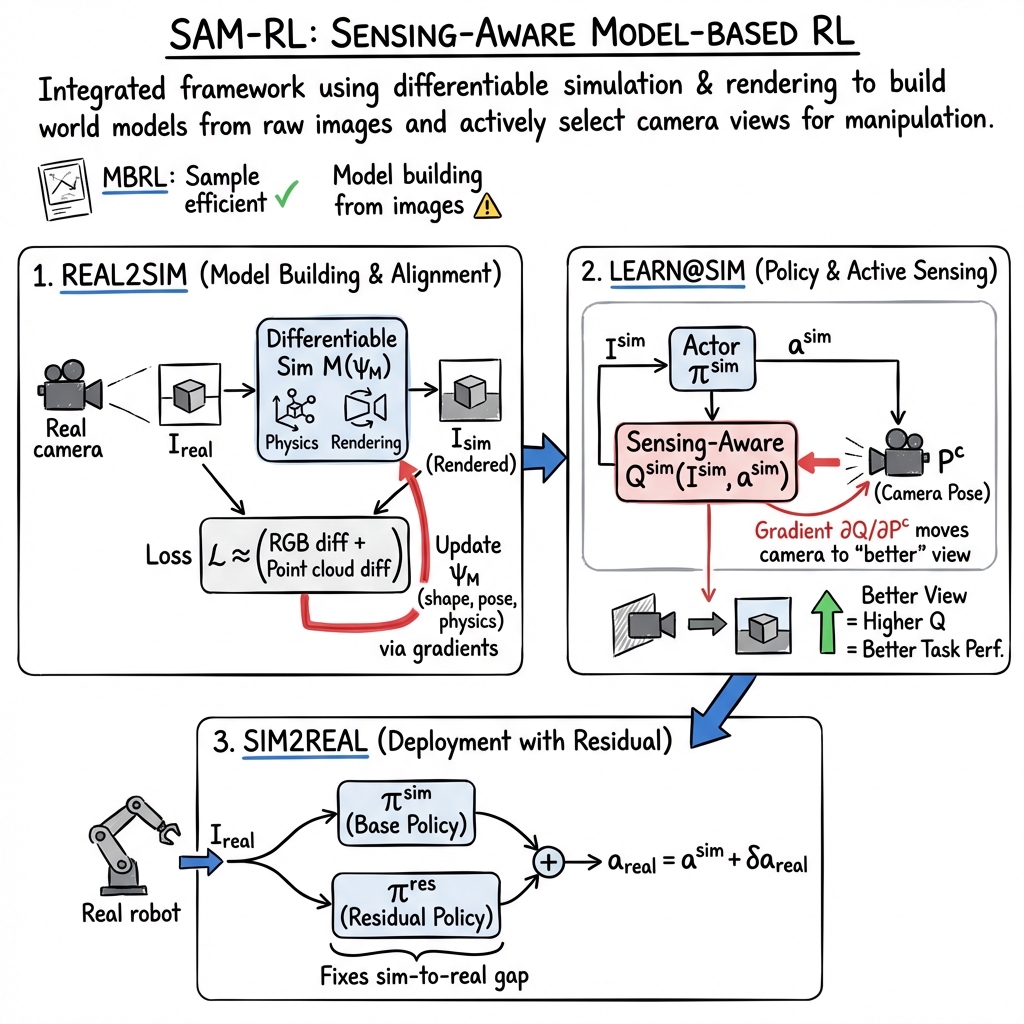

SAM-RL: Sensing-Aware Model-Based Reinforcement Learning via Differentiable Physics-Based Simulation and Rendering

Abstract: Model-based reinforcement learning (MBRL) is recognized as having the potential to be significantly more sample-efficient than model-free RL. How an accurate model can be developed automatically and efficiently from raw sensory inputs (such as images), especially for complex environments and tasks, is a challenging problem that hinders the broad application of MBRL in the real world. In this work, we propose a sensing-aware model-based reinforcement learning system called SAM-RL. Leveraging differentiable physics-based simulation and rendering, SAM-RL automatically updates the model by comparing rendered images with real raw images and produces the policy efficiently. With the sensing-aware learning pipeline, SAM-RL allows a robot to select an informative viewpoint to monitor the task process. We apply our framework to real-world experiments to accomplish three manipulation tasks: robotic assembly, tool manipulation, and deformable object manipulation. We demonstrate the effectiveness of SAM-RL via extensive experiments. Videos are available on our project webpage at https://sites.google.com/view/rss-sam-rl.
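The idea of updating a model by comparing rendered images with real ones can be illustrated with a deliberately tiny sketch. This is not the SAM-RL pipeline: the "renderer" below is a hypothetical 1-D Gaussian blob, the gradient is derived by hand rather than obtained from a differentiable simulator, and the names `render`, `loss_and_grad`, and `fit_pose` are assumptions made for illustration only.

```python
import math

def render(x, width=32, sigma=3.0):
    """Toy differentiable 'renderer': a 1-D image of a Gaussian blob centred at x."""
    return [math.exp(-((u - x) ** 2) / (2 * sigma ** 2)) for u in range(width)]

def loss_and_grad(x, observed, sigma=3.0):
    """Squared pixel error between rendered and observed images, plus d(loss)/dx."""
    rendered = render(x, len(observed), sigma)
    loss, grad = 0.0, 0.0
    for u, (r, o) in enumerate(zip(rendered, observed)):
        diff = r - o
        loss += diff ** 2
        # Chain rule: d(render)/dx at pixel u equals r * (u - x) / sigma^2.
        grad += 2.0 * diff * r * (u - x) / sigma ** 2
    return loss, grad

def fit_pose(observed, x_init, lr=0.5, steps=200):
    """Gradient descent on the object position so the rendering matches the observation."""
    x = x_init
    for _ in range(steps):
        _, g = loss_and_grad(x, observed)
        x -= lr * g
    return x

# Hypothetical 'real' image: the blob actually sits at position 18.
observed = render(18.0)
estimate = fit_pose(observed, x_init=14.0)
final_loss, _ = loss_and_grad(estimate, observed)
```

Starting from a wrong initial guess, the position estimate is pulled toward the value that makes the rendered image agree with the observed one. A real system would replace the hand-written gradient with automatic differentiation through the renderer and simulator.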

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The following points summarize what remains missing, uncertain, or unexplored in the paper, stated concretely to guide future research:

- Robustness to imperfect perception: the method assumes accurate instance segmentation for a single target object; the impact of segmentation errors, multi-object segmentation, cluttered backgrounds, and occlusions on model updates and policies is not evaluated.

- Photometric realism and calibration: the RGB loss does not model illumination, exposure, white balance, or camera response; methods to estimate lighting and photometric parameters (and their effect on differentiable rendering-based updates) are absent.

- Physical parameter identification scope: updates are limited to mass and inertia; crucial contact and deformation parameters (friction, damping, stiffness/compliance, restitution) are not estimated, especially important for contact-rich and deformable tasks.

- Identifiability of system parameters: updating physical parameters from a single end pose (or few observations) is underdetermined; principled approaches to identifiability, regularization, multi-trajectory estimation, and uncertainty quantification are missing.

- Deformable object modeling fidelity: the thread is modeled as rigid links connected by revolute joints; generalization to continuum elasticity or more realistic deformable models is not demonstrated, and parameter learning for deformables (e.g., stiffness) is unexplored.

- Action space limitations: policies only control 3D translation (rotations disabled, gripper actions unspecified); extension to full 6-DoF control, end-effector orientation, force/torque commands, and richer manipulators remains open.

- Camera viewpoint optimization constraints: gradient-based updates do not enforce kinematic reachability, collision avoidance, joint limits, or time/motion budgets of the camera arm; a constrained optimization/planning formulation is needed.

- NBV baseline comparison: no quantitative comparison against established NBV methods (information gain heuristics, coverage-based, learning-based) to isolate the benefit of Q-based viewpoint selection.

- Stability of actor–critic coupling: the circular dependence (actor outputs actions, Q guides camera pose that changes observations) lacks analysis of convergence, local optima, and stability under noisy gradients or non-smooth rendering/contacts.

- Viewpoint change overhead: the trade-off between moving the camera (time, energy, collision risk) and task performance is not measured; policies to limit or plan view changes across a horizon are not studied.

- Sparse reward for residual RL: residual policy is trained with binary success signals; strategies for shaping, safety-constrained exploration, and guarantees that residuals do not degrade performance under exploration are not examined.

- Baseline fairness and external validity: comparisons to TD3/SAC/Dreamer are conducted in PyBullet, with a PyBullet environment also standing in for the “real world”; equivalence of observation/action spaces, reward shaping, and transferability to physical hardware are not established.

- Sim-to-real generalization: robustness to variations in object geometry, material, texture, lighting, and sensor characteristics is not quantified; domain randomization or uncertainty-aware training strategies are not explored.

- Rendering-based loss robustness: sensitivity to sensor artifacts (missing depth, specular/transparent surfaces, motion blur, rolling shutter) and adverse lighting is untested; reliance on masked RGB and EMD depth may be brittle in practice.

- Multi-object and cluttered scenes: the pipeline focuses on a single object; scaling to multiple interacting objects, simultaneous tracking/model updates, and the effect of mutual occlusions are open problems.

- Automatic success detection: Needle-Threading success is manually judged; scalable and precise automatic success metrics and detectors for deformable tasks are needed.

- Calibration sensitivity: the effect of errors in camera intrinsics, hand–eye calibration, and robot base-to-base transforms on model accuracy, viewpoint optimization, and policy performance is not analyzed.

- Computation and real-time feasibility: end-to-end latency of differentiable simulation + rendering + camera optimization on-robot is not reported; profiling, real-time constraints, and hardware acceleration requirements remain unclear.

- Acceptance criterion and oscillations: simple “accept if Q increases” may induce jitter or oscillations; smoothing, hysteresis, or planning-based camera selection is not developed.

- Expert trajectory generation scalability: reliance on trajectory optimization in differentiable simulators may fail or be costly for complex tasks; fallback strategies, warm starts, or learning-from-demonstration integration are not investigated.

- Loss component ablation: contributions of RGB vs depth (EMD) terms to model fidelity and downstream policy performance are not quantified; alternative geometric losses (e.g., signed distance, ICP variants) are not compared.

- Dual-arm coordination safety: collision avoidance and coordination between the camera arm (Flexiv) and the manipulation arm (Franka) during dynamic viewpoint changes are not addressed.

- Failure modes and diagnostics: systematic characterization of when SAM-RL fails (e.g., severe occlusion, fast dynamics, contact discontinuities, poor textures) and diagnostic tools to recover are absent.

- Reproducibility: full details on hyperparameters, training schedules, datasets of camera views, trajectory optimizer settings, and code availability are insufficient for replication; incomplete equations and typos hinder clarity.

- Metrics beyond success rate: real-world sample efficiency (episodes, wall-clock time), robustness across seeds, and safety metrics are not reported; statistical significance and confidence intervals are missing.

- Multi-step NBV planning: viewpoint selection is greedy gradient ascent; planning sequences of views with lookahead, task-aware costs, and joint optimization of perception and action over a horizon remains open.

- Handling non-differentiable events: reliability of gradients through contact-rich dynamics (impacts, stick–slip) is unexamined; smoothing strategies or subgradient methods for discontinuities are not described.

- Environment rendering fidelity: background textures, environment geometry, and lighting are not modeled or updated; strategies for full-scene photometric/geometric alignment to reduce sim–real discrepancy are needed.

- Multi-modal sensing: integration of additional sensing modalities (force/torque, tactile, proprioception) for model update and viewpoint selection is not explored.

- POMDP formulation clarity: the paper references POMDPs but does not formally define the observation model, belief updates, or the role of active sensing within a POMDP framework; a principled formulation is lacking.
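One of the gaps above, the risk that a plain "accept if Q increases" rule causes camera jitter, can be made concrete with a small sketch. The hysteresis margin below is one possible remedy, not something the paper proposes; the function name `select_viewpoints`, the `margin` parameter, and the Q-values are all hypothetical.

```python
def select_viewpoints(q_values, margin=0.0):
    """Return, for each step, the index of the viewpoint currently accepted.

    A candidate replaces the current view only if its Q-value exceeds the
    current view's Q-value by more than `margin`. With margin=0.0 this is the
    plain 'accept if Q increases' rule; a positive margin adds hysteresis.
    """
    current = 0
    accepted = [current]
    for i in range(1, len(q_values)):
        if q_values[i] > q_values[current] + margin:
            current = i
        accepted.append(current)
    return accepted

# Hypothetical Q-values for a sequence of candidate camera poses.
q = [0.50, 0.52, 0.49, 0.53, 0.60]
greedy = select_viewpoints(q, margin=0.0)
damped = select_viewpoints(q, margin=0.05)
```

With these made-up numbers, the greedy rule moves the camera three times, while the margin-based rule moves it once, when the improvement is large. Choosing the margin trades responsiveness against motion cost, which is the trade-off the list notes as unmeasured.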
