- The paper demonstrates that combining normal and shear force data in a CNN improves gesture classification accuracy from 66% to 74%.

- It details a novel 5x9 tri-axial sensor array built with PDMS, magnets, and Hall effect sensors to measure both normal and shear force at each taxel.

- The approach offers advancements in human-computer interfaces and robotic controls by reducing misclassification in various touch gestures.

Deep Learning Classification of Touch Gestures Using Distributed Normal and Shear Force

Introduction

The classification of touch gestures is a significant area of research, particularly in the context of human-computer interaction, robotics, and telepresence. This paper presents a method for classifying touch gestures by leveraging both normal and shear force components, which enhances the recognition accuracy over methods using only normal force data. The approach involves designing a flexible tactile sensing array consisting of tri-axial force sensors and employing a Convolutional Neural Network (CNN) to extract and learn spatio-temporal features from data gathered in user studies.

Sensor Design and Fabrication

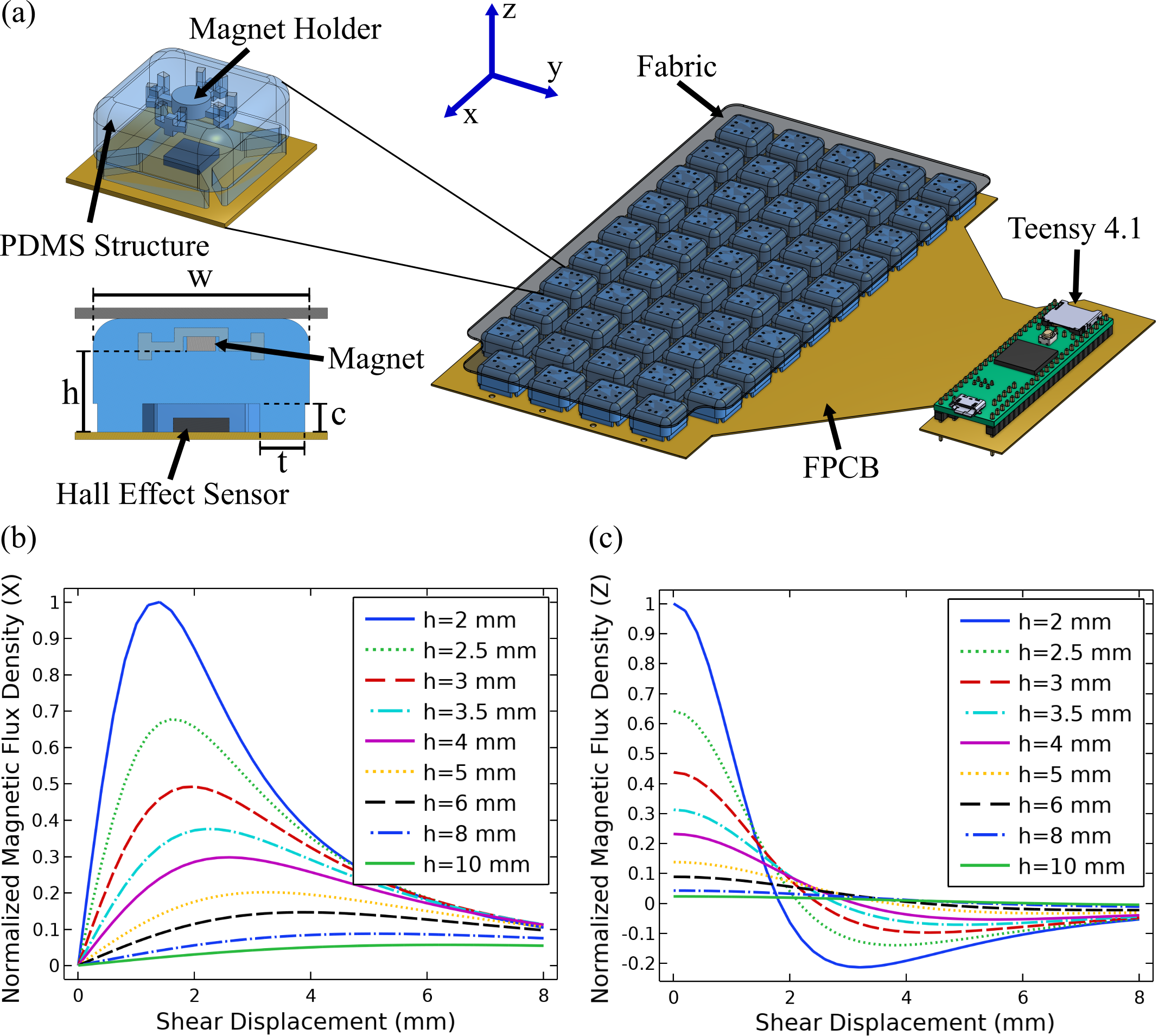

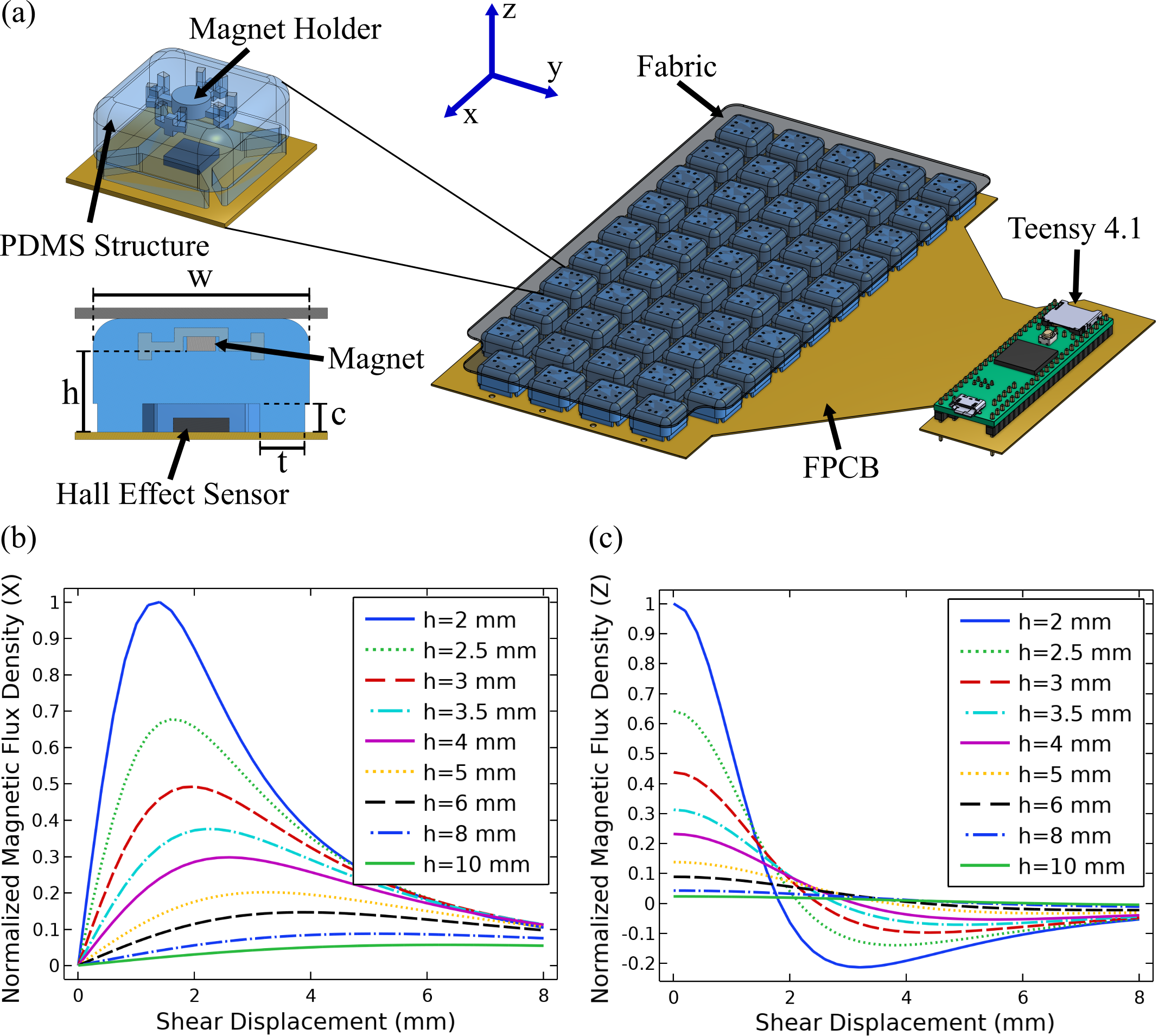

The sensing array is a 5x9 matrix of tri-axial sensors, sized to capture tactile input on a human arm or its robotic equivalent. Each taxel embeds a magnet in a PDMS structure above a Hall effect sensor. An applied force deforms the PDMS and displaces the magnet, and the resulting change in magnetic flux at the Hall effect sensor is used to measure force along three axes.

Figure 1: Sensor design and working principle. (a) A rendering of the sensor array and an individual taxel. (b) Horizontal and (c) vertical magnetic flux readings from FEA simulations when varying magnet heights and displacements.

The sensors were calibrated against a reference force/torque sensor, and the calibration showed minimal cross-coupling between axes and accurate force measurements. Fabrication involves embedding magnets in silicone molds and aligning them precisely with Hall effect sensors on a flexible PCB.
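The summary does not state which calibration model the authors used. As a minimal sketch, assuming a linear relationship between one taxel's three flux readings and the three force components reported by the reference sensor, a least-squares fit could look like the following. The function names, the linear model, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np


def fit_calibration(flux, force):
    """Fit force ~= flux @ C + b by ordinary least squares.

    flux:  (n_samples, 3) Hall effect readings (Bx, By, Bz) for one taxel
    force: (n_samples, 3) reference forces (Fx, Fy, Fz)
    Returns the 3x3 matrix C and the offset vector b.
    """
    # Append a column of ones so the fit also estimates a constant offset.
    design = np.hstack([flux, np.ones((flux.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(design, force, rcond=None)  # shape (4, 3)
    return coef[:3], coef[3]


def apply_calibration(flux, C, b):
    """Convert raw flux readings to estimated forces."""
    return flux @ C + b


# Synthetic example only: these numbers are not taken from the paper.
rng = np.random.default_rng(0)
C_true = np.array([[2.0, 0.1, 0.0],
                   [0.05, 2.0, 0.0],
                   [0.0, 0.0, 5.0]])
b_true = np.array([0.1, -0.2, 0.3])
flux = rng.normal(size=(200, 3))
force = flux @ C_true + b_true

C_hat, b_hat = fit_calibration(flux, force)
```

Under this assumed model, the off-diagonal entries of `C_hat` describe cross-coupling between axes, so small off-diagonal values correspond to the minimal cross-coupling reported above.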

Data Collection and User Study

A user study with 11 participants was conducted to gather data for 13 touch gesture classes. The gestures include strokes, taps, ticks, and pulls, among others, and each is explicitly defined so that the set covers common touch interactions.

Figure 2: User study setup for collecting touch gesture data consisting of a mannequin and the flexible sensing array. The bottom right plot shows sensor data; red circle diameters and arrows indicate normal and shear forces, respectively.

The study's design ensured the collection of diverse user-generated touch patterns, capturing the rich complexity of human gesture execution.
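The plot in Figure 2 encodes normal force as circle diameter and shear force as an arrow at each taxel. A minimal sketch of how those quantities could be derived from a single frame of readings is shown here. The channel order (Fx, Fy, Fz) and the function name are assumptions, and the example values are made up.

```python
import numpy as np

ROWS, COLS = 5, 9  # array dimensions from the sensor description


def to_plot_quantities(frame):
    """Split one (5, 9, 3) frame into per-taxel plot quantities.

    Assumes the last axis is ordered (Fx, Fy, Fz), with Fz normal to the skin.
    """
    fx, fy, fz = frame[..., 0], frame[..., 1], frame[..., 2]
    normal = np.abs(fz)              # would scale the circle diameter
    shear_mag = np.hypot(fx, fy)     # arrow length
    shear_dir = np.arctan2(fy, fx)   # arrow direction in radians
    return normal, shear_mag, shear_dir


# One taxel pressed and dragged; all other taxels are unloaded.
frame = np.zeros((ROWS, COLS, 3))
frame[2, 4] = [3.0, 4.0, 2.0]

normal, shear_mag, shear_dir = to_plot_quantities(frame)
```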

Deep Learning Model for Gesture Classification

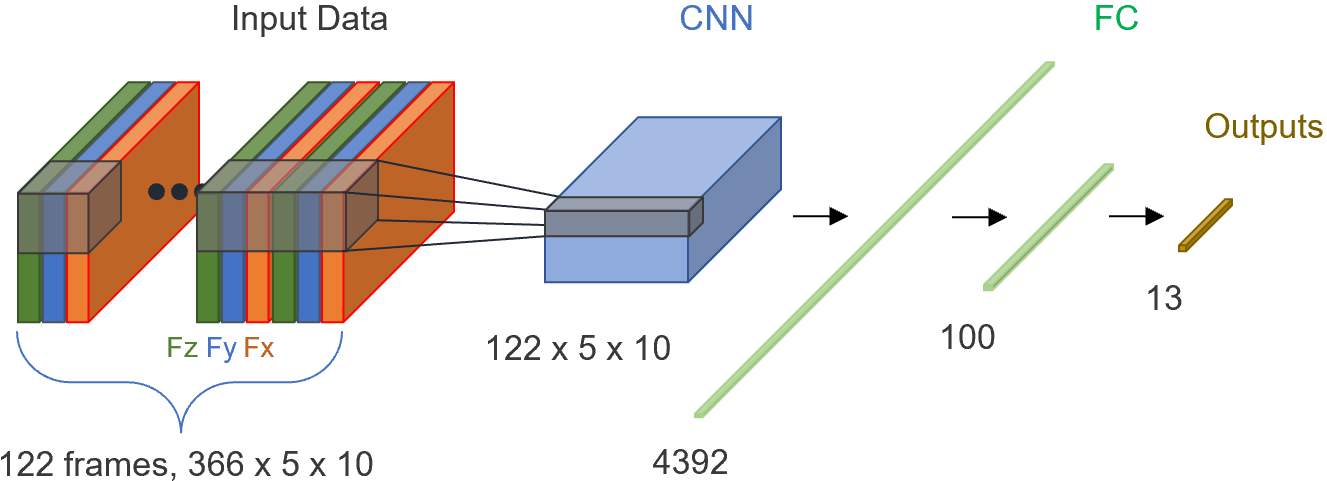

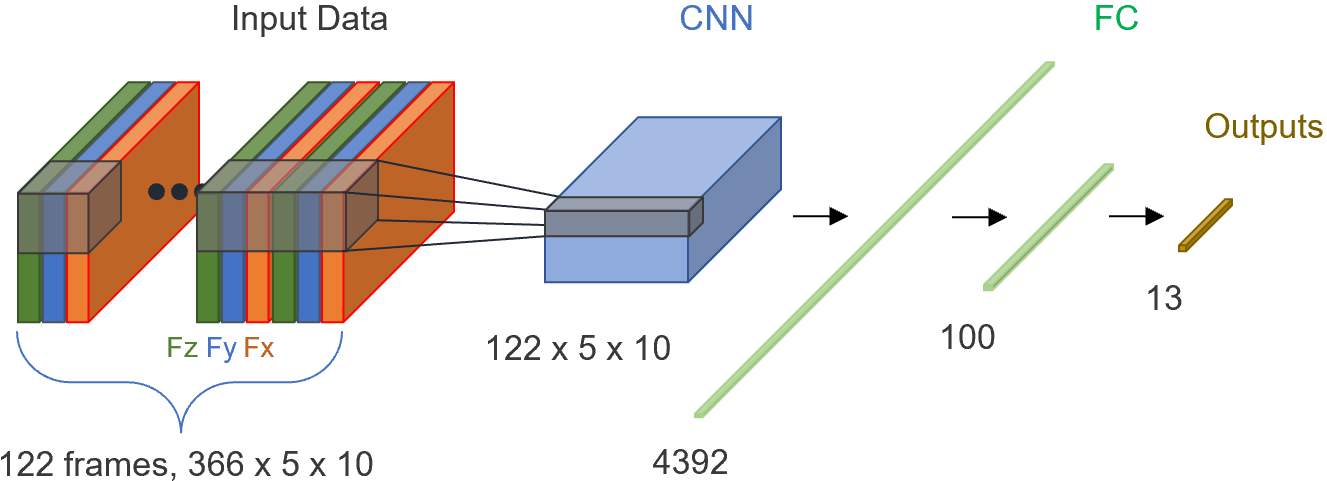

Touch gesture data were processed with a CNN that stacks the tri-axial sensor readings over time and treats the result as a 3D image. The architecture consists of convolutional layers followed by ReLU activations, dropout, and max-pooling layers, ending in fully connected layers that map the force recordings to gesture classes.

Figure 3: CNN model used for touch gesture classification. Tri-axial force data are stacked along the temporal length to be treated as a 3D image. FC stands for a fully connected layer.

Training this model on the labeled dataset from the user study showed that adding shear force data raises average classification accuracy from 66% to 74%.
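The exact tensor layout is not given in this summary. The sketch below shows one possible way to stack per-frame readings into a 3D volume and to build the normal-only input used for comparison. The channel order (Fx, Fy, Fz), the channels-first layout, the number of time steps `T`, and the function name are assumptions.

```python
import numpy as np

ROWS, COLS, AXES = 5, 9, 3
T = 40  # hypothetical number of time steps per gesture recording


def stack_frames(frames, use_shear=True):
    """Stack per-frame (5, 9, 3) readings into a (channels, T, 5, 9) volume.

    With use_shear=False only the normal component (assumed to be the
    last channel, Fz) is kept, giving a single-channel volume.
    """
    volume = np.stack(frames, axis=0)     # (T, 5, 9, 3)
    volume = np.moveaxis(volume, -1, 0)   # (3, T, 5, 9)
    if not use_shear:
        volume = volume[2:3]              # (1, T, 5, 9)
    return volume.astype(np.float32)


# Random placeholder data standing in for a recorded gesture.
rng = np.random.default_rng(0)
frames = [rng.normal(size=(ROWS, COLS, AXES)) for _ in range(T)]

full = stack_frames(frames)                           # normal + shear input
normal_only = stack_frames(frames, use_shear=False)   # normal-only input
```

Keeping both inputs in the same layout means the same network definition can be trained on either one, with only the number of input channels changing.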

Results and Analysis

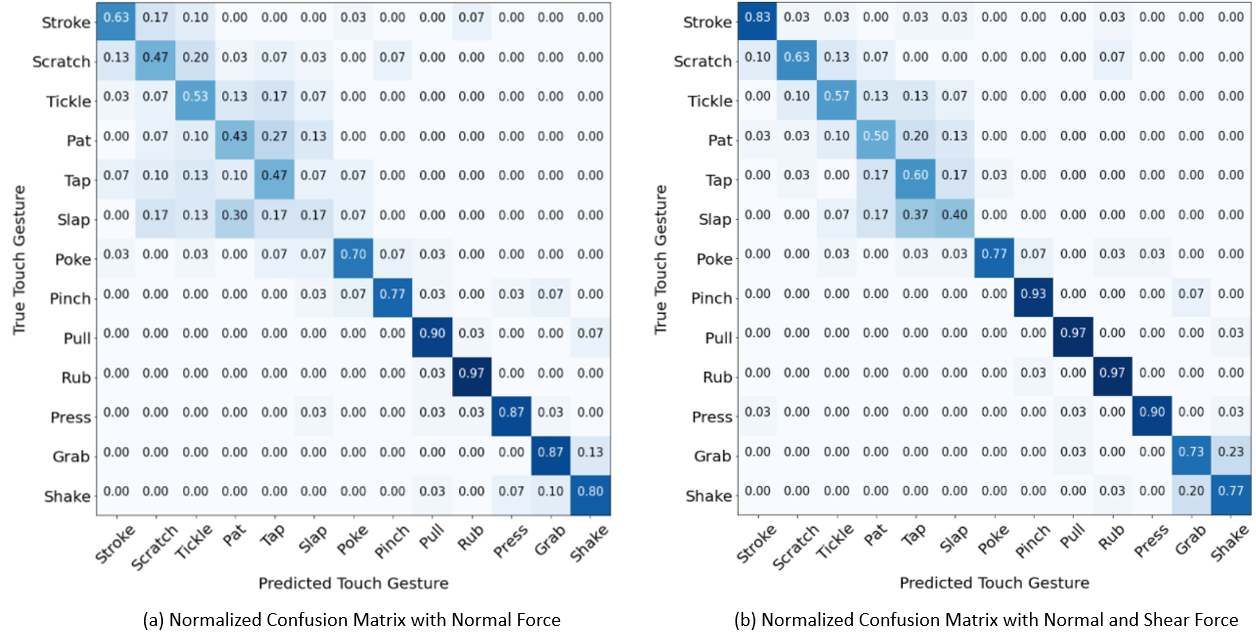

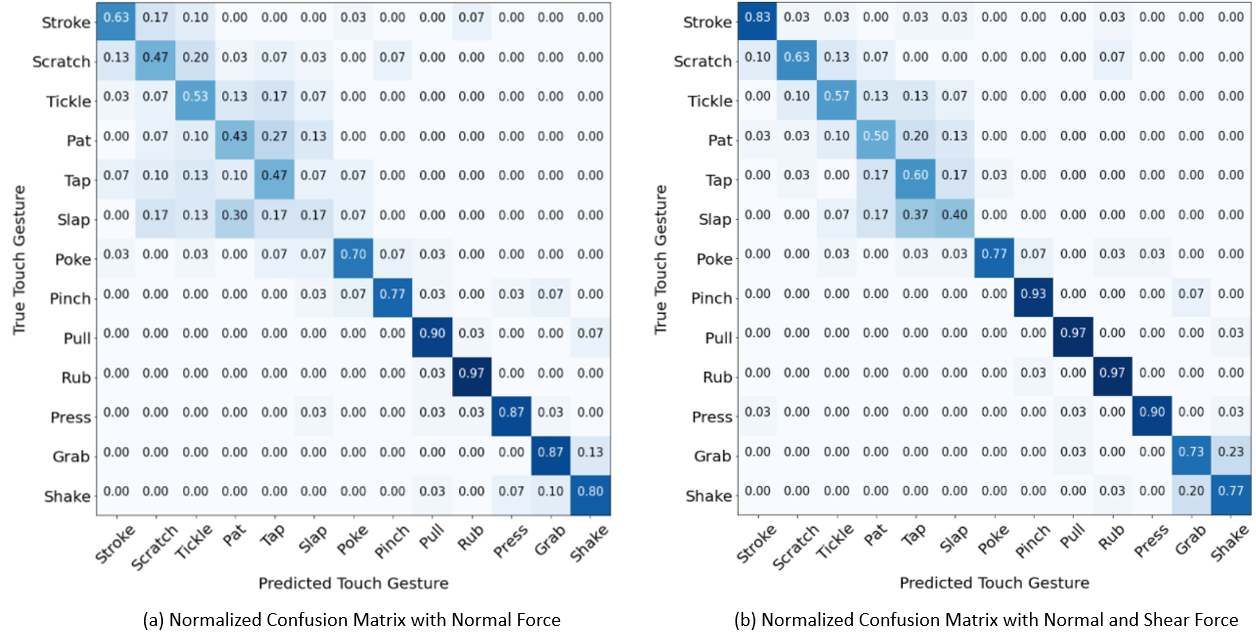

The ablative study results indicate a 74% accuracy for gesture classification when both normal and shear data are used, in contrast to 66% with normal force only. This improvement is attributed to the shear force providing additional distinguishing features for interactions that are otherwise similar in normal force distribution.

Figure 4: Results of the CNN ablative study (a) when normal force is used (average accuracy: 66%) and (b) when normal and shear force are used (average accuracy: 74%) for classification.

The results demonstrate a notable reduction in misclassification for gestures such as stroke, scratch, and tickle when shear data is included, substantiating the hypothesis that shear force data is critically informative.
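Per-gesture misclassification of this kind is usually read from a confusion matrix. As a minimal sketch, assuming that rows are true classes and that "average accuracy" means the mean of the per-class accuracies, the figures could be computed as follows. The 3x3 matrix is a toy example, not data from the paper.

```python
import numpy as np


def per_class_accuracy(cm):
    """Fraction of each true class (row) that was predicted correctly."""
    cm = np.asarray(cm, dtype=float)
    return np.diag(cm) / cm.sum(axis=1)


# Toy confusion matrix for three hypothetical gesture classes.
# Rows are true classes, columns are predicted classes.
cm = np.array([[8, 2, 0],
               [1, 7, 2],
               [0, 3, 7]])

acc = per_class_accuracy(cm)   # per-class accuracy
avg = acc.mean()               # average over classes
```

Off-diagonal entries show which pairs of gestures are confused with each other, which is where a drop after adding shear data would appear.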

Conclusion

The study successfully leverages distributed multi-axial force data and deep learning to advance touch gesture recognition. The incorporation of shear forces leads to improved accuracy, marking a step forward in developing sensitive human-computer interfaces and touch-based robotic control systems. Future iterations may explore the scaling of tactile arrays and the integration of temporal modeling techniques, such as LSTMs or Transformers, to further enhance performance and applicability in diverse interaction scenarios.