- The paper introduces ECoBA, a novel black-box attack algorithm that combines additive and erosive perturbations to target binary OCR systems.

- It shows that ECoBA needs fewer steps to cause misclassification than standalone additive or erosive perturbations and the earlier SCAR attack, across several classifier architectures.

- The study highlights critical vulnerabilities in binary image classifiers and underscores the need for robust defenses in practical OCR applications.

A Black-Box Attack on Optical Character Recognition Systems

Introduction

Adversarial machine learning has quickly grown into an important field that exposes the vulnerabilities of deep learning models. The paper "A Black-Box Attack on Optical Character Recognition Systems" (2208.14302) studies adversarial attacks on OCR systems that operate on binary images, which are widely used in applications such as license plate recognition and bank check processing. It introduces a new attack algorithm, ECoBA, that successfully fools existing binary image classifiers. The contribution is valuable both because it exposes the weaknesses of current models and because it offers an effective attack strategy.

Inefficiency of Previous Attack Methods

The paper explains why binary image classifiers are hard to attack: each pixel can take only two values, so traditional adversarial methods such as PGD and FGSM, which add small continuous perturbations, fall short. The main problem is that binarization discards these perturbations, which makes it a de facto defense against such methods. Wang et al. demonstrated this defense, achieving notable accuracy against adversarial attacks simply by binarizing the input images [binarization_deffense]. Figure 1 illustrates the effect: perturbations smaller than the binarization threshold vanish after conversion.

Figure 1: The effect of binarization on adversarial examples created by the PGD method. Perturbations in (b) are smaller than the binarization threshold, and perturbations in (c) are larger than the threshold. (d) and (e) are the binary versions of (b) and (c), respectively.
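The following toy example sketches why binarization acts as a defense. It assumes grayscale pixels in [0, 1] and a fixed threshold of 0.5; both are illustrative assumptions rather than values from the paper, and the helper names are hypothetical. A gradient-style perturbation that is too small to cross the threshold disappears after binarization, while a larger one survives.

```python
# Minimal sketch, assuming grayscale pixels in [0, 1] and a fixed threshold
# of 0.5 (illustrative assumptions, not values taken from the paper).

def binarize(image, threshold=0.5):
    """Map every grayscale pixel to 0 or 1 by thresholding."""
    return [[1 if px >= threshold else 0 for px in row] for row in image]


def add_perturbation(image, delta):
    """Add a perturbation and clip back into [0, 1], as a PGD/FGSM step would."""
    return [
        [min(1.0, max(0.0, px + d)) for px, d in zip(row, drow)]
        for row, drow in zip(image, delta)
    ]


# A tiny 3x3 "image": a vertical stroke in the middle column.
clean = [
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
]

# Small perturbation: no entry is large enough to cross the 0.5 threshold.
small = [[0.2, -0.2, 0.2]] * 3
# Large perturbation: pushes two background pixels past the threshold.
large = [[0.7, -0.1, 0.0], [0.0, -0.1, 0.7], [0.0, 0.0, 0.0]]

clean_bin = binarize(clean)
small_bin = binarize(add_perturbation(clean, small))
large_bin = binarize(add_perturbation(clean, large))

print(small_bin == clean_bin)  # True: the small perturbation is erased
print(large_bin == clean_bin)  # False: only above-threshold changes survive
```

This is why an attack on binary classifiers has to work directly with pixel flips instead of small continuous changes.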

Proposed Method: ECoBA

The core contribution of the paper is the Efficient Combinatorial Black-box Adversarial Attack (ECoBA), designed specifically to generate adversarial examples for binary image classifiers. ECoBA uses two perturbation types: additive perturbations (AP), which flip pixels in the image background, and erosive perturbations (EP), which flip pixels belonging to the characters. By combining both, ECoBA can mislead classifiers with high confidence while flipping only a controlled number of pixels, which keeps the perturbation hard to detect.
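The two perturbation types can be sketched as single-pixel flips on a binary image. This assumes a convention of 1 for character (foreground) pixels and 0 for background, as in MNIST-style digits; the function names are hypothetical and only illustrate the distinction between AP and EP, not the authors' code.

```python
# Minimal sketch, assuming a binary image stored as a list of rows where
# 1 = character (foreground) pixel and 0 = background pixel. All function
# names are hypothetical and only illustrate the two perturbation types.
from copy import deepcopy


def additive_perturbation(image, row, col):
    """AP: turn a background pixel (0) into a character pixel (1)."""
    if image[row][col] != 0:
        raise ValueError("AP can only modify background pixels")
    out = deepcopy(image)
    out[row][col] = 1
    return out


def erosive_perturbation(image, row, col):
    """EP: turn a character pixel (1) into a background pixel (0)."""
    if image[row][col] != 1:
        raise ValueError("EP can only modify character pixels")
    out = deepcopy(image)
    out[row][col] = 0
    return out


def candidate_pixels(image):
    """Split coordinates into AP candidates (background) and EP candidates (character)."""
    ap, ep = [], []
    for r, row in enumerate(image):
        for c, px in enumerate(row):
            (ep if px == 1 else ap).append((r, c))
    return ap, ep


img = [[0, 1, 0], [0, 1, 0]]
ap, ep = candidate_pixels(img)
print(ap)  # [(0, 0), (0, 2), (1, 0), (1, 2)]
print(ep)  # [(0, 1), (1, 1)]
```

Every pixel is a candidate for exactly one of the two perturbation types, so AP and EP together cover the whole image.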

The optimization flips pixels exhaustively, scores each flip with an error metric, and stores the scores in dictionaries ranked so that the most damaging flips can be selected efficiently. This keeps the number of required perturbations small, which underlines the attack's precision and effectiveness.

Figure 2: From left to right: original binary image, adversarial examples after only additive perturbations, only erosive perturbations, and final adversarial example with the proposed method.
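The ranking-and-combining procedure described above can be sketched as follows. This is written from the description in this summary, not from the authors' code, and rests on several assumptions: the black box returns class probabilities; a flip's score is the true-class probability after flipping that single pixel (lower means more damaging); and at step k the top-k pixels from the AP dictionary and the top-k from the EP dictionary are flipped together. The names `flip`, `rank_single_flips`, `combined_attack`, and the toy template classifier are hypothetical. The paper's exact error metric and step schedule may differ.

```python
import math
from copy import deepcopy


def flip(image, pixels):
    """Return a copy of a binary image with the given (row, col) pixels inverted."""
    out = deepcopy(image)
    for r, c in pixels:
        out[r][c] = 1 - out[r][c]
    return out


def rank_single_flips(image, true_label, query):
    """Flip each pixel on its own, query the black box once per flip, and rank
    pixels by the resulting true-class probability (lower = more damaging).
    Background pixels go into the AP dictionary, character pixels into EP."""
    ap_scores, ep_scores = {}, {}
    for r, row in enumerate(image):
        for c, px in enumerate(row):
            prob = query(flip(image, [(r, c)]))[true_label]
            (ep_scores if px == 1 else ap_scores)[(r, c)] = prob
    ap_ranked = sorted(ap_scores, key=ap_scores.get)
    ep_ranked = sorted(ep_scores, key=ep_scores.get)
    return ap_ranked, ep_ranked


def combined_attack(image, true_label, query, max_k):
    """Flip the top-k AP pixels and top-k EP pixels together, increasing k
    until the predicted label changes. Returns (adversarial_image, k), or
    (None, None) if no k up to max_k succeeds."""
    ap_ranked, ep_ranked = rank_single_flips(image, true_label, query)
    for k in range(1, max_k + 1):
        candidate = flip(image, ap_ranked[:k] + ep_ranked[:k])
        probs = query(candidate)
        if probs.index(max(probs)) != true_label:
            return candidate, k
    return None, None


# Hypothetical toy black box: two 3x3 templates, scored by matching pixels.
TEMPLATES = [
    [[0, 1, 0], [0, 1, 0], [0, 1, 0]],  # class 0: vertical bar
    [[0, 0, 0], [1, 1, 1], [0, 0, 0]],  # class 1: horizontal bar
]


def toy_query(image):
    """Return softmax probabilities over classes; only outputs are exposed."""
    scores = [
        sum(a == b for ra, rb in zip(image, t) for a, b in zip(ra, rb))
        for t in TEMPLATES
    ]
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


adv, k = combined_attack(TEMPLATES[0], true_label=0, query=toy_query, max_k=3)
print(k)    # 2
print(adv)  # [[0, 0, 0], [1, 1, 1], [0, 0, 0]]
```

On the toy vertical-bar input, one AP flip plus one EP flip only produces a tie between the two classes, while two of each turn the image into the horizontal bar, so the attack succeeds at k = 2. Scoring every single-pixel flip costs one query per pixel, which stays affordable for small images such as those in MNIST and EMNIST.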

Simulations and Results

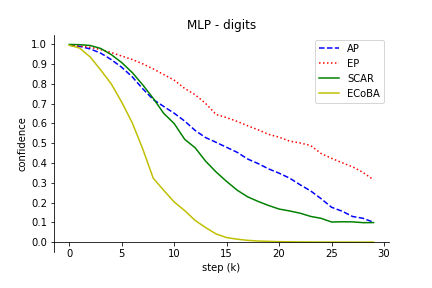

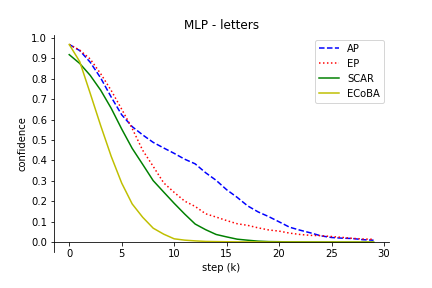

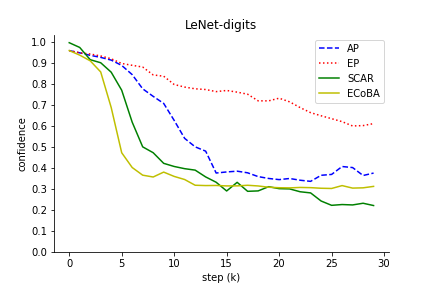

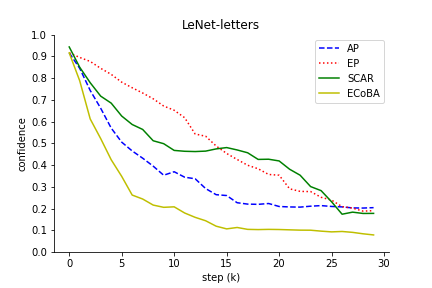

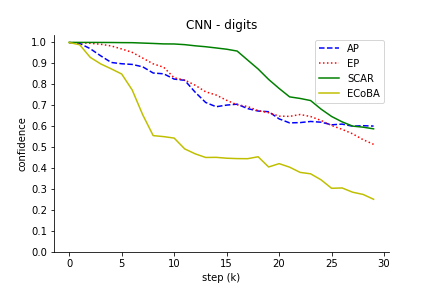

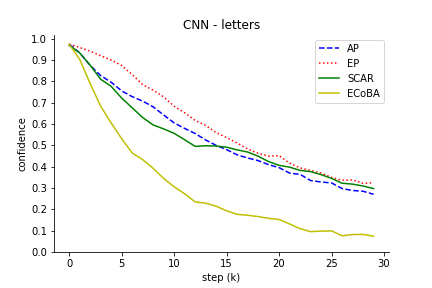

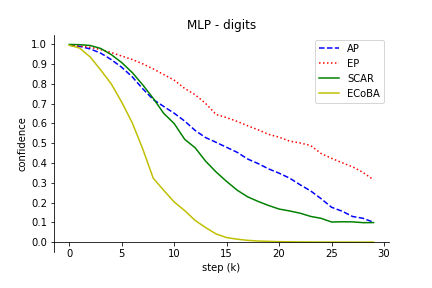

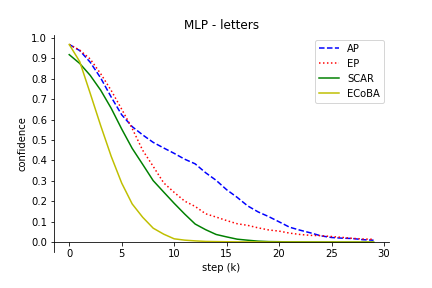

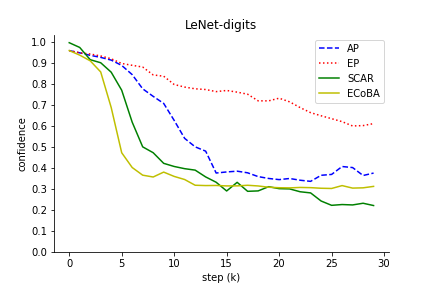

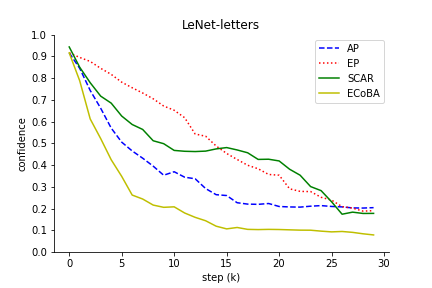

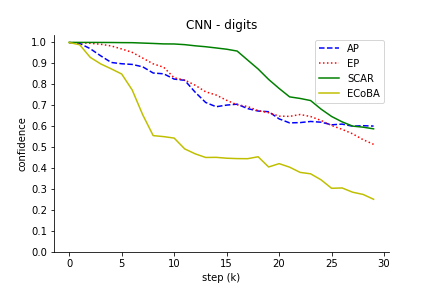

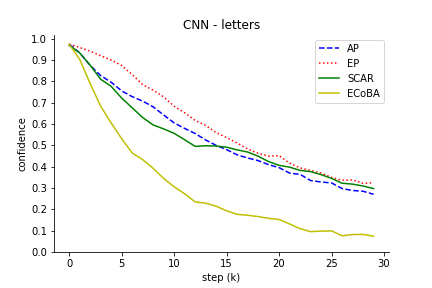

To evaluate ECoBA, the authors ran simulations on the MNIST and EMNIST datasets with three classifier architectures: MLP-2, LeNet, and CNN. ECoBA outperformed the alternatives, needing fewer steps to achieve a successful attack than standalone AP and EP attacks and earlier methods such as SCAR.

The comparison shows how effective ECoBA is and highlights the synergy between AP and EP in producing strong adversarial examples. Figure 3 demonstrates this: classification performance declines as the step size increases.

Figure 3: Classification performance on the input images as the step size increases. AP and EP are also applied individually as attacks to show the effectiveness of each.

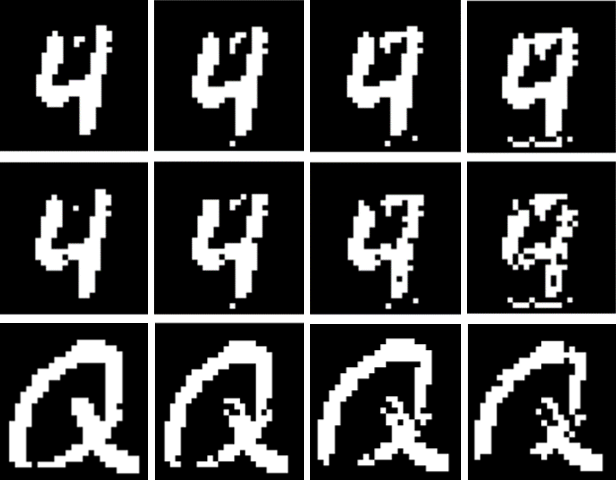

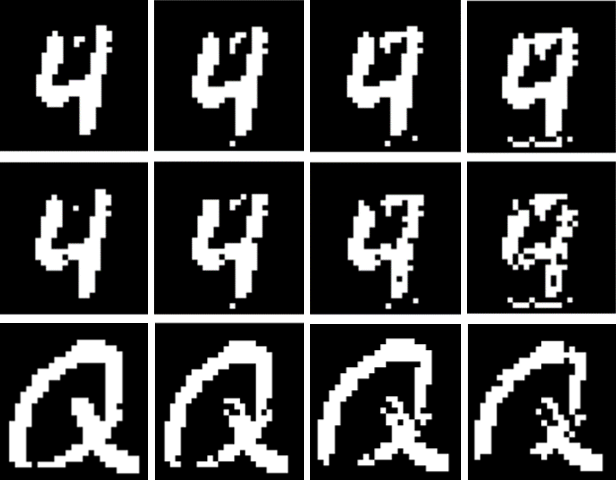

The paper also examines class interpolation: as the adversarial perturbation grows, input images are transformed into images that look like a different class (Figure 4).

Figure 4: Class interpolation with increasing k. First row: AP only; second row: ECoBA; last row: EP only.

Conclusion

The study offers substantive insights into adversarial attacks on binary images and shows that conventional methods are notably ineffective against such models. ECoBA, with its efficient perturbation strategies, significantly strengthens practical attacks against OCR systems based on binary images. The implications extend beyond basic OCR systems to any field that relies on binary image processing, and they call for reconsidering robustness in future model designs. The paper lays the groundwork for developing classifiers that resist such precise, combinatorial adversarial attacks. Future work may include defense mechanisms tailored specifically to counter the strategies introduced by ECoBA.