- The paper presents QSFormer, a unified query-support Transformer that jointly addresses feature representation and metric learning for few-shot classification.

- It combines a global sample Transformer (built on cross-attention) with a local patch Transformer (built on the Earth Mover’s Distance) to improve class discrimination.

- Experimental results on miniImageNet and tieredImageNet demonstrate significant performance gains in 1-shot and 5-shot tasks over state-of-the-art methods.

Introduction

The paper presents a novel approach to few-shot classification, the task of recognizing new classes from only a few labeled examples per class. The authors propose a Query-Support Transformer (QSFormer) that addresses feature representation and metric learning together in a single framework. It integrates a global sample Transformer (sampleFormer) and a local patch Transformer (patchFormer), so that query-support relationships are modeled at both the whole-image level and the patch level.

Methodology

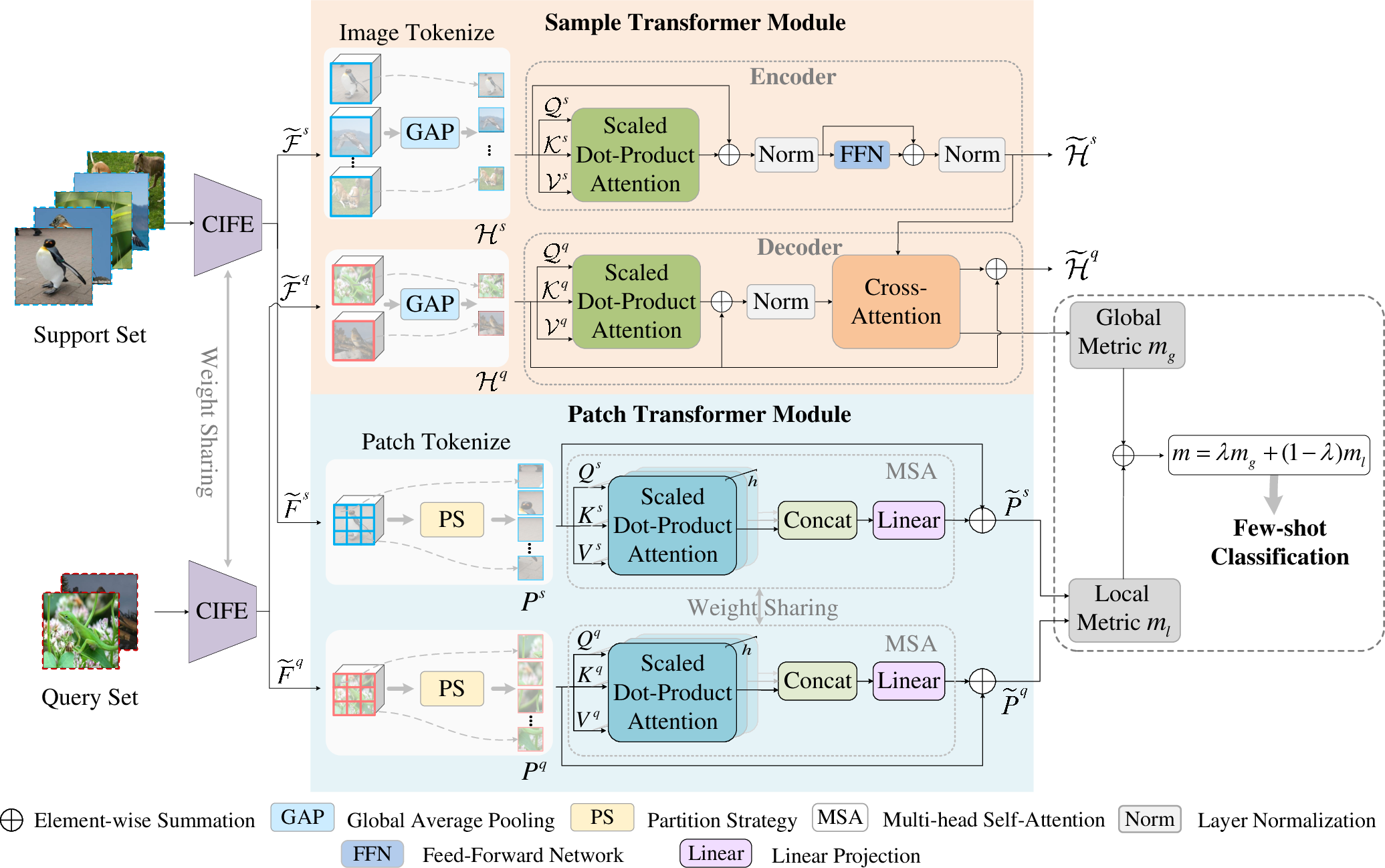

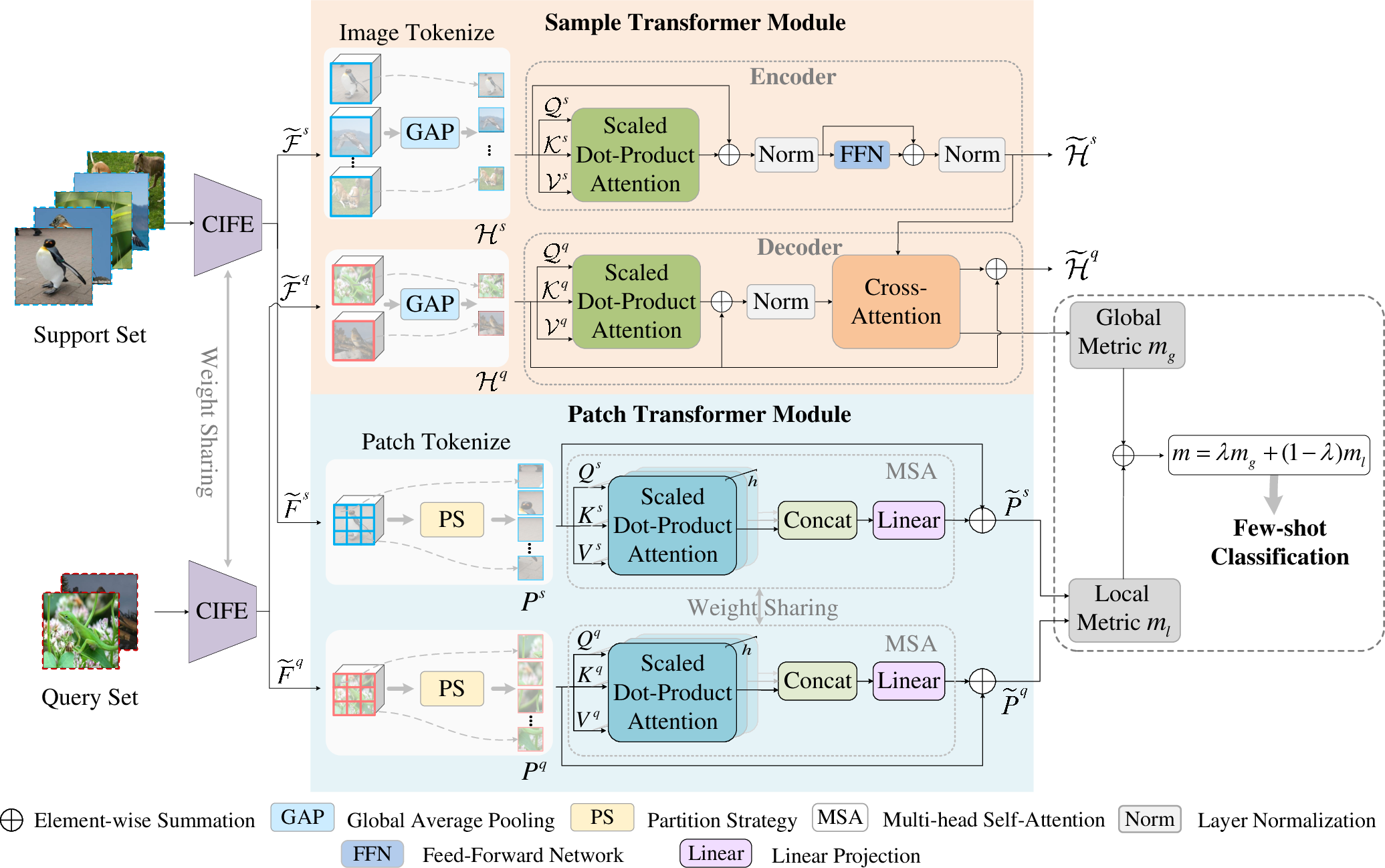

The QSFormer framework comprises several key components: the Cross-scale Interactive Feature Extractor (CIFE), the Sample Transformer Module, the Patch Transformer Module, and a metric learning and classification stage that combines the outputs of the two Transformer modules.

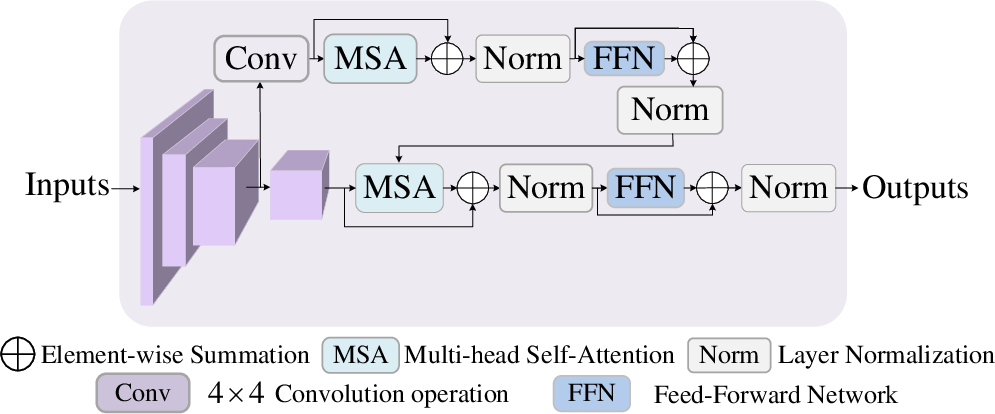

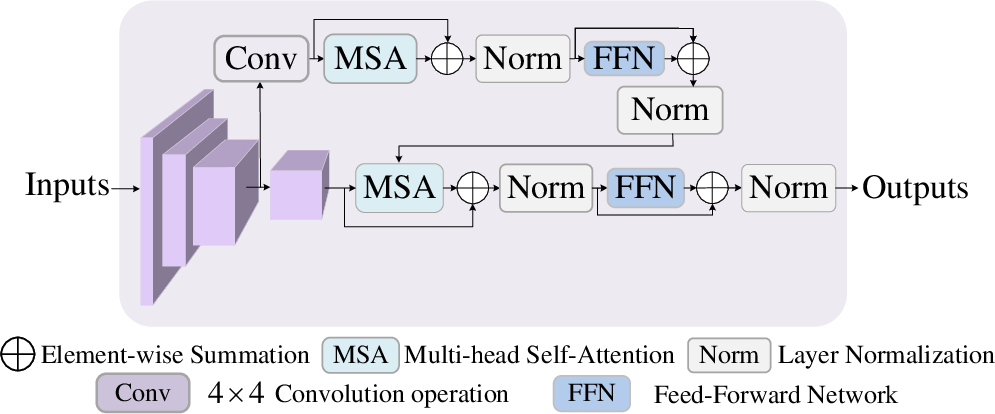

- Cross-scale Interactive Feature Extractor (CIFE): This module acts as the backbone, utilizing a ResNet architecture to generate multi-scale features. It enhances the representations by capturing cross-scale dependencies using a Transformer-based network (Figure 1).

Figure 1: Illustration of Cross-scale Interactive Feature Extractor (CIFE) for feature extraction.
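The exact CIFE layer configuration is not reproduced here. As a minimal sketch of the idea, assuming each ResNet stage's feature map is projected to a shared width, flattened into tokens, and fused by a single self-attention layer over the concatenated tokens of all scales, cross-scale interaction could look like the following. The function name `cross_scale_attention` is hypothetical, and the random projection matrices stand in for weights that would be learned in practice.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_scale_attention(feature_maps, dim, rng):
    """Fuse multi-scale feature maps with one self-attention layer over all scales.

    feature_maps: list of arrays shaped (C_i, H_i, W_i), e.g. outputs of
    different ResNet stages (hypothetical shapes for illustration).
    """
    tokens = []
    for fmap in feature_maps:
        c, h, w = fmap.shape
        # Hypothetical per-scale projection to a shared width (random, not learned).
        proj = rng.standard_normal((c, dim)) / np.sqrt(c)
        tokens.append(fmap.reshape(c, h * w).T @ proj)  # (H_i * W_i, dim)
    x = np.concatenate(tokens, axis=0)  # all scales' tokens in one sequence
    wq, wk, wv = (rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(3))
    q, k, v = x @ wq, x @ wk, x @ wv
    # Each token attends to tokens from every scale, capturing cross-scale dependencies.
    attn = softmax(q @ k.T / np.sqrt(dim))
    return x + attn @ v  # residual connection

rng = np.random.default_rng(0)
maps = [rng.standard_normal((64, 10, 10)), rng.standard_normal((128, 5, 5))]
out = cross_scale_attention(maps, dim=32, rng=rng)
print(out.shape)  # (125, 32): 100 tokens from the fine scale + 25 from the coarse scale
```

Concatenating tokens before attention is what lets a fine-scale location attend directly to coarse-scale context; a per-scale attention layer would not mix information across scales.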

- Sample Transformer Module (sampleFormer): As illustrated in Figure 2, sampleFormer uses an encoder-decoder structure to model the relationships between query and support samples. The encoder models interactions among the support samples, while the decoder refines the query representation and performs metric learning through cross-attention between query and support samples.

Figure 2: An overview of the proposed QSFormer framework, which mainly consists of the Cross-scale Interactive Feature Extractor (CIFE), the Sample Transformer Module, the Patch Transformer Module, and Metric Learning and Few-shot Classification. More details can be found in Section III.
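The encoder-decoder flow of sampleFormer can be sketched as follows. This is a simplified illustration rather than the authors' implementation: it assumes one global embedding per image, uses single-head attention with identity projections in place of learned weights, and scores query-support pairs by cosine similarity. The names `attention` and `sample_former` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    # Scaled dot-product attention (identity projections, single head).
    d = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d)) @ v

def sample_former(support, query):
    """support: (num_support, d) global embeddings; query: (num_query, d)."""
    # Encoder: support samples exchange information via self-attention.
    s = support + attention(support, support, support)
    # Decoder: each query attends to the encoded support set (cross-attention).
    q = query + attention(query, s, s)
    # Global metric: cosine similarity between refined queries and supports.
    qn = q / np.linalg.norm(q, axis=1, keepdims=True)
    sn = s / np.linalg.norm(s, axis=1, keepdims=True)
    return qn @ sn.T  # (num_query, num_support)

rng = np.random.default_rng(0)
support = rng.standard_normal((5, 16))  # e.g. 5 classes, 1 shot each
query = rng.standard_normal((3, 16))
sims = sample_former(support, query)
pred = sims.argmax(axis=1)  # most similar support sample per query
```

Letting the query attend to the encoded support set means its representation depends on the current episode's classes, which is the motivation for doing metric learning inside the decoder rather than on fixed embeddings.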

- Patch Transformer Module (patchFormer): This module complements the global sampleFormer by modeling local relationships between image patches. patchFormer computes a patch-level metric with the Earth Mover's Distance (EMD), which aligns fine-grained local features between query and support images.
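To make the EMD step concrete, here is a small sketch assuming every patch carries uniform weight 1/n and both images contribute the same number of patches. Under those assumptions the optimal transport plan is a permutation, so an exhaustive search over patch assignments gives the exact EMD. This only scales to a handful of patches; practical implementations typically use a linear-programming or Sinkhorn solver, and the paper may weight patches differently. `emd_uniform` is a hypothetical name.

```python
import itertools
import numpy as np

def emd_uniform(query_patches, support_patches):
    """EMD between two equal-size patch sets, each patch weighted 1/n.

    With uniform weights and equal counts the optimal flow is a permutation,
    so brute force over all n! assignments is exact (small n only).
    """
    qn = query_patches / np.linalg.norm(query_patches, axis=1, keepdims=True)
    sn = support_patches / np.linalg.norm(support_patches, axis=1, keepdims=True)
    cost = 1.0 - qn @ sn.T  # cosine distance between every query/support patch pair
    n = cost.shape[0]
    best = min(
        sum(cost[i, perm[i]] for i in range(n))
        for perm in itertools.permutations(range(n))
    )
    return best / n  # each matched pair moves 1/n of the mass

rng = np.random.default_rng(0)
q = rng.standard_normal((4, 8))  # 4 patches, 8-dim features
s = q[[2, 0, 3, 1]]              # the same patches in a different spatial order
other = rng.standard_normal((4, 8))
print(emd_uniform(q, s))      # ≈ 0: EMD ignores where the matching patches sit
print(emd_uniform(q, other))  # larger distance for unrelated patches
```

The reordered example illustrates why EMD suits patch matching: it compares sets of local features under the best alignment, so an object appearing at a different position is not penalized.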

Metric Learning and Few-shot Classification

QSFormer combines the global metric from sampleFormer with the local metric from patchFormer, and classifies each query image by the nearest-neighbor rule on this combined metric. The paper also introduces a contrastive loss that learns effective representations by maximizing the similarity of matching query-support pairs and minimizing the similarity of non-matching pairs.
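The combination and training objective can be sketched as below, under several assumptions: the global and local similarities are merged by a fixed weighted sum (the weight `alpha` is hypothetical), the nearest-neighbor rule is an argmax over class scores, and the contrastive loss is written as an InfoNCE-style softmax cross-entropy with a hypothetical temperature `tau`. The paper's exact loss formulation may differ.

```python
import numpy as np

def combined_scores(global_sim, local_sim, alpha=0.5):
    """Hypothetical weighted sum of global (sampleFormer) and local (patchFormer)
    similarities; both arrays are shaped (num_queries, num_classes)."""
    return alpha * global_sim + (1.0 - alpha) * local_sim

def predict(scores):
    # Nearest-neighbor rule: assign each query to its most similar class.
    return scores.argmax(axis=1)

def contrastive_loss(scores, labels, tau=0.1):
    """InfoNCE-style loss: raise the matching pair's score relative to the others."""
    logits = scores / tau
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

global_sim = np.array([[0.9, 0.1, 0.2], [0.2, 0.8, 0.1]])
local_sim = np.array([[0.7, 0.3, 0.1], [0.1, 0.6, 0.4]])
scores = combined_scores(global_sim, local_sim)
print(predict(scores))  # [0 1]
labels = np.array([0, 1])
print(contrastive_loss(scores, labels))  # small, since the true classes already score highest
```

Because the loss is a softmax over all classes, lowering a non-matching pair's similarity reduces the loss just as raising the matching pair's does, which mirrors the "pull together, push apart" behavior described above.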

Results

QSFormer is evaluated on multiple datasets, notably miniImageNet and tieredImageNet, where it outperforms several state-of-the-art methods on both 1-shot and 5-shot tasks. The improvements hold by substantial margins against both Transformer-based and metric-learning methods.

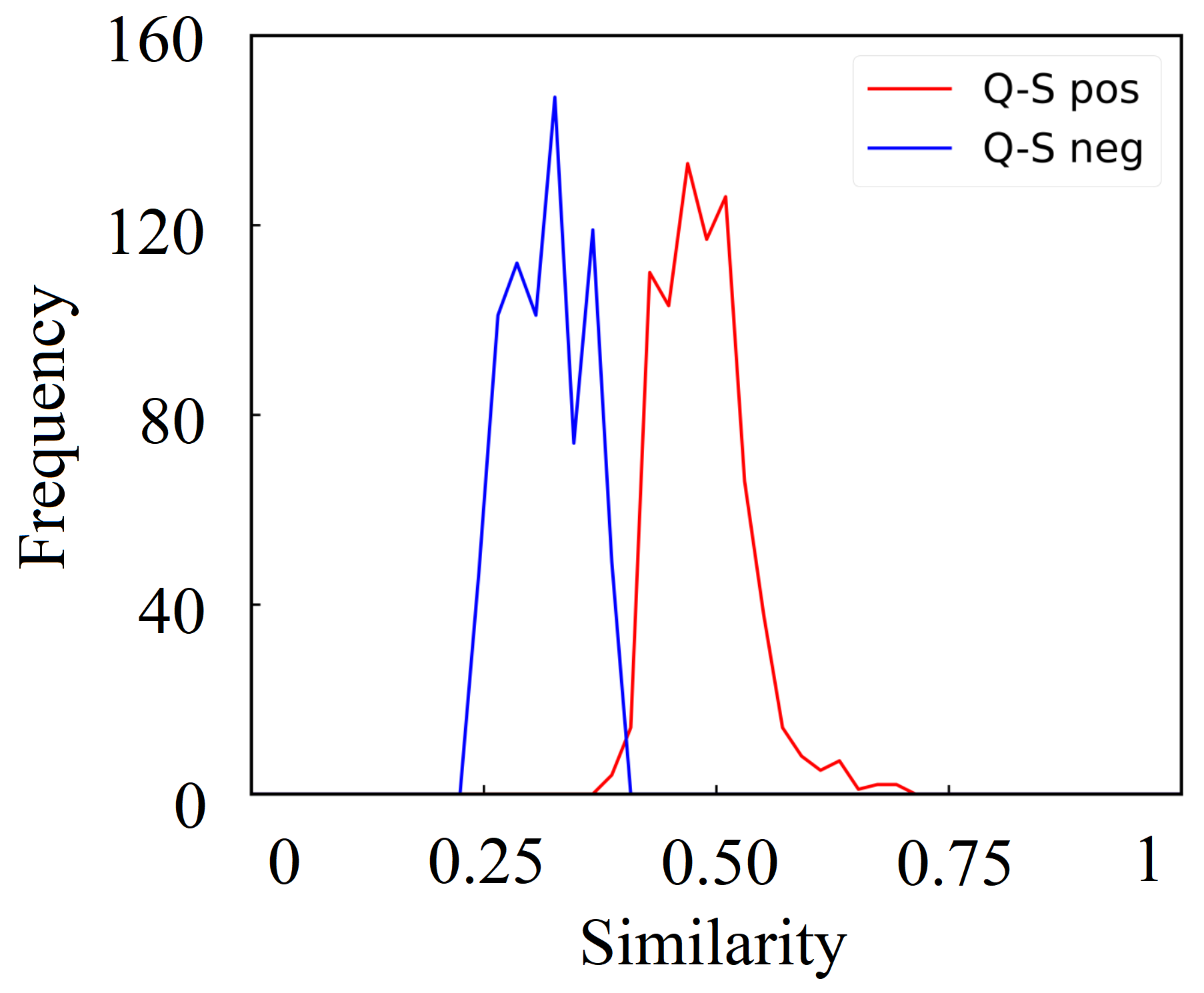

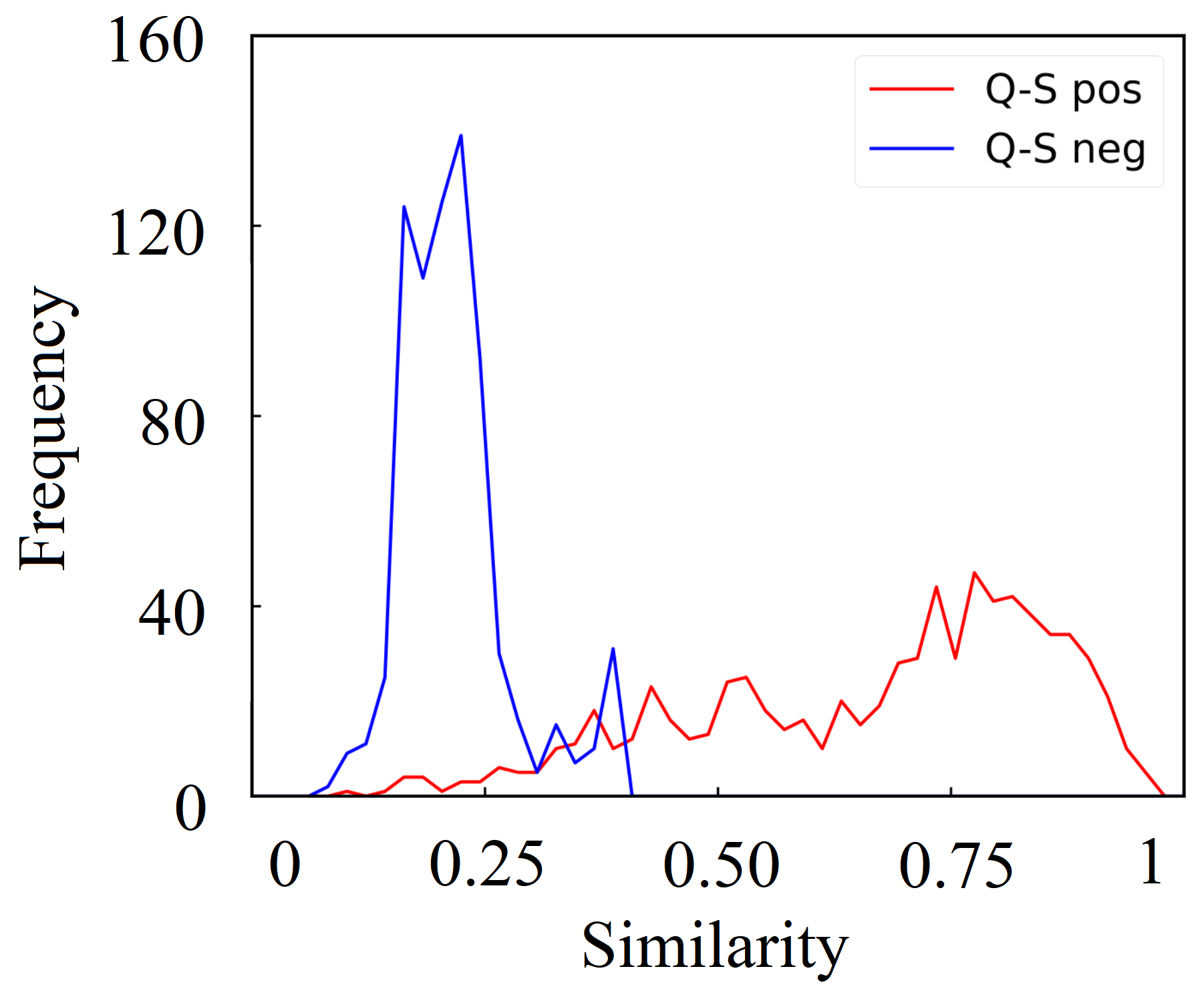

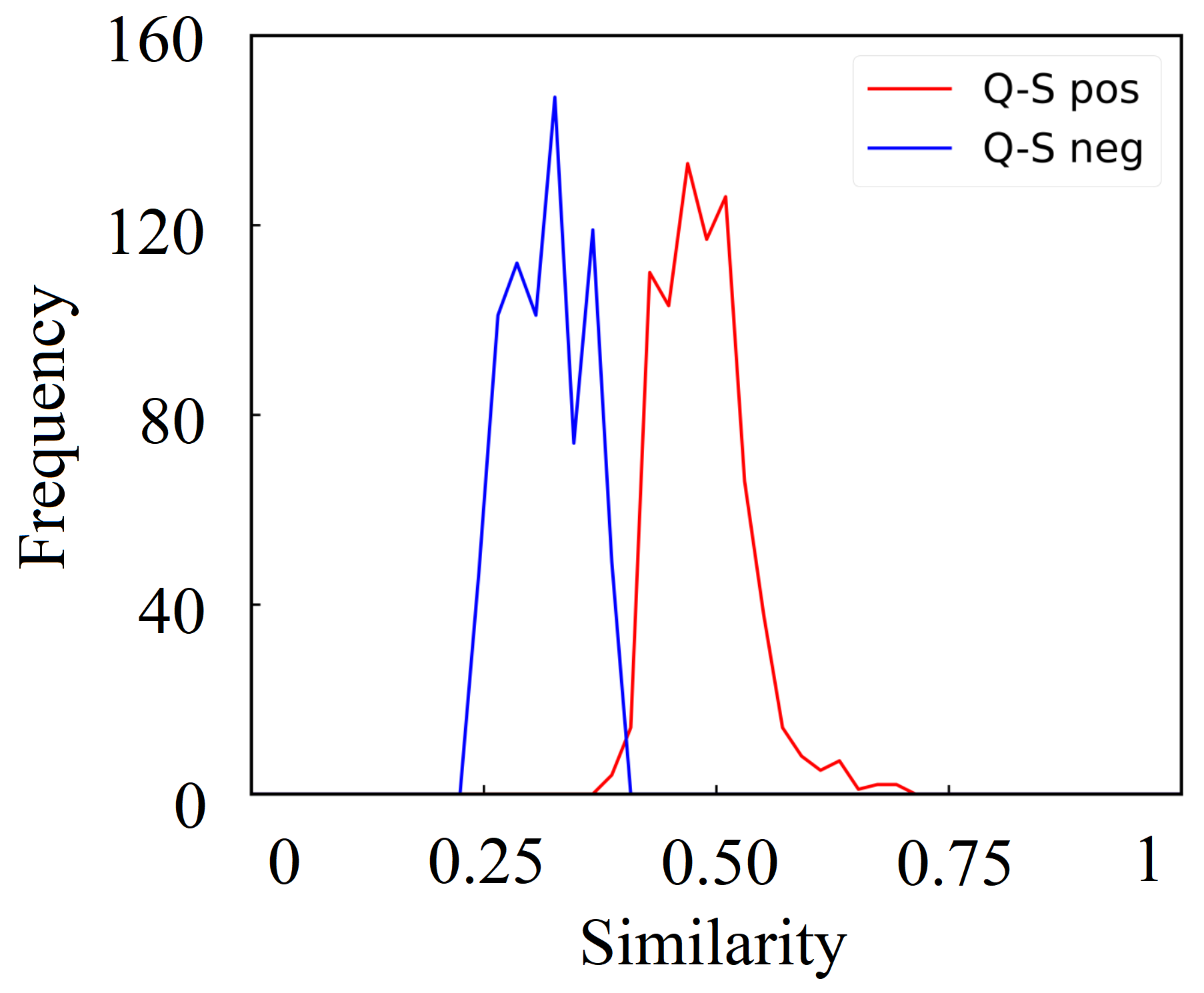

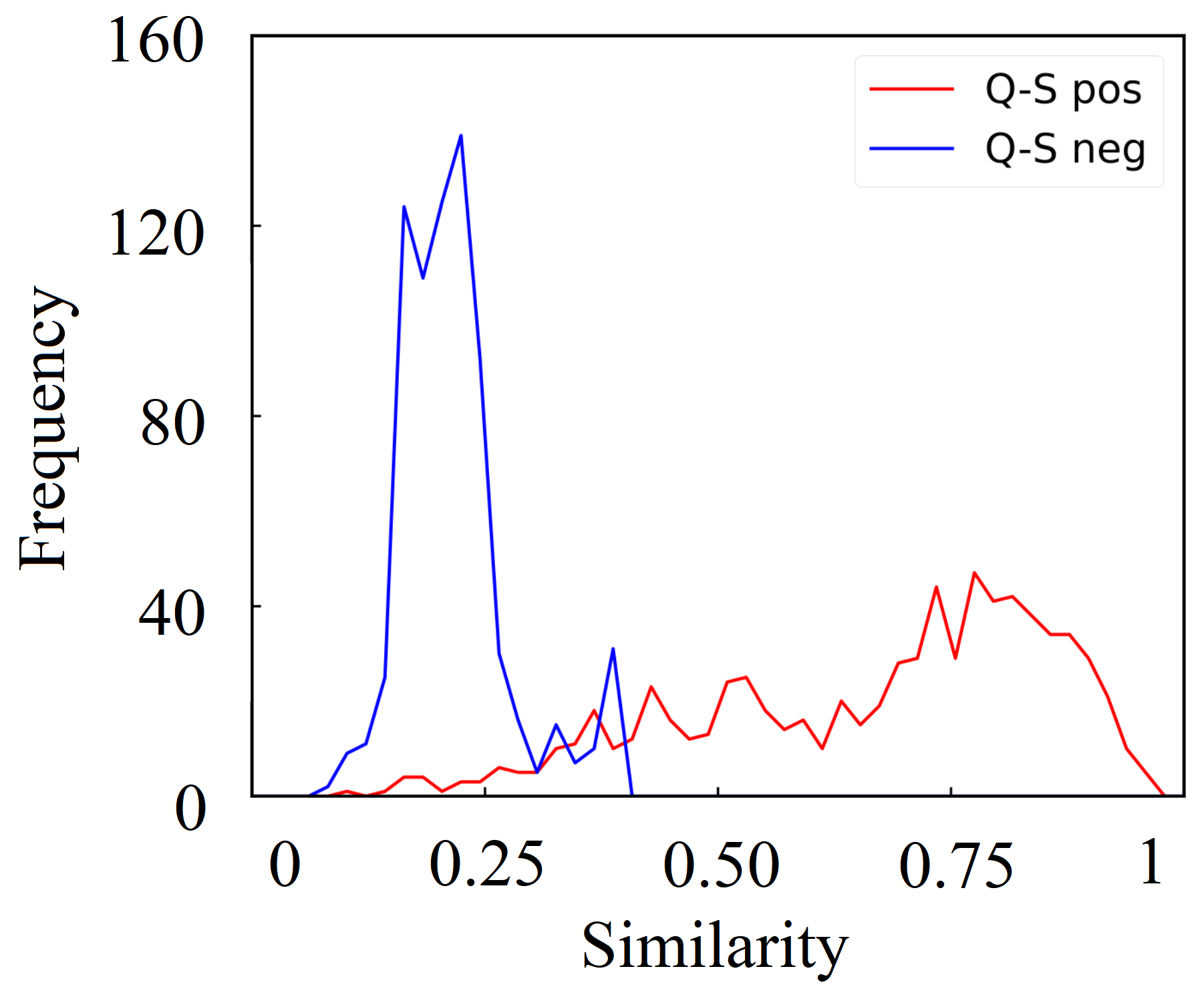

Visualization of Similarity Distributions

To illustrate the effectiveness of the proposed approach, Figure 3 shows the distribution of similarities between query-support pairs. Compared with the baseline, QSFormer separates positive pairs from negative pairs much more clearly, indicating a more discriminative learned metric.

Figure 3: Comparison of similarity distribution between Baseline and our QSFormer.
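One way to produce a plot like Figure 3 from a matrix of query-class scores is to split the scores into positive (matching class) and negative pairs and compare their histograms. The `histogram_overlap` statistic below is a hypothetical summary added for illustration, not a metric reported in the paper: 0 means the two distributions are fully separated, 1 means they are identical.

```python
import numpy as np

def split_pair_similarities(scores, labels):
    """Separate query-class similarities into positive and negative pairs."""
    mask = np.zeros_like(scores, dtype=bool)
    mask[np.arange(len(labels)), labels] = True  # the true class of each query
    return scores[mask], scores[~mask]

def histogram_overlap(pos, neg, bins=20):
    """Shared mass of the two normalized histograms over common bin edges."""
    lo = min(pos.min(), neg.min())
    hi = max(pos.max(), neg.max())
    edges = np.linspace(lo, hi, bins + 1)
    p, _ = np.histogram(pos, bins=edges)
    n, _ = np.histogram(neg, bins=edges)
    return np.minimum(p / p.sum(), n / n.sum()).sum()

scores = np.array([[0.9, 0.1, 0.2], [0.2, 0.8, 0.1]])
labels = np.array([0, 1])
pos, neg = split_pair_similarities(scores, labels)
print(pos, neg)                      # [0.9 0.8] [0.1 0.2 0.2 0.1]
print(histogram_overlap(pos, neg))   # 0.0: no bin holds both positives and negatives
```

Using shared bin edges for both histograms matters: with separate edges, the per-bin minimum would compare unrelated similarity ranges.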

Conclusion

The proposed QSFormer model introduces a comprehensive framework that unifies representation learning and metric learning for few-shot classification. Its ability to model both global and local relationships between query and support samples is a significant advance, as its results on standard benchmarks show. Future work may refine the model for a wider range of few-shot settings and extend it to other application domains.