- The paper introduces a Bayesian Theory of Mind model that enables AI agents to simulate human belief systems for improved collective decision-making.

- It demonstrates that Bayesian agents outperform human decision-makers, especially in complex Hidden Profile tasks, by mitigating cognitive biases.

- Empirical results from 145 participants show that the model enhances team performance by effectively integrating private and shared information.

Collective Intelligence in Human-AI Teams: A Bayesian Theory of Mind Approach

Introduction

This paper develops a computational framework that models Theory of Mind (ToM) abilities within human-AI teams using a Bayesian approach. ToM is the ability to understand and infer the mental states of others, which is pivotal for effective team communication and decision-making. Using Bayesian inference, the framework lets AI agents form mental models of their human teammates' beliefs and knowledge, enabling more effective collective decision-making. The study draws on empirical data from a structured online experiment to evaluate this approach.

Framework and Methodology

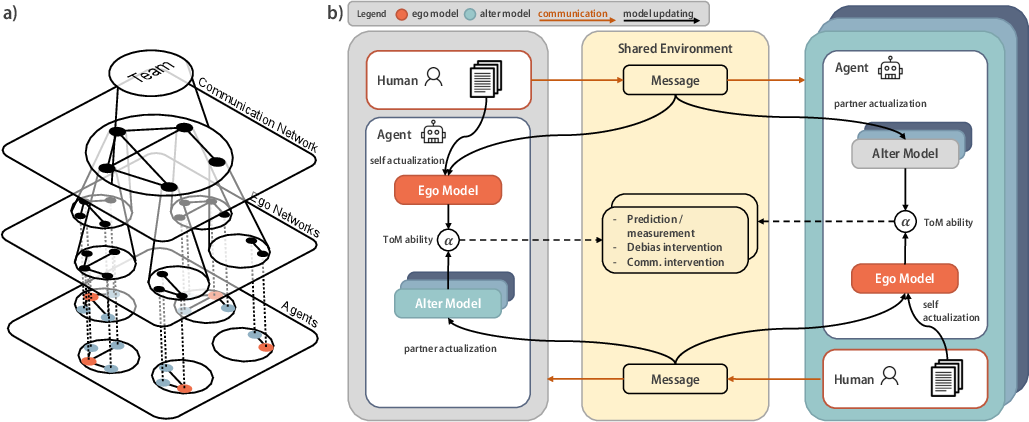

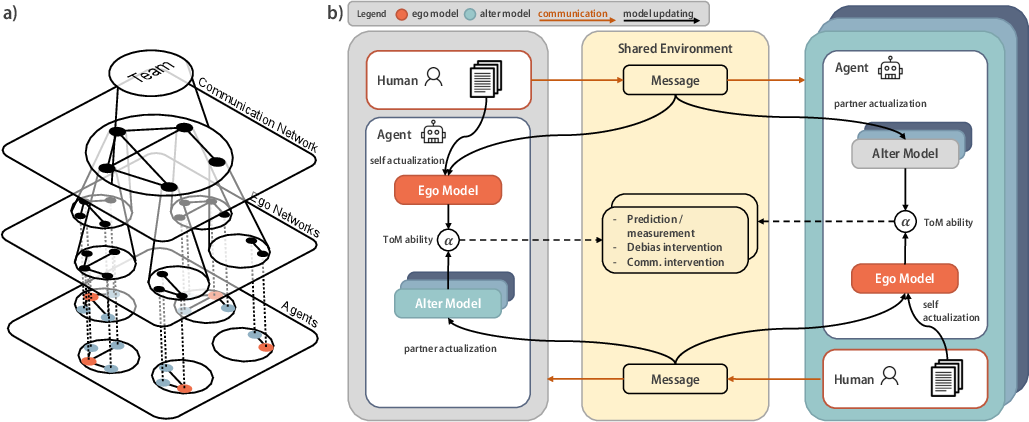

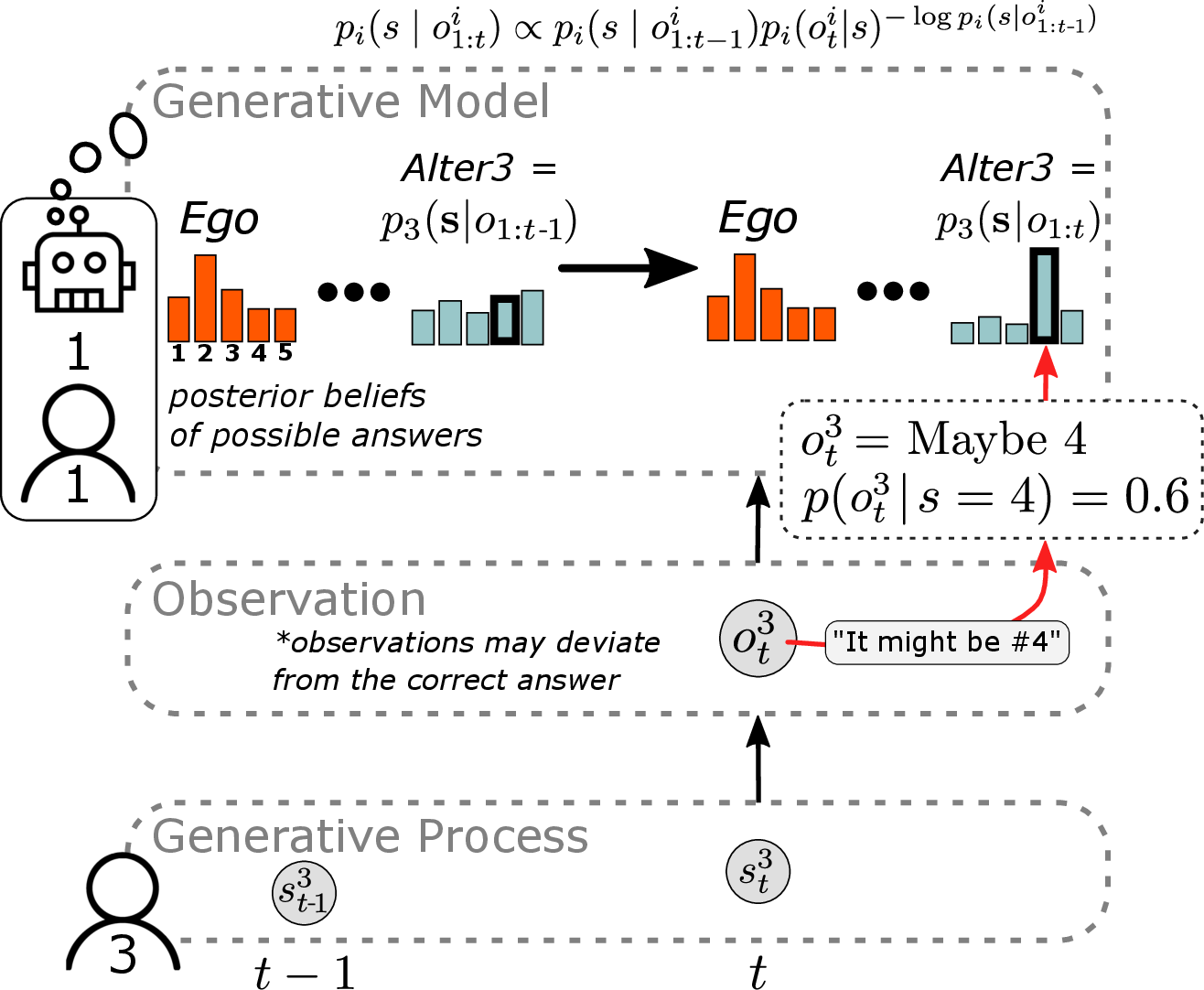

Human-AI teaming is modeled using a network of Bayesian agents, where each agent shadows a human team member. The framework comprises nested layers of ToM agents that model ego networks, enabling the agents to infer both their own beliefs and those of their teammates. Each human participant is paired with an AI agent that observes and processes communication to update its beliefs based on received information.

Figure 1: Framework of human-AI teaming with Theory of Mind (ToM).

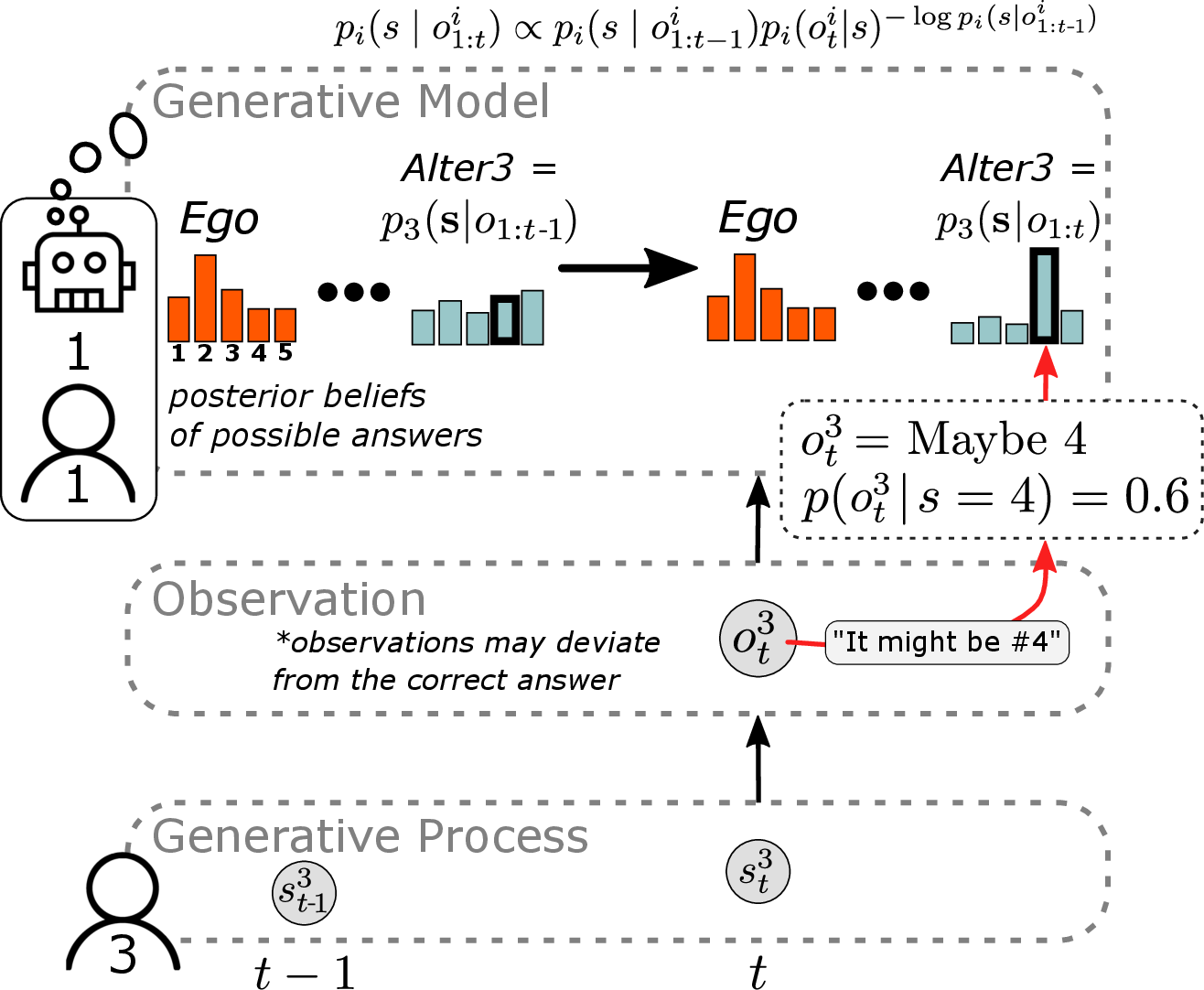

The Bayesian model is structured with both Ego Models and Alter Models for each neighboring team member, providing a comprehensive understanding of team dynamics and communication flow (Figure 2). Ego Models are updated with self-generated knowledge, while Alter Models are updated with information gathered from teammates.

Figure 2: Bayesian model of Theory of Mind.
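The split between Ego and Alter Models can be illustrated with a minimal sketch. It assumes a discrete set of candidate answers and represents each clue or message as a likelihood over those answers. The class `ToMAgent`, its method names, and the product-based `team_estimate` are hypothetical illustrations, not the paper's implementation.

```python
from dataclasses import dataclass, field


def normalize(dist):
    """Rescale non-negative weights so they sum to 1."""
    total = sum(dist.values())
    return {k: v / total for k, v in dist.items()}


def bayes_update(prior, likelihood):
    """Discrete Bayes rule: posterior is proportional to prior times likelihood."""
    return normalize({a: prior[a] * likelihood[a] for a in prior})


@dataclass
class ToMAgent:
    """Hypothetical agent shadowing one human: one Ego Model, one Alter Model per neighbor."""
    answers: list
    neighbors: list
    ego: dict = field(init=False)
    alters: dict = field(init=False)

    def __post_init__(self):
        uniform = {a: 1.0 / len(self.answers) for a in self.answers}
        self.ego = dict(uniform)
        self.alters = {n: dict(uniform) for n in self.neighbors}

    def observe_own_clue(self, likelihood):
        # Ego Model: updated with self-generated knowledge.
        self.ego = bayes_update(self.ego, likelihood)

    def receive_message(self, sender, likelihood):
        # Alter Model: updated with information gathered from a teammate.
        self.alters[sender] = bayes_update(self.alters[sender], likelihood)

    def team_estimate(self):
        # Assumption: combine ego and alter beliefs as a normalized product.
        combined = dict(self.ego)
        for belief in self.alters.values():
            combined = {a: combined[a] * belief[a] for a in combined}
        return normalize(combined)


agent = ToMAgent(answers=["A", "B", "C"], neighbors=["bob"])
agent.observe_own_clue({"A": 0.6, "B": 0.3, "C": 0.1})
agent.receive_message("bob", {"A": 0.2, "B": 0.7, "C": 0.1})
estimate = agent.team_estimate()
best_answer = max(estimate, key=estimate.get)
```

In this toy run the agent's own clue favors answer A, but the teammate's message shifts the combined estimate toward B, which is the kind of integration of private and shared evidence the framework is meant to support.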

Experimental Setup and Results

The experiment ran on an online platform where 145 individuals, organized into 29 teams of five, completed a Hidden Profile task. This task evaluates how well a team integrates private and shared information to reach a collective decision. Each participant receives both unique and common clues, and team members must communicate via a chat system to solve the problem collectively. The results show that while humans struggle to integrate information under high communication load, the Bayesian agents mitigate the associated cognitive biases and improve team performance.
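The structure of a Hidden Profile task can be sketched in a few lines. The example below assumes each of five members gets every shared clue plus exactly one unique clue. The clue labels, counts, and the function `deal_clues` are hypothetical and are not taken from the experiment.

```python
def deal_clues(members, shared_clues, unique_clues):
    """Give every member all shared clues plus exactly one unique clue."""
    if len(members) != len(unique_clues):
        raise ValueError("need exactly one unique clue per member")
    return {m: set(shared_clues) | {u} for m, u in zip(members, unique_clues)}


members = ["p1", "p2", "p3", "p4", "p5"]
shared = {"s1", "s2"}                      # clues everyone sees
unique = ["u1", "u2", "u3", "u4", "u5"]    # one private clue per member

hands = deal_clues(members, shared, unique)

# The full profile only emerges when the team pools its private clues.
pooled = set().union(*hands.values())
missing_per_member = {m: pooled - hand for m, hand in hands.items()}
```

No single member holds the full set of clues in this setup, so the correct answer stays hidden unless private clues are actually exchanged in the chat.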

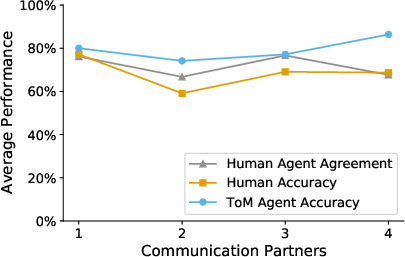

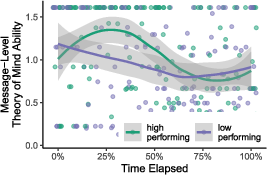

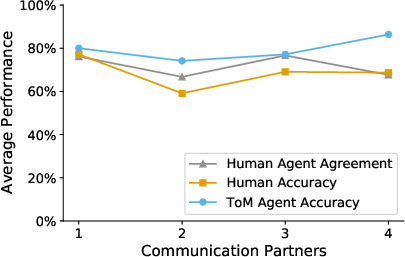

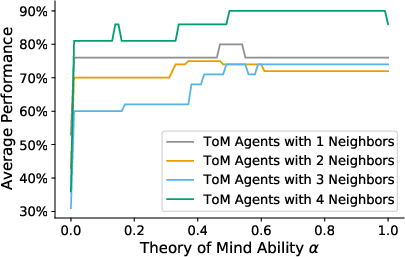

The results reveal that human performance decreases with the difficulty of the task, while Bayesian agents outperform human participants, especially in more complex scenarios (Figure 3). The analysis identifies cognitive biases in human communication, such as a tendency to underweight ambiguous but potentially useful information. Bayesian agents increase overall team performance by efficiently aggregating information from individual participants and reducing divergence between personal and team knowledge.

Figure 3: Human performance varies with task difficulty and number of communication partners.
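One way to quantify the divergence between personal and team knowledge mentioned above is the Kullback-Leibler divergence between two belief distributions. This is an assumption made for illustration: the summary does not say which divergence measure the study uses, and the belief values below are invented.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats for two discrete distributions over the same answers."""
    return sum(
        p[a] * math.log(p[a] / max(q[a], eps))
        for a in p
        if p[a] > 0  # terms with zero probability contribute nothing
    )


# Hypothetical beliefs over three candidate answers.
personal = {"A": 0.7, "B": 0.2, "C": 0.1}
team = {"A": 0.2, "B": 0.7, "C": 0.1}

gap = kl_divergence(personal, team)
no_gap = kl_divergence(team, team)
```

A value of zero means the individual's belief matches the team's. Larger values flag members whose private view has drifted from the shared one, which is where a targeted intervention would plausibly help most.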

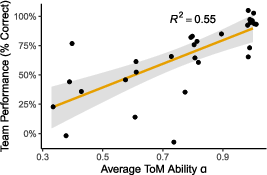

Theory of Mind Ability

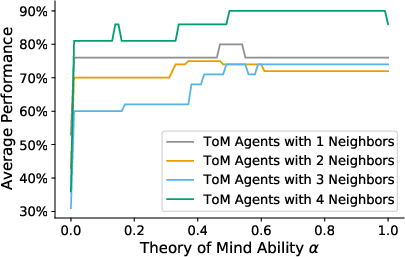

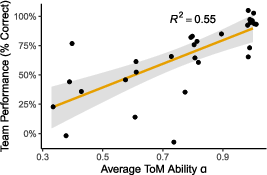

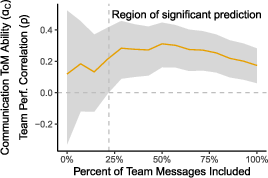

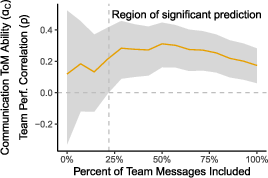

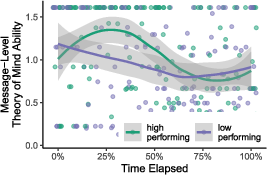

The study introduces two real-time measures of theory of mind ability, both of which correlate with improved individual and team performance. The Bayesian models also support interventions that increase human-AI team efficiency by targeting communication strategies to better align team beliefs. These measures explain 8% of the variation in team performance, significantly surpassing existing static measures.

Figure 4: Theory of Mind ability predicts team performance.

Implications and Future Directions

The findings demonstrate that Bayesian models can significantly enhance Human-AI collaboration by compensating for human cognitive biases and improving information integration. This framework provides a valuable tool for real-time assessment of team dynamics and offers promising pathways for future interventions aimed at boosting collective intelligence in diverse team settings. Future research could expand on these models by incorporating more complex team settings, diverse communication channels, and adaptive learning strategies that dynamically adjust to changing team configurations.

Conclusion

This paper establishes a comprehensive Bayesian framework for modeling and enhancing collective intelligence in human-AI teams. By employing Theory of Mind principles, AI agents can more effectively facilitate communication and improve overall team performance, providing tangible benefits in contexts where team decision-making is crucial. The demonstrated ability to measure theory of mind and team performance in real time highlights the potential of this approach for broad applications in organizational settings and collaborative technologies.